New research from China has proposed a method for improving the quality of images generated by Latent Diffusion Models (LDMs) such as Stable Diffusion.

The method focuses on optimizing the salient regions of an image – the areas most likely to attract human attention.

Source: https://arxiv.org/pdf/2410.10257

Traditional methods optimize the entire image uniformly. The new approach instead uses a saliency detector to identify and prioritize the more 'important' regions, as humans do.

In quantitative and qualitative tests, the researchers' method outperformed prior diffusion-based models in terms of both image quality and fidelity to text prompts.

The new approach also scored best in a human perception trial with 100 participants.

Natural Selection

Saliency, the ability to prioritize information in the real world and in images, is an essential part of human vision.

A simple example of this is the greater attention to detail that classical art gives to the important areas of a painting, such as the face in a portrait or the masts of a ship in a seascape. In such works, the artist's attention converges on the central subject matter, so that broad details such as a portrait background or the distant waves of a storm are sketchier and more broadly representative than detailed.

Informed by human studies, machine learning methods have emerged over the past decade that can replicate, or at least approximate, this human locus of interest in any image.

Source: https://arxiv.org/pdf/1312.6034

In the research literature, the most popular saliency map detector over the past five years has been the 2016 Gradient-weighted Class Activation Mapping (Grad-CAM) initiative, which later evolved into the improved Grad-CAM++ system, among other variants and refinements.

Grad-CAM uses the gradient activation of a semantic token (such as 'dog' or 'cat') to produce a visual map of where the concept or annotation is likely to be represented in the image.
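The core Grad-CAM computation is short enough to show directly. The sketch below follows the standard formulation: each feature-map channel is weighted by the spatial average of the class score's gradient, the weighted maps are summed, and only positive evidence is kept. It assumes the activations and gradients of one convolutional layer have already been extracted (in practice via a framework hook), and it is illustrative only, not code from any of the papers discussed here.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one convolutional layer.

    activations: feature maps A with shape (K, H, W)
    gradients:   d(class score)/dA, same shape (K, H, W)
    Returns an (H, W) map scaled to [0, 1].
    """
    if activations.shape != gradients.shape:
        raise ValueError("activations and gradients must have the same shape")
    # Channel importance: global-average-pool the gradients over the spatial axes.
    weights = gradients.mean(axis=(1, 2))  # shape (K,)
    # Weighted sum of the feature maps; ReLU keeps only positive evidence.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The ReLU discards regions that argue *against* the class, which is why the resulting map highlights only where the concept appears to be.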

Source: https://arxiv.org/pdf/1610.02391

Human surveys on the results of these methods have revealed a correspondence between these mathematical individuations of key points of interest in an image and human attention when scanning the image.

SGOOL

The new paper considers what saliency can bring to text-to-image (and, potentially, text-to-video) systems such as Stable Diffusion and Flux.

When interpreting a user's text prompt, Latent Diffusion Models search their trained latent space for learned visual concepts that correspond to the words or phrases used. They then pass these data points through a denoising process, in which random noise is gradually evolved into a creative interpretation of the user's text prompt.

At this point, however, the model gives equal attention to every part of the image. Since the popularization of diffusion models in 2022, with the release of OpenAI's publicly available DALL-E image generators and the subsequent open-sourcing of Stability.ai's Stable Diffusion framework, users have found that the 'important' sections of an image are often under-served.

Consider that in a typical depiction of a person, the face (which matters most to the viewer) is likely to occupy no more than 10-35% of the entire image. This democratic approach to attention dispersal therefore works against both the nature of human perception and the history of art and photography.

When the buttons on a person's jeans receive the same computing heft as their eyes, the allocation of resources could be said to be non-optimal.

The new method proposed by the authors, titled Saliency Guided Optimization Of Diffusion Latents (SGOOL), therefore uses a saliency mapper to increase attention on neglected areas of an image, devoting fewer resources to sections likely to remain on the periphery of the viewer's attention.

Method

The SGOOL pipeline comprises image generation, saliency mapping, and optimization, with the overall image and the saliency-refined image processed jointly.

The diffusion model's latent embeddings are optimized directly, removing the need to train a specific model. Stanford University's Denoising Diffusion Implicit Model (DDIM) sampling method, familiar to users of Stable Diffusion, is adapted to incorporate the secondary information provided by saliency maps.
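For reference, here is a minimal sketch of the standard deterministic DDIM update (the η = 0 case from the original DDIM paper), the step that SGOOL builds on. It shows only the vanilla update, not the authors' saliency-aware adaptation. The noise prediction `eps` would normally come from the denoising U-Net; here it is simply passed in, and the function name is illustrative.

```python
import numpy as np


def ddim_step(x_t, eps, alpha_bar_t, alpha_bar_prev):
    """One deterministic DDIM update (eta = 0) from timestep t to the previous one.

    x_t:            current noisy latent
    eps:            the model's noise prediction for x_t
    alpha_bar_t:    cumulative noise-schedule product at t
    alpha_bar_prev: cumulative product at the previous (less noisy) timestep
    """
    # Estimate the clean latent implied by the noise prediction.
    x0_pred = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps) / np.sqrt(alpha_bar_t)
    # Move that estimate to the previous noise level, reusing the same eps
    # (no fresh noise is injected, so the step is deterministic).
    return np.sqrt(alpha_bar_prev) * x0_pred + np.sqrt(1.0 - alpha_bar_prev) * eps
```

Because no random noise is added along the way, the whole sampling chain maps a starting latent to a final image deterministically. That property lets optimization methods treat the latent itself as the quantity to tune.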

The paper states:

Since this method requires multiple iterations of the denoising process, the authors adopted the Direct Optimization Of Diffusion Latents (DOODL) framework, which provides an invertible diffusion process, though DOODL still applies attention to the entire image.
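The principle of "optimizing latents, not weights" can be illustrated with a deliberately tiny toy. In the sketch below, a frozen linear map `W` stands in for the whole generator-plus-scorer chain, and the loss is a squared distance to a target embedding. Both are assumptions made purely for illustration. DOODL backpropagates through an actual invertible diffusion sampler and a CLIP-style loss, which this toy does not attempt. Only the latent `z` is updated. The default of 50 steps echoes the number of optimizations the authors report.

```python
import numpy as np


def optimize_latent(z0, W, target, steps=50, lr=0.1):
    """Toy gradient descent on a latent z while the 'model' W stays frozen.

    Stand-in model: z -> W @ z, with loss ||W @ z - target||^2.
    lr must be below 1 / (largest eigenvalue of W.T @ W), or the updates diverge.
    Returns the optimized latent and the per-step loss history.
    """
    z = z0.astype(float).copy()
    history = []
    for _ in range(steps):
        residual = W @ z - target
        history.append(float(residual @ residual))
        # Analytic gradient with respect to z only; W is never modified,
        # mirroring the idea that no model is trained.
        z -= lr * 2.0 * W.T @ residual
    return z, history
```

In a real system each "step" requires a full forward and backward pass through the sampler, which is why memory-efficient invertible sampling matters for methods of this kind.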

To define areas of human interest, the researchers used the University of Dundee's 2022 TranSalNet framework.

Source: https://discovery.dundee.ac.uk/ws/portalfiles/portal/89737376/1_s2.0_S0925231222004714_main.pdf

The salient regions identified by TranSalNet were then cropped to produce definitive saliency sections likely to be of most interest to real viewers.
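The article does not say exactly how the crop is derived from the saliency map, so the following is only one plausible approach. It thresholds the map at a fraction of its peak value and crops the bounding box of the surviving pixels. The 0.5 threshold and the bounding-box rule are assumptions for illustration, not details taken from the paper.

```python
import numpy as np


def crop_salient_region(image, saliency, threshold=0.5):
    """Crop the bounding box of pixels whose saliency is at least
    `threshold` times the map's maximum (threshold is a hypothetical choice).

    image:    array of shape (H, W) or (H, W, C)
    saliency: non-negative array of shape (H, W), e.g. a saliency predictor's output
    """
    mask = saliency >= threshold * saliency.max()
    rows = np.flatnonzero(mask.any(axis=1))  # rows containing salient pixels
    cols = np.flatnonzero(mask.any(axis=0))  # columns containing salient pixels
    top, bottom = rows[0], rows[-1] + 1
    left, right = cols[0], cols[-1] + 1
    return image[top:bottom, left:right]
```

A bounding box keeps the crop rectangular, so it can be resized and fed to an image encoder like any other picture. A flat (all-zero) map simply returns the whole image.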

The difference between the user's text and the image must be taken into account when defining a loss function that can determine whether the process is working. For this, a version of OpenAI's Contrastive Language–Image Pre-training (CLIP), by now a mainstay of the image synthesis research sector, was used, together with an estimate of the semantic distance between the text prompt and the global (non-saliency) image output.
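As a rough illustration of how such a loss can balance the global image against its salient crop, the sketch below blends two CLIP-style cosine distances. The embeddings are plain vectors standing in for CLIP outputs, and the equal 0.5 weighting is a hypothetical choice. The article does not give the paper's exact formula or weights.

```python
import numpy as np


def cosine_distance(a, b):
    """1 - cosine similarity between two embedding vectors."""
    return 1.0 - float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def saliency_guided_loss(text_emb, global_img_emb, salient_img_emb, weight=0.5):
    """Blend text-to-image distance for the whole image and for the salient crop.

    weight = 0 scores only the global image (plain CLIP guidance);
    weight = 1 scores only the salient crop. 0.5 is a hypothetical default.
    """
    global_term = cosine_distance(text_emb, global_img_emb)
    salient_term = cosine_distance(text_emb, salient_img_emb)
    return (1.0 - weight) * global_term + weight * salient_term
```

Keeping the global term in the blend prevents the optimization from sharpening the salient region at the expense of overall coherence with the prompt.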

The authors assert:

Data and Tests

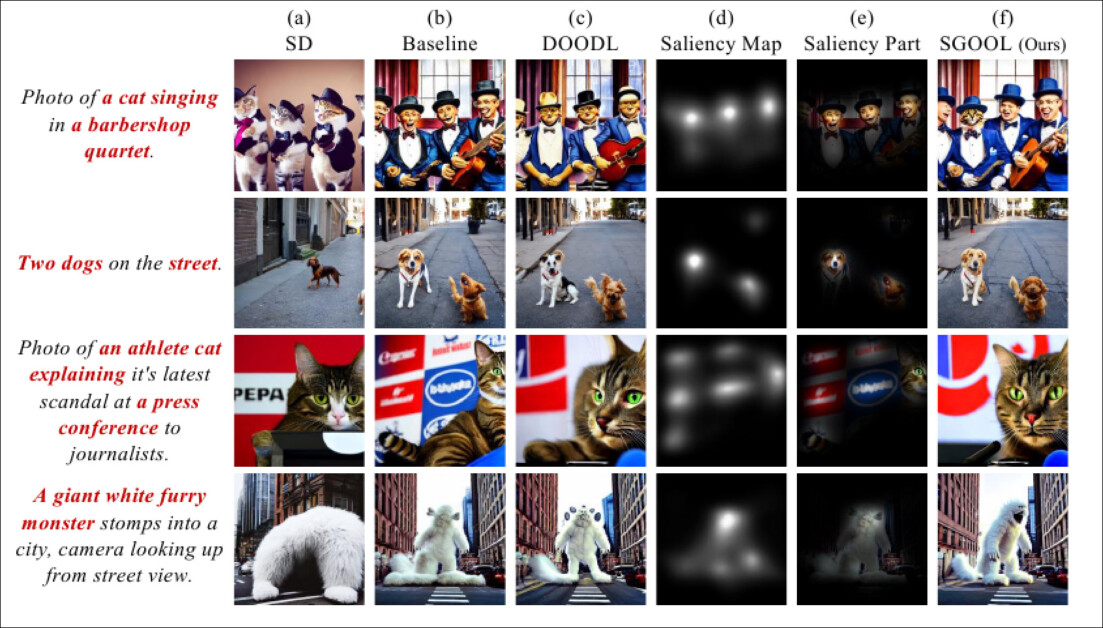

To test SGOOL, the authors used a 'vanilla' distribution of Stable Diffusion V1.4 (denoted as 'SD' in the test results) and Stable Diffusion with CLIP guidance (denoted as 'baseline' in the results).

The system was evaluated against three public datasets: CommonSyntacticProcesses (CSP), DrawBench, and DailyDallE*.

The latter contains 99 elaborate prompts from an artist featured in one of OpenAI's blog posts, while DrawBench offers 200 prompts across 11 categories. CSP consists of 52 prompts based on eight diverse grammatical cases.

For SD, baseline and SGOOL, the CLIP model with a ViT-B/32 backbone was used to generate the image and text embeddings. The same prompt and random seed were used throughout. The output size was 256×256, and the default weights and settings of TranSalNet were used.

Besides the CLIP score metric, an estimated Human Preference Score (HPS) was used, along with a real-world study with 100 participants.

Regarding the quantitative results shown in the table above, the paper states:

The authors also computed box plots of the HPS and CLIP scores relative to the previous approaches:

They comment:

The researchers note that while the baseline model is able to improve the quality of the image output, it does not consider the salient areas of the image. They contend that SGOOL, by striking a compromise between global and salient image evaluation, obtains better images.

In qualitative (automated) comparisons, the number of optimizations was set to 50 for both SGOOL and DOODL.

Here the authors observe:

They further note that SGOOL, by contrast, generates images that are more consistent with the original prompt.

In the human perception test, 100 volunteers evaluated test images for quality and semantic consistency (i.e., how closely they adhered to their source text prompts). The participants had unlimited time to make their choices.

As the paper points out, the authors' method is notably preferred over the prior approaches.

Conclusion

Not long after the shortcomings addressed in this paper became evident in local installations of Stable Diffusion, various bespoke methods (such as After Detailer) emerged to force the system to apply extra attention to areas of greater human interest.

However, this kind of approach requires the diffusion system to first complete its normal process of applying equal attention to every part of the image, with the extra work done as an additional stage.

The evidence from SGOOL suggests that applying basic human psychology to the prioritization of image sections could greatly improve the initial inference, without the need for post-processing steps.

*