Sentence embedding is a technique that maps sentences to vectors of real numbers. Ideally, these vectors would capture the semantics of a sentence and be highly generic. Such representations could then be used for many downstream applications such as clustering, text mining, or question answering.

We developed state-of-the-art sentence embedding models as part of the project “Train the Best Sentence Embedding Model Ever with 1B Training Pairs”. This project took place during the Community week using JAX/Flax for NLP & CV, organized by Hugging Face. We benefited from efficient hardware infrastructure to run the project: 7 TPUs v3-8, as well as guidance from Google’s Flax, JAX, and Cloud team members about efficient deep learning frameworks!

Training methodology

Model

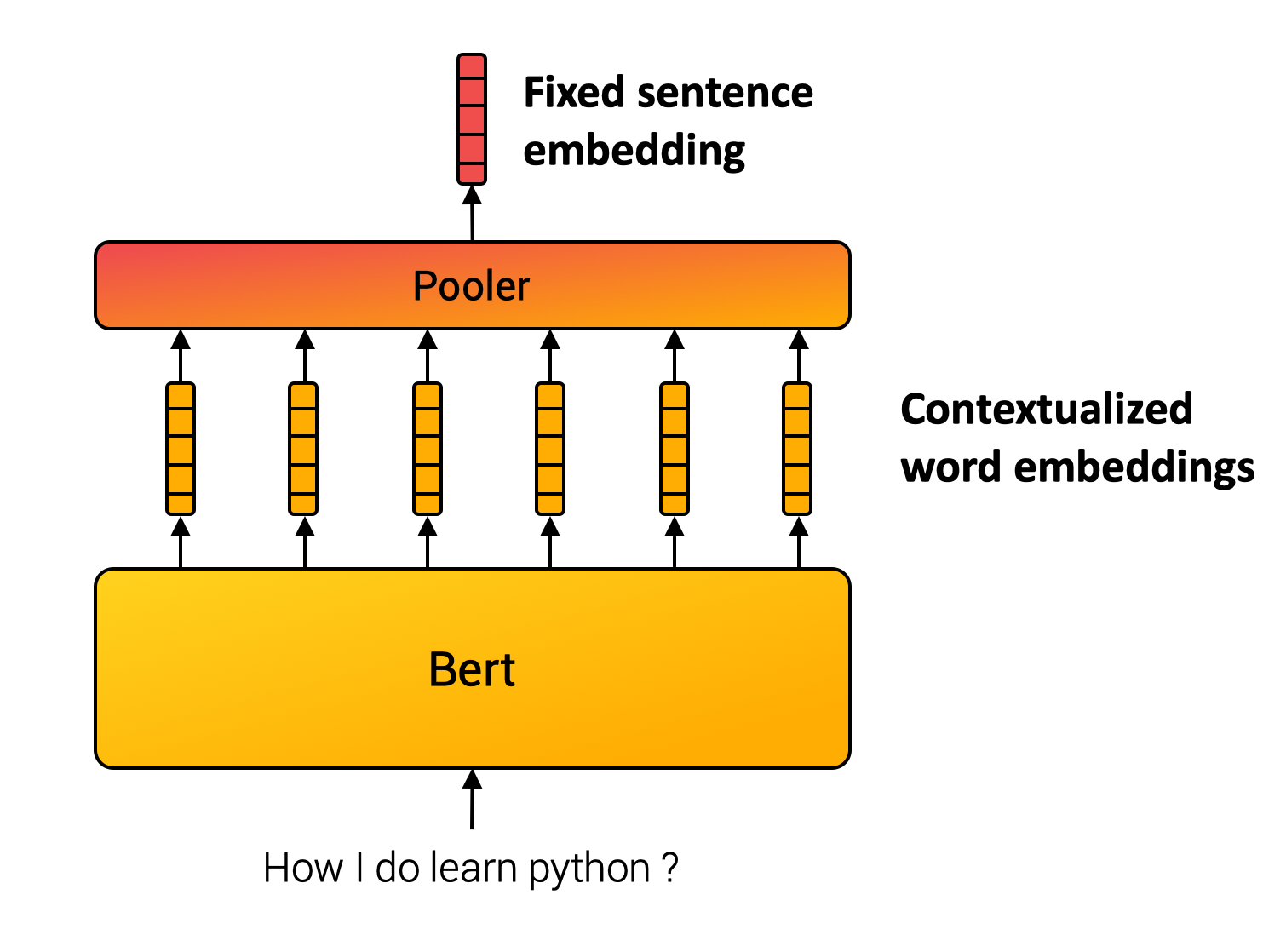

Unlike words, sentences cannot be enumerated as a finite set. Sentence embedding methods therefore compose inner words to compute the final representation. For example, the SentenceBert model (Reimers and Gurevych, 2019) uses a Transformer, the cornerstone of many NLP applications, followed by a pooling operation over the contextualized word vectors (see the figure above).
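The pooling step can be sketched in a few lines. This is a minimal illustration under stated assumptions: it uses mean pooling (one common choice; the text does not say which pooling each model uses), a toy NumPy array stands in for the Transformer's contextualized word vectors, and `mean_pooling` is a hypothetical helper name.

```python
import numpy as np


def mean_pooling(token_embeddings, attention_mask):
    """Average contextualized token vectors into one sentence vector (hypothetical helper).

    token_embeddings: (batch, seq_len, dim) output of a Transformer encoder
    attention_mask:   (batch, seq_len), 1 for real tokens and 0 for padding
    """
    mask = attention_mask[..., None].astype(token_embeddings.dtype)  # (batch, seq_len, 1)
    summed = (token_embeddings * mask).sum(axis=1)                   # sum over real tokens only
    counts = np.clip(mask.sum(axis=1), 1e-9, None)                   # avoid division by zero
    return summed / counts


# Toy "Transformer output": 2 sentences, 3 token positions, 4 dimensions.
tokens = np.arange(24, dtype=np.float32).reshape(2, 3, 4)
mask = np.array([[1, 1, 1], [1, 1, 0]])  # the second sentence ends with one padding token
sentence_vectors = mean_pooling(tokens, mask)
print(sentence_vectors.shape)  # (2, 4)
```

Masking matters because padded positions would otherwise pull every short sentence's vector toward the padding representation.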

Multiple Negatives Ranking Loss

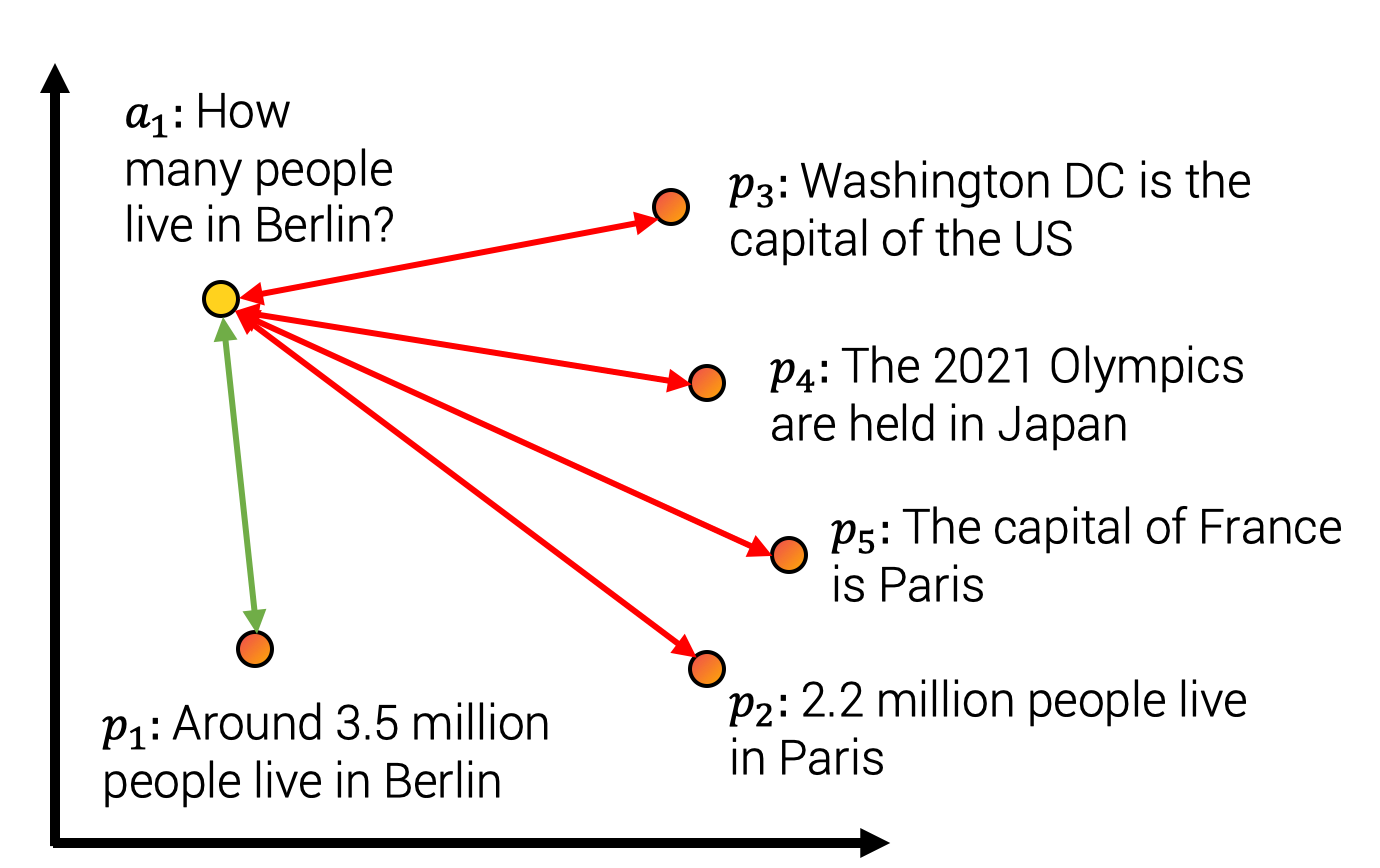

The parameters of the composition module are usually learned using a self-supervised objective. For the project, we used a contrastive training method illustrated in the figure above. We build a dataset of sentence pairs $(a_i, p_i)$ such that the two sentences of a pair have a close meaning. For example, we consider pairs such as (query, answer-passage), (question, duplicate_question), and (paper title, cited paper title). Our model is then trained to map matched pairs $(a_i, p_i)$ to close vectors while assigning unmatched pairs $(a_i, p_j)$, $i \neq j$, to distant vectors in the embedding space. This training method is also called training with in-batch negatives, InfoNCE, or NTXentLoss.

Formally, given a batch of training samples, the model optimises the following loss function:

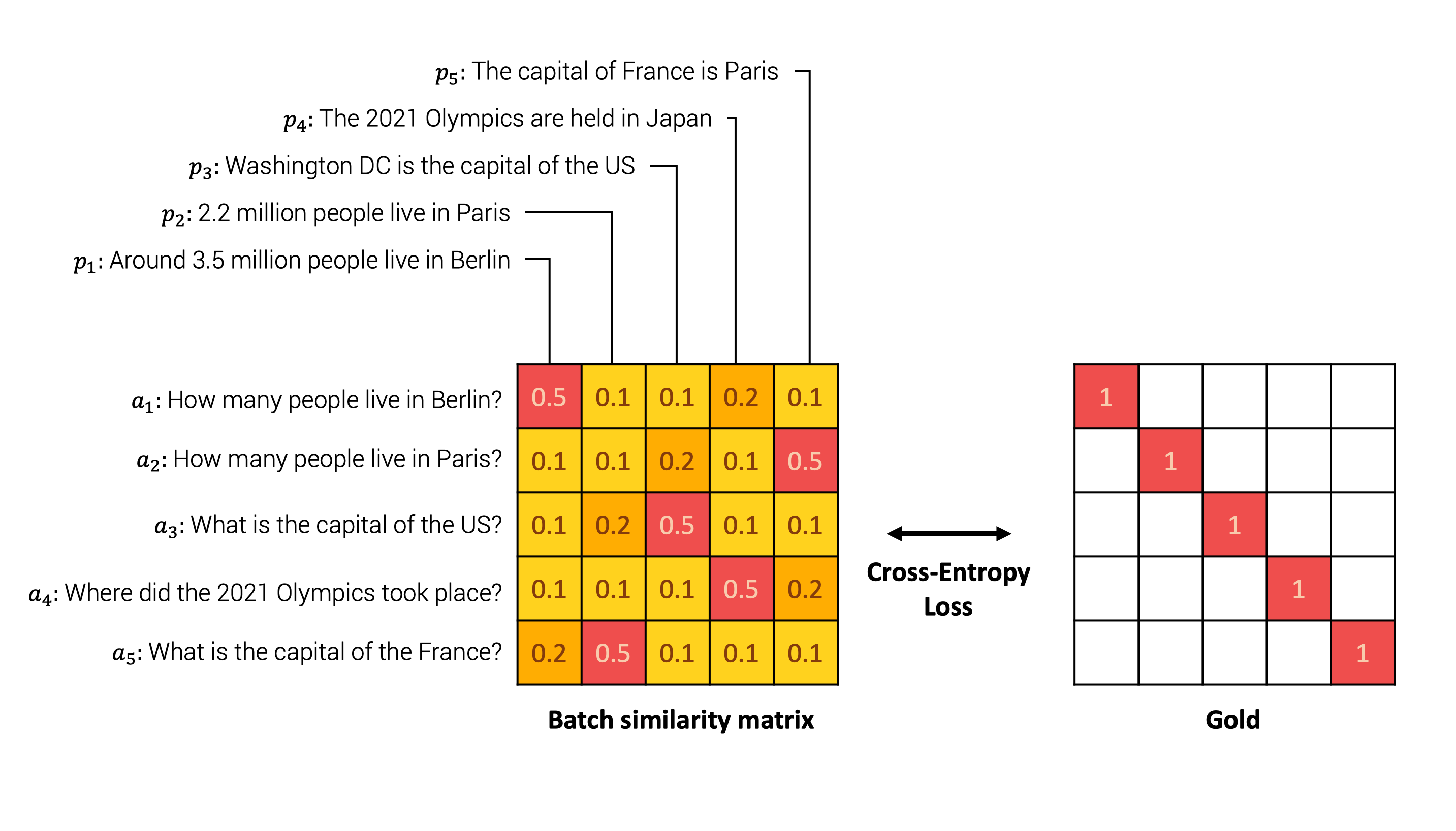
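Written out in LaTeX, and assuming the standard in-batch-negatives (InfoNCE) form with cross-entropy described in this section, the loss over a batch of $n$ pairs $(a_i, p_i)$ reads:

$$
\mathcal{L} = -\frac{1}{n}\sum_{i=1}^{n} \log \frac{\exp\left(\mathrm{sim}(a_i, p_i)\right)}{\sum_{j=1}^{n} \exp\left(\mathrm{sim}(a_i, p_j)\right)}
$$

Each term is a softmax over row $i$ of the similarity matrix, so minimising $\mathcal{L}$ pushes $\mathrm{sim}(a_i, p_i)$ up relative to every $\mathrm{sim}(a_i, p_j)$ with $j \neq i$.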

An illustrative example can be seen below. The model first embeds each sentence of every pair in the batch. Then, we compute a similarity matrix between every possible pair $(a_i, p_j)$. Finally, we compare this similarity matrix with the ground truth, which indicates the original pairs, using the cross-entropy loss.
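The steps above can be sketched in NumPy. This is a minimal illustration, not the project's JAX/Flax training code: `in_batch_negatives_loss` is a hypothetical name, cosine similarity is assumed for `sim`, and the default `scale=20.0` mirrors the typical scaling factor $C$ discussed later in this post.

```python
import numpy as np


def in_batch_negatives_loss(anchors, positives, scale=20.0):
    """Cross-entropy over in-batch negatives (hypothetical helper).

    anchors, positives: arrays of shape (n, dim); row i of each forms a true pair.
    """
    # Cosine similarity: L2-normalise each row, then take all pairwise dot products.
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    p = positives / np.linalg.norm(positives, axis=1, keepdims=True)
    scores = scale * (a @ p.T)  # (n, n): scores[i, j] = scale * sim(a_i, p_j)

    # Ground truth: a_i belongs with p_i, so the correct "class" of row i is column i.
    labels = np.arange(len(scores))

    # Row-wise cross entropy, using a numerically stable log-softmax.
    shifted = scores - scores.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[labels, labels].mean())


rng = np.random.default_rng(0)
anchors = rng.normal(size=(4, 8))
matched = anchors + 0.1 * rng.normal(size=(4, 8))  # each positive is close to its anchor
shuffled = matched[[1, 2, 3, 0]]                    # same vectors, pairs deliberately broken
print(in_batch_negatives_loss(anchors, matched))
print(in_batch_negatives_loss(anchors, shuffled))   # much larger: the diagonal no longer matches
```

Every other positive in the batch acts as a negative for free, which is why no explicit negative sampling is needed.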

Intuitively, the model should assign high similarity to the sentences « How many people live in Berlin? » and « Around 3.5 million people live in Berlin », and low similarity to other negative answers such as « The capital of France is Paris », as detailed in the figure below.

In the loss equation, sim denotes a similarity function between $(a, p)$. The similarity function can be either cosine similarity or the dot product. Both have pros and cons, summarized below (Thakur et al., 2021; Bachrach et al., 2014):

| Cosine similarity | Dot product |
|---|---|
| A vector has the highest similarity to itself, since $\cos(a, a) = 1$. | Other vectors can have higher dot products: $\mathrm{dot}(a, a) < \mathrm{dot}(a, b)$ is possible. |
| With normalised vectors, it equals the dot product; the maximum vector length is 1. | It can be slower with certain approximate nearest neighbour methods, because the maximum vector length is not known. |
| With normalised vectors, it is tied to Euclidean distance ($\lVert a - b \rVert^2 = 2 - 2\cos(a, b)$), so it works with k-means clustering. | It does not work with k-means clustering. |
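The rows of the table can be checked on two toy 2-D vectors. The vectors are arbitrary examples chosen so that the longer vector `b` out-scores `a` itself under the dot product; `cosine` is a hypothetical helper.

```python
import numpy as np


def cosine(u, v):
    """Cosine similarity between two 1-D vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


a = np.array([1.0, 0.0])
b = np.array([3.0, 1.0])  # longer vector pointing roughly the same way as a

# Row 1: cosine never beats self-similarity, the dot product can.
print(cosine(a, a), cosine(a, b))  # self-similarity is 1.0, cos(a, b) is smaller
print(a @ a, a @ b)                # 1.0 3.0: b scores higher than a itself

# Row 2: after L2 normalisation, dot product and cosine coincide.
a_n, b_n = a / np.linalg.norm(a), b / np.linalg.norm(b)
print(np.isclose(a_n @ b_n, cosine(a, b)))

# Row 3: squared Euclidean distance between unit vectors is 2 - 2 * cosine.
print(np.isclose(np.sum((a_n - b_n) ** 2), 2 - 2 * cosine(a, b)))
```

The last identity is why ranking normalised embeddings by cosine similarity gives the same order as ranking by Euclidean distance, which is what k-means relies on.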

In practice, we used a scaled similarity because score differences tend to be too small: we apply a scaling factor $C$ such that $\mathrm{sim}_{\mathrm{scaled}}(a, b) = C \cdot \mathrm{sim}(a, b)$, with typically $C = 20$ (Henderson et al., 2020; Radford et al., 2021).
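To see why scaling helps, the sketch below compares the softmax over one row of the similarity matrix with and without $C = 20$. The four similarity values are made up for illustration; only the scaling factor comes from the text.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - x.max())  # shift for numerical stability
    return e / e.sum()


# Made-up cosine similarities of one anchor to its positive (first) and three negatives.
sims = np.array([0.80, 0.70, 0.65, 0.60])

for C in (1.0, 20.0):
    probs = softmax(C * sims)
    print(f"C={C:>4}: p(positive)={probs[0]:.3f}, loss={-np.log(probs[0]):.3f}")
```

Because cosine similarities live in $[-1, 1]$, the unscaled softmax stays close to uniform even when the positive is clearly ranked first; multiplying by $C$ sharpens the distribution so the loss actually rewards a correct ranking.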

Improving Quality with Better Batches

In our method, we build batches of sample pairs $(a_i, p_i)$. We consider all other samples from the batch, $(a_i, p_j)$ with $i \neq j$, as negative sample pairs. The batch composition is therefore a key training aspect. Following the literature in the domain, we mainly focused on three aspects of the batch.

1. Size matters

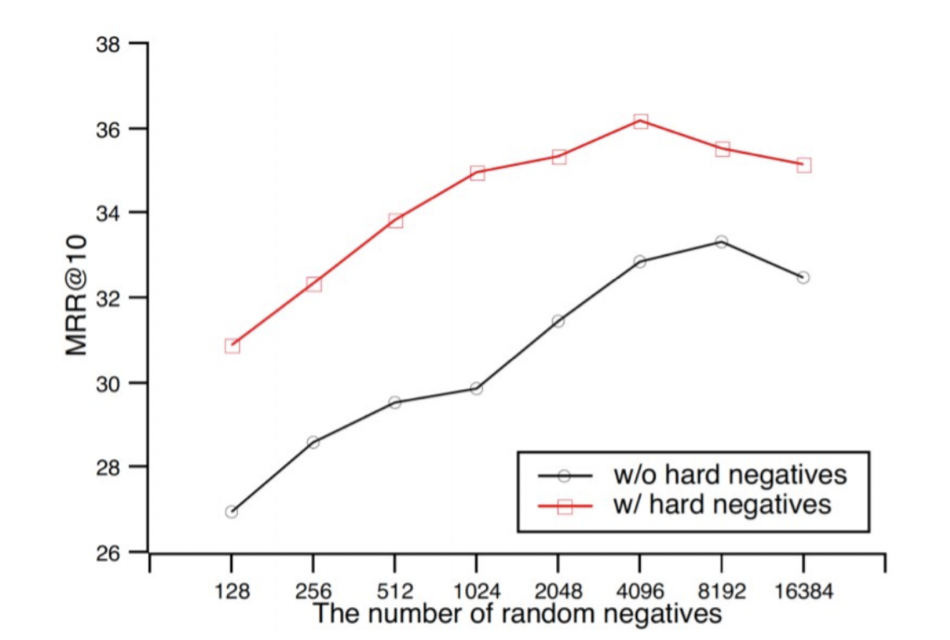

In contrastive learning, a larger batch size is synonymous with better performance. As shown in the figure above, extracted from Qu et al. (2021), a larger batch size improves the results.
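A back-of-the-envelope sketch of why size matters with in-batch negatives: each anchor is contrasted with every other positive in the batch, so the number of negatives per anchor grows linearly with the batch size $n$ and the number of negative pairs scored per batch grows quadratically. The batch sizes below are arbitrary examples, and `in_batch_negative_counts` is a hypothetical helper.

```python
def in_batch_negative_counts(batch_size):
    """Return (negatives per anchor, negative pairs scored per batch)."""
    per_anchor = batch_size - 1                 # every p_j with j != i
    return per_anchor, batch_size * per_anchor  # off-diagonal cells of the n x n matrix


for n in (32, 256, 1024):
    print(n, in_batch_negative_counts(n))
```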

2. Hard Negatives

In the same figure, we observe that including hard negatives also improves performance. Hard negatives are samples $p_j$ that are hard to distinguish from $p_i$. In our example, these could be the pairs « What is the capital of France? » and « What is the capital of the US? », which have close semantic content and require precisely understanding the full sentence to be answered correctly. On the contrary, the samples « What is the capital of France? » and « How many Star Wars movies are there? » are easier to distinguish, since they do not refer to the same topic.
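One common way to feed hard negatives into the loss, assumed here rather than taken from the project code, is to give each anchor $a_i$ an extra hard negative $n_i$ and append all of them as additional columns of the similarity matrix. The helper name and the toy data are hypothetical.

```python
import numpy as np


def loss_with_hard_negatives(anchors, positives, hard_negatives, scale=20.0):
    """In-batch negatives loss where each anchor a_i also comes with a hard negative n_i.

    All arrays are (n, dim). The candidates for a_i are [p_1..p_n, n_1..n_n], so every
    anchor is scored against 2n - 1 negatives instead of n - 1.
    """
    def normalise(x):
        return x / np.linalg.norm(x, axis=1, keepdims=True)

    a = normalise(anchors)
    candidates = normalise(np.concatenate([positives, hard_negatives], axis=0))  # (2n, dim)
    scores = scale * (a @ candidates.T)                                            # (n, 2n)
    shifted = scores - scores.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    idx = np.arange(len(a))
    return float(-log_probs[idx, idx].mean())  # the true positive p_i is still column i


rng = np.random.default_rng(0)
anchors = rng.normal(size=(4, 8))
positives = anchors + 0.1 * rng.normal(size=(4, 8))
easy = rng.normal(size=(4, 8))                    # unrelated sentences
hard = positives + 0.3 * rng.normal(size=(4, 8))  # near-paraphrases of the positives
print(loss_with_hard_negatives(anchors, positives, easy))
print(loss_with_hard_negatives(anchors, positives, hard))  # larger: n_i competes with p_i
```

Easy negatives barely move the loss, while near-duplicates of the positive keep it high until the model learns finer distinctions, which is the effect the « capital of France / capital of the US » example describes.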

3. Cross dataset batches

We concatenated multiple datasets to train our models. We built large batches and gathered the samples of a batch from the same dataset to limit the topic distribution and favor hard negatives. However, we also mix at least two datasets in each batch to learn a global structure between topics, not only a local structure within a topic.
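A batch sampler following this recipe could look like the sketch below. It is a hypothetical illustration, not the project's data loader: `sample_mixed_batch` and its `main_fraction` knob are invented for the example, and the 75/25 split is not a value from the project.

```python
import random


def sample_mixed_batch(datasets, batch_size, main_fraction=0.75, rng=None):
    """Draw one batch mostly from one dataset, topped up from a second dataset.

    datasets: dict mapping a dataset name to a list of (anchor, positive) pairs.
    Hypothetical helper; `main_fraction` is an illustrative knob, not a project setting.
    """
    rng = rng or random.Random()
    main, other = rng.sample(sorted(datasets), 2)  # two distinct datasets
    n_main = int(batch_size * main_fraction)
    batch = rng.sample(datasets[main], n_main)     # same-topic pairs: harder in-batch negatives
    batch += rng.sample(datasets[other], batch_size - n_main)  # cross-topic pairs: global structure
    rng.shuffle(batch)
    return batch


datasets = {
    "qa": [(f"question {i}", f"answer {i}") for i in range(100)],
    "duplicates": [(f"question {i}", f"duplicate {i}") for i in range(100)],
    "titles": [(f"title {i}", f"cited title {i}") for i in range(100)],
}
batch = sample_mixed_batch(datasets, batch_size=8, rng=random.Random(0))
print(batch)
```

Keeping most of the batch within one dataset makes the in-batch negatives topically close, while the minority from a second dataset still forces the model to separate topics from each other.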

Training infrastructure and data

As mentioned earlier, the quantity of data and the batch size directly impact model performance. As part of the project, we benefited from efficient hardware infrastructure. We trained our models on TPUs, which are compute units developed by Google that are highly efficient for matrix multiplications. TPUs have some hardware specificities that may require specific code implementations.

Moreover, we trained models on a large corpus by concatenating multiple datasets, up to 1 billion sentence pairs! All datasets used are detailed for each model in its model card.

Conclusion

You can find all models and datasets we created during the challenge in our Hugging Face repository. We trained 20 general-purpose Sentence Transformers models such as MiniLM (Wang et al., 2020), RoBERTa (Liu et al., 2019), DistilBERT (Sanh et al., 2020), and MPNet (Song et al., 2020). Our models achieve state-of-the-art results on multiple general-purpose sentence similarity evaluation tasks. We also shared 8 datasets specialized for question answering, sentence similarity, and gender evaluation.

General sentence embeddings can be used for many applications. We built a Spaces demo to showcase several of them:

- The sentence similarity module compares the main text with other texts of your choice. In the background, the demo extracts the embedding of each text and computes the cosine similarity between the source sentence and each of the others.

- Asymmetric QA compares how likely each answer candidate of your choice is to answer a given query.

- Search / Cluster returns nearby answers for a query. For example, if you input « python », it retrieves the closest sentences using dot-product similarity.

- Gender Bias Evaluation reports inherent gender bias in the training set via random sampling of sentences. Given an anchor text that mentions a target occupation without mentioning gender, and two propositions with gendered pronouns, we check whether the model assigns a higher similarity to one proposition, and thereby measure how often it favors a specific gender.
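The scoring behind these demo modules can be sketched with plain vector operations. This is a hypothetical illustration, not the Spaces demo's code: all function names are invented, and toy 2-D vectors stand in for real embeddings, which in practice would come from a model, for instance via `SentenceTransformer(...).encode(...)` in the `sentence-transformers` library.

```python
import numpy as np


def cosine_scores(query_vec, candidate_vecs):
    """Cosine similarity between one query vector and each row of candidate_vecs."""
    q = query_vec / np.linalg.norm(query_vec)
    c = candidate_vecs / np.linalg.norm(candidate_vecs, axis=1, keepdims=True)
    return c @ q


def top_k_by_dot(query_vec, corpus_vecs, k=2):
    """Indices of the k corpus rows with the highest dot product with the query."""
    scores = corpus_vecs @ query_vec
    return np.argsort(-scores)[:k].tolist()


def share_preferring_first(anchors, props_first, props_second):
    """Fraction of anchors more similar to the first proposition than to the second.

    Each argument is an (m, dim) array; row i of each forms one probe
    (occupation sentence, proposition with one gendered pronoun, proposition with the other).
    """
    wins = 0
    for anchor, first, second in zip(anchors, props_first, props_second):
        sims = cosine_scores(anchor, np.stack([first, second]))
        wins += int(sims[0] > sims[1])
    return wins / len(anchors)


# Toy 2-D "embeddings" standing in for real model outputs.
corpus = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]])
query = np.array([1.0, 0.0])
print(cosine_scores(query, corpus).round(3))  # sentence similarity scores
print(top_k_by_dot(query, corpus))            # [0, 2]
```

A share far from 0.5 over many sampled probes would indicate that the model systematically prefers one gendered proposition for occupation sentences that mention no gender.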

The Community week using JAX/Flax for NLP & CV has been an intense and highly rewarding experience! The quality of the guidance from Google’s Flax, JAX, and Cloud team members and from the Hugging Face team, and their presence throughout, helped us all learn a lot. We hope all projects had as much fun as we did in ours. Whenever you have questions or suggestions, don’t hesitate to contact us!