This post is step one of a journey for Hugging Face to democratize

state-of-the-art Machine Learning production performance.

To get there, we’ll work hand in hand with our

Hardware Partners, as we now have with Intel below.

Join us on this journey, and follow Optimum, our latest open source library!

Why 🤗 Optimum?

🤯 Scaling Transformers is difficult

What do Tesla, Google, Microsoft and Facebook all have in common?

Well many things, but certainly one of them is all of them run billions of Transformer model predictions

on daily basis. Transformers for AutoPilot to drive your Tesla (lucky you!),

for Gmail to finish your sentences,

for Facebook to translate your posts on the fly,

for Bing to reply your natural language queries.

Transformers have brought a step change improvement

within the accuracy of Machine Learning models, have conquered NLP and are actually expanding

to other modalities starting with Speech

and Vision.

But taking these massive models into production, and making them run fast at scale is a large challenge

for any Machine Learning Engineering team.

What in the event you don’t have a whole lot of highly expert Machine Learning Engineers on payroll just like the above corporations?

Through Optimum, our latest open source library, we aim to construct the definitive toolkit for Transformers production performance,

and enable maximum efficiency to coach and run models on specific hardware.

🏭 Optimum puts Transformers to work

To get optimal performance training and serving models, the model acceleration techniques must be specifically compatible with the targeted hardware.

Each hardware platform offers specific software tooling,

features and knobs that may have a big impact on performance.

Similarly, to benefit from advanced model acceleration techniques like sparsity and quantization, optimized kernels must be compatible with the operators on silicon,

and specific to the neural network graph derived from the model architecture.

Diving into this three-dimensional compatibility matrix and the way to use model acceleration libraries is daunting work,

which few Machine Learning Engineers have experience on.

Optimum goals to make this work easy, providing performance optimization tools targeting efficient AI hardware,

inbuilt collaboration with our Hardware Partners, and switch Machine Learning Engineers into ML Optimization wizards.

With the Transformers library, we made it easy for researchers and engineers to make use of state-of-the-art models,

abstracting away the complexity of frameworks, architectures and pipelines.

With the Optimum library, we’re making it easy for engineers to leverage all of the available hardware features at their disposal,

abstracting away the complexity of model acceleration on hardware platforms.

🤗 Optimum in practice: the way to quantize a model for Intel Xeon CPU

🤔 Why quantization is very important but tricky to get right

Pre-trained language models similar to BERT have achieved state-of-the-art results on a big selection of natural language processing tasks,

other Transformer based models similar to ViT and Speech2Text have achieved state-of-the-art results on computer vision and speech tasks respectively:

transformers are in all places within the Machine Learning world and are here to remain.

Nonetheless, putting transformer-based models into production might be tricky and expensive as they need loads of compute power to work.

To unravel this many techniques exist, the preferred being quantization.

Unfortunately, typically quantizing a model requires loads of work, for a lot of reasons:

- The model must be edited: some ops must be replaced by their quantized counterparts, latest ops must be inserted (quantization and dequantization nodes),

and others must be adapted to the proven fact that weights and activations will probably be quantized.

This part might be very time-consuming because frameworks similar to PyTorch work in eager mode, meaning that the changes mentioned above must be added to the model implementation itself.

PyTorch now provides a tool called torch.fx that means that you can trace and transform your model without having to really change the model implementation, however it is hard to make use of when tracing is just not supported to your model out of the box.

On top of the particular editing, it’s also vital to seek out which parts of the model must be edited,

which ops have an available quantized kernel counterpart and which ops don’t, and so forth.

-

Once the model has been edited, there are numerous parameters to play with to seek out one of the best quantization settings:

- Which type of observers should I take advantage of for range calibration?

- Which quantization scheme should I take advantage of?

- Which quantization related data types (int8, uint8, int16) are supported on my goal device?

-

Balance the trade-off between quantization and an appropriate accuracy loss.

-

Export the quantized model for the goal device.

Although PyTorch and TensorFlow made great progress in making things easy for quantization,

the complexities of transformer based models makes it hard to make use of the provided tools out of the box and get something working without putting up a ton of effort.

💡 How Intel is solving quantization and more with Neural Compressor

Intel® Neural Compressor (formerly known as Low Precision Optimization Tool or LPOT) is an open-source python library designed to assist users deploy low-precision inference solutions.

The latter applies low-precision recipes for deep-learning models to realize optimal product objectives,

similar to inference performance and memory usage, with expected performance criteria.

Neural Compressor supports post-training quantization, quantization-aware training and dynamic quantization.

With a purpose to specify the quantization approach, objective and performance criteria, the user must provide a configuration yaml file specifying the tuning parameters.

The configuration file can either be hosted on the Hugging Face’s Model Hub or might be given through a neighborhood directory path.

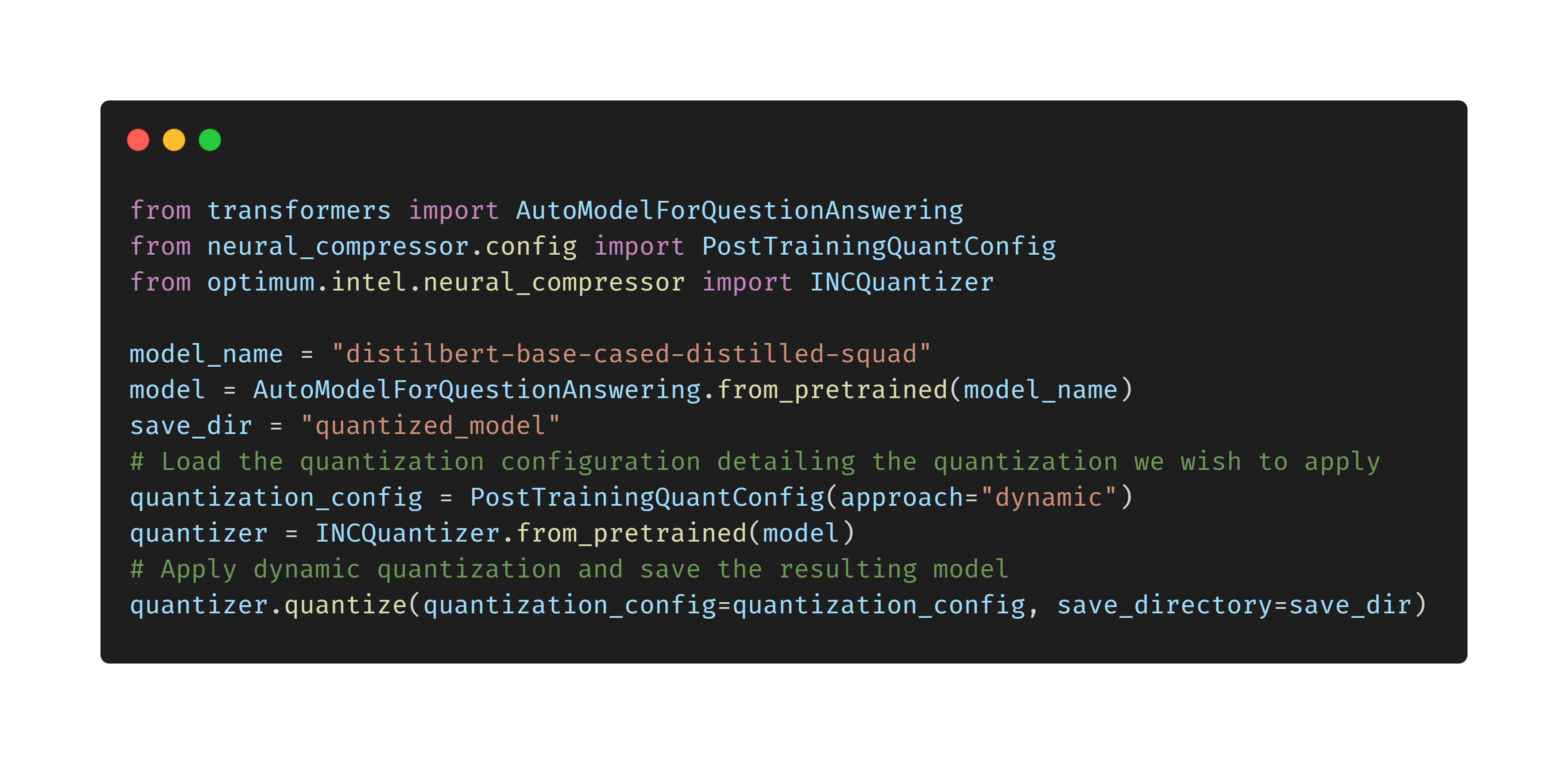

🔥 Methods to easily quantize Transformers for Intel Xeon CPUs with Optimum

Follow 🤗 Optimum: a journey to democratize ML production performance

⚡️State of the Art Hardware

Optimum will give attention to achieving optimal production performance on dedicated hardware, where software and hardware acceleration techniques might be applied for max efficiency.

We are going to work hand in hand with our Hardware Partners to enable, test and maintain acceleration, and deliver it in a simple and accessible way through Optimum, as we did with Intel and Neural Compressor.

We are going to soon announce latest Hardware Partners who’ve joined us on our journey toward Machine Learning efficiency.

🔮 State-of-the-Art Models

The collaboration with our Hardware Partners will yield hardware-specific optimized model configurations and artifacts,

which we’ll make available to the AI community via the Hugging Face Model Hub.

We hope that Optimum and hardware-optimized models will speed up the adoption of efficiency in production workloads,

which represent most of the mixture energy spent on Machine Learning.

And most of all, we hope that Optimum will speed up the adoption of Transformers at scale, not only for the largest tech corporations, but for all of us.

🌟 A journey of collaboration: join us, follow our progress

Every journey starts with a primary step, and ours was the general public release of Optimum.

Join us and make your first step by giving the library a Star,

so you possibly can follow along as we introduce latest supported hardware, acceleration techniques and optimized models.

Should you would really like to see latest hardware and features be supported in Optimum,

or you might be concerned about joining us to work on the intersection of software and hardware, please reach out to us at hardware@huggingface.co