Summer is now officially over and these last few months have been quite busy at Hugging Face. From new features in the Hub to research and Open Source development, our team has been working hard to empower the community through open and collaborative technology.

In this blog post you'll catch up on everything that happened at Hugging Face in June, July and August!

This post covers a wide range of areas our team has been working on, so don’t hesitate to skip to the parts that interest you the most 🤗

New Features

In the last few months, the Hub went from 10,000 public model repositories to over 16,000 models! Kudos to our community for sharing so many amazing models with the world. And beyond the numbers, we have a ton of cool new features to share with you!

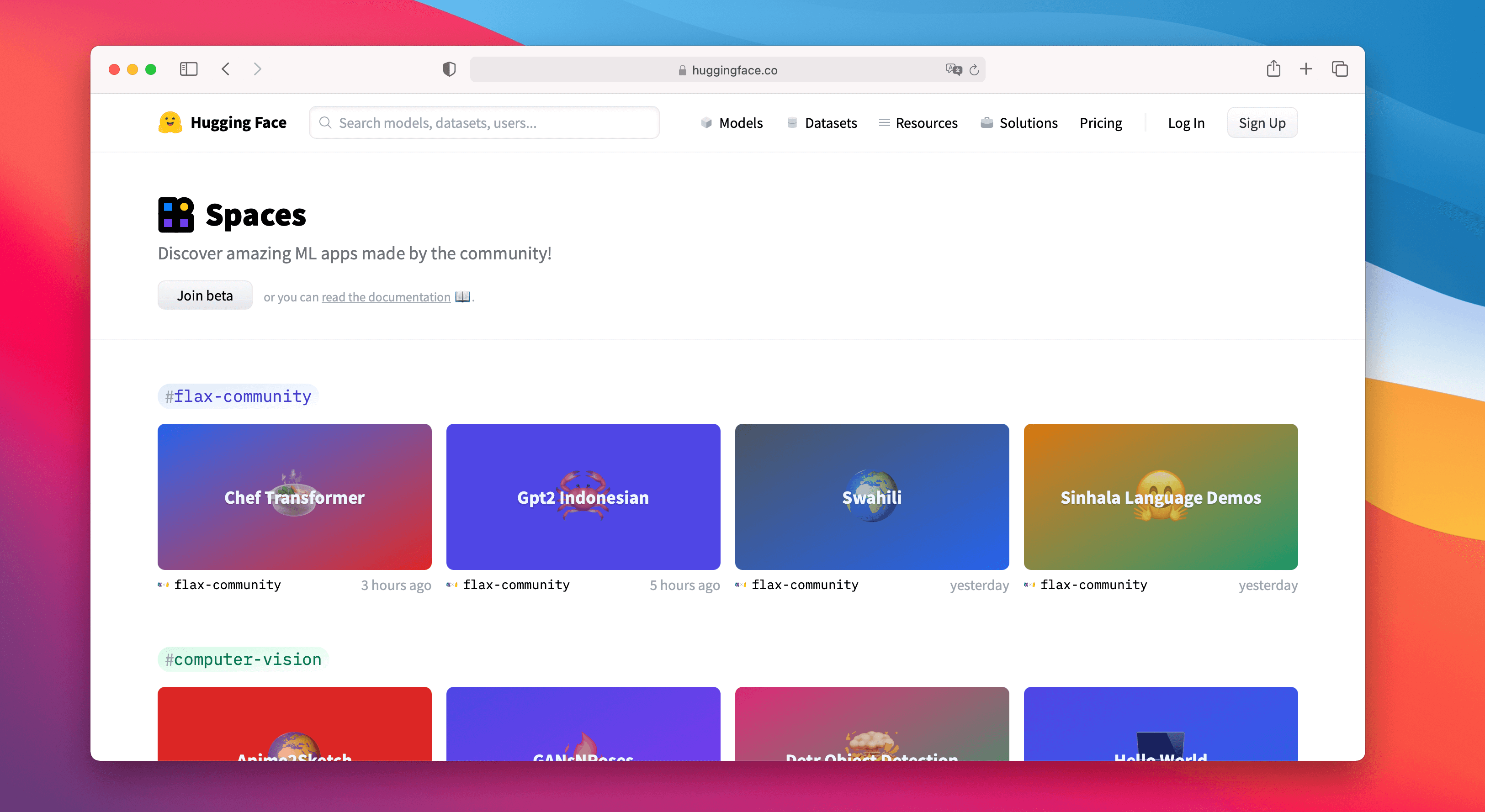

Spaces Beta (hf.co/spaces)

Spaces is a simple and free way to host Machine Learning demo applications directly in your user profile or your organization's hf.co profile. We support two awesome SDKs that let you build cool apps easily in Python: Gradio and Streamlit. In a matter of minutes you can deploy an app and share it with the community! 🚀

Spaces lets you set up secrets, permits custom requirements, and can even be managed directly from GitHub repos. You can sign up for the beta at hf.co/spaces. Here are some of our favorites!

Share Some Love

You can now like any model, dataset, or Space on http://huggingface.co, meaning you can share some love with the community ❤️. You can also keep an eye on who’s liking what by clicking on the likes box 👀. Go ahead and like your own repos, we’re not judging 😉.

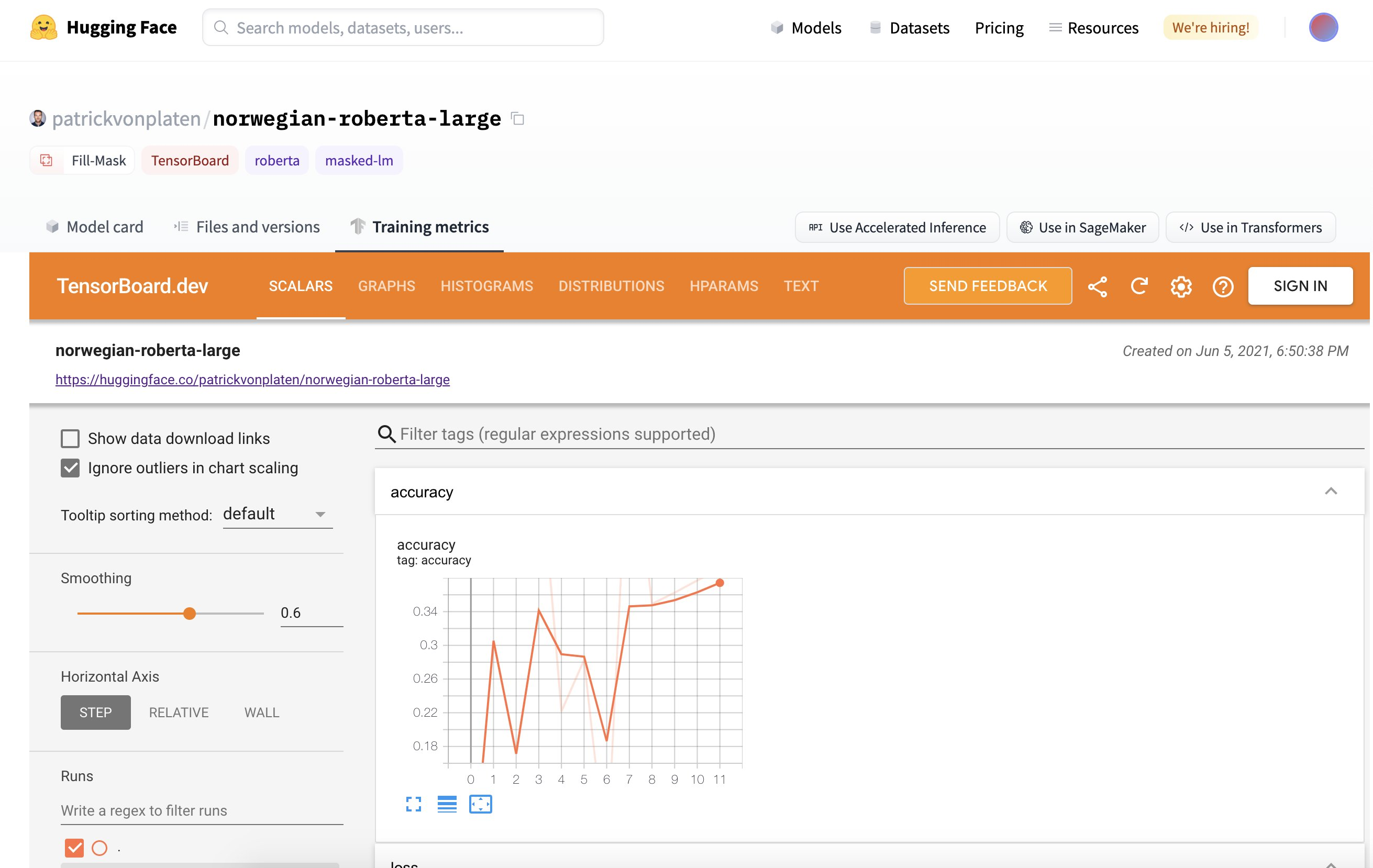

TensorBoard Integration

In late June, we launched a TensorBoard integration for all our models. If there are TensorBoard traces in the repo, an automatic, free TensorBoard instance is launched for you. This works with both public and private repositories and for any library that has TensorBoard traces!

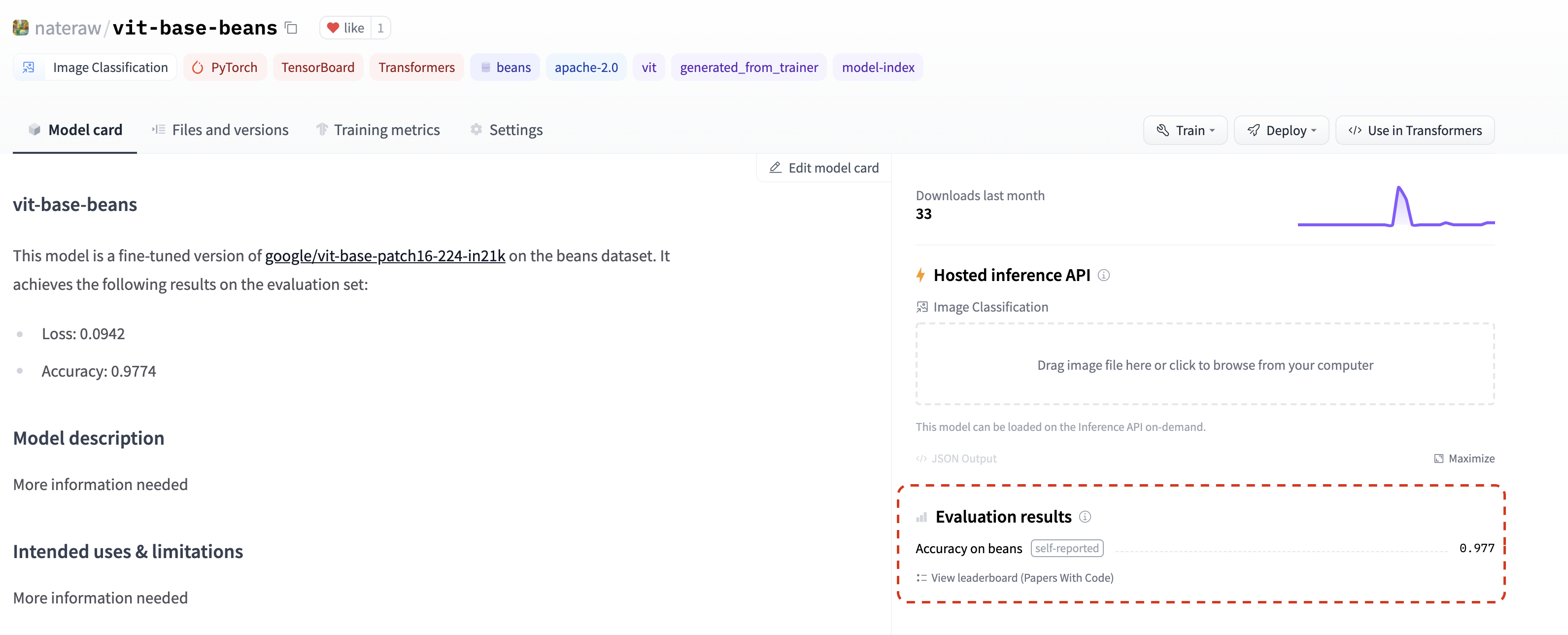

Metrics

In July, we added the ability to list evaluation metrics in model repos by adding them to their model card 📈. If you add an evaluation metric under the model-index section of your model card, it will be displayed proudly in your model repo.

If that wasn’t enough, these metrics will be automatically linked to the corresponding Papers With Code leaderboard. That means as soon as you share your model on the Hub, you can compare your results side-by-side with others in the community. 💪

Check out this repo as an example, paying close attention to the model-index section of its model card to see how you can do this yourself and have the metrics show up in Papers with Code automatically.
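As a rough sketch of what that section can contain, the snippet below renders a model-index block as YAML front matter by hand. The field layout (a task and a dataset, each with `type` and `name`, plus a list of metrics with `type`, `name` and `value`) follows our understanding of the Hub's model-index format; the function name, model name and metric value are hypothetical, so check the Hub's model card documentation before relying on it.

```python
def model_index_yaml(model_name, task, dataset, metrics):
    """Render a model-index block as YAML front matter for a model card.

    task and dataset are (type, name) pairs; metrics is a list of
    (type, name, value) triples. The layout is an assumption modeled on
    the Hub's model-index format.
    """
    task_type, task_name = task
    dataset_type, dataset_name = dataset
    lines = [
        "---",
        "model-index:",
        f"- name: {model_name}",
        "  results:",
        "  - task:",
        f"      type: {task_type}",
        f"      name: {task_name}",
        "    dataset:",
        f"      type: {dataset_type}",
        f"      name: {dataset_name}",
        "    metrics:",
    ]
    # One list entry per metric, indented under "metrics:".
    for metric_type, metric_name, value in metrics:
        lines += [
            f"    - type: {metric_type}",
            f"      name: {metric_name}",
            f"      value: {value}",
        ]
    lines.append("---")
    return "\n".join(lines)


card_header = model_index_yaml(
    "my-awesome-model",  # hypothetical model name
    task=("text-classification", "Text Classification"),
    dataset=("glue", "GLUE SST-2"),
    metrics=[("accuracy", "Accuracy", 0.91)],  # illustrative value, not a real result
)
print(card_header)
```

The printed block is meant to sit at the very top of the repo's README.md, which is where the Hub reads model card metadata from.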

New Widgets

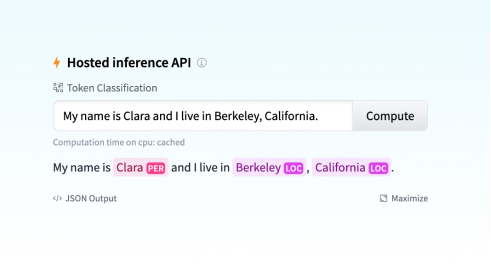

The Hub has 18 widgets that allow users to try out models directly in the browser.

With our latest integration with Sentence Transformers, we also introduced two new widgets: feature extraction and sentence similarity.
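Conceptually, a sentence similarity widget embeds a source sentence and a list of candidate sentences, then scores each candidate against the source. A minimal sketch of that scoring step, assuming cosine similarity as the score and using toy three-dimensional vectors as hypothetical stand-ins for real Sentence Transformers embeddings:

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(source_vec, candidates):
    """Return (sentence, score) pairs sorted from most to least similar."""
    scored = [(text, cosine_similarity(source_vec, vec)) for text, vec in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy embeddings (hypothetical); a real widget would get these from a model.
source = [1.0, 0.0, 1.0]
candidates = {
    "A dog is barking.": [0.9, 0.1, 1.0],
    "The stock market fell.": [0.0, 1.0, 0.0],
}
for sentence, score in rank_by_similarity(source, candidates):
    print(f"{score:.3f}  {sentence}")
```

Cosine similarity ignores vector length, so only the direction of each embedding matters when ranking candidates.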

The latest audio classification widget enables many cool use cases: language identification, street sound detection 🚨, command recognition, speaker identification, and more! You can try this out with transformers and speechbrain models today! 🔊 (Beware, when you try some of the models, you might need to bark out loud)

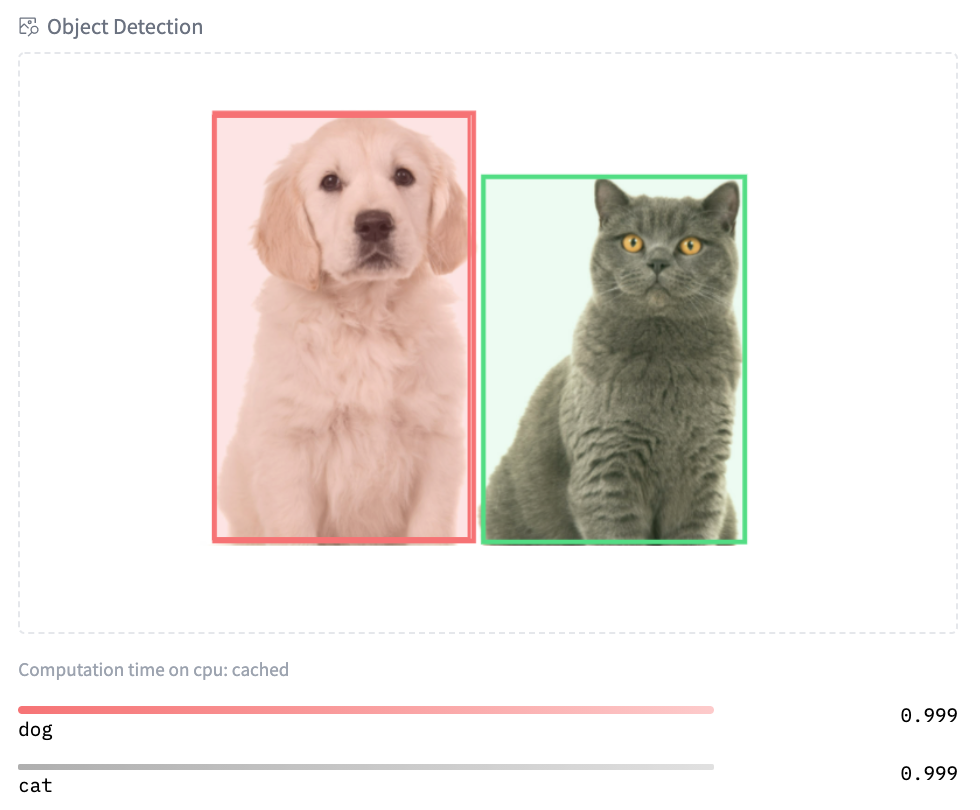

You can try our early demo of structured data classification with Scikit-learn. And finally, we also introduced new widgets for image-related models: text to image, image classification, and object detection. Try image classification with Google’s ViT model here and object detection with Facebook AI’s DETR model here!

More Features

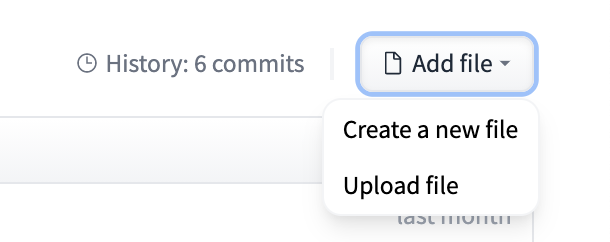

That's not everything that has happened in the Hub. We've introduced new and improved documentation of the Hub. We also introduced two widely requested features: users can now transfer/rename repositories and directly upload new files to the Hub.

Community

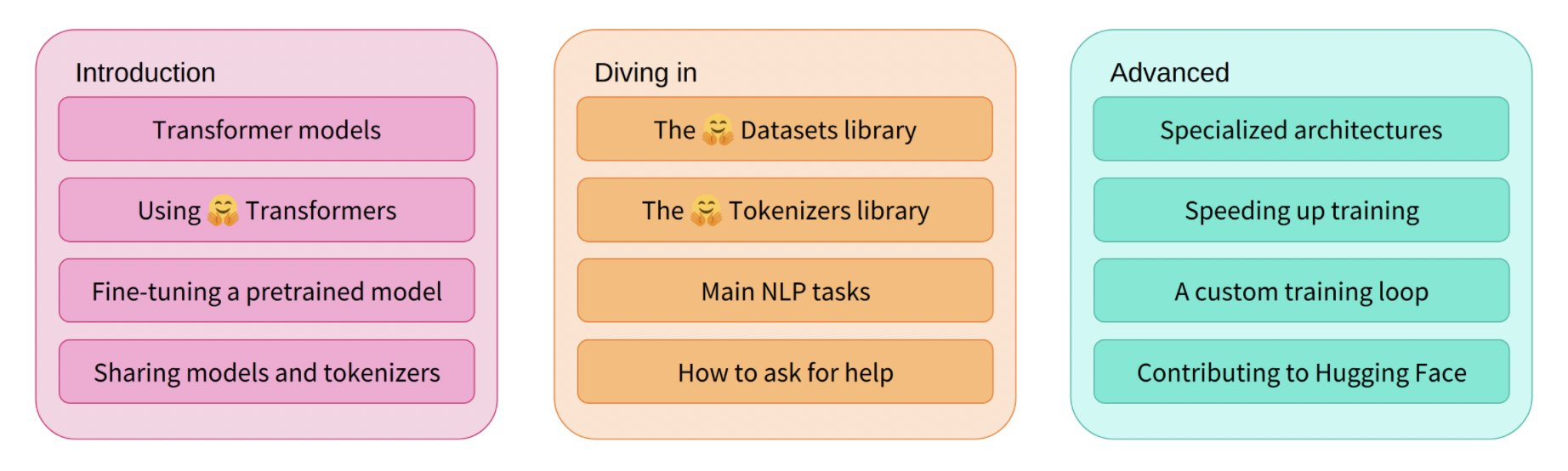

Hugging Face Course

In June, we launched the first part of our free online course! The course teaches you everything about the 🤗 Ecosystem: Transformers, Tokenizers, Datasets, Accelerate, and the Hub. You can also find links to the course lessons in the official documentation of our libraries. The live sessions for all chapters can be found on our YouTube channel. Stay tuned for the next part of the course which we’ll be launching later this year!

JAX/FLAX Sprint

In July we hosted our biggest community event ever with almost 800 participants! In this event, co-organized with the JAX/Flax and Google Cloud teams, compute-intensive NLP, Computer Vision, and Speech projects were made accessible to a wider audience of engineers and researchers by providing free TPUv3s. The participants created over 170 models, 22 datasets, and 38 Spaces demos 🤯. You can explore all the amazing demos and projects here.

There were talks about JAX/Flax, Transformers, large-scale language modeling, and more! You can find all the recordings here.

We’re really excited to share the work of the three winning teams!

-

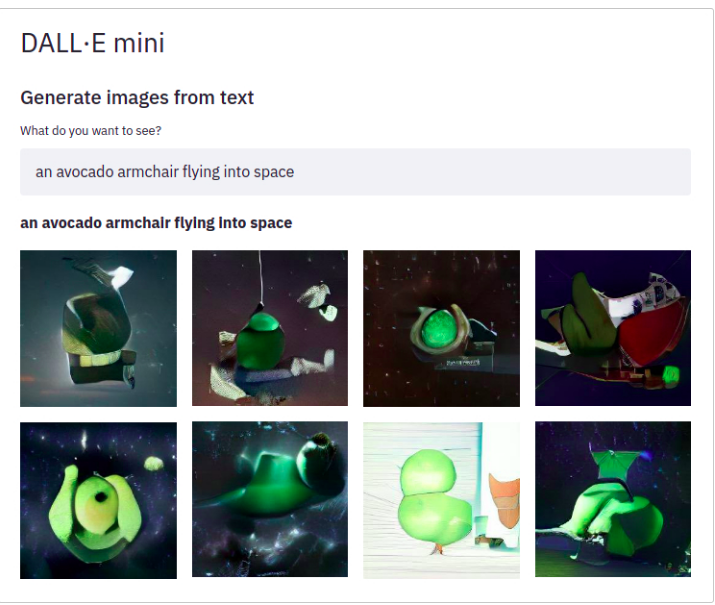

Dall-e mini. DALL·E mini is a model that generates images from any prompt you give! DALL·E mini is 27 times smaller than the unique DALL·E and still has impressive results.

-

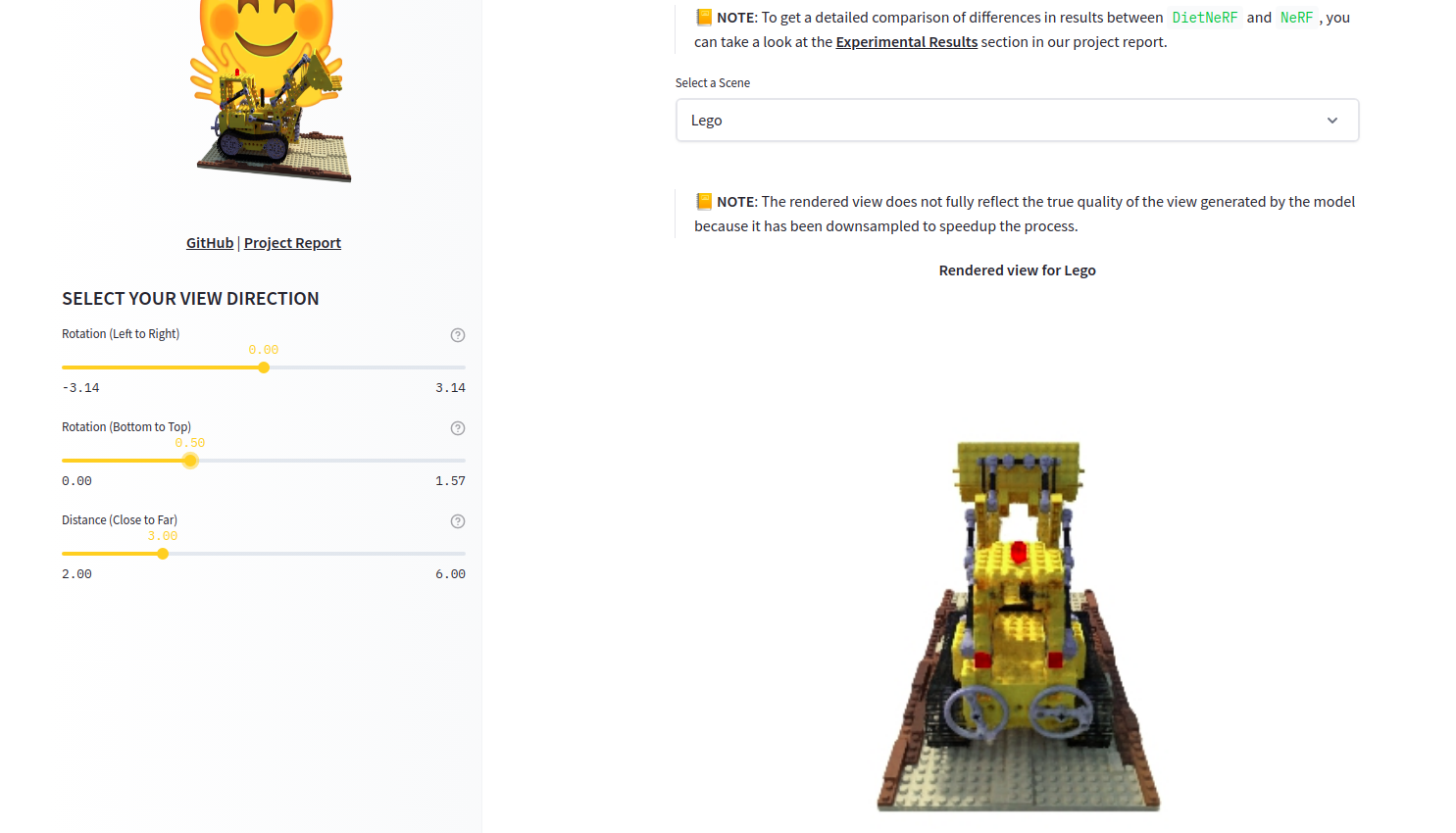

DietNerf. DietNerf is a 3D neural view synthesis model designed for few-shot learning of 3D scene reconstruction using 2D views. That is the primary Open Source implementation of the “Putting Nerf on a Food plan” paper.

-

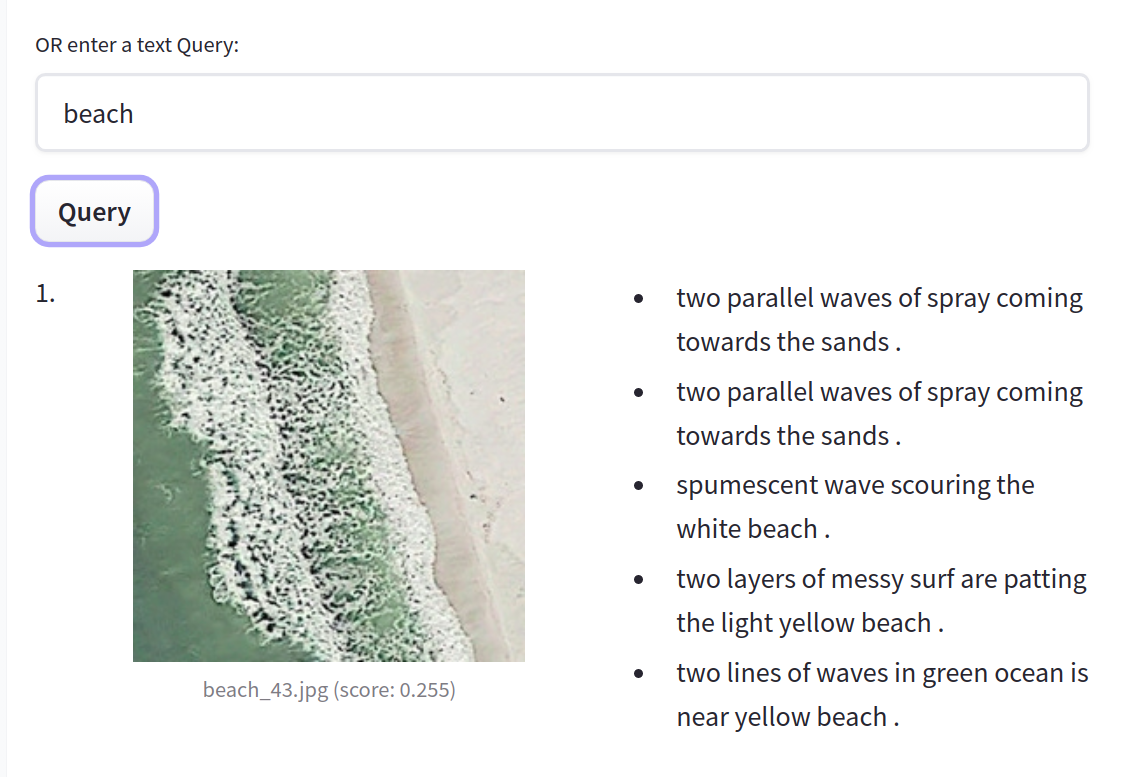

CLIP RSIC. CLIP RSIC is a CLIP model fine-tuned on distant sensing image data to enable zero-shot satellite image classification and captioning. This project demonstrates how effective fine-tuned CLIP models might be for specialised domains.

Apart from these very cool projects, we’re excited about how these community events enable training large and multi-modal models for multiple languages. For instance, we saw the first ever Open Source big LMs for some low-resource languages like Swahili, Polish and Marathi.

Bonus

On top of everything we just shared, our team has been doing lots of other things. Here are just a few of them:

- 📖 This 3-part video series shows the theory on how to train state-of-the-art sentence embedding models.

- We presented at PyTorch Community Voices and took part in a Q&A (video).

- Hugging Face has collaborated with NLP in Spanish and SpainAI on a Spanish course that teaches concepts and state-of-the-art architectures as well as their applications through use cases.

- We presented at MLOps World Demo Days.

Open Source

New in Transformers

Summer has been an exciting time for 🤗 Transformers! The library reached 50,000 stars, 30 million total downloads, and almost 1000 contributors! 🤩

So what’s new? JAX/Flax is now the third supported framework with over 5000 models in the Hub! You can find actively maintained examples for different tasks such as text classification. We’re also working hard on improving our TensorFlow support: all our examples have been reworked to be more robust, TensorFlow idiomatic, and clearer. This includes examples such as summarization, translation, and named entity recognition.

You can now easily publish your model to the Hub, including automatically authored model cards, evaluation metrics, and TensorBoard instances. There is also increased support for exporting models to ONNX with the new transformers.onnx module:

```bash
python -m transformers.onnx --model=bert-base-cased onnx/bert-base-cased/
```

The last 4 releases introduced many cool new models!

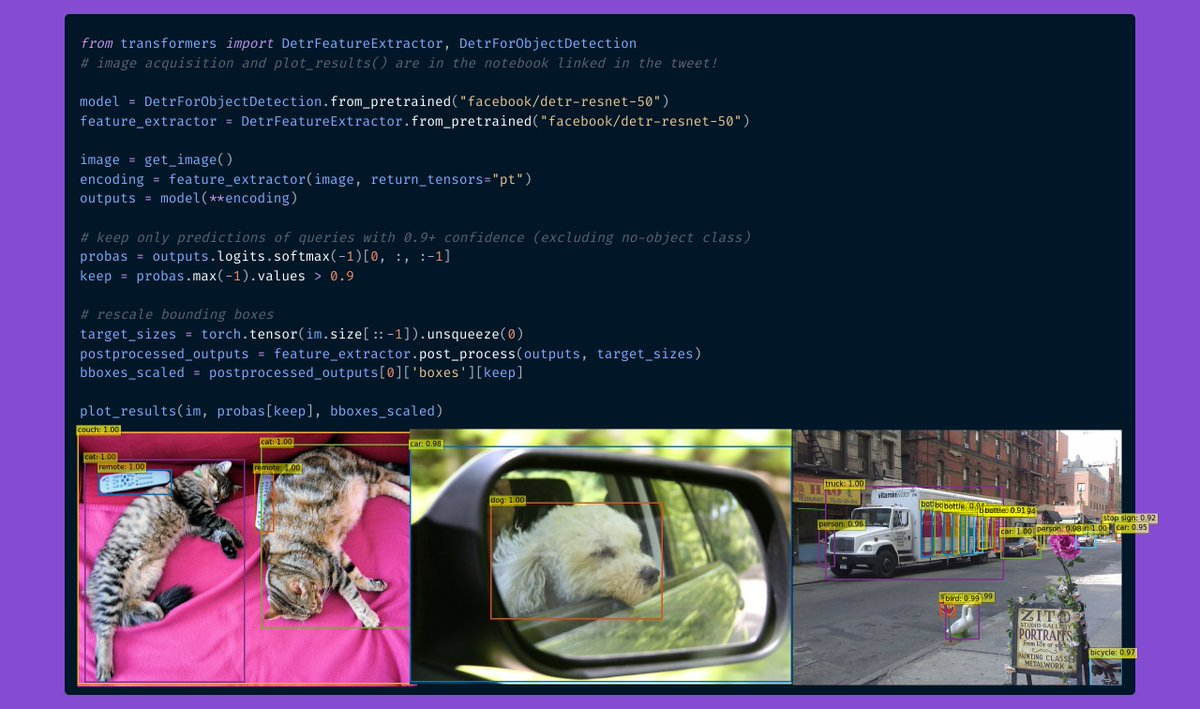

- DETR can do fast end-to-end object detection and image segmentation. Check out some of our community tutorials!

- ByT5 is the first tokenizer-free model in the Hub! You can find all available checkpoints here.

- CANINE is another tokenizer-free encoder-only model by Google AI, operating directly on the character level. You can find all (multilingual) checkpoints here.

- HuBERT shows exciting results for downstream audio tasks such as command classification and emotion recognition. Check the models here.

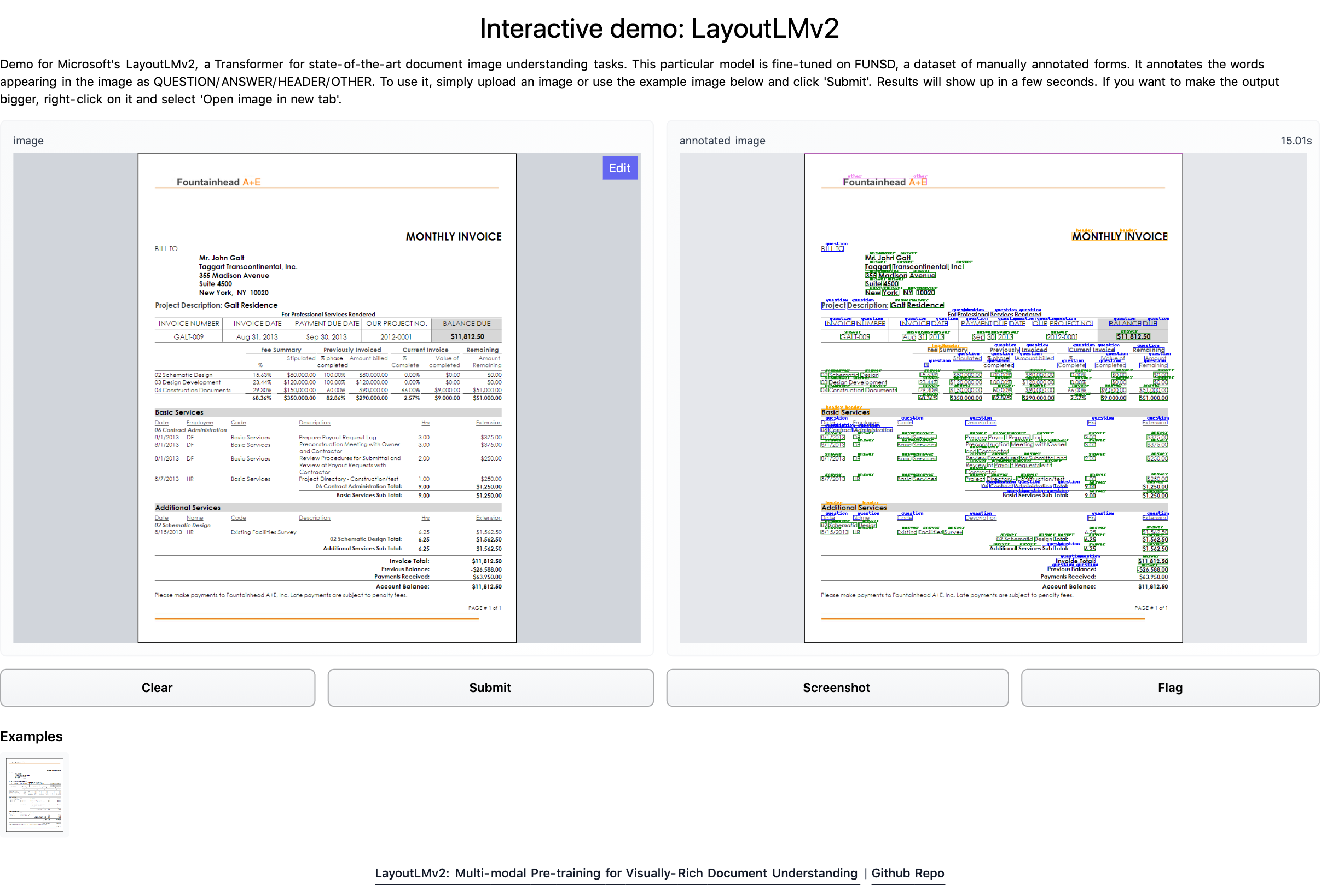

- LayoutLMv2 and LayoutXLM are two incredible models capable of parsing document images (like PDFs) by incorporating text, layout, and visual information. We built a Space demo so you can directly try it out! Demo notebooks can be found here.

- BEiT by Microsoft Research makes self-supervised Vision Transformers outperform supervised ones, using a clever pre-training objective inspired by BERT.

- RemBERT, a large multilingual Transformer that outperforms XLM-R (and mT5 with a similar number of parameters) in zero-shot transfer.

- Splinter, which can be used for few-shot question answering. Given only 128 examples, Splinter is able to reach ~73% F1 on SQuAD, outperforming MLM-based models by 24 points!

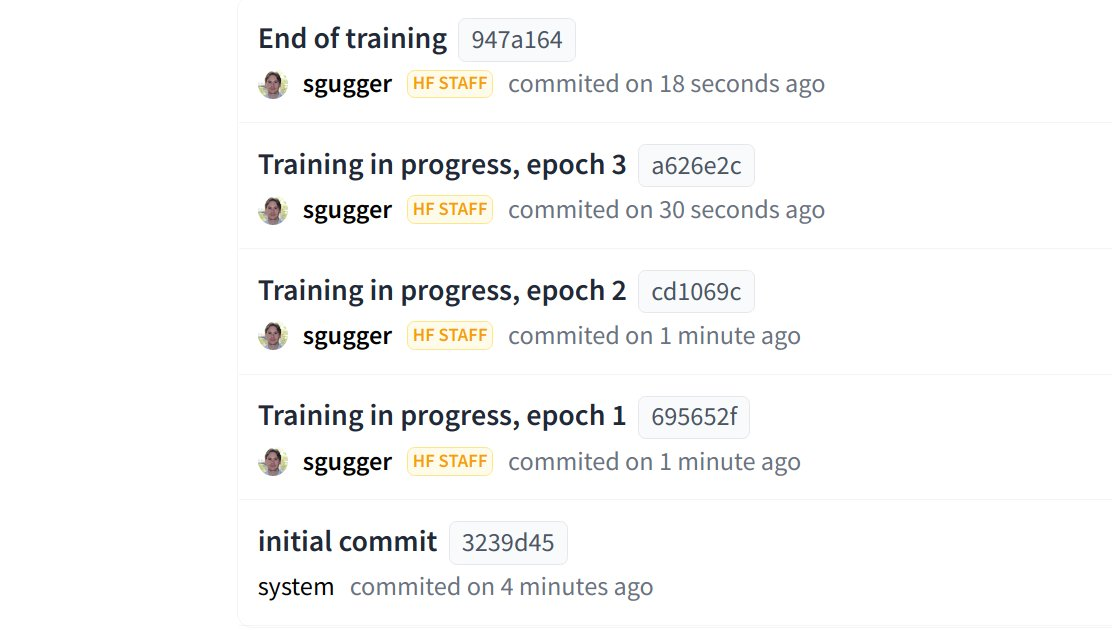

The Hub is now integrated into transformers, with the ability to push configuration, model, and tokenizer files to the Hub without leaving the Python runtime! The Trainer can now push directly to the Hub every time a checkpoint is saved.

New in Datasets

You can find 1400 public datasets in https://huggingface.co/datasets thanks to the awesome contributions from all our community. 💯

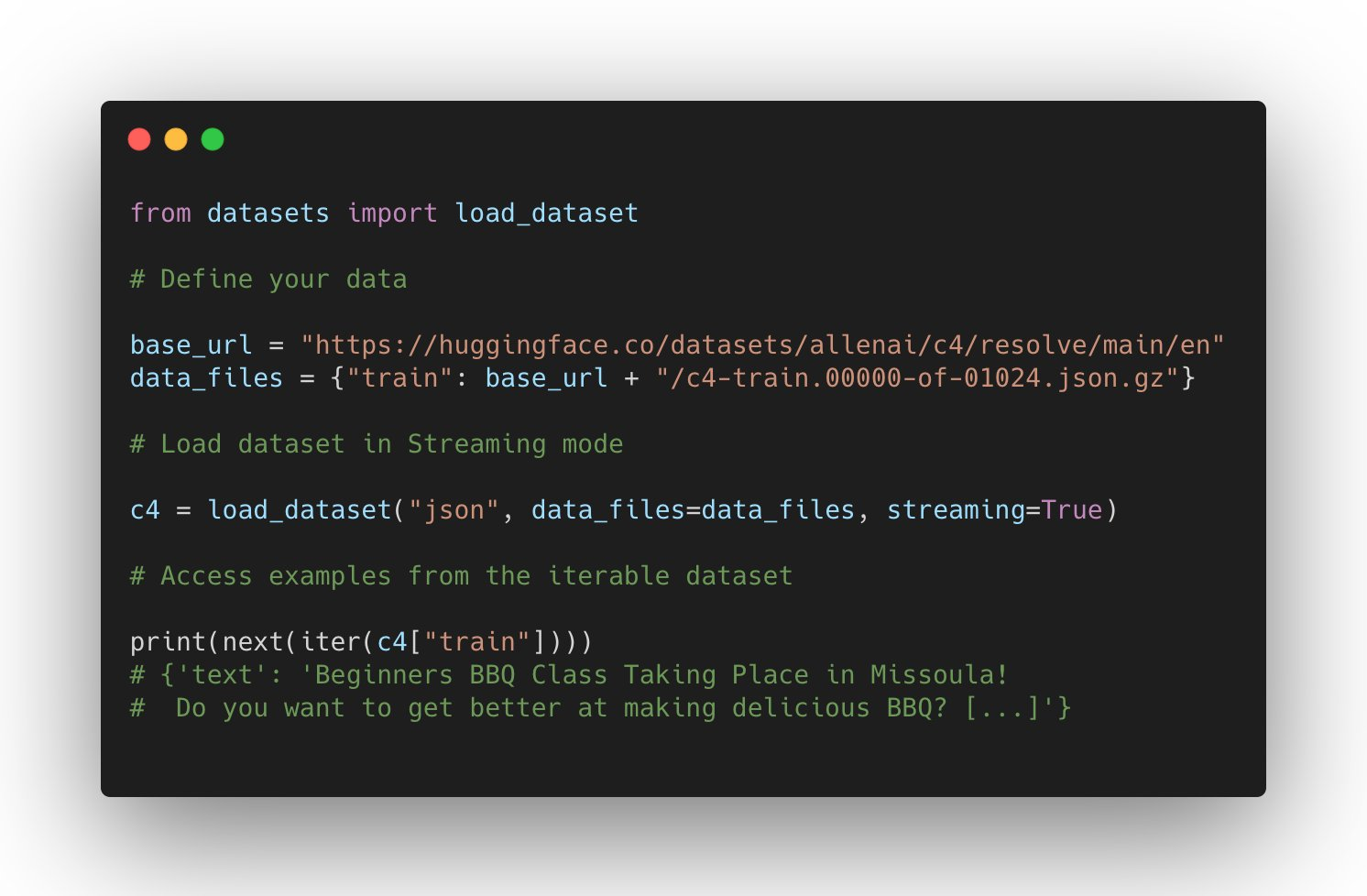

The support for datasets keeps growing: it can be used in JAX, process parquet files, use remote files, and has wider support for other domains such as Automatic Speech Recognition and Image Classification.

Users can also directly host and share their datasets with the community simply by uploading their data files to a repository on the Dataset Hub.

What are the new datasets highlights? Microsoft CodeXGlue datasets for multiple coding tasks (code completion, generation, search, etc.), huge datasets such as C4 and MC4, and many more such as RussianSuperGLUE and DISFL-QA.

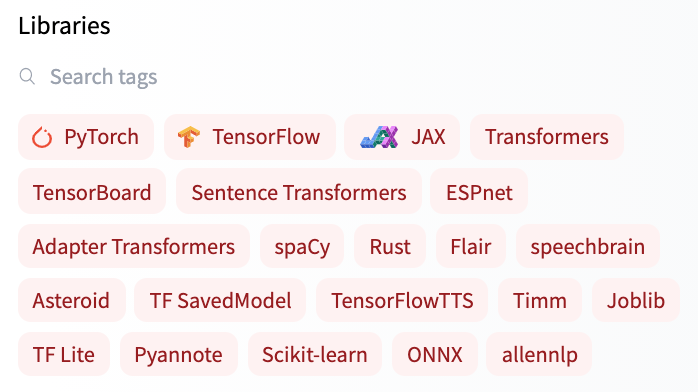

Welcoming new Libraries to the Hub

Apart from having deep integration with transformers-based models, the Hub is also building great partnerships with Open Source ML libraries to provide free model hosting and versioning. We've been achieving this with our huggingface_hub Open-Source library as well as new Hub documentation.

All spaCy canonical pipelines can now be found in the official spaCy organization, and any user can share their pipelines with a single command: python -m spacy huggingface-hub. To read more about it, head to https://huggingface.co/blog/spacy. You can try all canonical spaCy models directly in the Hub in the demo Space!

Another exciting integration is Sentence Transformers. You can read more about it in the blog announcement: you can find over 200 models in the Hub, easily share your models with the rest of the community and reuse models from the community.

But that's not all! You can now find over 100 Adapter Transformers in the Hub and try out Speechbrain models with widgets directly in the browser for different tasks such as audio classification. If you're interested in our collaborations to integrate new ML libraries into the Hub, you can read more about them here.

Solutions

Coming soon: Infinity

Transformers latency down to 1ms? 🤯🤯🤯

We have been working on a really sleek solution to achieve unmatched efficiency for state-of-the-art Transformer models, for companies to deploy in their own infrastructure.

- Infinity comes as a single container and can be deployed in any production environment.

- It can achieve 1ms latency for BERT-like models on GPU and 4-10ms on CPU 🤯🤯🤯

- Infinity meets the highest security requirements and can be integrated into your system without the need for internet access. You have control over all incoming and outgoing traffic.

⚠️ Join us for a live announcement and demo on Sep 28, where we will be showcasing Infinity for the first time in public!

NEW: Hardware Acceleration

Hugging Face is partnering with leading AI hardware accelerators such as Intel, Qualcomm and GraphCore to make state-of-the-art production performance accessible and extend training capabilities on SOTA hardware. As the first step in this journey, we introduced a new Open Source library: 🤗 Optimum – the ML optimization toolkit for production performance 🏎. Learn more in this blog post.

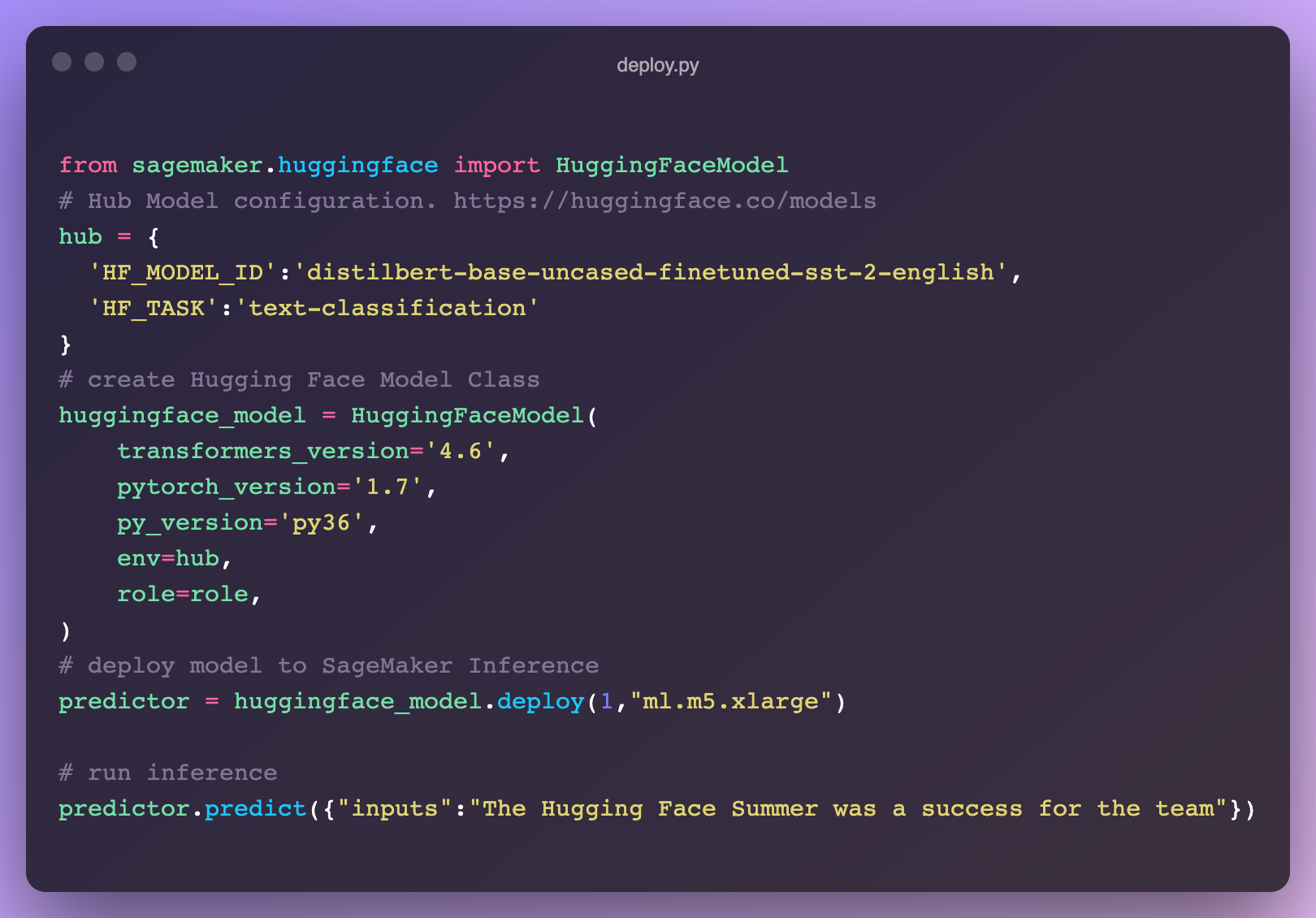

NEW: Inference on SageMaker

We launched a new integration with AWS to make it easier than ever to deploy 🤗 Transformers in SageMaker 🔥. Pick up the code snippet right from the 🤗 Hub model page! Learn more about how to leverage transformers in SageMaker in our docs or check out these video tutorials.

For questions, reach out to us on the forum: https://discuss.huggingface.co/c/sagemaker/17

NEW: AutoNLP In Your Browser

We released a brand new AutoNLP experience: a web interface to train models straight from your browser! Now all it takes is a few clicks to train, evaluate and deploy 🤗 Transformers models on your own data. Try it out – NO CODE needed!

Inference API

Webinar:

We hosted a live webinar to show how to add Machine Learning capabilities with just a few lines of code. We also built a VSCode extension that leverages the Hugging Face Inference API to generate comments describing Python code.
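To give a feel for the "few lines of code" part, here is a minimal sketch using only the Python standard library. It assumes the Inference API's `https://api-inference.huggingface.co/models/<model_id>` endpoint, bearer-token authentication and a JSON body with an `inputs` field; the helper name is hypothetical, the token is a placeholder, and the request is only built here, not sent.

```python
import json
import urllib.request

# Assumed endpoint pattern for the hosted Inference API.
API_URL = "https://api-inference.huggingface.co/models/{model_id}"


def build_inference_request(model_id, inputs, token, parameters=None):
    """Build (but do not send) a POST request to the hosted Inference API."""
    payload = {"inputs": inputs}
    if parameters:
        payload["parameters"] = parameters
    return urllib.request.Request(
        API_URL.format(model_id=model_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_inference_request(
    "distilbert-base-uncased-finetuned-sst-2-english",
    "I love this new feature!",
    token="hf_xxx",  # placeholder, use your own API token
)
print(req.full_url)
# To actually call the API: json.load(urllib.request.urlopen(req))
```

Keeping request construction separate from sending makes the payload easy to inspect and reuse across tasks.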

Hugging Face + Zapier Demo

20,000+ Machine Learning models connected to 3,000+ apps? 🤯 By leveraging the Inference API, you can now easily connect models right into apps like Gmail, Slack, Twitter, and more. In this demo video, we created a zap that uses this code snippet to analyze your Twitter mentions and alert you on Slack about the negative ones.
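The core of that zap is deciding which mentions are negative. A minimal sketch of the filtering step, assuming the sentiment model returns the text-classification shape `[[{"label": ..., "score": ...}, ...]]` for each input; the sample responses, the `NEGATIVE` label name and the 0.9 threshold are hypothetical and depend on the model you pick.

```python
def negative_mentions(mentions, responses, threshold=0.9):
    """Return mentions whose top predicted label is NEGATIVE above a threshold.

    responses[i] is the (assumed) Inference API output for mentions[i]:
    a list holding one list of {"label", "score"} dicts.
    """
    flagged = []
    for text, response in zip(mentions, responses):
        # Pick the highest-scoring label for this mention.
        top = max(response[0], key=lambda pred: pred["score"])
        if top["label"] == "NEGATIVE" and top["score"] >= threshold:
            flagged.append(text)
    return flagged


mentions = ["Loving the new Spaces feature!", "The app keeps crashing, very frustrating."]
responses = [  # hypothetical API outputs
    [[{"label": "POSITIVE", "score": 0.998}, {"label": "NEGATIVE", "score": 0.002}]],
    [[{"label": "NEGATIVE", "score": 0.995}, {"label": "POSITIVE", "score": 0.005}]],
]
for text in negative_mentions(mentions, responses):
    print("Send to Slack:", text)
```

A confidence threshold keeps borderline predictions from flooding the Slack channel; tune it to how noisy the chosen model is.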

Hugging Face + Google Sheets Demo

With the Inference API, you can easily use zero-shot classification right in your spreadsheets in Google Sheets. Just add this script in Tools -> Script Editor.
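The spreadsheet script itself runs in Google Apps Script, but the underlying API call looks the same from any language. A minimal Python sketch, assuming the zero-shot payload passes the labels under `parameters.candidate_labels` and the response returns parallel `labels` and `scores` lists; the helper names and the sample response are made up for illustration.

```python
import json


def zero_shot_payload(text, labels):
    """JSON body for a zero-shot classification request (assumed format)."""
    return json.dumps({"inputs": text, "parameters": {"candidate_labels": labels}})


def top_label(response):
    """Return the highest-scoring label from a zero-shot response dict."""
    best = max(range(len(response["scores"])), key=lambda i: response["scores"][i])
    return response["labels"][best]


body = zero_shot_payload("Refund still not processed after 3 weeks", ["billing", "shipping", "praise"])
sample_response = {  # hypothetical API output for the text above
    "sequence": "Refund still not processed after 3 weeks",
    "labels": ["billing", "shipping", "praise"],
    "scores": [0.81, 0.15, 0.04],
}
print(top_label(sample_response))  # the label you would write back into the sheet cell
```

Because the candidate labels are supplied at request time, each column of the sheet can use its own label set without training anything.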

Few-shot learning in practice

We wrote a blog post that explains what Few-Shot Learning is and explores how GPT-Neo and the 🤗 Accelerated Inference API can be used to generate your own predictions.
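A few-shot prompt is just a handful of solved examples followed by the new input, so the model can continue the pattern. A minimal sketch of building one, assuming a `###` line as the separator between examples; the separator, the "Tweet:"/"Sentiment:" field names and the examples are illustrative choices, not a fixed API.

```python
def build_few_shot_prompt(examples, query, separator="###"):
    """Concatenate labeled examples and an unlabeled query into one prompt.

    examples: list of (text, label) pairs shown to the model as demonstrations.
    The prompt ends with an empty "Sentiment:" so the model fills in the label.
    """
    blocks = [f"Tweet: {text}\nSentiment: {label}" for text, label in examples]
    blocks.append(f"Tweet: {query}\nSentiment:")
    return f"\n{separator}\n".join(blocks)


prompt = build_few_shot_prompt(
    [("I loved the new course!", "Positive"), ("The demo kept failing.", "Negative")],
    "Spaces made sharing my app so easy.",
)
print(prompt)
```

The string would be sent as the `inputs` of a text-generation request to a GPT-Neo model, and the generated continuation, cut at the next separator, is the prediction.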

Expert Acceleration Program

Check out the brand new home for the Expert Acceleration Program; you can now get direct, premium support from our Machine Learning experts and build better ML solutions, faster.

Research

At BigScience we held our first live event (since the kick-off) in July: BigScience Episode #1. Our second event, BigScience Episode #2, was held on September 20th, 2021 with technical talks and updates by the BigScience working groups and invited talks by Jade Abbott (Masakhane), Percy Liang (Stanford CRFM), Stella Biderman (EleutherAI) and more. We have completed the first large-scale training on Jean Zay, a 13B English-only decoder model (you can find the details here), and we’re currently deciding on the architecture of the second model. The organization working group has filed the application for the second half of the compute budget: Jean Zay V100: 2,500,000 GPU hours. 🚀

In June, we shared the results of our collaboration with the Yandex research team: DeDLOC, a method to collaboratively train your large neural networks, i.e. without using an HPC cluster, but with various accessible resources such as Google Colaboratory or Kaggle notebooks, personal computers or preemptible VMs. Thanks to this method, we were able to train sahajBERT, a Bengali language model, with 40 volunteers! And our model competes with the state of the art, and is even the best for the downstream task of classification on the Soham News Article Classification dataset. You can read more about it in this blog post. This is a fascinating line of research because it would make model pre-training much more accessible (financially speaking)!
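To make the idea more concrete, here is a heavily simplified sketch of the bookkeeping behind collaborative training: volunteers with different hardware contribute gradients computed on batches of different sizes, and an optimizer step is taken only once the group has accumulated a target global batch. The sample-weighted averaging, the function name and the numbers are our illustrative assumptions; DeDLOC's actual protocol (adaptive averaging across peers, fault tolerance, and more) is considerably more involved.

```python
def collaborative_step(contributions, target_batch_size):
    """Average volunteer gradients once enough samples have been accumulated.

    contributions: list of (num_samples, gradient) pairs, where gradient is a
    list of floats and each peer's gradient is the mean over its own samples.
    Returns the sample-weighted mean gradient, or None if the group has not
    yet reached target_batch_size samples.
    """
    total = sum(n for n, _ in contributions)
    if total < target_batch_size:
        return None  # keep accumulating; slow peers simply contribute fewer samples
    dim = len(contributions[0][1])
    averaged = [0.0] * dim
    for n, grad in contributions:
        for i in range(dim):
            # Weight each peer by how many samples its gradient represents.
            averaged[i] += grad[i] * n / total
    return averaged


peers = [
    (64, [0.2, -0.4]),  # e.g. a Colab GPU
    (16, [0.6, 0.0]),   # e.g. a laptop
    (48, [0.1, -0.2]),  # e.g. a preemptible VM
]
print(collaborative_step(peers, target_batch_size=128))
```

Weighting by sample count makes the result equal to the mean gradient over the whole global batch, regardless of how the work was split among volunteers.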

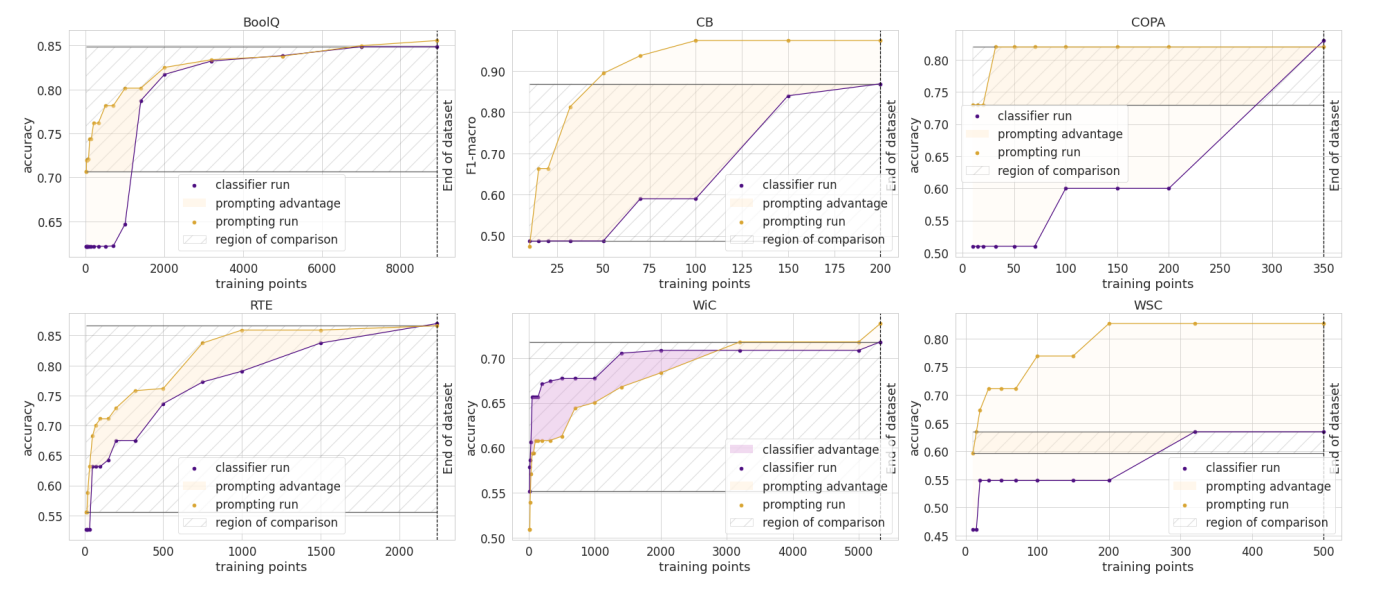

In June our paper, How Many Data Points is a Prompt Worth?, got a Best Paper award at NAACL! In it, we reconcile and compare traditional and prompting approaches to adapt pre-trained models, finding that human-written prompts are worth up to hundreds of supervised data points on new tasks. You can also read its blog post.

We’re looking forward to EMNLP this year where we have 4 accepted papers!

- Our paper “Datasets: A Community Library for Natural Language Processing” documents the Hugging Face Datasets project that has over 300 contributors. This community project gives researchers easy access to hundreds of datasets. It has facilitated new use cases of cross-dataset NLP, and has advanced features for tasks like indexing and streaming large datasets.

- Our collaboration with researchers from TU Darmstadt led to another paper accepted at the conference (“Avoiding Inference Heuristics in Few-shot Prompt-based Finetuning”). In this paper, we show that prompt-based fine-tuned language models (which achieve strong performance in few-shot setups) still suffer from learning surface heuristics (sometimes called dataset biases), a pitfall that zero-shot models don’t exhibit.

- Our submission “Block Pruning For Faster Transformers” has also been accepted as a long paper. In this paper, we show how to use block sparsity to obtain both fast and small Transformer models. Our experiments yield models that are 2.4x faster and 74% smaller than BERT on SQuAD.
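Block sparsity means pruning whole rectangular blocks of a weight matrix rather than individual weights, so the surviving weights stay grouped in dense tiles. A minimal sketch of the masking structure, assuming plain magnitude (L2 norm) scoring of blocks as a simple stand-in; the paper instead learns block scores with movement pruning during fine-tuning, so this illustrates the block layout only, not the method itself.

```python
import math


def block_prune(weights, block_rows, block_cols, keep_fraction):
    """Zero out the lowest-norm blocks of a 2D weight matrix.

    weights: list of equal-length rows; its dimensions must be divisible
    by the block shape. Returns a new pruned matrix.
    """
    n_rows, n_cols = len(weights), len(weights[0])
    assert n_rows % block_rows == 0 and n_cols % block_cols == 0
    # Score every block by the L2 norm of its entries.
    scores = {}
    for br in range(0, n_rows, block_rows):
        for bc in range(0, n_cols, block_cols):
            scores[(br, bc)] = math.sqrt(sum(
                weights[r][c] ** 2
                for r in range(br, br + block_rows)
                for c in range(bc, bc + block_cols)
            ))
    # Keep the highest-scoring blocks (at least one).
    n_keep = max(1, round(keep_fraction * len(scores)))
    kept = set(sorted(scores, key=scores.get, reverse=True)[:n_keep])
    pruned = [row[:] for row in weights]
    for br, bc in scores:
        if (br, bc) not in kept:
            for r in range(br, br + block_rows):
                for c in range(bc, bc + block_cols):
                    pruned[r][c] = 0.0
    return pruned


W = [
    [0.9, 0.8, 0.01, 0.02],
    [0.7, 0.6, 0.03, 0.01],
    [0.05, 0.02, 0.5, 0.4],
    [0.01, 0.04, 0.3, 0.6],
]
for row in block_prune(W, 2, 2, keep_fraction=0.5):
    print(row)
```

Because entire blocks drop out, the remaining weights can be stored and multiplied as dense tiles, which is the intuition behind turning sparsity into real speedups rather than just smaller files.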

Last words

😎 🔥 Summer was fun! So many things have happened! We hope you enjoyed reading this blog post and we’re looking forward to sharing the new projects we’re working on. See you in the winter! ❄️