At Hugging Face, we’re contributing to the ecosystem for Deep Reinforcement Learning researchers and enthusiasts. Recently, we have integrated Deep RL frameworks such as Stable-Baselines3.

And today we’re happy to announce that we integrated the Decision Transformer, an Offline Reinforcement Learning method, into the 🤗 transformers library and the Hugging Face Hub. We have some exciting plans for improving accessibility in the field of Deep RL, and we look forward to sharing them with you over the coming weeks and months.

What’s Offline Reinforcement Learning?

Deep Reinforcement Learning (RL) is a framework to build decision-making agents. These agents aim to learn optimal behavior (policy) by interacting with the environment through trial and error and receiving rewards as unique feedback.

The agent’s goal is to maximize its cumulative reward, called return. This is because RL is based on the reward hypothesis: all goals can be described as the maximization of the expected cumulative reward.
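Written out for a single episode of length T, where r_t is the reward received at step t, the return and the objective look like this (undiscounted, matching the "cumulative reward" wording above; many RL methods also multiply each r_t by a discount factor, which we leave out here):

$$
G = \sum_{t=1}^{T} r_t, \qquad \pi^{*} = \arg\max_{\pi} \; \mathbb{E}_{\pi}\left[ G \right]
$$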

Deep Reinforcement Learning agents learn with batches of experience. The question is, how do they collect it?

A comparison between Reinforcement Learning in an Online and Offline setting, figure taken from this post

In online reinforcement learning, the agent gathers data directly: it collects a batch of experience by interacting with the environment. Then, it uses this experience immediately (or via some replay buffer) to learn from it (update its policy).
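The online loop can be sketched in a few lines. This is a minimal illustration under stated assumptions: `ToyEnv` is a hypothetical 1-D environment invented for this example, the policy is a random placeholder, and the policy update is left as a comment. The point is the data flow: every transition comes from live interaction and goes into a replay buffer that the agent samples from.

```python
import random
from collections import deque


class ToyEnv:
    """Hypothetical 1-D environment: positions 0..4, reward 1.0 on reaching 4 (episode ends)."""

    def __init__(self):
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):  # action is -1 (left) or +1 (right)
        self.pos = min(max(self.pos + action, 0), 4)
        done = self.pos == 4
        reward = 1.0 if done else 0.0
        return self.pos, reward, done


def online_training(num_steps=200, batch_size=8, seed=0):
    rng = random.Random(seed)
    env = ToyEnv()
    replay_buffer = deque(maxlen=1000)  # stores (s, a, r, s', done) transitions
    state = env.reset()
    updates = 0
    for _ in range(num_steps):
        action = rng.choice([-1, 1])  # placeholder policy: random actions
        next_state, reward, done = env.step(action)
        replay_buffer.append((state, action, reward, next_state, done))
        state = env.reset() if done else next_state
        if len(replay_buffer) >= batch_size:
            batch = rng.sample(list(replay_buffer), batch_size)
            # A real agent would update its policy on `batch` here.
            updates += 1
    return replay_buffer, updates
```

Calling `online_training()` returns the filled buffer and the number of (placeholder) updates; the environment is queried at every step, which is exactly what offline RL avoids.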

But this implies that either you train your agent directly in the real world or you have a simulator. If you don’t have one, you need to build it, which can be very complex (how do you reflect the complex reality of the real world in an environment?), expensive, and unsafe, since if the simulator has flaws, the agent will exploit them if they provide a competitive advantage.

On the other hand, in offline reinforcement learning, the agent only uses data collected from other agents or human demonstrations. It does not interact with the environment.

The process is as follows:

- Create a dataset using one or more policies and/or human interactions.

- Run offline RL on this dataset to learn a policy.
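The two steps above can be sketched as two separate functions. This is a toy illustration, not an offline RL algorithm from this post: the behavior policy is a hypothetical random walk on positions 0..4, and the "learner" is a simple stand-in that, for each logged state, keeps the logged action with the highest mean reward-to-go. What matters is that the second phase only reads the fixed dataset and never calls an environment.

```python
import random
from collections import defaultdict


def collect_dataset(num_episodes, max_steps=10, seed=0):
    """Phase 1: log (state, action, reward) trajectories from a random behavior policy."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(num_episodes):
        pos, trajectory = 0, []
        for _ in range(max_steps):
            action = rng.choice([-1, 1])
            next_pos = min(max(pos + action, 0), 4)
            reward = 1.0 if next_pos == 4 else 0.0
            trajectory.append((pos, action, reward))
            pos = next_pos
            if reward > 0:  # reaching position 4 ends the episode
                break
        dataset.append(trajectory)
    return dataset


def learn_offline(dataset):
    """Phase 2: uses only the fixed dataset (stand-in learner, no environment calls)."""
    returns_per_pair = defaultdict(list)
    for trajectory in dataset:
        rewards = [r for _, _, r in trajectory]
        for i, (state, action, _) in enumerate(trajectory):
            returns_per_pair[(state, action)].append(sum(rewards[i:]))
    best = {}  # state -> (action, mean reward-to-go)
    for (state, action), returns in returns_per_pair.items():
        mean = sum(returns) / len(returns)
        if state not in best or mean > best[state][1]:
            best[state] = (action, mean)
    return {state: action for state, (action, _) in best.items()}
```

The resulting policy is only defined for states that appear in the dataset, which leads directly to the drawback discussed next.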

This method has one drawback: the counterfactual queries problem. What do we do if our agent decides to do something for which we don’t have the data? For instance, turning right at an intersection when we don’t have this trajectory in the dataset.
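A simple way to see the problem is to check which (state, action) queries the logged data actually covers. This is a minimal sketch; the function name `split_by_coverage` and the `(state, action, reward)` transition format are hypothetical, chosen to mirror the intersection example above.

```python
def split_by_coverage(dataset, queries):
    """Split (state, action) queries into those seen in the dataset and counterfactual ones.

    dataset: iterable of (state, action, reward) transitions (hypothetical format).
    queries: list of (state, action) pairs the agent might want to evaluate.
    """
    seen = {(state, action) for state, action, _ in dataset}
    covered = [q for q in queries if q in seen]
    counterfactual = [q for q in queries if q not in seen]
    return covered, counterfactual
```

For example, if the dataset only logged going straight or turning left at an intersection, the query `("intersection", "right")` lands in the counterfactual list: nothing in the data says what happens after that action.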

There already exist some solutions to this problem, but if you want to know more about offline reinforcement learning, you can watch this video.

Introducing Decision Transformers

The Decision Transformer model was introduced in “Decision Transformer: Reinforcement Learning via Sequence Modeling” by Chen L. et al. It abstracts Reinforcement Learning as a conditional-sequence modeling problem.

The main idea is that instead of training a policy using RL methods, such as fitting a value function that tells us what action to take to maximize the return (cumulative reward), we use a sequence modeling algorithm (Transformer) that, given a desired return, past states, and actions, generates future actions to achieve this desired return. It’s an autoregressive model conditioned on the desired return, past states, and actions to generate future actions that achieve the desired return.

This is a complete shift in the Reinforcement Learning paradigm, since we use generative trajectory modeling (modeling the joint distribution of the sequence of states, actions, and rewards) to replace conventional RL algorithms. It means that in Decision Transformers, we don’t maximize the return but rather generate a series of future actions that achieve the desired return.
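The "desired return" is fed to the model as a return-to-go: in [1], the return-to-go at step t is the sum of the rewards from t until the end of the episode, $\hat{R}_t = \sum_{t'=t}^{T} r_{t'}$, and a trajectory is represented as $(\hat{R}_1, s_1, a_1, \hat{R}_2, s_2, a_2, \dots)$. A minimal sketch of computing it from a list of rewards (the function name is ours):

```python
def returns_to_go(rewards):
    """Return-to-go for each step: sum of the current and all future rewards (undiscounted)."""
    rtg = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running += rewards[t]
        rtg[t] = running
    return rtg
```

For rewards `[1.0, 0.0, 2.0]` this gives `[3.0, 2.0, 2.0]`. At evaluation time we do not know the future rewards, so we instead choose $\hat{R}_1$ as the return we want and let the model generate actions that are consistent with it.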

The process goes like this:

- We feed the last K timesteps into the Decision Transformer with 3 inputs:

- Return-to-go

- State

- Action

- The tokens are embedded either with a linear layer if the state is a vector, or with a CNN encoder if it consists of frames.

- The inputs are processed by a GPT-2 model which predicts future actions via autoregressive modeling.

Decision Transformer architecture. States, actions, and returns are fed into modality-specific linear embeddings, and a positional episodic timestep encoding is added. Tokens are fed into a GPT architecture which predicts actions autoregressively using a causal self-attention mask. Figure from [1].
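The input side of this architecture can be sketched with NumPy. This is a simplified illustration under stated assumptions: the embedding weights are random and untrained, the GPT-2 body and any normalization layers are omitted, and `build_token_sequence` is a hypothetical helper, not part of 🤗 transformers. It shows the three modality-specific linear embeddings, the shared per-timestep embedding, the interleaving into 3K tokens, and the causal mask.

```python
import numpy as np


def build_token_sequence(returns_to_go, states, actions, timesteps, hidden_size=8, seed=0):
    """Embed (R, s, a) per timestep and interleave as R_1, s_1, a_1, R_2, s_2, a_2, ...

    returns_to_go: (K,), states: (K, state_dim), actions: (K, act_dim), timesteps: (K,) ints.
    """
    rng = np.random.default_rng(seed)
    K, state_dim = states.shape
    act_dim = actions.shape[1]
    # One (random, untrained) linear embedding per modality
    W_return = rng.normal(size=(1, hidden_size))
    W_state = rng.normal(size=(state_dim, hidden_size))
    W_action = rng.normal(size=(act_dim, hidden_size))
    # Timestep embedding lookup table, added to all three tokens of the same step
    time_table = rng.normal(size=(int(timesteps.max()) + 1, hidden_size))
    t_emb = time_table[timesteps]  # (K, hidden_size)

    return_tokens = returns_to_go.reshape(K, 1) @ W_return + t_emb
    state_tokens = states @ W_state + t_emb
    action_tokens = actions @ W_action + t_emb

    # Stack to (K, 3, hidden_size), then flatten so the order is R_1, s_1, a_1, R_2, ...
    tokens = np.stack([return_tokens, state_tokens, action_tokens], axis=1)
    tokens = tokens.reshape(3 * K, hidden_size)
    # Causal mask: token i may attend only to tokens 0..i
    causal_mask = np.tril(np.ones((3 * K, 3 * K), dtype=bool))
    return tokens, causal_mask
```

With K timesteps the transformer sees 3K tokens. Because of the causal mask, the output at the s_t token can only depend on the current return-to-go and state plus earlier steps; in the paper, the action a_t is predicted from that position.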

Using the Decision Transformer in 🤗 Transformers

The Decision Transformer model is now available as part of the 🤗 transformers library. In addition, we share nine pre-trained model checkpoints for continuous control tasks in the Gym environment.

An “expert” Decision Transformer model, learned using offline RL in the Gym Walker2d environment.

Install the package

```bash
pip install git+https://github.com/huggingface/transformers
```

Loading the model

Using the Decision Transformer is relatively easy, but as it is an autoregressive model, some care has to be taken to prepare the model’s inputs at each time-step. We have prepared both a Python script and a Colab notebook that demonstrate how to use this model.

Loading a pretrained Decision Transformer is simple in the 🤗 transformers library:

```python
from transformers import DecisionTransformerModel

model_name = "edbeeching/decision-transformer-gym-hopper-expert"
model = DecisionTransformerModel.from_pretrained(model_name)
```

Creating the environment

We provide pretrained checkpoints for the Gym Hopper, Walker2D and Halfcheetah. Checkpoints for Atari environments will soon be available.

```python
import gym

env = gym.make("Hopper-v3")
state_dim = env.observation_space.shape[0]  # size of the state vector
act_dim = env.action_space.shape[0]  # size of the action vector
```

Autoregressive prediction function

The model performs an autoregressive prediction; that is to say, predictions made at the current time-step t are sequentially conditioned on the outputs from previous time-steps. This function is quite meaty, so we will aim to explain it in the comments.

```python
import torch


def get_action(model, states, actions, rewards, returns_to_go, timesteps):
    # `rewards` is passed through to the model, but past rewards are not used as inputs by this model
    state_dim, act_dim = model.config.state_dim, model.config.act_dim

    # Add a batch dimension of size 1
    states = states.reshape(1, -1, state_dim)
    actions = actions.reshape(1, -1, act_dim)
    returns_to_go = returns_to_go.reshape(1, -1, 1)
    timesteps = timesteps.reshape(1, -1)

    # Keep only the most recent `max_length` timesteps as context
    states = states[:, -model.config.max_length :]
    actions = actions[:, -model.config.max_length :]
    returns_to_go = returns_to_go[:, -model.config.max_length :]
    timesteps = timesteps[:, -model.config.max_length :]

    # Left-pad shorter histories with zeros; the attention mask is 0 on padding, 1 on real tokens
    padding = model.config.max_length - states.shape[1]
    attention_mask = torch.cat([torch.zeros(padding), torch.ones(states.shape[1])])
    attention_mask = attention_mask.to(dtype=torch.long).reshape(1, -1)
    states = torch.cat([torch.zeros((1, padding, state_dim)), states], dim=1).float()
    actions = torch.cat([torch.zeros((1, padding, act_dim)), actions], dim=1).float()
    returns_to_go = torch.cat([torch.zeros((1, padding, 1)), returns_to_go], dim=1).float()
    timesteps = torch.cat([torch.zeros((1, padding), dtype=torch.long), timesteps], dim=1)

    state_preds, action_preds, return_preds = model(
        states=states,
        actions=actions,
        rewards=rewards,
        returns_to_go=returns_to_go,
        timesteps=timesteps,
        attention_mask=attention_mask,
        return_dict=False,
    )

    # The prediction at the last position is the action to take now
    return action_preds[0, -1]
```
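To see the windowing and left-padding steps in isolation, here is a NumPy-only sketch that mirrors the slicing, zero-padding and attention-mask construction in `get_action` for a single sequence. The helper name `left_pad_window` is ours and is not part of 🤗 transformers.

```python
import numpy as np


def left_pad_window(seq, max_length):
    """Keep the last `max_length` rows of `seq` (shape (T, d)) and left-pad with zeros.

    Returns the padded array of shape (max_length, d) and a 0/1 attention mask,
    where 0 marks padding positions and 1 marks real timesteps.
    """
    seq = seq[-max_length:]  # truncate long histories to the most recent steps
    padding = max_length - seq.shape[0]
    pad = np.zeros((padding, seq.shape[1]), dtype=seq.dtype)
    padded = np.concatenate([pad, seq], axis=0)
    mask = np.concatenate([np.zeros(padding, dtype=np.int64), np.ones(seq.shape[0], dtype=np.int64)])
    return padded, mask
```

Early in an episode (3 steps with `max_length=5`) the mask is `[0, 0, 1, 1, 1]`; once the history is longer than `max_length`, only the most recent steps are kept and the mask is all ones. Padding on the left keeps the current timestep at the last position, which is why `get_action` reads `action_preds[0, -1]`.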

Evaluating the model

In order to evaluate the model, we need some additional information: the mean and standard deviation of the states that were used during training. Fortunately, these are available in the model card of each checkpoint on the Hugging Face Hub!

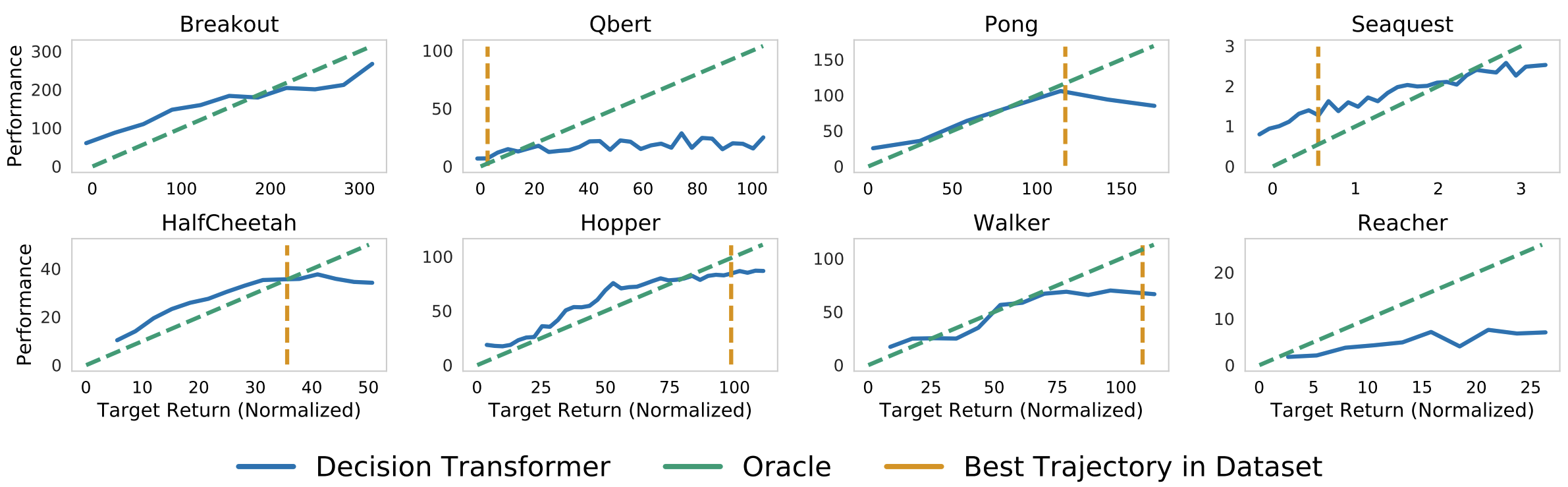
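Statistics like these are typically the per-dimension mean and standard deviation over all states in the offline training dataset. A minimal sketch of computing and applying them, assuming the dataset states are available as a NumPy array of shape `(N, state_dim)`; the function names are hypothetical, and the small `eps` guard against a zero standard deviation is our addition, not part of the evaluation code in this post.

```python
import numpy as np


def state_statistics(dataset_states):
    """Per-dimension mean and std over all states in the dataset, shape (N, state_dim)."""
    return dataset_states.mean(axis=0), dataset_states.std(axis=0)


def normalize(states, state_mean, state_std, eps=1e-6):
    """Standardize states; `eps` avoids division by zero for constant dimensions."""
    return (states - state_mean) / (state_std + eps)
```

The same mean and standard deviation must be applied at evaluation time, because the model only ever saw normalized states during training.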

We also need a target return for the model. This is the power of return-conditioned Offline Reinforcement Learning: we can use the target return to control the performance of the policy. This could be really powerful in a multiplayer setting, where we would like to adjust the performance of an opponent bot to be at a suitable difficulty for the player. The authors show a great plot of this in their paper!

Sampled (evaluation) returns accumulated by Decision Transformer when conditioned on the specified target (desired) returns. Top: Atari. Bottom: D4RL medium-replay datasets. Figure from [1].
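During a rollout, the target return works like a budget: after each step, the return-to-go fed to the model is reduced by the reward just received. A minimal sketch of that bookkeeping, assuming returns were divided by a scale of 1000 during training (so a target of 3.6 corresponds to an episode return of 3600); the scale value and the function name `conditioned_returns` are assumptions for illustration.

```python
def conditioned_returns(target_return, rewards, scale=1000.0):
    """Sequence of returns-to-go given to the model, starting at the (scaled) target.

    `scale` is the assumed reward normalization used during training.
    """
    rtg = [target_return]
    for reward in rewards:
        rtg.append(rtg[-1] - reward / scale)  # subtract the reward just collected
    return rtg
```

For example, starting from 3.6 and collecting raw rewards of 1000 and 600 leaves returns-to-go of about 2.6 and then 2.0. Asking for a lower initial target simply starts the model with a smaller budget, which is how the target return controls the policy's performance.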

```python
import numpy as np
import torch

scale = 1000.0  # assumed normalization for rewards/returns used during training
TARGET_RETURN = 3.6  # normalized target, i.e. 3600 / scale
MAX_EPISODE_LENGTH = 1000

# State statistics of the Hopper expert checkpoint, taken from its model card
state_mean = np.array(
    [1.3490015, -0.11208222, -0.5506444, -0.13188992, -0.00378754, 2.6071432,
     0.02322114, -0.01626922, -0.06840388, -0.05183131, 0.04272673])
state_std = np.array(
    [0.15980862, 0.0446214, 0.14307782, 0.17629202, 0.5912333, 0.5899924,
     1.5405099, 0.8152689, 2.0173461, 2.4107876, 5.8440027])
state_mean = torch.from_numpy(state_mean).float()
state_std = torch.from_numpy(state_std).float()

state = env.reset()
target_return = torch.tensor(TARGET_RETURN).float().reshape(1, 1)
states = torch.from_numpy(state).reshape(1, state_dim).float()
actions = torch.zeros((0, act_dim)).float()
rewards = torch.zeros(0).float()
timesteps = torch.tensor(0).reshape(1, 1).long()

for t in range(MAX_EPISODE_LENGTH):
    # Add zero placeholders for the action and reward of the current step
    actions = torch.cat([actions, torch.zeros((1, act_dim))], dim=0)
    rewards = torch.cat([rewards, torch.zeros(1)])

    action = get_action(
        model,
        (states - state_mean) / state_std,  # normalize with the training statistics
        actions,
        rewards,
        target_return,
        timesteps,
    )
    actions[-1] = action
    action = action.detach().numpy()

    state, reward, done, _ = env.step(action)

    cur_state = torch.from_numpy(state).reshape(1, state_dim).float()
    states = torch.cat([states, cur_state], dim=0)
    rewards[-1] = reward

    # Reduce the return-to-go by the (scaled) reward just received
    pred_return = target_return[0, -1] - (reward / scale)
    target_return = torch.cat([target_return, pred_return.reshape(1, 1)], dim=1)
    timesteps = torch.cat([timesteps, torch.ones((1, 1)).long() * (t + 1)], dim=1)

    if done:
        break
```

You can find a more detailed example, including the creation of videos of the agent, in our Colab notebook.

Conclusion

In addition to Decision Transformers, we want to support more use cases and tools from the Deep Reinforcement Learning community. Therefore, it would be great to hear your feedback on the Decision Transformer model, and more generally on anything we can build with you that would be useful for RL. Feel free to reach out to us.

What’s next?

In the coming weeks and months, we plan on supporting other tools from the ecosystem:

- Integrating RL-baselines3-zoo

- Uploading RL-trained-agents models into the Hub: a large collection of pre-trained Reinforcement Learning agents using stable-baselines3

- Integrating other Deep Reinforcement Learning libraries

- Implementing Convolutional Decision Transformers for Atari

- And more to come 🥳

The best way to keep in touch is to join our Discord server to exchange with us and with the community.

References

[1] Chen, Lili, et al. “Decision Transformer: Reinforcement Learning via Sequence Modeling.” Advances in Neural Information Processing Systems 34 (2021).

[2] Agarwal, Rishabh, Dale Schuurmans, and Mohammad Norouzi. “An optimistic perspective on offline reinforcement learning.” International Conference on Machine Learning. PMLR, 2020.

Acknowledgements

We would like to thank the paper’s first authors, Kevin Lu and Lili Chen, for their constructive conversations.