In recent years, language model pre-training has achieved great success by leveraging large-scale textual data. By employing pre-training tasks such as masked language modeling, these models have demonstrated surprising performance on several downstream tasks. However, the dramatic gap between the pre-training task (e.g., language modeling) and the downstream task (e.g., table question answering) makes existing pre-training inefficient. In practice, we often need an extremely large amount of pre-training data to obtain promising improvements, even for domain-adaptive pre-training. How can we design a pre-training task to close the gap, and thus speed up pre-training?

Overview

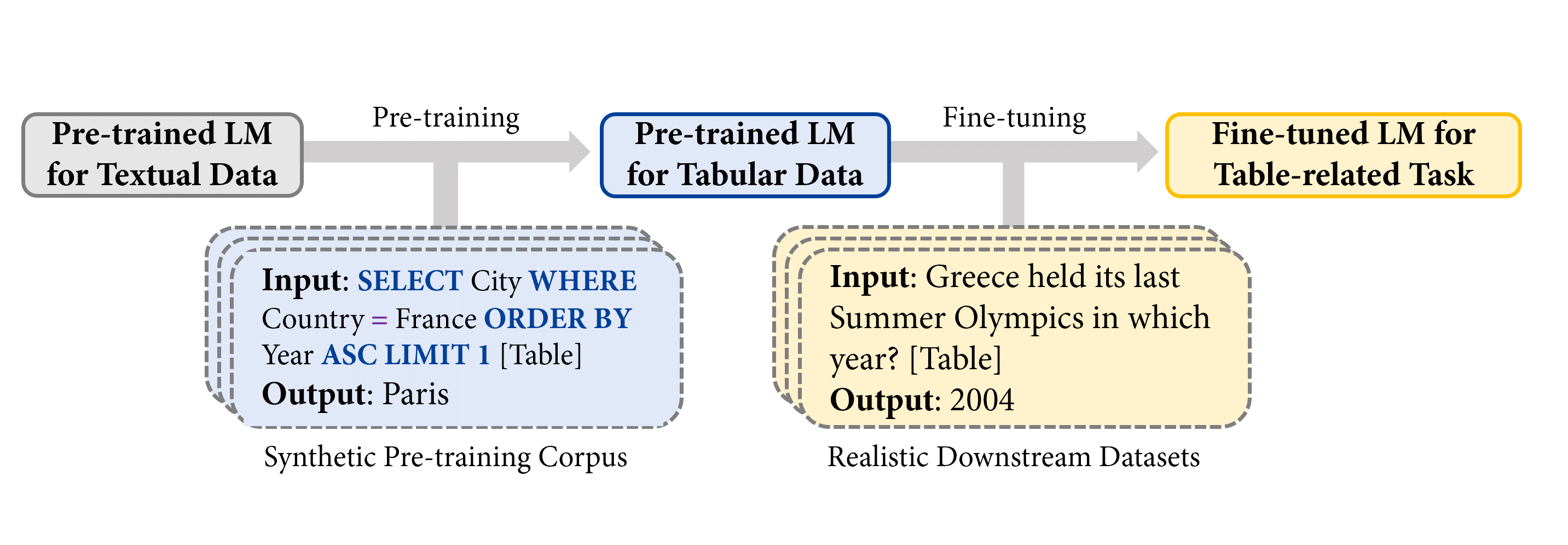

In “TAPEX: Table Pre-training via Learning a Neural SQL Executor”, we explore using synthetic data as a proxy for real data during pre-training, and demonstrate its effectiveness using TAPEX (Table Pre-training via Execution) as an example. In TAPEX, we show that table pre-training can be achieved by learning a neural SQL executor over a synthetic corpus.

Note: [Table] is a placeholder for the user-provided table in the input.

As shown in the figure above, by systematically sampling executable SQL queries and their execution outputs over tables, TAPEX first synthesizes an artificial, non-natural pre-training corpus. Then, it continues to pre-train a language model (e.g., BART) to output the execution results of SQL queries, which mimics the process of a neural SQL executor.
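The synthesis step can be sketched in a few lines. This is a minimal sketch under stated assumptions, not TAPEX's actual sampler: it uses a single hypothetical template (`SELECT <target> WHERE <cond> = <value>`), swaps in SQLite from Python's standard library as the off-the-shelf executor, and the helper names `load_table` and `synthesize_pairs` are made up for illustration. Each sampled query is executed, and its result becomes the training target.

```python
import random
import sqlite3
from typing import List, Sequence, Tuple


def load_table(conn: sqlite3.Connection, name: str,
               header: Sequence[str], rows: Sequence[Sequence[object]]) -> None:
    """Create an in-memory table (untyped columns) and insert the rows."""
    cols = ", ".join(f'"{h}"' for h in header)
    conn.execute(f'CREATE TABLE "{name}" ({cols})')
    marks = ", ".join("?" for _ in header)
    conn.executemany(f'INSERT INTO "{name}" VALUES ({marks})', rows)


def synthesize_pairs(header: List[str], rows: List[tuple], n: int,
                     seed: int = 0) -> List[Tuple[str, str]]:
    """Sample n queries from one hypothetical template and record their results."""
    rng = random.Random(seed)
    conn = sqlite3.connect(":memory:")
    load_table(conn, "t", header, rows)
    pairs = []
    for _ in range(n):
        # Template: SELECT <target> WHERE <cond> = <value>
        target, cond = rng.sample(header, 2)
        # Drawing the value from an existing row keeps every query non-empty.
        value = rng.choice(rows)[header.index(cond)]
        # Execute with parameter binding; the stored query keeps the value inline.
        result = conn.execute(
            f'SELECT "{target}" FROM t WHERE "{cond}" = ?', (value,)
        ).fetchall()
        query = f"SELECT {target} WHERE {cond} = {value}"
        answer = ", ".join(str(r[0]) for r in result)
        pairs.append((query, answer))
    conn.close()
    return pairs


header = ["Year", "City", "Country"]
rows = [(1896, "Athens", "Greece"), (1900, "Paris", "France"),
        (1924, "Paris", "France"), (2004, "Athens", "Greece")]
pairs = synthesize_pairs(header, rows, n=4)
for query, answer in pairs:
    print(query, "->", answer)
```

Each `(query, answer)` pair, together with the flattened table, becomes one pre-training example: the query and table form the input, and the answer is the output the model learns to generate.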

Pre-training

The next figure illustrates the pre-training process. At each step, we first take a table from the web. The example table is about the Olympic Games. Then we sample an executable SQL query, SELECT City WHERE Country = France ORDER BY Year ASC LIMIT 1. Through an off-the-shelf SQL executor (e.g., MySQL), we obtain the query’s execution result, Paris. Finally, we feed the concatenation of the SQL query and the flattened table to the model (e.g., the BART encoder) as input, and the execution result serves as the supervision for the model (e.g., the BART decoder) as output.
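The worked example above can be reproduced as a sketch. Assumptions: SQLite stands in for MySQL, the table rows are illustrative (not the table from the TAPEX corpus), and the `col : ... row i : ...` flattening layout in the hypothetical `linearize` helper is an assumed format rather than a guaranteed match for the official tokenizer. Note that the query as written in the example omits the `FROM` clause and string quotes, so it has to be rewritten into SQLite dialect before it can actually run.

```python
import sqlite3

header = ["Year", "City", "Country"]
# Illustrative rows only; not the table used in the TAPEX corpus.
rows = [
    (1896, "Athens", "Greece"),
    (1900, "Paris", "France"),
    (1924, "Paris", "France"),
    (2004, "Athens", "Greece"),
    (2008, "Beijing", "China"),
]


def linearize(header, rows) -> str:
    """Flatten the table row by row into one string (assumed layout)."""
    parts = ["col : " + " | ".join(header)]
    for i, row in enumerate(rows, start=1):
        parts.append(f"row {i} : " + " | ".join(str(c) for c in row))
    return " ".join(parts)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE olympics (Year INTEGER, City TEXT, Country TEXT)")
conn.executemany("INSERT INTO olympics VALUES (?, ?, ?)", rows)

# The example query, rewritten in SQLite dialect (explicit FROM, quoted string).
executable_sql = (
    "SELECT City FROM olympics WHERE Country = 'France' "
    "ORDER BY Year ASC LIMIT 1"
)
target = conn.execute(executable_sql).fetchone()[0]  # supervision (decoder side)
conn.close()

# Encoder input: the query as it appears in the corpus + the flattened table.
sql = "SELECT City WHERE Country = France ORDER BY Year ASC LIMIT 1"
source = sql + " " + linearize(header, rows)
print(source)
print(target)
```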

Why use programs such as SQL queries rather than natural language sentences as a source for pre-training? The greatest advantage is that the diversity and scale of programs can be systematically guaranteed, compared with uncontrollable natural language sentences. Therefore, we can easily synthesize a diverse, large-scale, and high-quality pre-training corpus by sampling SQL queries.
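To make "systematically guaranteed" concrete, the following sketch enumerates every instance of one hypothetical template family over a toy table. The template, the aggregate list, and the `enumerate_queries` helper are assumptions for illustration only; the point is that the space of queries is explicit, countable, and executable by construction.

```python
from itertools import permutations, product
from typing import List, Sequence


def enumerate_queries(header: List[str], rows: Sequence[tuple],
                      aggregates: Sequence[str]) -> List[str]:
    """List every instance of one template family over a single table.

    Hypothetical family: SELECT [AGG(]<target>[)] WHERE <cond> = <value>
    """
    queries = []
    for target, cond in permutations(header, 2):
        col = header.index(cond)
        # Only values that occur in the table, so every query is executable.
        values = sorted({row[col] for row in rows}, key=str)
        for agg, value in product(aggregates, values):
            select = f"{agg}({target})" if agg else target
            queries.append(f"SELECT {select} WHERE {cond} = {value}")
    return queries


header = ["Year", "City", "Country"]
rows = [(1896, "Athens", "Greece"), (1900, "Paris", "France"),
        (1924, "Paris", "France")]
queries = enumerate_queries(header, rows, aggregates=["", "MAX", "COUNT"])
print(len(queries))
print(queries[:3])
```

The count is the product of column pairs, distinct condition values, and aggregates, so adding operators, templates, or tables grows the corpus predictably. Sampling from such an explicit space, rather than from raw text, is also what lets one balance operators deliberately.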

You can try the trained neural SQL executor in 🤗 Transformers as follows:

```python
from transformers import TapexTokenizer, BartForConditionalGeneration
import pandas as pd

# Load the tokenizer and the BART model pre-trained as a neural SQL executor
tokenizer = TapexTokenizer.from_pretrained("microsoft/tapex-large-sql-execution")
model = BartForConditionalGeneration.from_pretrained("microsoft/tapex-large-sql-execution")

# A small table about Olympic host cities
data = {
    "year": [1896, 1900, 1904, 2004, 2008, 2012],
    "city": ["athens", "paris", "st. louis", "athens", "beijing", "london"]
}
table = pd.DataFrame.from_dict(data)

# The SQL query to "execute" over the table
query = "select year where city = beijing"

# The tokenizer flattens the table and concatenates it with the query
encoding = tokenizer(table=table, query=query, return_tensors="pt")

# The model generates the execution result as text
outputs = model.generate(**encoding)
print(tokenizer.batch_decode(outputs, skip_special_tokens=True))
```

Fine-tuning

During fine-tuning, we feed the concatenation of the natural language question and the flattened table to the model as input, and the answer labeled by annotators serves as the supervision for the model as output. Want to fine-tune TAPEX on your own? You can check out the fine-tuning script here, which has been officially integrated into 🤗 Transformers 4.19.0!
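Compared with pre-training, only the first part of the input changes: the SQL query is replaced by a natural language question, and the executor's result is replaced by the annotated answer. The sketch below shows the shape of one fine-tuning example using only the standard library. In practice `TapexTokenizer` does the flattening for you; the `col : ... row i : ...` layout, the hypothetical helper names, and joining multiple gold answers with `", "` are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple


def linearize(header: Sequence[str], rows: Sequence[Sequence[object]]) -> str:
    """Flatten a table row by row (assumed 'col : ... row i : ...' layout)."""
    parts = ["col : " + " | ".join(header)]
    for i, row in enumerate(rows, start=1):
        parts.append(f"row {i} : " + " | ".join(str(c) for c in row))
    return " ".join(parts)


def make_finetuning_example(question: str, header: Sequence[str],
                            rows: Sequence[Sequence[object]],
                            answers: List[str]) -> Tuple[str, str]:
    """Source: question + flattened table. Target: annotated answer(s)."""
    source = question + " " + linearize(header, rows)
    # Multiple gold answers are joined into one target string (assumption).
    target = ", ".join(answers)
    return source, target


source, target = make_finetuning_example(
    "which years did paris host the games?",
    ["Year", "City"],
    [[1896, "Athens"], [1900, "Paris"], [1924, "Paris"]],
    ["1900", "1924"],
)
print(source)
print(target)
```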

In addition, all available TAPEX models now have interactive widgets officially supported by Hugging Face! You can try to answer some questions about the table below.

| Repository | Stars | Contributors | Programming language |
|---|---|---|---|
| Transformers | 36542 | 651 | Python |
| Datasets | 4512 | 77 | Python |
| Tokenizers | 3934 | 34 | Rust, Python and NodeJS |


Experiments

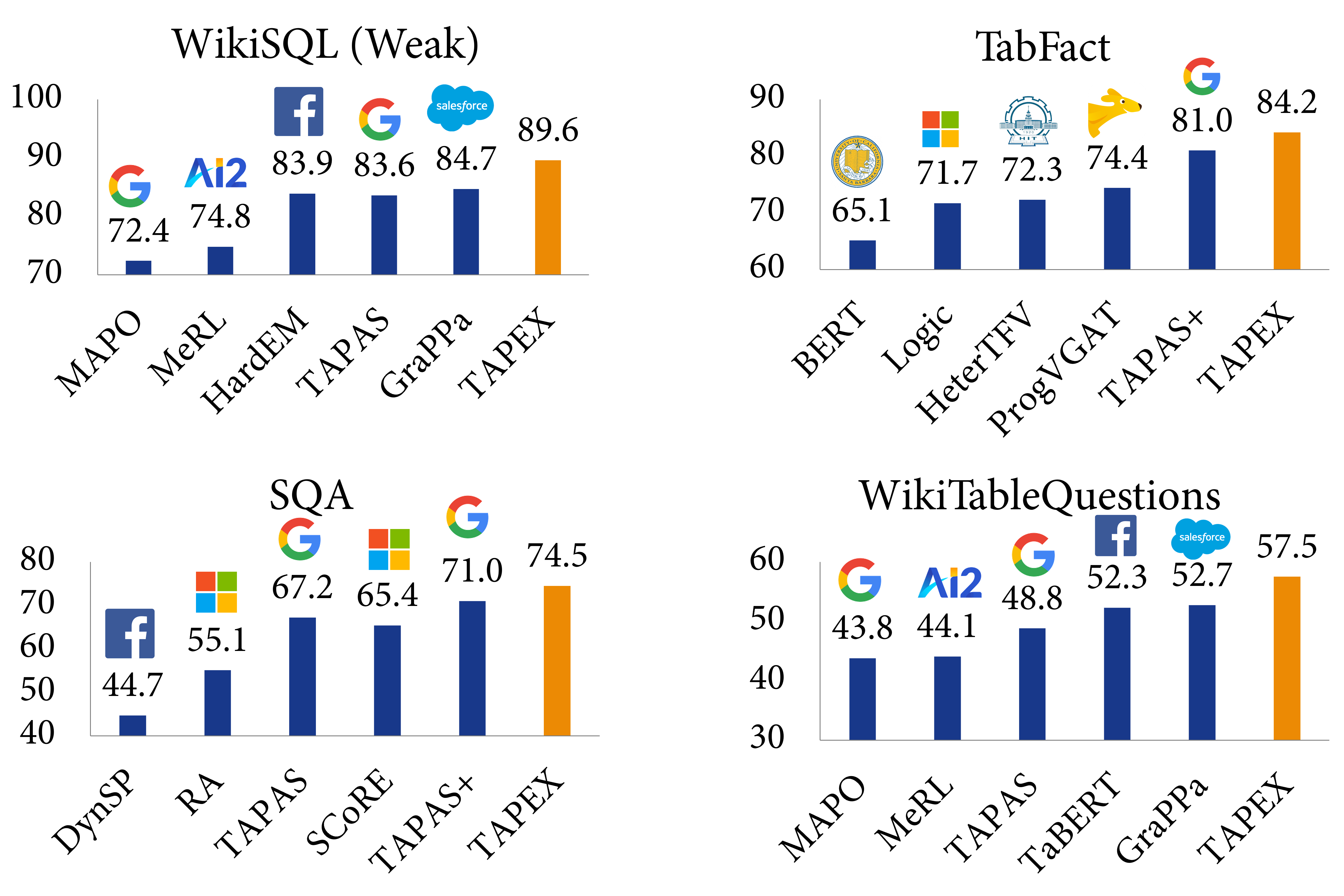

We evaluate TAPEX on 4 benchmark datasets: WikiSQL (Weak), WikiTableQuestions, SQA and TabFact. The first three datasets are about table question answering, while the last one is about table fact verification, both requiring joint reasoning about tables and natural language. Below are some examples from the most challenging dataset, WikiTableQuestions:

| Question | Answer |
|---|---|
| according to the table, what is the last title that spicy horse produced? | Akaneiro: Demon Hunters |
| what is the difference in runners-up from coleraine academical institution and royal school dungannon? | 20 |
| what were the first and last movies greenstreet acted in? | The Maltese Falcon, Malaya |
| in which olympic games did arasay thondike not finish in the top 20? | 2012 |
| which broadcaster hosted 3 titles but they had only 1 episode? | Channel 4 |

Experimental results demonstrate that TAPEX outperforms previous table pre-training approaches by a large margin and ⭐achieves new state-of-the-art results on all of them⭐. This includes improving the weakly-supervised WikiSQL denotation accuracy to 89.6% (+2.3% over SOTA, +3.8% over BART), the TabFact accuracy to 84.2% (+3.2% over SOTA, +3.0% over BART), the SQA denotation accuracy to 74.5% (+3.5% over SOTA, +15.9% over BART), and the WikiTableQuestions denotation accuracy to 57.5% (+4.8% over SOTA, +19.5% over BART). To our knowledge, this is the first work to exploit pre-training via synthetic executable programs and to achieve new state-of-the-art results on various downstream tasks.
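Several of these numbers are denotation accuracies: a prediction counts as correct when the set of answer values it produces equals the gold answer set, regardless of how it was derived. The sketch below is a simplified version of that idea, assuming answers are separated by `", "` and normalized only by lowercasing and whitespace collapsing. Official evaluators, such as the WikiTableQuestions script, typically apply richer normalization (for example of numbers and dates), and this naive splitting would mishandle answers that themselves contain commas. The predictions are made up for illustration.

```python
from typing import List, Sequence


def normalize(value: str) -> str:
    """Lowercase and collapse whitespace (a simplified normalizer)."""
    return " ".join(value.lower().split())


def denotation_match(pred: str, gold: Sequence[str], sep: str = ", ") -> bool:
    """True if the predicted answer set equals the gold answer set."""
    pred_set = {normalize(v) for v in pred.split(sep) if v.strip()}
    gold_set = {normalize(v) for v in gold}
    return pred_set == gold_set


def denotation_accuracy(preds: List[str], golds: List[Sequence[str]]) -> float:
    """Fraction of predictions whose answer set matches the gold set."""
    if len(preds) != len(golds):
        raise ValueError("preds and golds must have the same length")
    if not preds:
        return 0.0
    hits = sum(denotation_match(p, g) for p, g in zip(preds, golds))
    return hits / len(preds)


# Made-up predictions, scored against gold answers from the WikiTableQuestions examples.
preds = ["channel 4", "Malaya, The Maltese Falcon", "2008"]
golds = [["Channel 4"], ["The Maltese Falcon", "Malaya"], ["2012"]]
print(denotation_accuracy(preds, golds))
```

Treating the answer as a set is why the order of "The Maltese Falcon, Malaya" does not matter in the second example.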

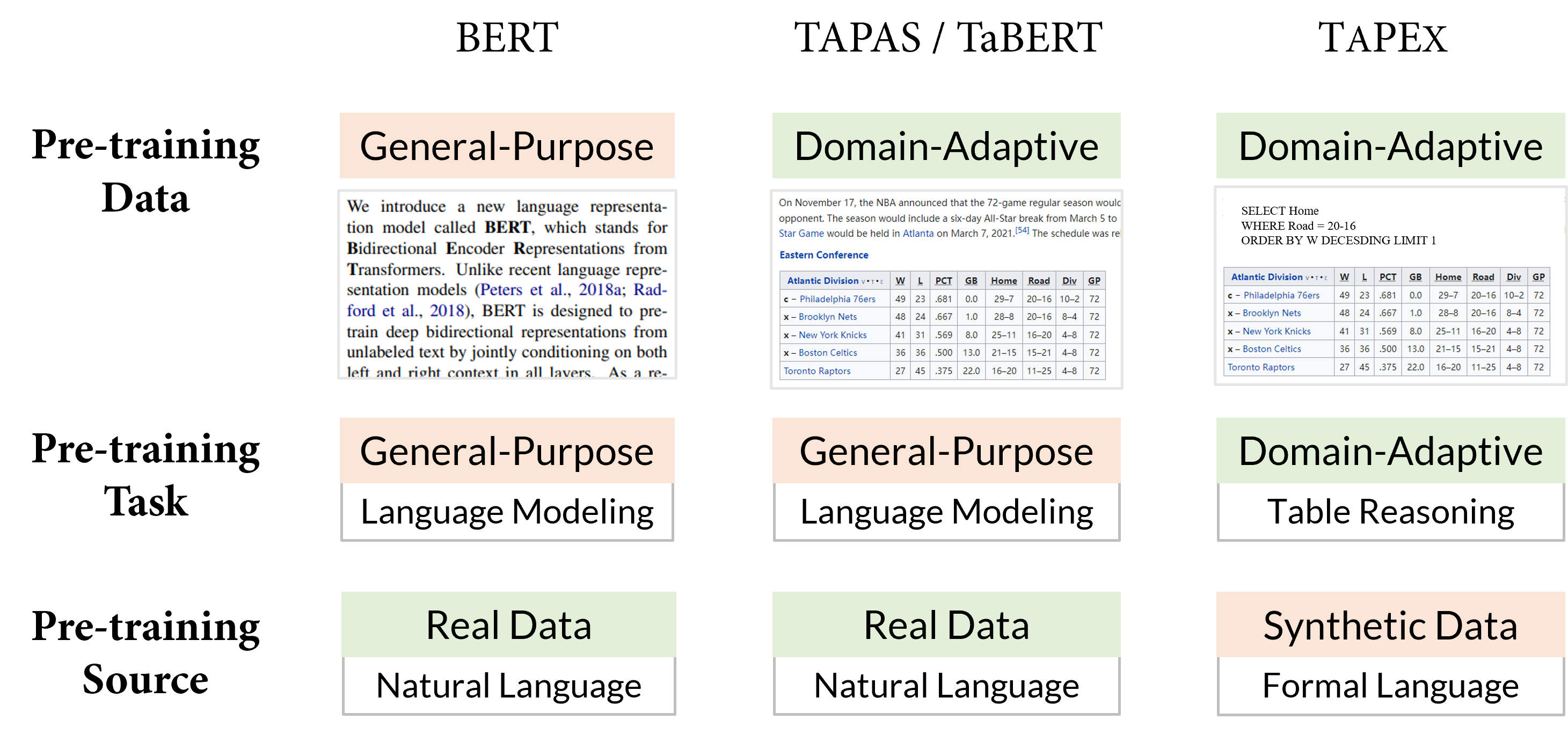

Comparison to Previous Table Pre-training

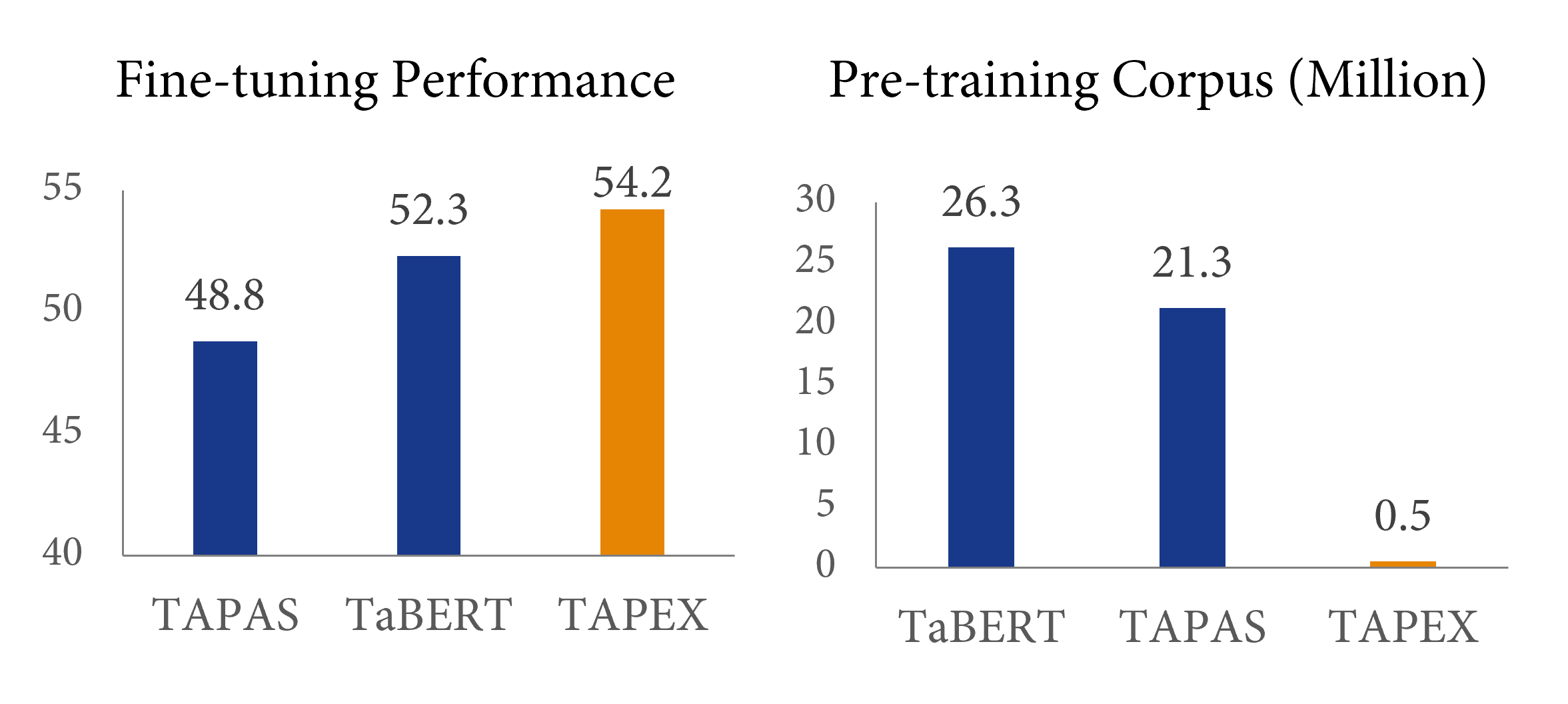

The earliest works on table pre-training, TAPAS from Google Research (also available in 🤗 Transformers) and TaBERT from Meta AI, revealed that collecting more domain-adaptive data can improve downstream performance. However, these previous works mainly employ general-purpose pre-training tasks, e.g., language modeling or its variants. TAPEX explores a different path by sacrificing the naturalness of the pre-training source in order to obtain a domain-adaptive pre-training task, i.e., SQL execution. A graphical comparison of BERT, TAPAS/TaBERT and our TAPEX is shown in the accompanying figure.

We believe the SQL execution task is closer to the downstream table question answering task, especially from the perspective of structural reasoning capabilities. Imagine you are faced with a SQL query SELECT City ORDER BY Year and a natural question Sort all cities by year. The reasoning paths required by the SQL query and the question are similar, except that SQL is somewhat more rigid than natural language. If a language model can be pre-trained to faithfully “execute” SQL queries and produce correct results, it should have a deep understanding of natural language questions with similar intents.
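The shared reasoning path can be made explicit with a small sketch. It runs the SQL query (in SQLite dialect, which requires a `FROM` clause) and then answers "Sort all cities by year" with the same two steps written in plain Python: order the rows by year, then project the city column. The table rows are illustrative assumptions.

```python
import sqlite3

# Illustrative rows, deliberately inserted out of chronological order.
rows = [(2004, "Athens"), (1900, "Paris"), (2008, "Beijing"), (1896, "Athens")]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE games (Year INTEGER, City TEXT)")
conn.executemany("INSERT INTO games VALUES (?, ?)", rows)

# SELECT City ORDER BY Year, rewritten in SQLite dialect.
sql_answer = [r[0] for r in conn.execute("SELECT City FROM games ORDER BY Year")]
conn.close()

# "Sort all cities by year": the same steps, sort by Year then project City.
nl_answer = [city for year, city in sorted(rows, key=lambda r: r[0])]

print(sql_answer)
print(sql_answer == nl_answer)
```

The two answers coincide because both follow the same sort-then-project structure; a model that has learned to execute the first form has, in effect, practiced the reasoning needed for the second.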

What about efficiency? How efficient is such a pre-training method compared with previous pre-training? The answer is given in the figure above: compared with the previous table pre-training method TaBERT, TAPEX could yield a 2% improvement using only 2% of the pre-training corpus, achieving a speedup of nearly 50 times! With a larger pre-training corpus (e.g., 5 million

Conclusion

In this blog, we introduce TAPEX, a table pre-training approach whose corpus is automatically synthesized by sampling SQL queries and their execution results. TAPEX addresses the data scarcity challenge in table pre-training by learning a neural SQL executor on a diverse, large-scale, and high-quality synthetic corpus. Experimental results on 4 downstream datasets demonstrate that TAPEX outperforms previous table pre-training approaches by a large margin, with higher pre-training efficiency.

Take Away

What can we learn from the success of TAPEX? I suggest that, especially if you want to perform efficient continual pre-training, you may try these options:

- Synthesize an accurate and small corpus, instead of mining a large but noisy corpus from the Web.

- Simulate domain-adaptive skills via programs, instead of general-purpose language modeling via natural language sentences.