⚠️ An updated version of this article is available here 👉 https://huggingface.co/deep-rl-course/unit1/introduction

This article is part of the Deep Reinforcement Learning Class, a free course from beginner to expert. Check the syllabus here.

In the first part of this unit, we learned about value-based methods and the difference between Monte Carlo and Temporal Difference Learning.

So, in the second part, we’ll study Q-Learning, implement our first RL agent from scratch (a Q-Learning agent), and train it in two environments:

- Frozen Lake v1 ❄️: where our agent will need to go from the starting state (S) to the goal state (G) by walking only on frozen tiles (F) and avoiding holes (H).

- An autonomous taxi 🚕: where the agent will need to learn to navigate a city to transport its passengers from point A to point B.

This unit is fundamental if you want to be able to work on Deep Q-Learning (Unit 3).

So let’s start! 🚀

Introducing Q-Learning

What’s Q-Learning?

Q-Learning is an off-policy value-based method that uses a TD approach to train its action-value function:

- Off-policy: we’ll talk about that at the end of this chapter.

- Value-based method: finds the optimal policy indirectly by training a value or action-value function that tells us the value of each state or each state-action pair.

- Uses a TD approach: updates its action-value function at each step instead of at the end of the episode.

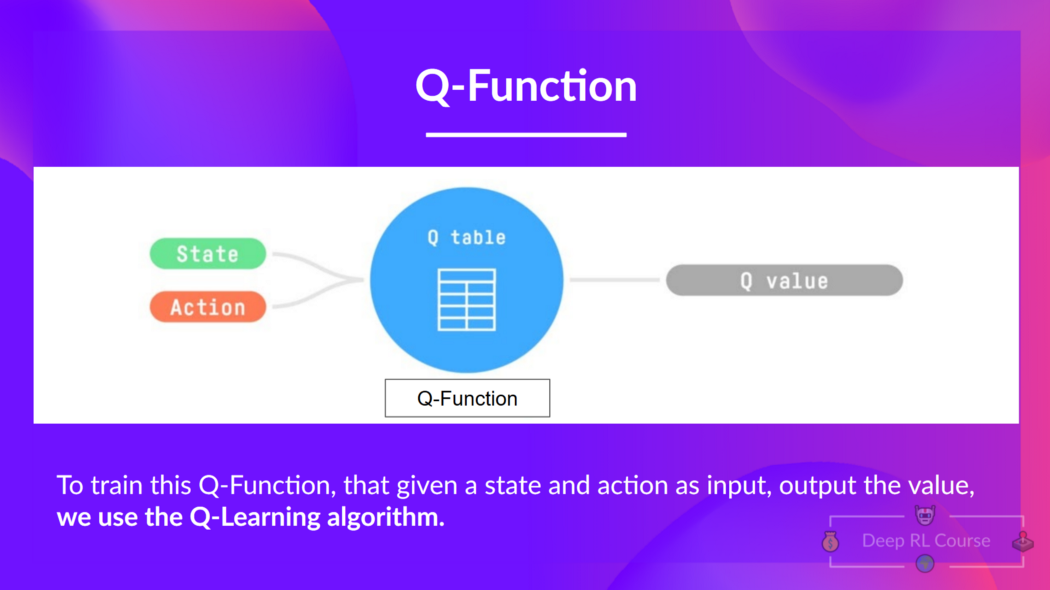

Q-Learning is the algorithm we use to train our Q-Function, an action-value function that determines the value of being at a particular state and taking a specific action at that state.

The Q comes from “the Quality” of that action at that state.

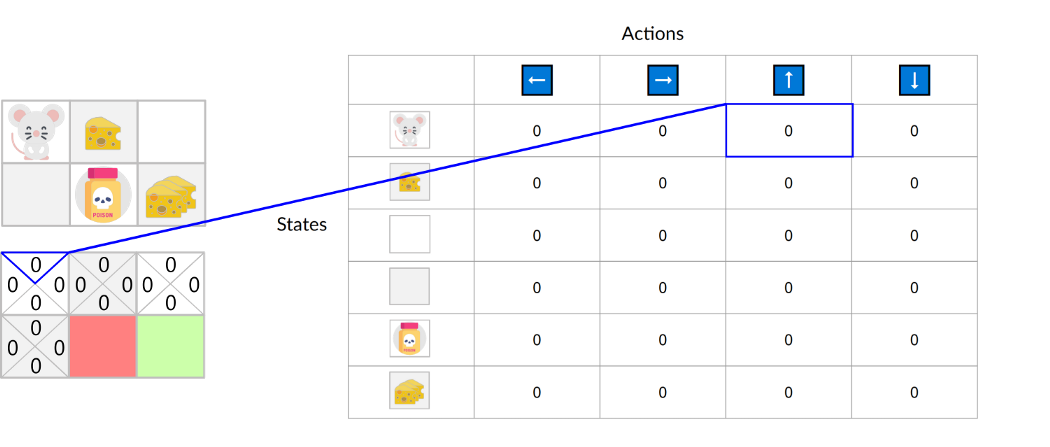

Internally, our Q-function has a Q-table, a table where each cell corresponds to a state-action pair value. Think of this Q-table as the memory or cheat sheet of our Q-function.

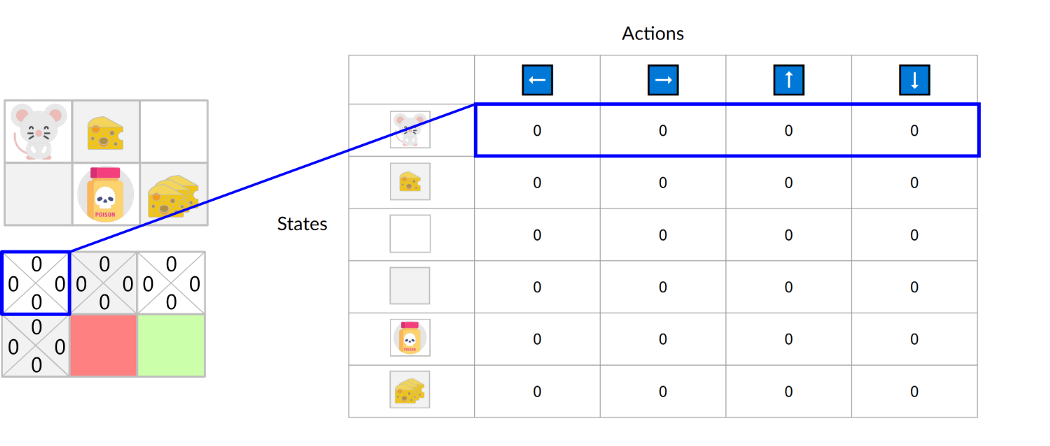

If we take this maze example:

The Q-Table is initialized. That’s why all values are 0. This table contains, for each state, the four state-action values.

Here we see that the state-action value of the initial state and going up is 0:

Therefore, the Q-function contains a Q-table that holds the value of each state-action pair. Given a state and an action, our Q-Function will search inside its Q-table to output the value.
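
As a minimal sketch of that lookup, the Q-table can be stored as a list of rows (one per state) with one entry per action. The number of states, the action names, and the helper `q_value` are assumptions made for illustration; they are not taken from the course notebook.

```python
# Hypothetical sizes and action names for a small maze (assumptions).
ACTIONS = ["left", "right", "up", "down"]
N_STATES = 6

# One row per state, one column per action, all initialized to 0.
q_table = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]


def q_value(q_table, state, action):
    """Return the stored value for a (state, action) pair."""
    return q_table[state][ACTIONS.index(action)]


# Value of the initial state (index 0) and going up, before any training.
print(q_value(q_table, 0, "up"))  # 0.0
```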

If we recap, Q-Learning is the RL algorithm that:

- Trains a Q-Function (an action-value function), which internally is a Q-table that contains all the state-action pair values.

- Given a state and an action, our Q-Function will look up the corresponding value in its Q-table.

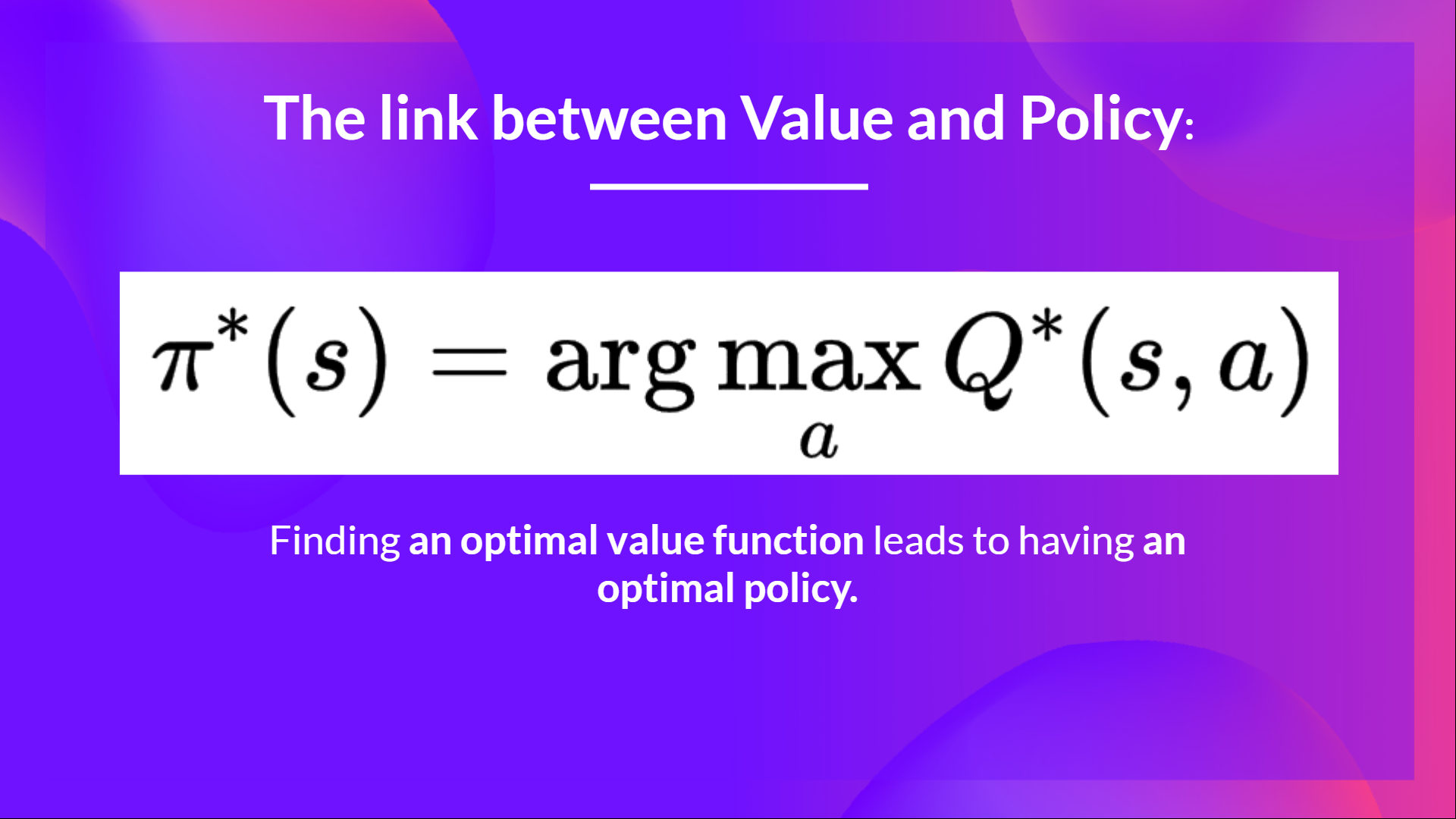

- When the training is done, we have an optimal Q-function, which means we have an optimal Q-Table.

- And if we have an optimal Q-function, we have an optimal policy, since we know for each state what the best action to take is.
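
To illustrate the last point, here is a small sketch of how a policy can be read off a Q-table: in each state, pick the action with the highest value. The Q-values below are made up for illustration, and `greedy_action` is a hypothetical helper name.

```python
def greedy_action(q_table, state):
    """Return the index of the action with the highest Q-value in `state`."""
    values = q_table[state]
    return max(range(len(values)), key=lambda a: values[a])


# Made-up Q-values for two states and four actions (illustration only).
q_table = [
    [0.0, 0.5, 0.1, -1.0],
    [0.2, 0.0, 0.9, 0.3],
]

policy = [greedy_action(q_table, s) for s in range(len(q_table))]
print(policy)  # [1, 2]
```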

But, in the beginning, our Q-Table is useless since it gives arbitrary values for each state-action pair (most of the time, we initialize the Q-Table to 0 values). But, as we explore the environment and update our Q-Table, it will give us better and better approximations.

So now that we understand what Q-Learning, Q-Function, and Q-Table are, let’s dive deeper into the Q-Learning algorithm.

The Q-Learning algorithm

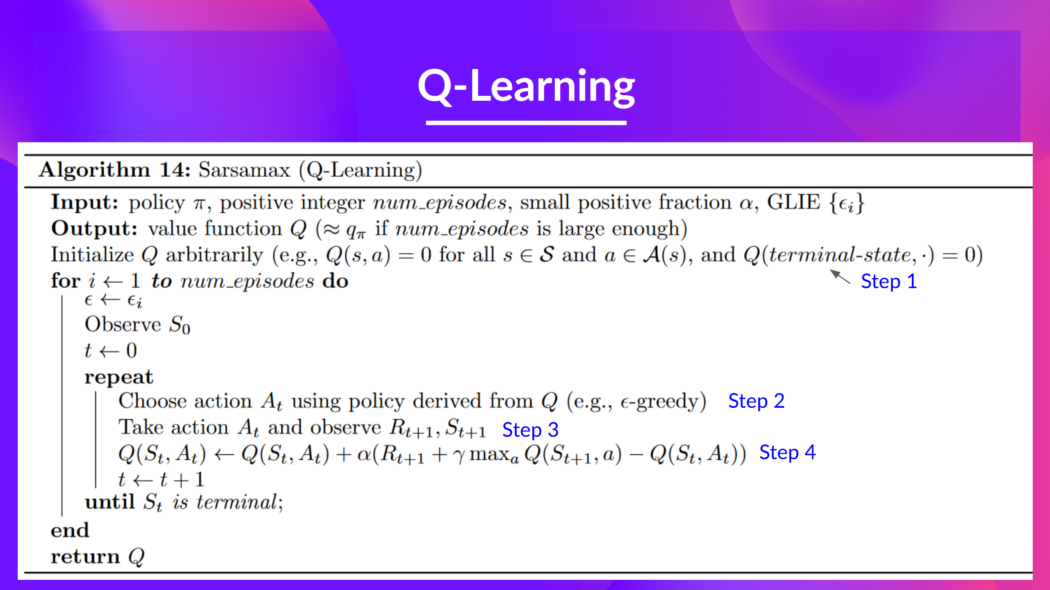

This is the Q-Learning pseudocode; let’s study each part and see how it works with a simple example before implementing it. Don’t be intimidated by it, it’s simpler than it looks! We’ll go over each step.

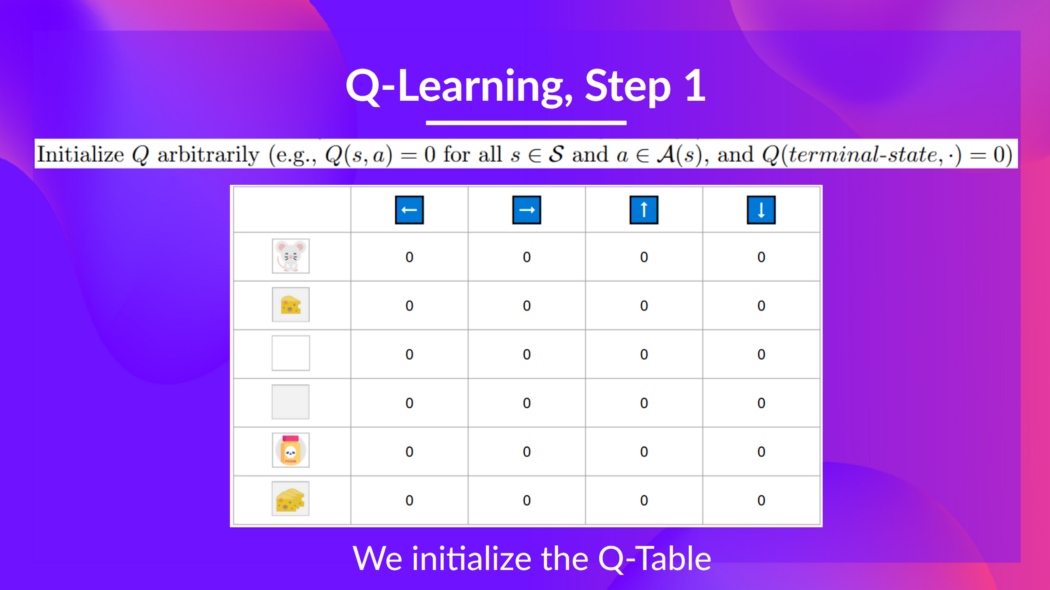

Step 1: We initialize the Q-Table

We need to initialize the Q-Table for each state-action pair. Most of the time, we initialize with values of 0.

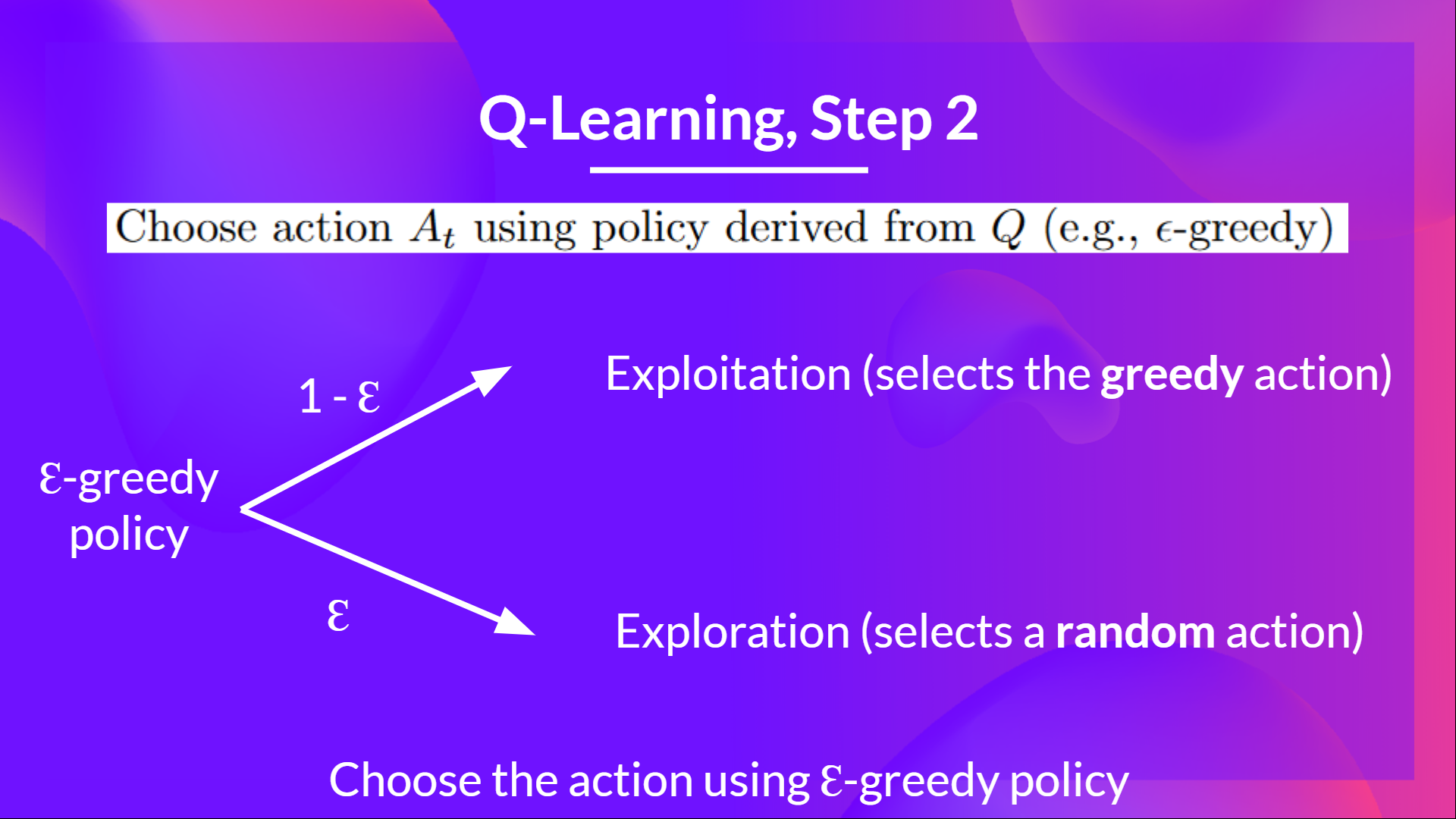

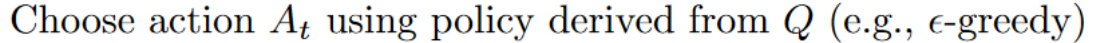

Step 2: Choose an action using the Epsilon-Greedy Strategy

The Epsilon-Greedy Strategy is a policy that handles the exploration/exploitation trade-off.

The idea is that we define an initial epsilon ɛ = 1.0:

- With probability 1 - ɛ: we do exploitation (that is, our agent selects the action with the highest state-action pair value).

- With probability ɛ: we do exploration (trying a random action).

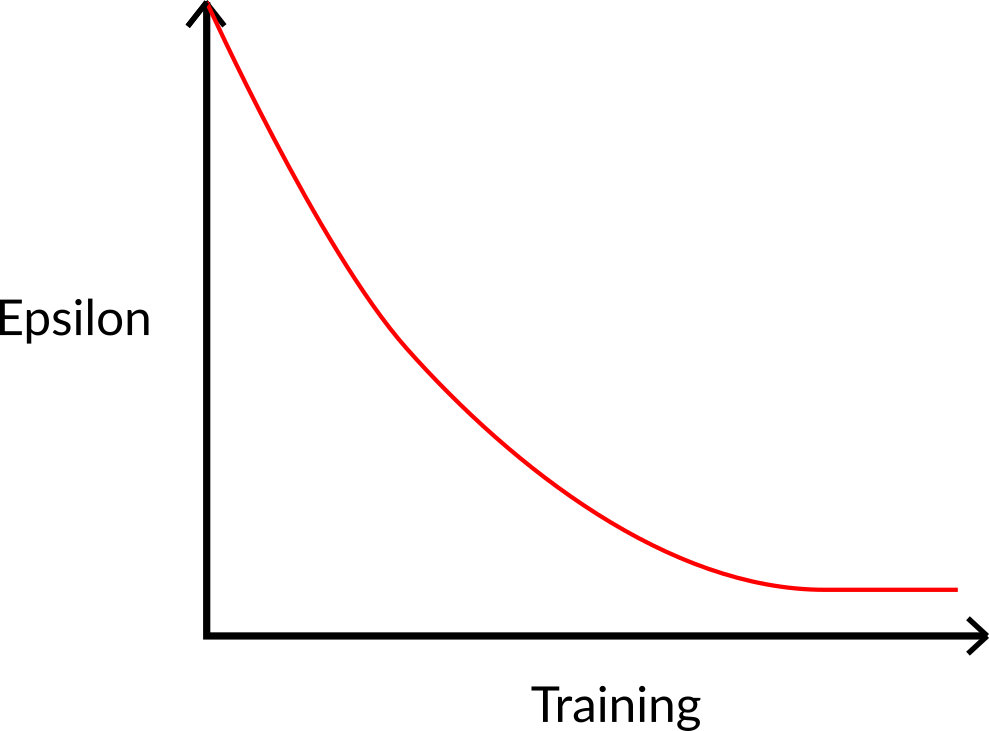

At the beginning of the training, the probability of doing exploration will be huge since ɛ is very high, so most of the time, we’ll explore. But as the training goes on, and consequently our Q-Table gets better and better in its estimations, we progressively reduce the epsilon value since we will need less and less exploration and more exploitation.
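
The strategy can be sketched in a few lines of Python. This is only an illustration: the multiplicative decay schedule, the constants `decay_rate` and `min_epsilon`, and the function names are assumptions, not something the text prescribes.

```python
import random


def epsilon_greedy_action(q_values, epsilon, rng=random):
    """Explore with probability epsilon, otherwise exploit."""
    if rng.random() < epsilon:
        # Exploration: try a random action.
        return rng.randrange(len(q_values))
    # Exploitation: take the action with the highest value.
    return max(range(len(q_values)), key=lambda a: q_values[a])


def decay_epsilon(epsilon, decay_rate=0.99, min_epsilon=0.05):
    """Shrink epsilon a little, never below a floor (assumed schedule)."""
    return max(min_epsilon, epsilon * decay_rate)


epsilon = 1.0
q_values = [0.0, 0.2, -0.1, 0.0]  # made-up values for one state
action = epsilon_greedy_action(q_values, epsilon)  # random while epsilon is 1.0
epsilon = decay_epsilon(epsilon)  # slightly smaller for the next step
```

With `epsilon=0.0` the function always returns the greedy action, which is the behavior we converge toward as epsilon shrinks.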

Step 3: Perform action $A_t$, get reward $R_{t+1}$ and next state $S_{t+1}$

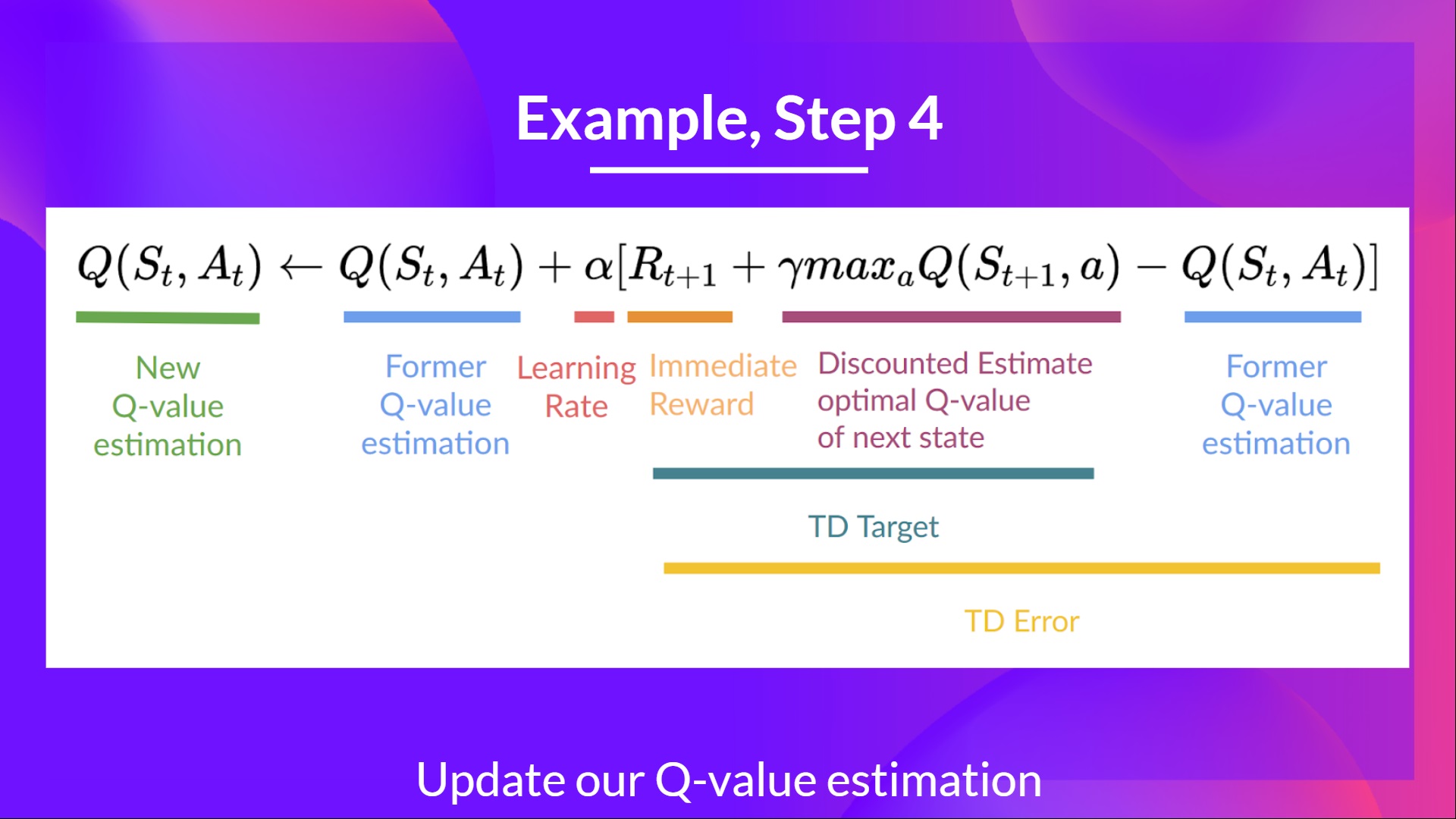

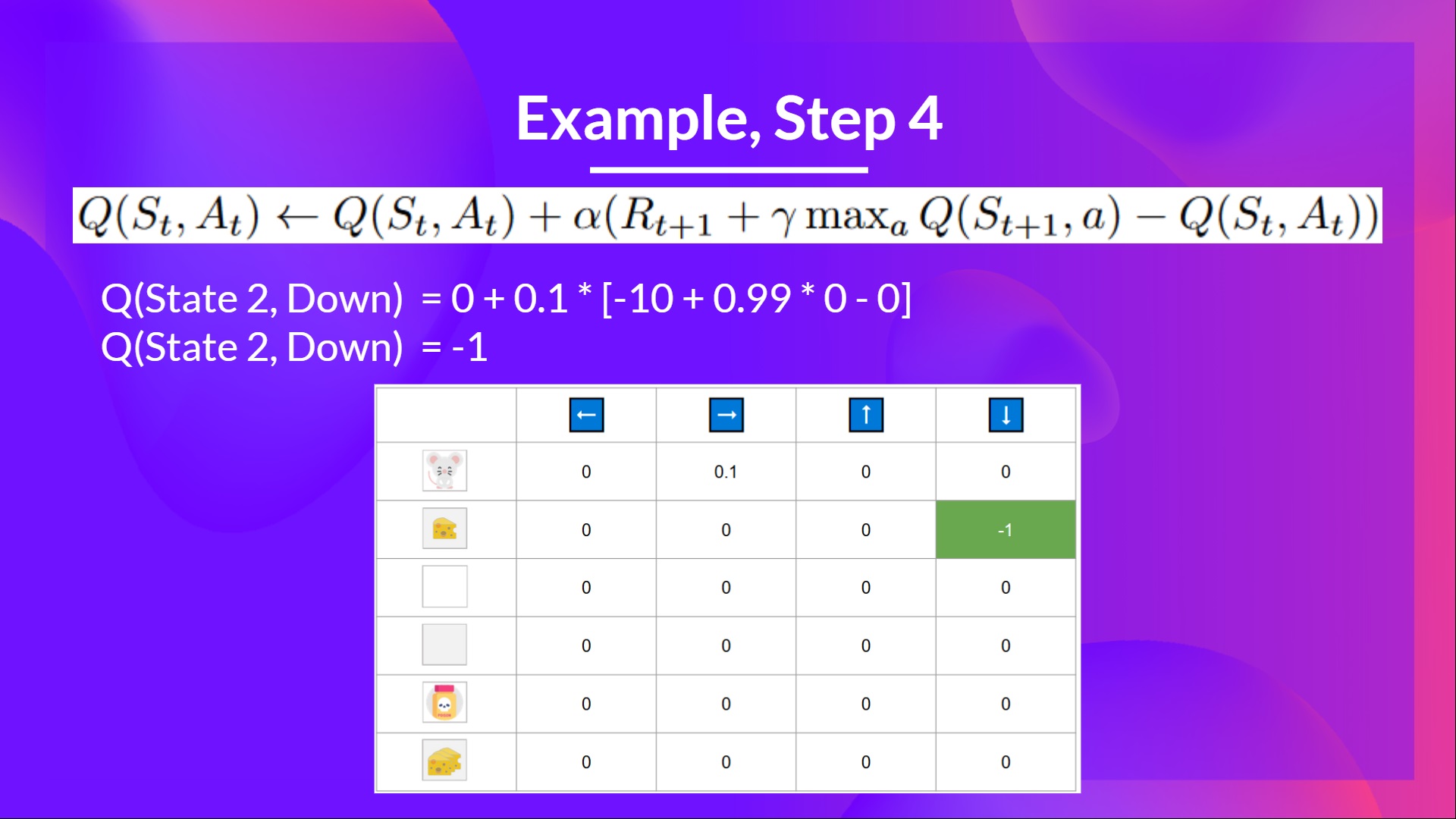

Step 4: Update $Q(S_t, A_t)$

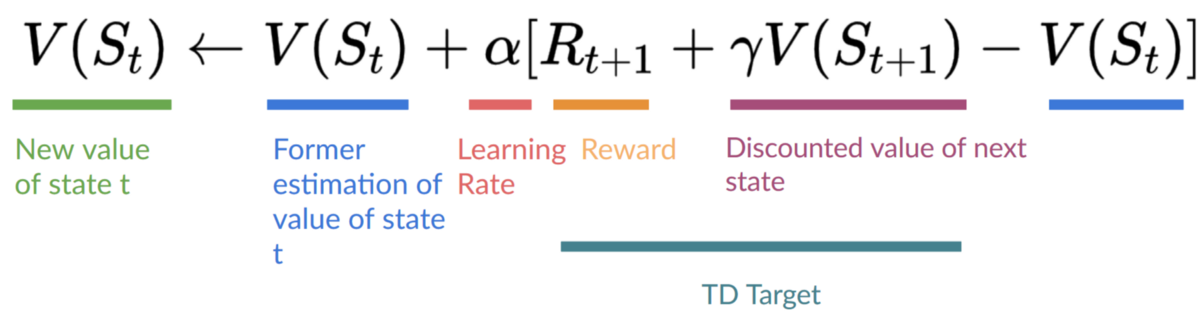

Remember that in TD Learning, we update our policy or value function (depending on the RL method we choose) after one step of the interaction.

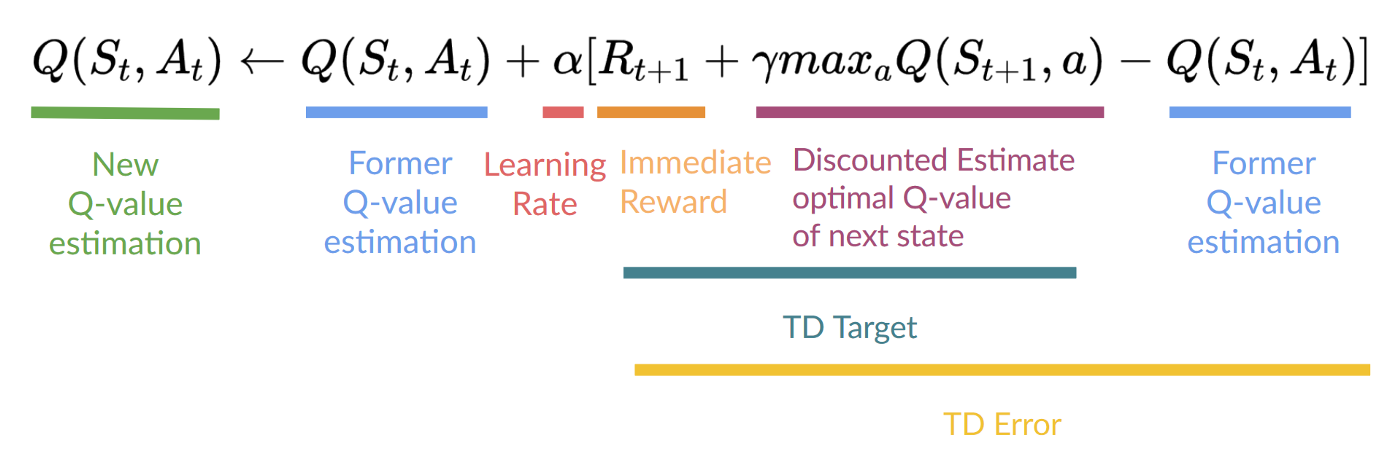

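To produce our TD target, we use the immediate reward $R_{t+1}$ plus the discounted value of the next state’s best state-action pair (we call that bootstrapping).

Therefore, our update formula goes like this:

$$Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha \left[ R_{t+1} + \gamma \max_{a} Q(S_{t+1}, a) - Q(S_t, A_t) \right]$$

where $\alpha$ is the learning rate and $\gamma$ is the discount rate.

This means that to update our $Q(S_t, A_t)$:

- We need $S_t$, $A_t$, $R_{t+1}$, $S_{t+1}$.

- To update our Q-value at a given state-action pair, we use the TD target.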

How do we form the TD target?

- We obtain the reward $R_{t+1}$ after taking the action $A_t$.

- To get the best next-state-action pair value, we use a greedy policy to select the next best action. Note that this is not an epsilon-greedy policy: it always takes the action with the highest state-action value.
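
As a sketch, one update step (old value plus the learning rate times the difference between the TD target and the old value) can be written as a small function. The name `q_learning_update` and the `terminal` flag are assumptions for illustration; treating the value of a terminal next state as 0 is a common convention rather than something stated above.

```python
def q_learning_update(q_table, state, action, reward, next_state,
                      learning_rate, gamma, terminal=False):
    """Apply one Q-Learning update in place and return the new Q-value."""
    # Greedy bootstrap: value of the best action in the next state
    # (assumed to be 0 if the next state ends the episode).
    best_next = 0.0 if terminal else max(q_table[next_state])
    td_target = reward + gamma * best_next
    td_error = td_target - q_table[state][action]
    q_table[state][action] += learning_rate * td_error
    return q_table[state][action]


# Made-up table: 2 states, 2 actions.
q_table = [[0.0, 0.0], [0.5, 2.0]]
new_value = q_learning_update(q_table, state=0, action=1, reward=1.0,
                              next_state=1, learning_rate=0.1, gamma=0.99)
# TD target = 1.0 + 0.99 * 2.0 = 2.98, so the new value is 0.1 * 2.98.
```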

Then, when the update of this Q-value is done, we start in a new state and select our action using the epsilon-greedy policy again.

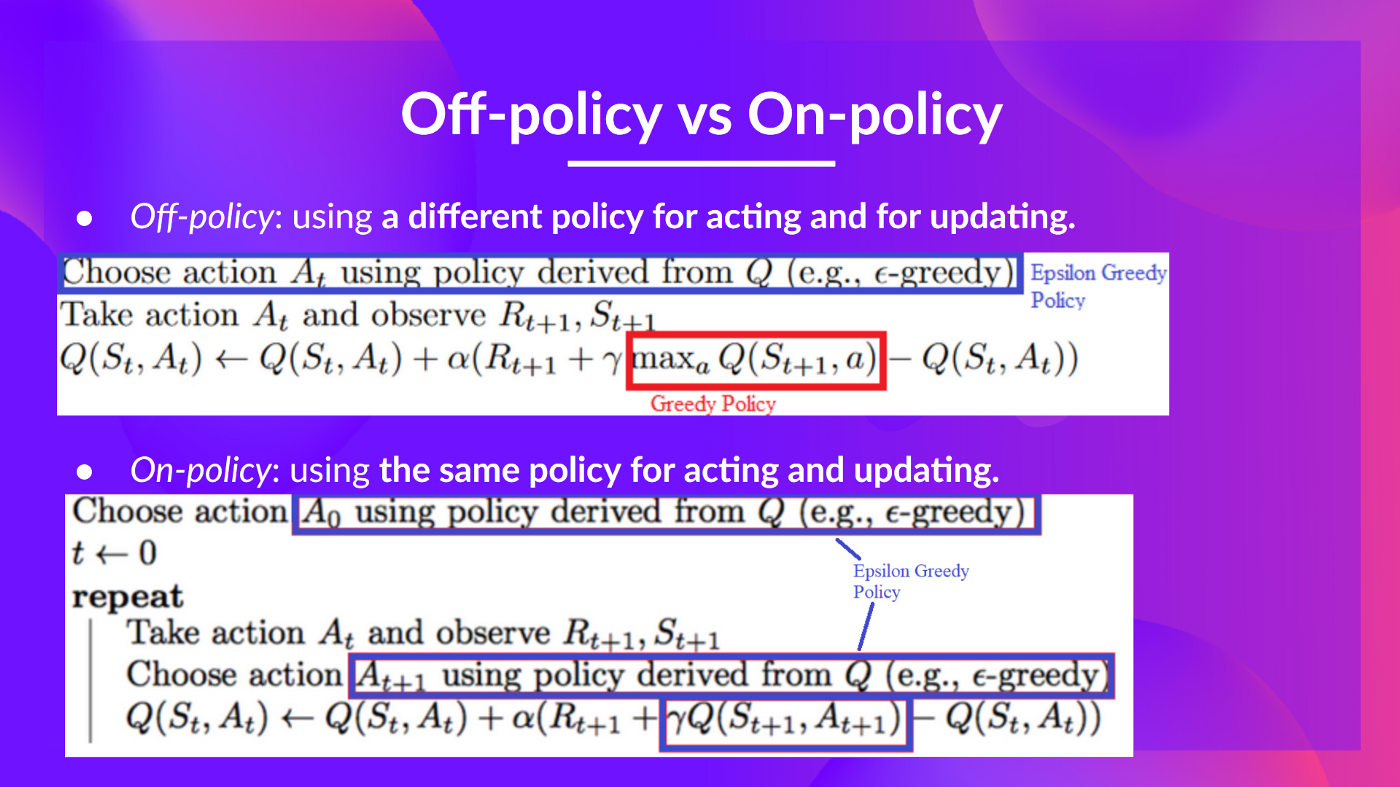

This is why we say that Q-Learning is an off-policy algorithm.

Off-policy vs On-policy

The difference is subtle:

- Off-policy: using a different policy for acting and updating.

For instance, with Q-Learning, the epsilon-greedy policy (acting policy) is different from the greedy policy that is used to select the best next-state action value to update our Q-value (updating policy).

In other words, the policy we use to act during training is different from the policy we use to compute the update:

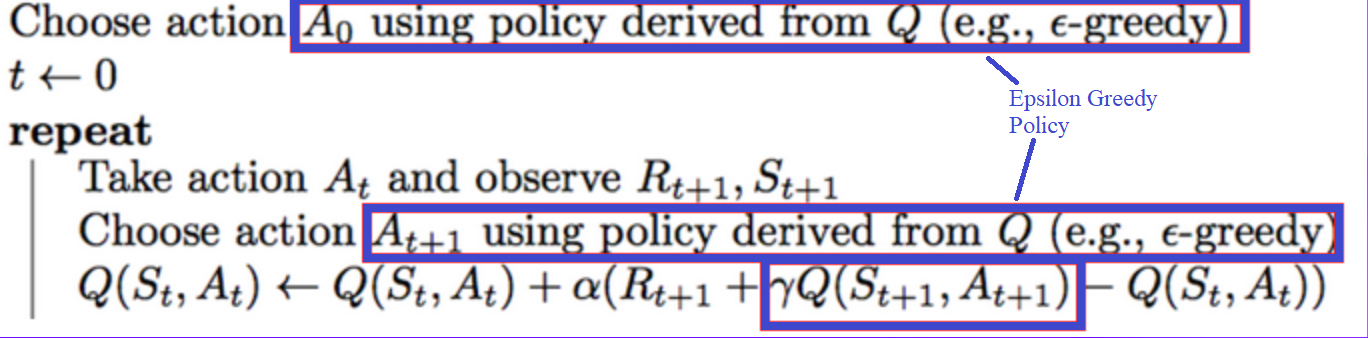

- On-policy: using the same policy for acting and updating.

For instance, with Sarsa, another value-based algorithm, the epsilon-greedy policy selects the next-state action pair, not a greedy policy.
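
The difference shows up only in how the TD target is built. The sketch below contrasts the two targets; the function names and numbers are made up for illustration.

```python
def q_learning_target(reward, next_q_values, gamma):
    """Off-policy: bootstrap from the greedy (max) next action value."""
    return reward + gamma * max(next_q_values)


def sarsa_target(reward, next_q_values, next_action, gamma):
    """On-policy: bootstrap from the action the acting policy actually chose."""
    return reward + gamma * next_q_values[next_action]


next_q_values = [0.0, 2.0, 1.0]
# Suppose the epsilon-greedy policy explored and chose action 2 next.
print(q_learning_target(1.0, next_q_values, gamma=0.5))            # 2.0
print(sarsa_target(1.0, next_q_values, next_action=2, gamma=0.5))  # 1.5
```

When the acting policy happens to pick the greedy action, the two targets coincide; they differ only on exploratory steps.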

A Q-Learning example

To better understand Q-Learning, let’s take a simple example:

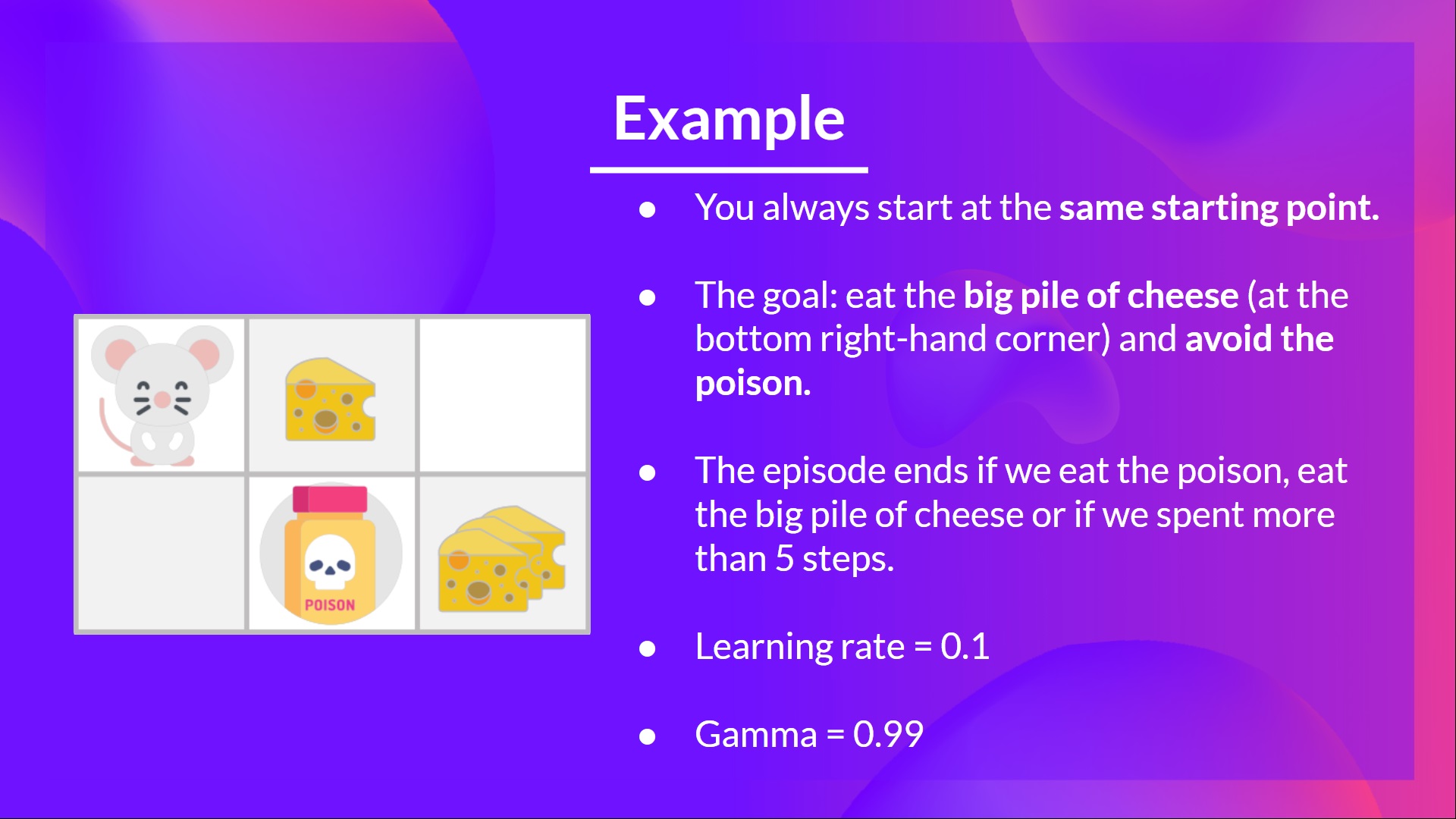

- You’re a mouse in this tiny maze. You always start at the same starting point.

- The goal is to eat the big pile of cheese at the bottom right-hand corner and to avoid the poison. After all, who doesn’t like cheese?

- The episode ends if we eat the poison, eat the big pile of cheese, or spend more than five steps.

- The learning rate is 0.1

- The gamma (discount rate) is 0.99

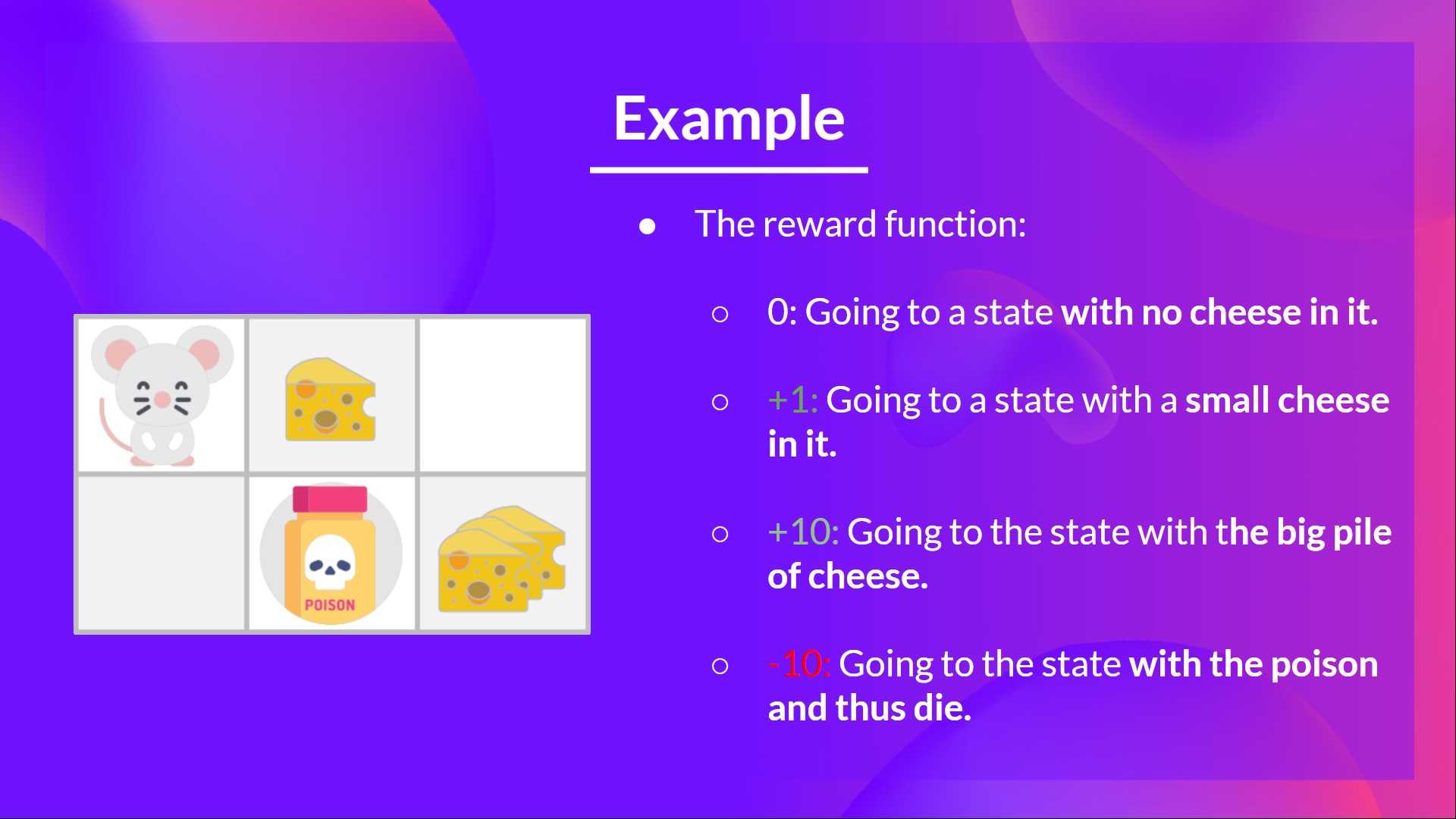

The reward function goes like this:

- +0: Going to a state with no cheese in it.

- +1: Going to a state with a small cheese in it.

- +10: Going to the state with the large pile of cheese.

- -10: Going to the state with the poison and thus dying.

- +0: If we spend more than five steps.

To train our agent to have an optimal policy (so a policy that goes right, right, down), we’ll use the Q-Learning algorithm.

Step 1: We initialize the Q-Table

So, for now, our Q-Table is useless; we need to train our Q-function using the Q-Learning algorithm.

Let’s do it for two training timesteps:

Training timestep 1:

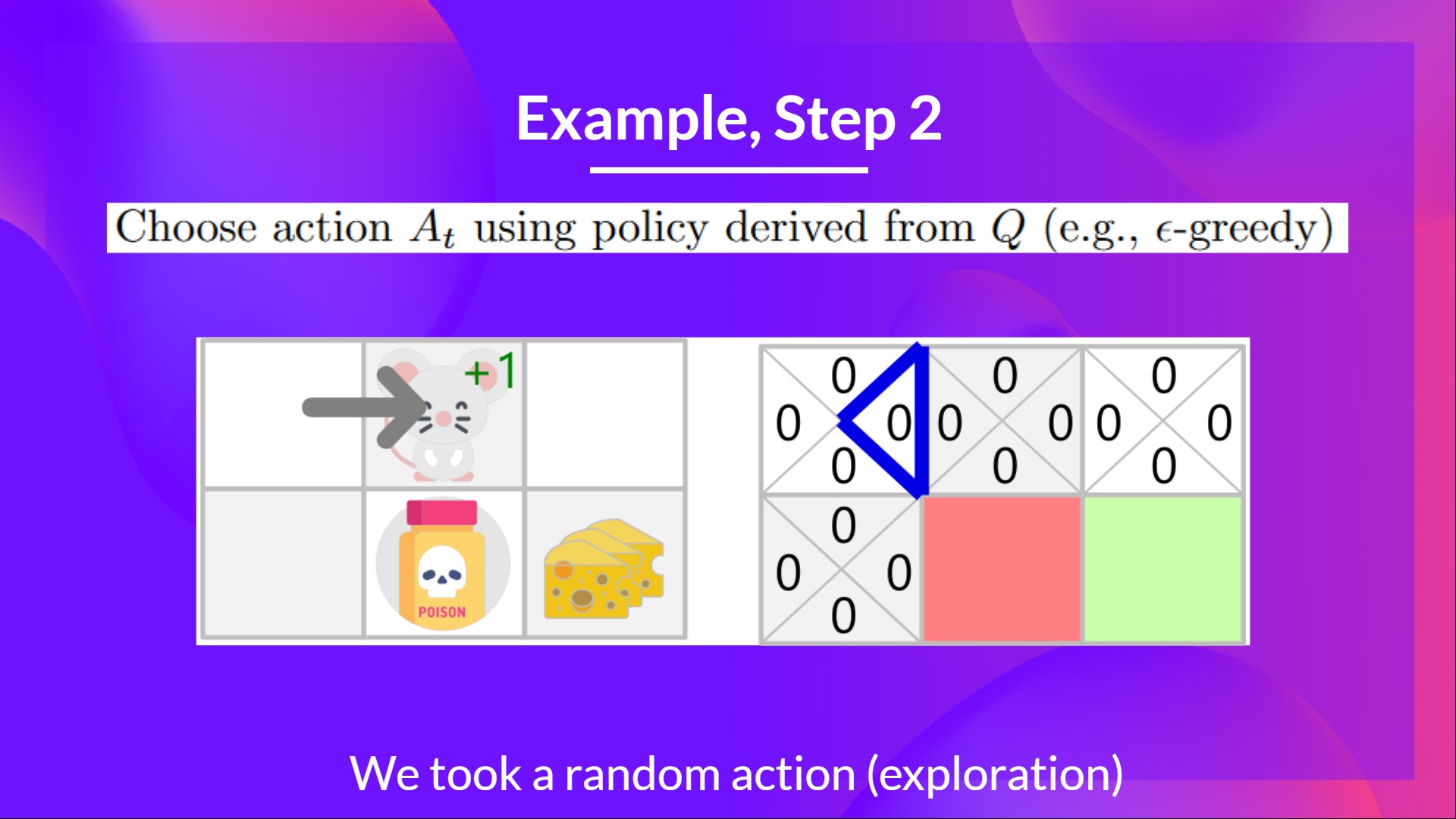

Step 2: Select motion using Epsilon Greedy Strategy

Because epsilon is big = 1.0, I take a random motion, on this case, I’m going right.

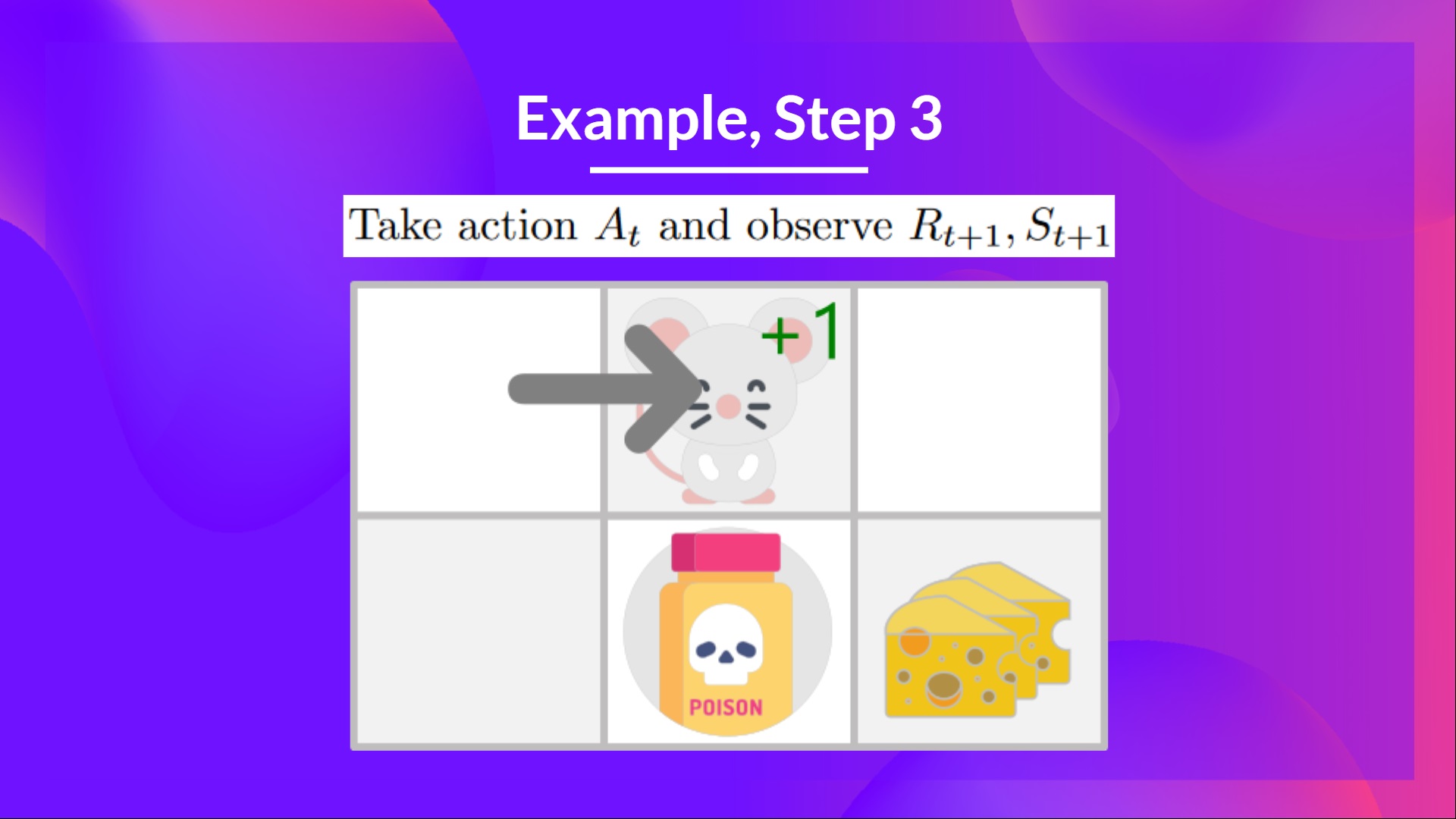

Step 3: Perform motion At, gets Rt+1 and St+1

By going right, I’ve got a small cheese, so , and I’m in a brand new state.

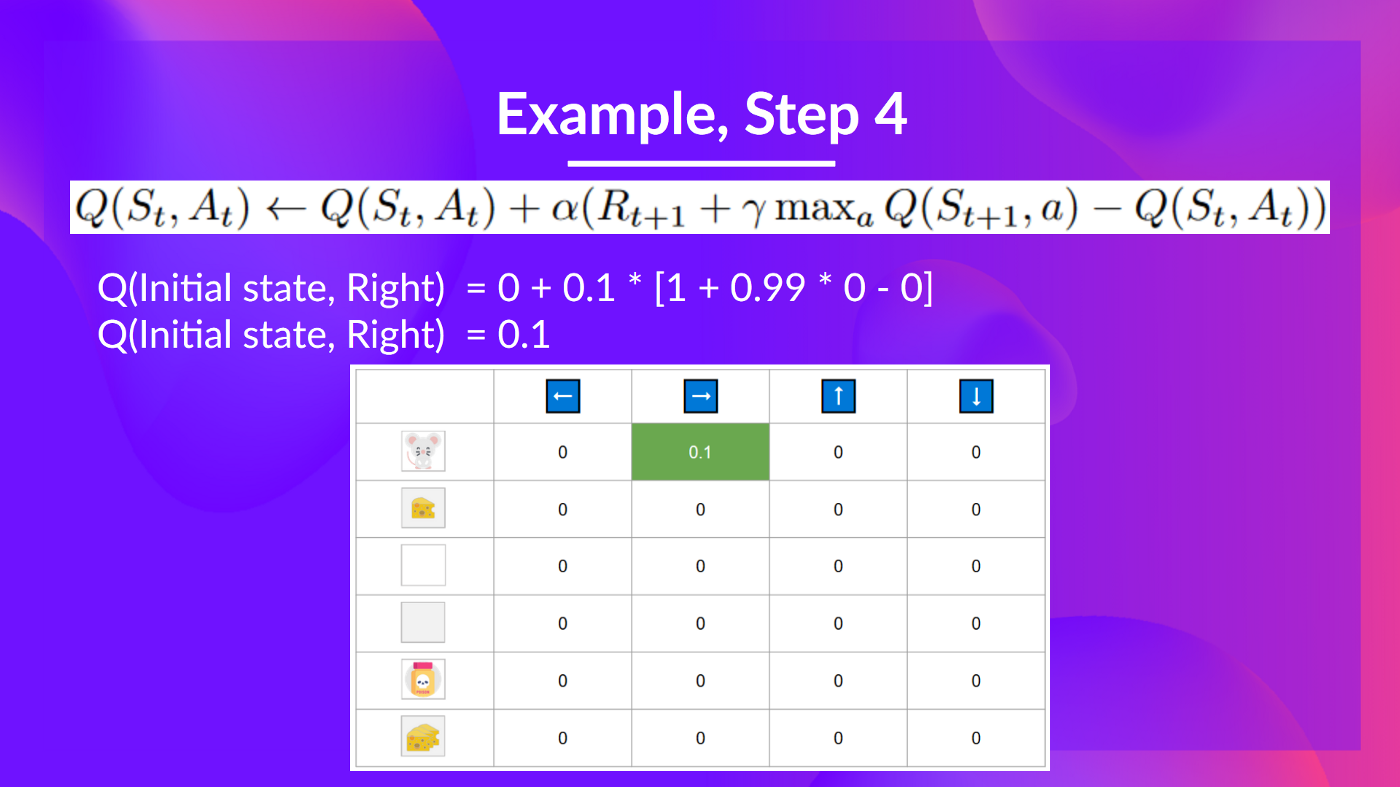

Step 4: Update $Q(S_t, A_t)$

We can now update $Q(S_t, A_t)$ using our formula.
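
As a worked sketch, plugging this timestep’s numbers into the Q-Learning update (old value plus the learning rate times the difference between the TD target and the old value) gives the following. It assumes, as stated above, a learning rate of 0.1, a gamma of 0.99, a reward of +1 for the small cheese, and a Q-table that still holds only zeros:

$$Q(S_t, A_t) \leftarrow 0 + 0.1 \times \left[ 1 + 0.99 \times 0 - 0 \right] = 0.1$$

So the value of going right from the initial state moves from 0 to 0.1.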

Training timestep 2:

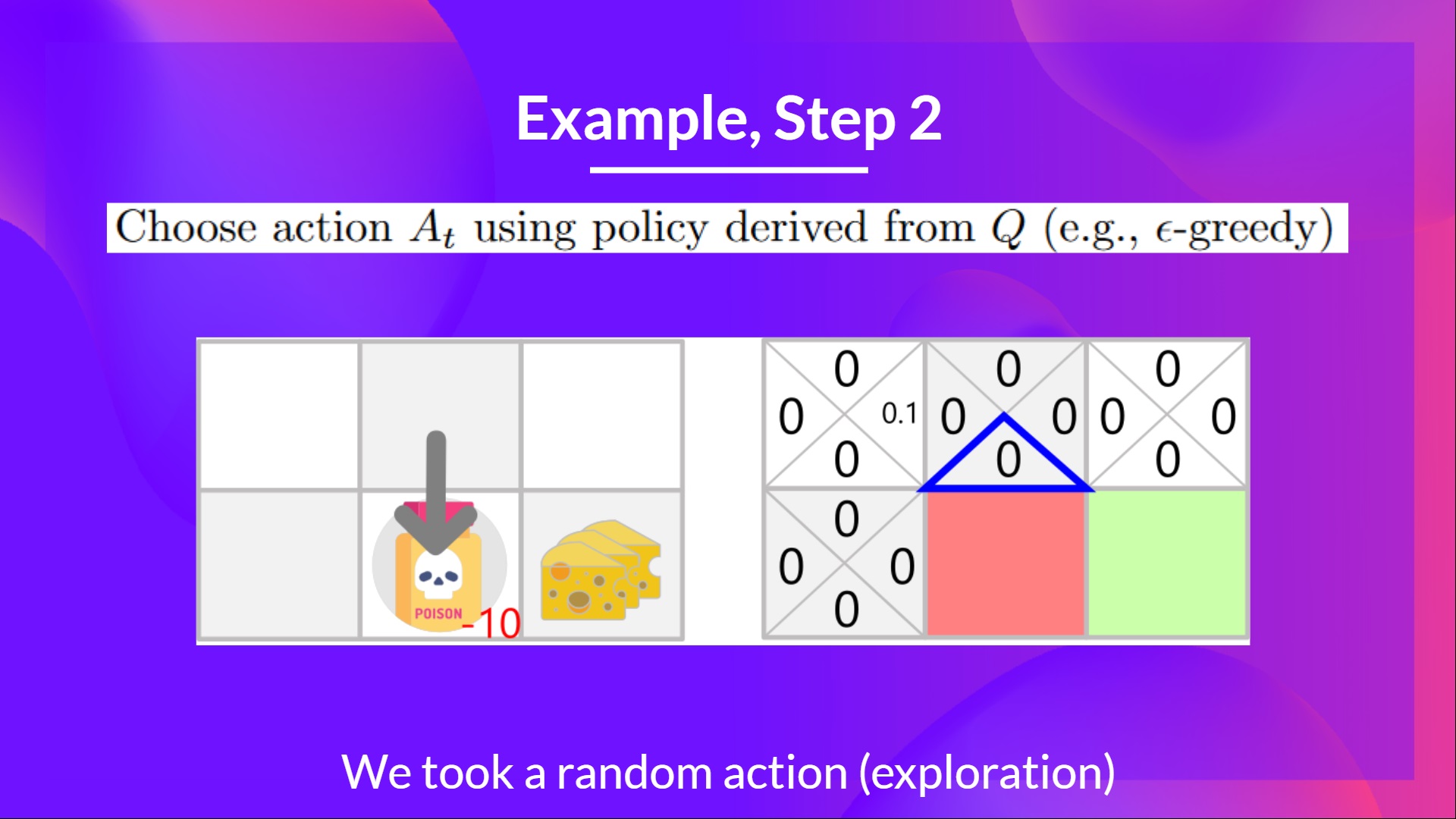

Step 2: Choose an action using the Epsilon-Greedy Strategy

I take a random action again, since epsilon is still big (0.99). We decay it a little bit because, as the training progresses, we want less and less exploration.

I took the action down. Not a good action, since it leads me to the poison.

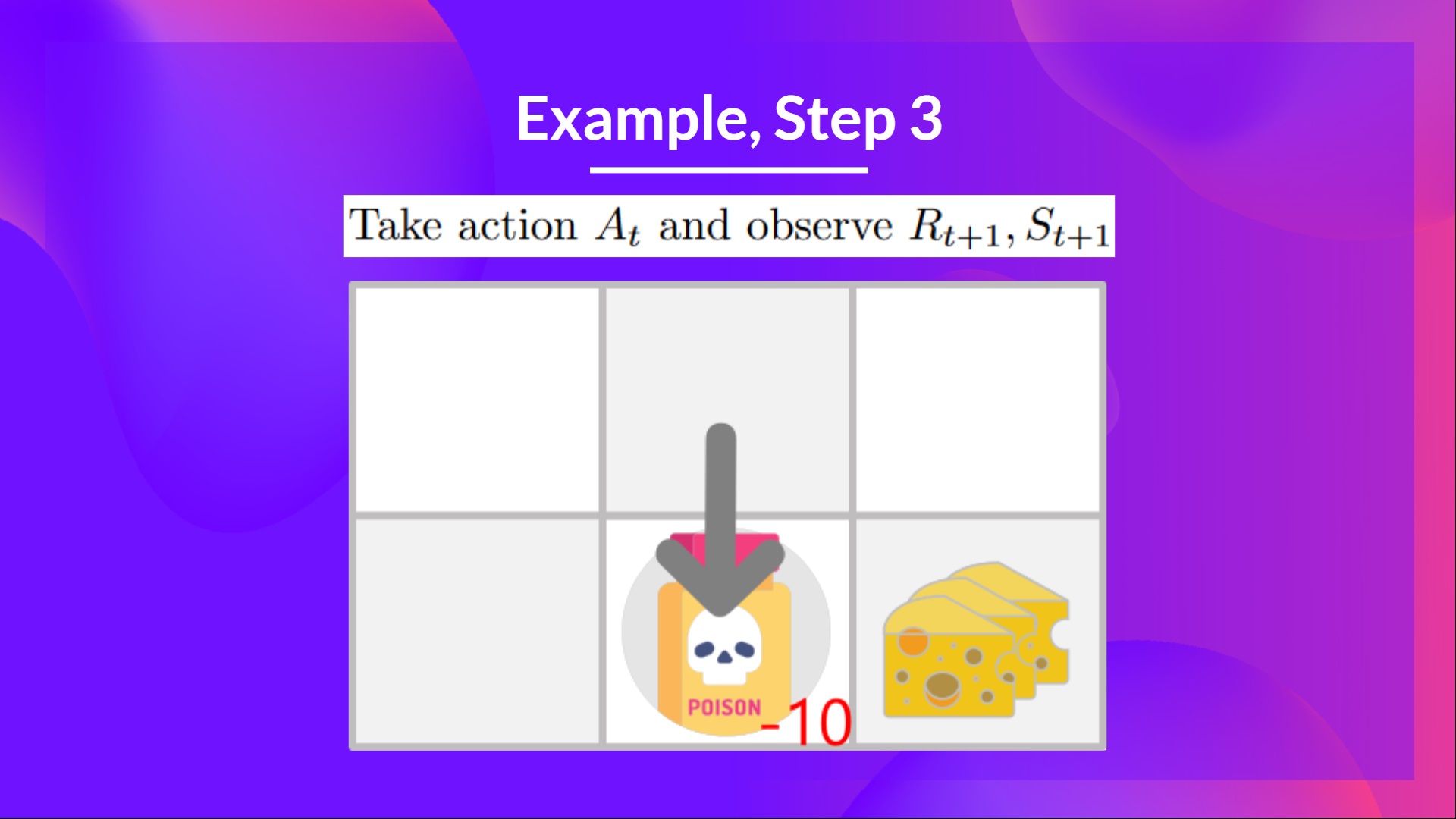

Step 3: Perform action $A_t$, get $R_{t+1}$ and $S_{t+1}$

Because I go to the poison state, I get $R_{t+1} = -10$, and I die.

Step 4: Update $Q(S_t, A_t)$
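
As a worked sketch of this second update, using the same rule (old value plus the learning rate times the difference between the TD target and the old value), a learning rate of 0.1, a gamma of 0.99, and the poison reward of -10. The poison state ends the episode and its Q-values are still 0, so the bootstrap term contributes nothing:

$$Q(S_t, A_t) \leftarrow 0 + 0.1 \times \left[ -10 + 0.99 \times 0 - 0 \right] = -1$$

The value of going down from this state is now -1, so a greedy choice will avoid it.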

Because we’re dead, we start a new episode. But what we see here is that, with two exploration steps, my agent became smarter.

As we continue exploring and exploiting the environment and updating Q-values using the TD target, the Q-Table will give us better and better approximations. And thus, at the end of the training, we’ll get an estimate of the optimal Q-Function.
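
Putting the four steps together, here is a minimal end-to-end sketch in plain Python. The environment is a hypothetical, deterministic 2x2 grid in the Frozen Lake style (it is not the Gym `FrozenLake-v1` environment), and the episode count, step limit, and epsilon decay schedule are assumptions chosen for illustration.

```python
import random

# Hypothetical 2x2 map: S start, F frozen, H hole, G goal.
GRID = ["SF",
        "HG"]
N_ROWS, N_COLS = len(GRID), len(GRID[0])
MOVES = [(0, -1), (1, 0), (0, 1), (-1, 0)]  # left, down, right, up


def step(state, action):
    """Deterministic transition: returns (next_state, reward, done)."""
    row, col = divmod(state, N_COLS)
    d_row, d_col = MOVES[action]
    # Moving into a wall leaves the agent where it is.
    row = min(max(row + d_row, 0), N_ROWS - 1)
    col = min(max(col + d_col, 0), N_COLS - 1)
    tile = GRID[row][col]
    reward = 1.0 if tile == "G" else 0.0
    return row * N_COLS + col, reward, tile in ("H", "G")


def train(n_episodes=500, max_steps=10, learning_rate=0.1, gamma=0.99, seed=0):
    rng = random.Random(seed)
    # Step 1: initialize the Q-table with zeros.
    q_table = [[0.0] * len(MOVES) for _ in range(N_ROWS * N_COLS)]
    epsilon = 1.0
    for _ in range(n_episodes):
        state = 0
        for _ in range(max_steps):
            # Step 2: epsilon-greedy action selection.
            if rng.random() < epsilon:
                action = rng.randrange(len(MOVES))
            else:
                action = max(range(len(MOVES)), key=lambda a: q_table[state][a])
            # Step 3: act, observe the reward and the next state.
            next_state, reward, done = step(state, action)
            # Step 4: move Q(state, action) toward the TD target.
            best_next = 0.0 if done else max(q_table[next_state])
            td_target = reward + gamma * best_next
            q_table[state][action] += learning_rate * (td_target - q_table[state][action])
            state = next_state
            if done:
                break
        epsilon = max(0.05, epsilon * 0.99)  # assumed decay schedule
    return q_table


q_table = train()
greedy = [max(range(len(MOVES)), key=lambda a: q_table[s][a])
          for s in range(N_ROWS * N_COLS)]
print(greedy[0], greedy[1])  # greedy actions in S and in the tile to its right
```

After training, the greedy policy should go right from S (action 2) and then down to G (action 1), while the value of stepping from S into the hole stays at 0.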

Now that we’ve studied the theory of Q-Learning, let’s implement it from scratch: a Q-Learning agent that we will train in two environments:

- Frozen-Lake-v1 ❄️ (non-slippery version): where our agent will need to go from the starting state (S) to the goal state (G) by walking only on frozen tiles (F) and avoiding holes (H).

- An autonomous taxi 🚕: where the agent will need to learn to navigate a city to transport its passengers from point A to point B.

Start the tutorial here 👉 https://colab.research.google.com/github/huggingface/deep-rl-class/blob/important/unit2/unit2.ipynb

The leaderboard 👉 https://huggingface.co/spaces/chrisjay/Deep-Reinforcement-Learning-Leaderboard

Congrats on finishing this chapter! There was a lot of information. And congrats on finishing the tutorials. You’ve just implemented your first RL agent from scratch and shared it on the Hub 🥳.

Implementing from scratch when you study a new architecture is important to understand how it works.

It’s normal if you still feel confused by all these elements. This was the same for me and for everyone who studied RL.

Take time to really grasp the material before continuing.

And since the best way to learn and avoid the illusion of competence is to test yourself, we wrote a quiz to help you find where you need to reinforce your study.

Check your knowledge here 👉 https://github.com/huggingface/deep-rl-class/blob/important/unit2/quiz2.md

It’s essential to master these elements and have a solid foundation before entering the fun part.

Don’t hesitate to modify the implementation, try ways to improve it, and change environments; the best way to learn is to try things on your own!

We published additional readings in the syllabus if you want to go deeper 👉 https://github.com/huggingface/deep-rl-class/blob/important/unit2/README.md

In the next unit, we’re going to learn about Deep-Q-Learning.

And don’t forget to share with your friends who want to learn 🤗 !

Finally, we want to improve and update the course iteratively with your feedback. If you have some, please fill out this form 👉 https://forms.gle/3HgA7bEHwAmmLfwh9