⚠️ An updated version of this article is available here 👉 https://huggingface.co/deep-rl-course/unit1/introduction

This article is part of the Deep Reinforcement Learning Class, a free course from beginner to expert. Check the syllabus here.

In the last Unit, we learned about Advantage Actor Critic (A2C), a hybrid architecture combining value-based and policy-based methods that helps stabilize training by reducing the variance with:

- An Actor that controls how our agent behaves (policy-based method).

- A Critic that measures how good the action taken is (value-based method).

Today we’ll learn about Proximal Policy Optimization (PPO), an architecture that improves our agent’s training stability by avoiding too large policy updates. To do that, we use a ratio that indicates the difference between our current and old policy and clip this ratio to a specific range $[1 - \epsilon, 1 + \epsilon]$.

Doing this ensures that our policy update will not be too large and that the training is more stable.

Then, after the theory, we’ll code a PPO architecture from scratch using PyTorch and bulletproof our implementation with CartPole-v1 and LunarLander-v2.

Sounds exciting? Let’s start!

The intuition behind PPO

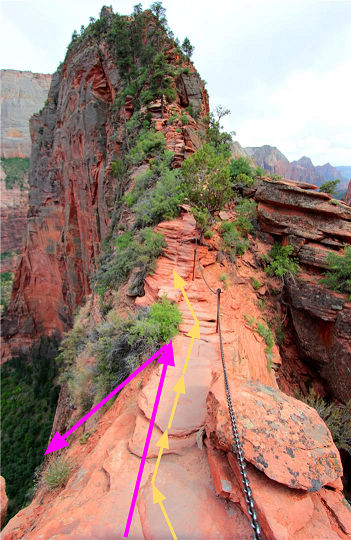

The idea with Proximal Policy Optimization (PPO) is that we want to improve the training stability of the policy by limiting the change we make to the policy at each training epoch: we want to avoid having too large policy updates.

For two reasons:

- We know empirically that smaller policy updates during training are more likely to converge to an optimal solution.

- A too big step in a policy update can result in falling “off the cliff” (getting a bad policy) and taking a long time, or even having no possibility, to recover.

So with PPO, we update the policy conservatively. To do so, we need to measure how much the current policy changed compared to the former one using a ratio calculation between the current and former policy. And we clip this ratio to a range $[1 - \epsilon, 1 + \epsilon]$, meaning that we remove the incentive for the current policy to go too far from the old one (hence the proximal policy term).

Introducing the Clipped Surrogate Objective

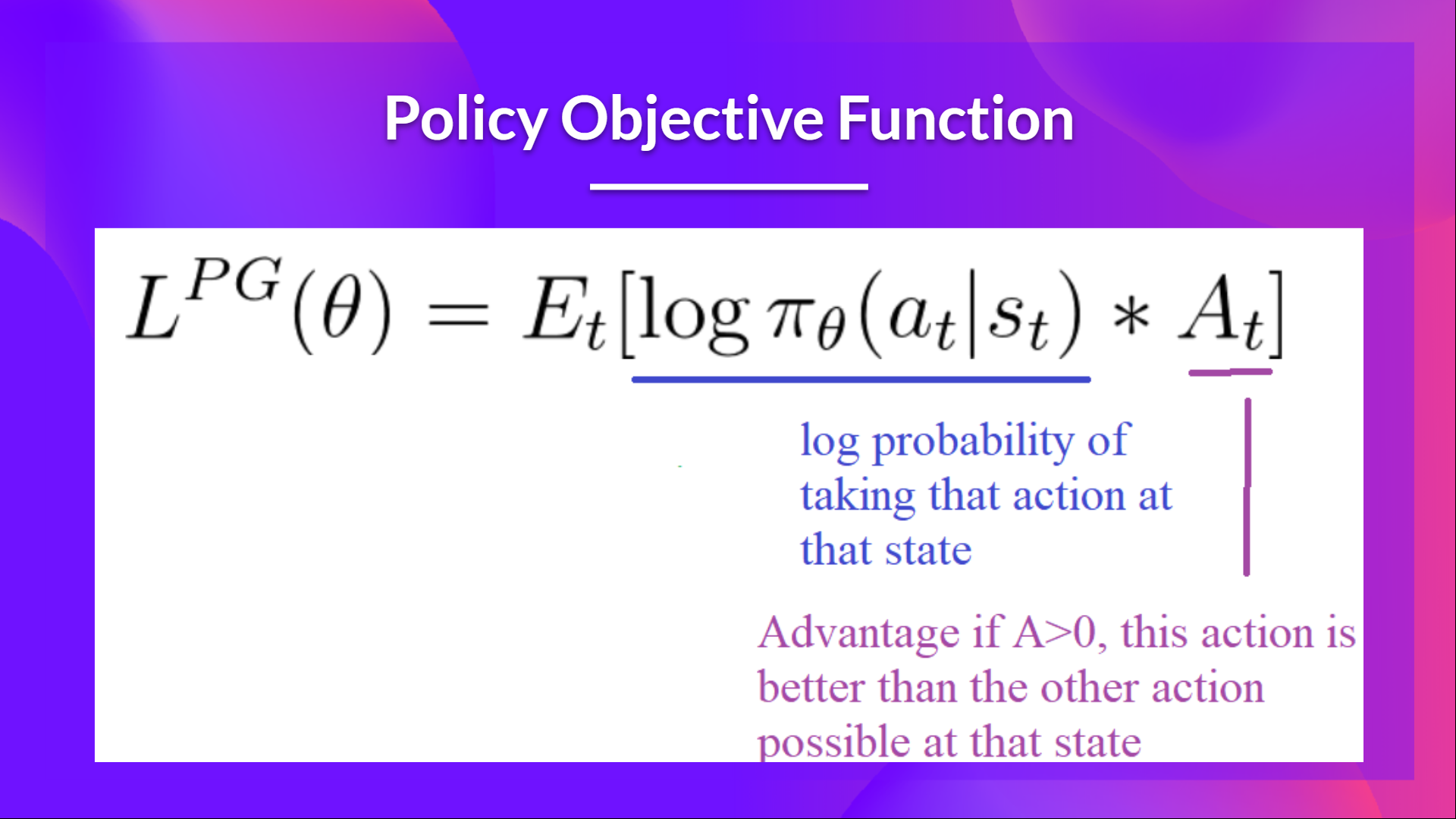

Recap: The Policy Objective Function

Let’s recall the objective we optimize in Reinforce:
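Written in the notation of the PPO paper (the symbols are assumed here: $\pi_\theta$ is the policy with parameters $\theta$, and $\hat{A}_t$ is the advantage estimate at timestep $t$), this policy objective is:

$$
L^{PG}(\theta) = \hat{\mathbb{E}}_t\left[\log \pi_\theta(a_t \mid s_t)\, \hat{A}_t\right]
$$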

The idea was that by taking a gradient ascent step on this function (equivalent to taking gradient descent of the negative of this function), we would push our agent to take actions that lead to higher rewards and avoid harmful actions.

However, the problem comes from the step size:

- Too small, the training process was too slow

- Too high, there was too much variability in the training
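The step-size trade-off can be seen on a toy problem. The sketch below is not reinforcement learning: it runs plain gradient ascent on a hypothetical one-dimensional function, $f(x) = -(x - 3)^2$, chosen only because its maximum ($x = 3$) is known. The function name and the three step sizes are illustrative assumptions.

```python
def gradient_ascent(step_size, steps=20, start=0.0):
    """Maximize f(x) = -(x - 3)^2 with plain gradient ascent and return the final x."""
    x = start
    for _ in range(steps):
        gradient = -2.0 * (x - 3.0)  # derivative of f at x
        x += step_size * gradient    # ascent: move along the gradient
    return x


too_small = gradient_ascent(step_size=0.001)  # barely moves toward x = 3
reasonable = gradient_ascent(step_size=0.1)   # ends close to x = 3
too_large = gradient_ascent(step_size=1.1)    # overshoots more at every step

for name, x in [("too small", too_small), ("reasonable", reasonable), ("too large", too_large)]:
    print(f"{name:>10}: final x = {x:.3f}, distance to optimum = {abs(x - 3.0):.3f}")
```

With the small step the iterate is still far from the optimum after 20 steps, and with the large step each update overshoots further than the previous one.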

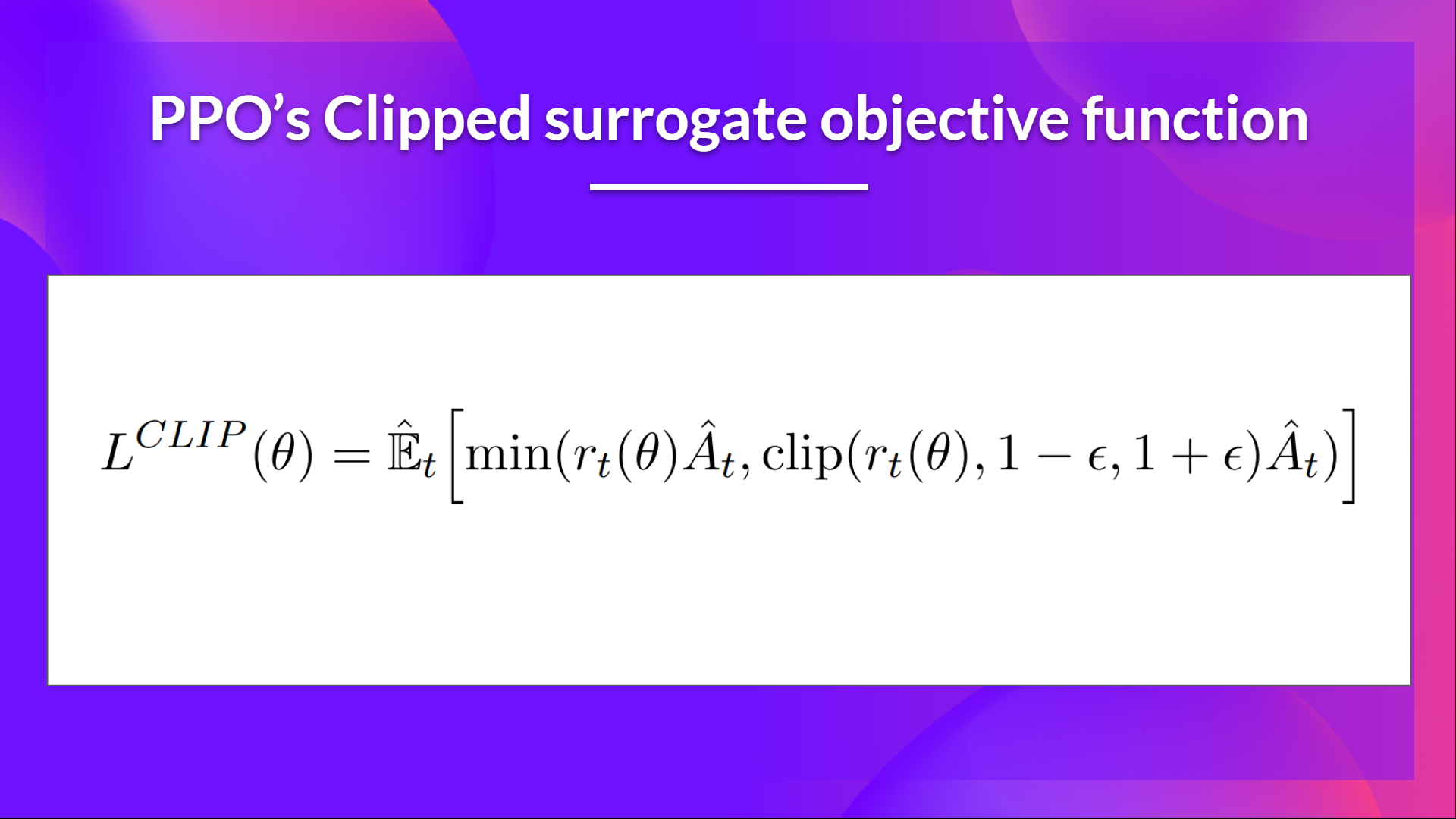

Here with PPO, the idea is to constrain our policy update with a new objective function called the Clipped surrogate objective function, which keeps the policy change in a small range using a clip.

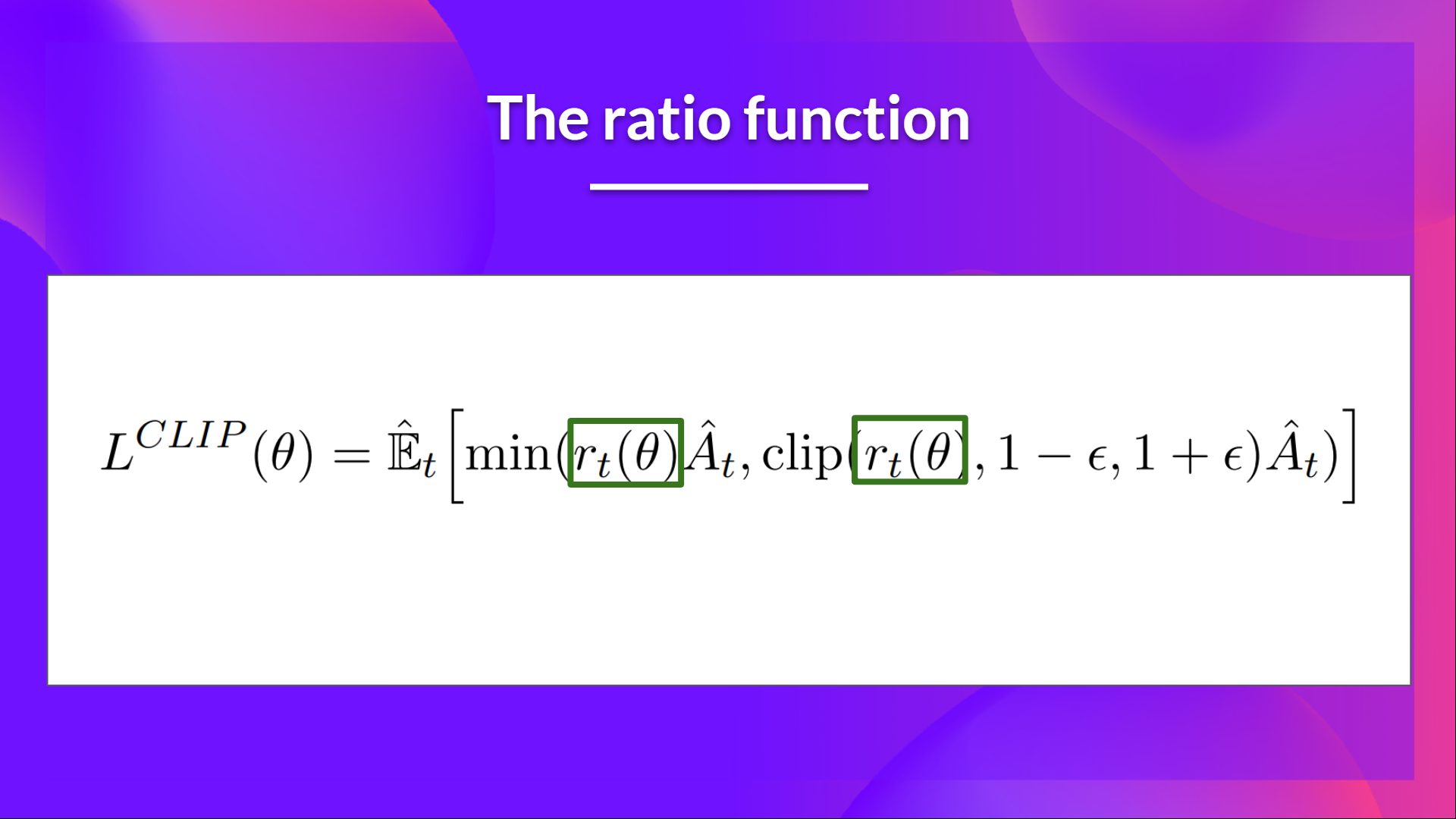

This new function is designed to avoid destructively large weight updates:
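In the PPO paper’s notation (assumed here: $r_t(\theta)$ is the probability ratio between the current and old policy, $\hat{A}_t$ the advantage estimate, and $\epsilon$ the clip-range hyperparameter), the clipped surrogate objective reads:

$$
L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right)\hat{A}_t\right)\right]
$$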

Let’s study each part to understand how it works.

The Ratio Function

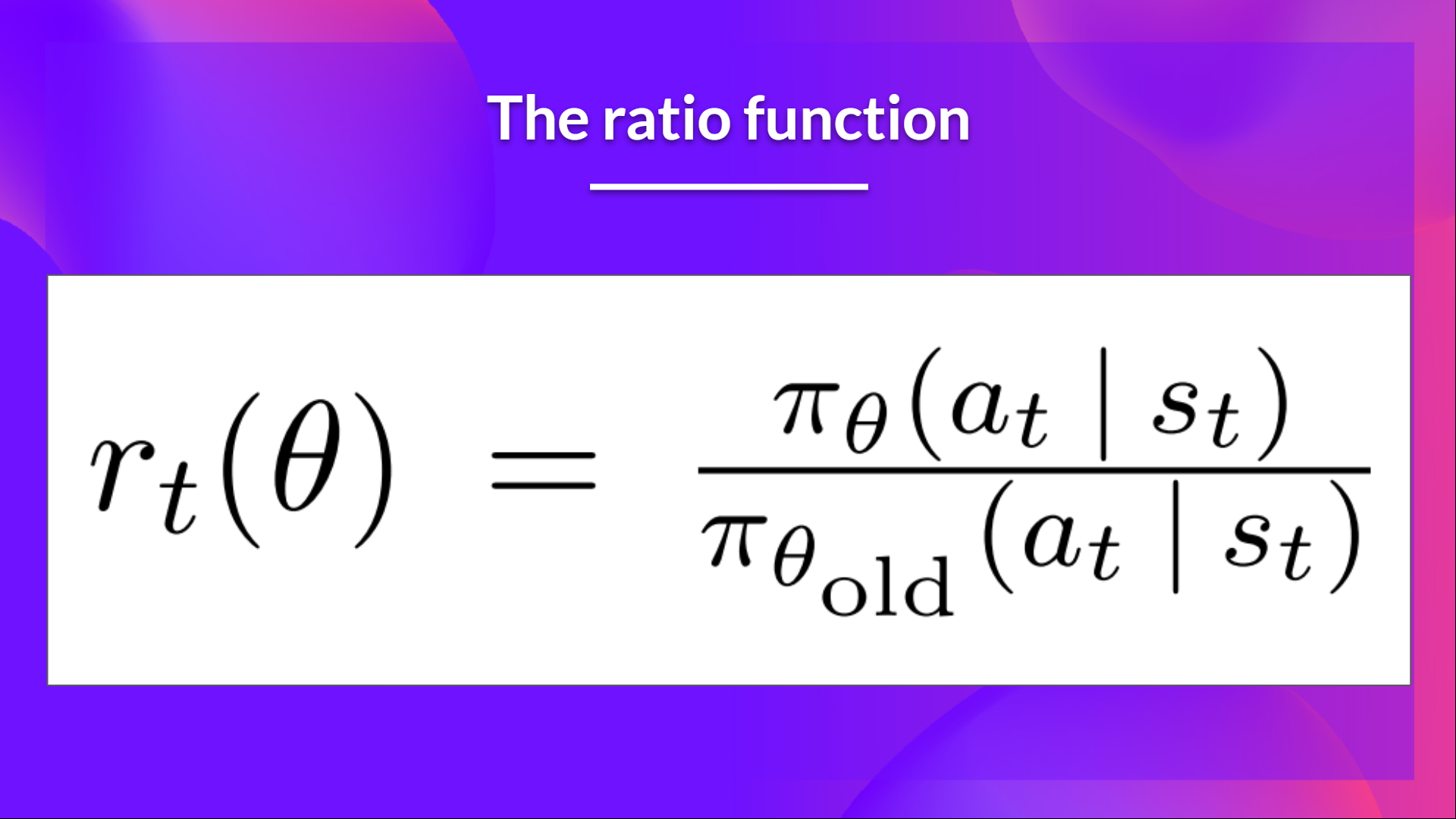

This ratio is calculated this way:
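Using the PPO paper’s symbols (assumed here: $\pi_\theta$ is the current policy and $\pi_{\theta_{old}}$ the policy before the update), the ratio can be written as:

$$
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{old}}(a_t \mid s_t)}
$$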

It’s the probability of taking action $a_t$ at state $s_t$ in the current policy divided by the same probability under the previous policy.

As we can see, $r_t(\theta)$ denotes the probability ratio between the current and old policy:

- If $r_t(\theta) > 1$, the action $a_t$ at state $s_t$ is more likely in the current policy than in the old policy.

- If $r_t(\theta)$ is between 0 and 1, the action is less likely for the current policy than for the old one.

So this probability ratio is an easy way to estimate the divergence between the old and current policy.
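As a small illustration of the ratio, here is a sketch that computes it from log-probabilities, which is a common way to do it in practice because policies usually expose log-probabilities. The function name and the probability values are hypothetical, picked only to show the three regimes.

```python
import math


def probability_ratio(new_log_prob: float, old_log_prob: float) -> float:
    """Return pi_new(a|s) / pi_old(a|s), computed from log-probabilities."""
    # exp(log p_new - log p_old) equals p_new / p_old.
    return math.exp(new_log_prob - old_log_prob)


# Hypothetical probability of the same action under the old policy.
old_prob = 0.25

more_likely = probability_ratio(math.log(0.40), math.log(old_prob))     # ratio > 1
less_likely = probability_ratio(math.log(0.10), math.log(old_prob))     # 0 < ratio < 1
unchanged = probability_ratio(math.log(old_prob), math.log(old_prob))   # ratio == 1

print(more_likely, less_likely, unchanged)
```

A ratio of exactly 1 means the two policies assign the same probability to that action in that state.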

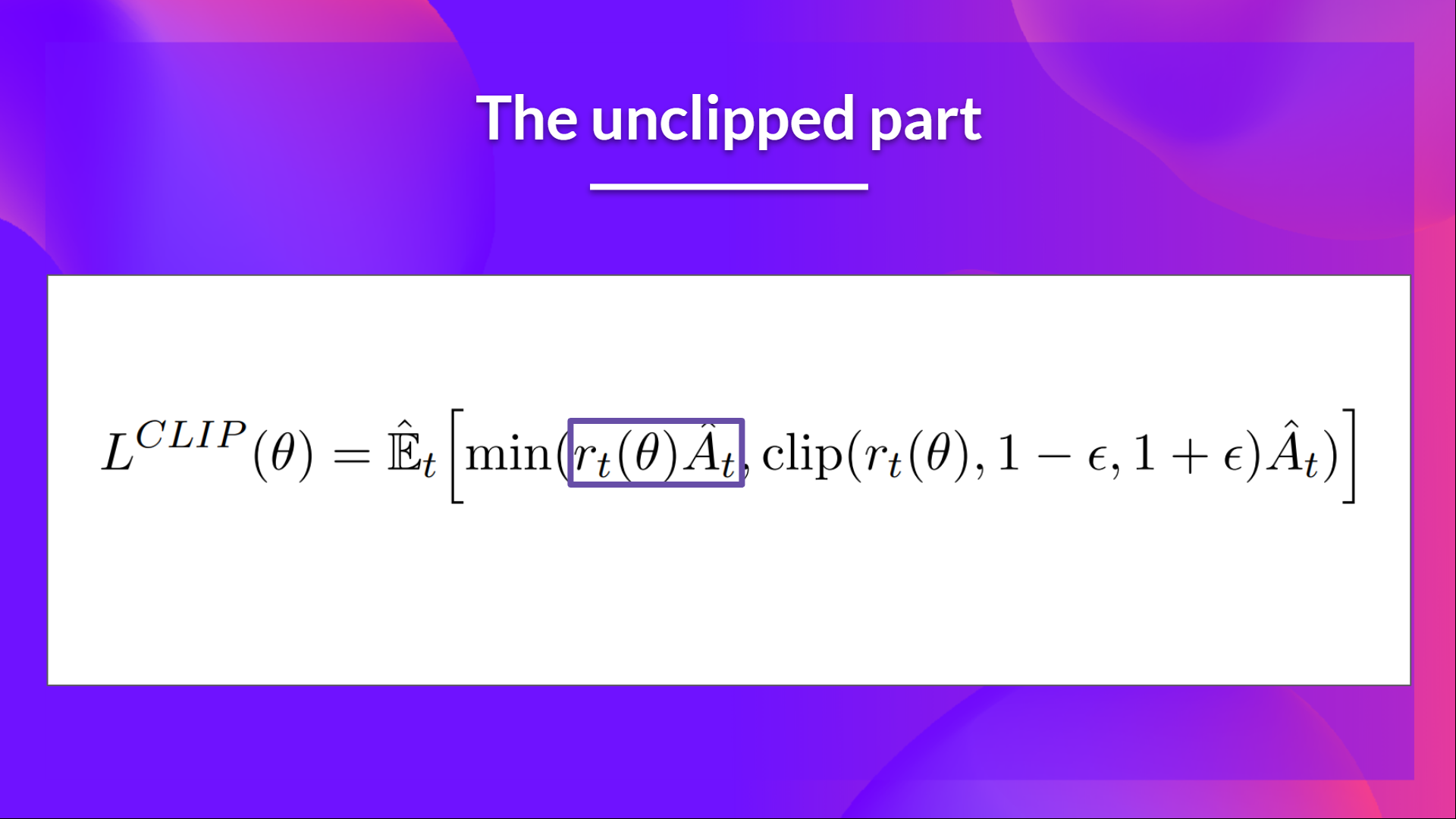

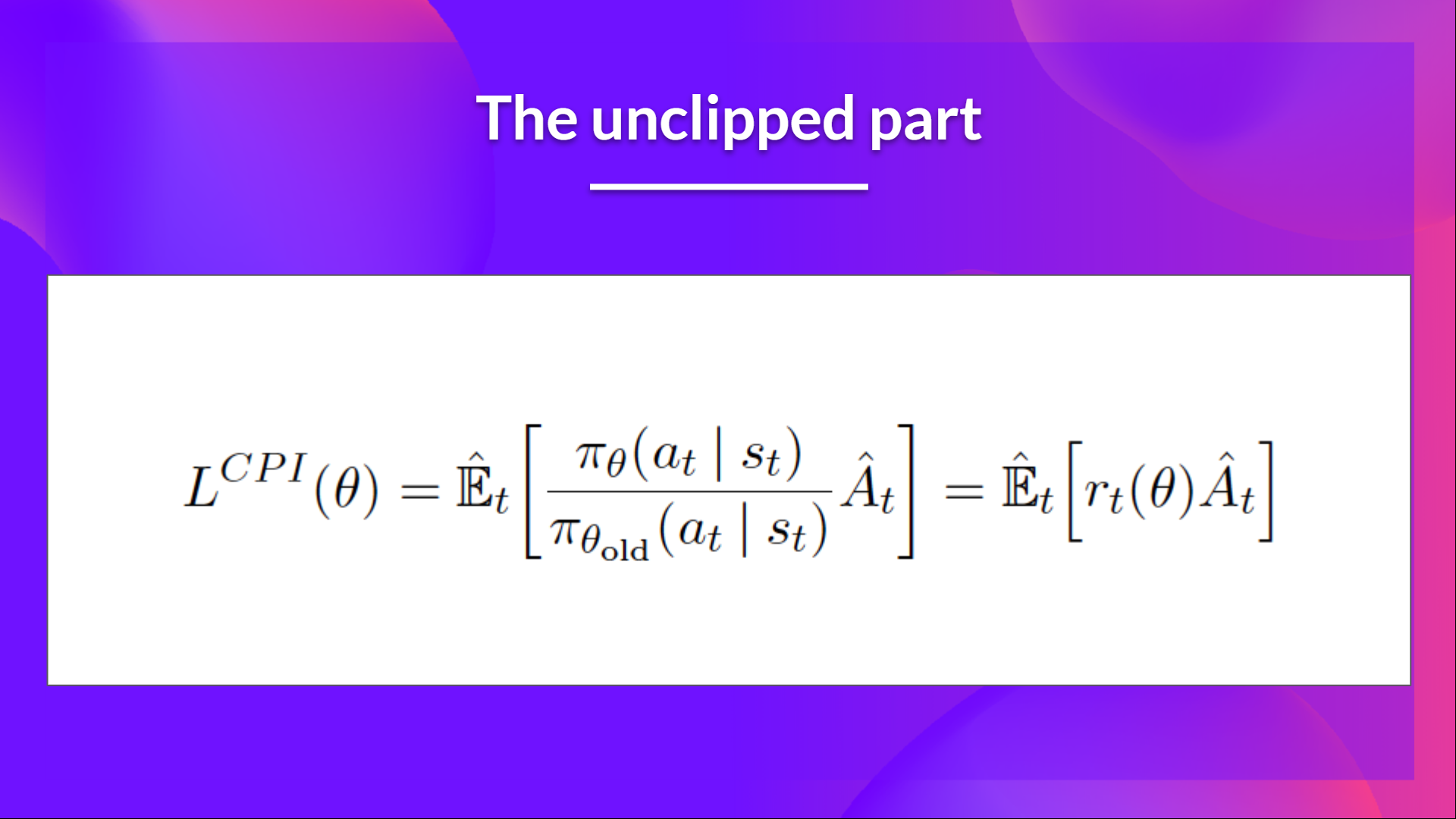

The unclipped part of the Clipped Surrogate Objective function

This ratio can replace the log probability we use in the policy objective function. This gives us the left part of the new objective function: multiplying the ratio by the advantage.

However, without a constraint, if the action taken is much more probable in our current policy than in our former one, this would lead to a significant policy gradient step and, therefore, an excessive policy update.
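To make the problem concrete, here is a minimal sketch of the unclipped term for a single sample. The function name and the numbers are illustrative assumptions; the point is only that nothing stops the term from growing as the ratio grows.

```python
def unclipped_objective(ratio: float, advantage: float) -> float:
    """Left part of the objective for one sample: ratio times advantage."""
    return ratio * advantage


advantage = 2.0  # hypothetical positive advantage
values = {ratio: unclipped_objective(ratio, advantage) for ratio in (1.0, 1.2, 2.0, 10.0)}

for ratio, value in values.items():
    print(f"ratio = {ratio:>4}: unclipped objective = {value}")
```

Because the value keeps increasing with the ratio, maximizing it alone rewards moving the policy arbitrarily far from the old one.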

The clipped part of the Clipped Surrogate Objective function

Consequently, we need to constrain this objective function by penalizing changes that lead to a ratio far from 1 (in the paper, the ratio can only vary from 0.8 to 1.2).

By clipping the ratio, we ensure that we do not have a too large policy update because the current policy can’t be too different from the older one.

To do that, we have two solutions:

- TRPO (Trust Region Policy Optimization) uses KL divergence constraints outside the objective function to constrain the policy update. But this method is complicated to implement and takes more computation time.

- PPO clips the probability ratio directly in the objective function with its Clipped surrogate objective function.

The clipped part is a version where $r_t(\theta)$ is clipped to the range $[1 - \epsilon, 1 + \epsilon]$.

With the Clipped Surrogate Objective function, we have two probability ratios, one non-clipped and one clipped to the range $[1 - \epsilon, 1 + \epsilon]$, where $\epsilon$ is a hyperparameter that defines this clip range (in the paper, $\epsilon = 0.2$).

Then, we take the minimum of the clipped and non-clipped objective, so the final objective is a lower bound (pessimistic bound) of the unclipped objective.

Taking the minimum of the clipped and non-clipped objective means we’ll select either the clipped or the non-clipped objective based on the ratio and advantage situation.
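The minimum of the clipped and non-clipped terms can be sketched for a single sample in a few lines of plain Python. The helper names are hypothetical, and `epsilon=0.2` follows the 0.8 to 1.2 range quoted from the paper; a real implementation would apply the same operations to batches of tensors.

```python
def clip(value: float, low: float, high: float) -> float:
    """Restrict value to the interval [low, high]."""
    return max(low, min(value, high))


def clipped_surrogate(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """Per-sample clipped surrogate objective: min(unclipped term, clipped term)."""
    unclipped = ratio * advantage
    clipped = clip(ratio, 1.0 - epsilon, 1.0 + epsilon) * advantage
    return min(unclipped, clipped)


# Ratio above the range: the clipped term is selected when the advantage is positive,
# the unclipped term when it is negative.
print(clipped_surrogate(1.5, +1.0))
print(clipped_surrogate(1.5, -1.0))

# Ratio below the range: the selection is reversed.
print(clipped_surrogate(0.5, +1.0))
print(clipped_surrogate(0.5, -1.0))
```

Since `min` can only return the unclipped term or something smaller, the result never exceeds `ratio * advantage`, which is what makes it a pessimistic bound.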

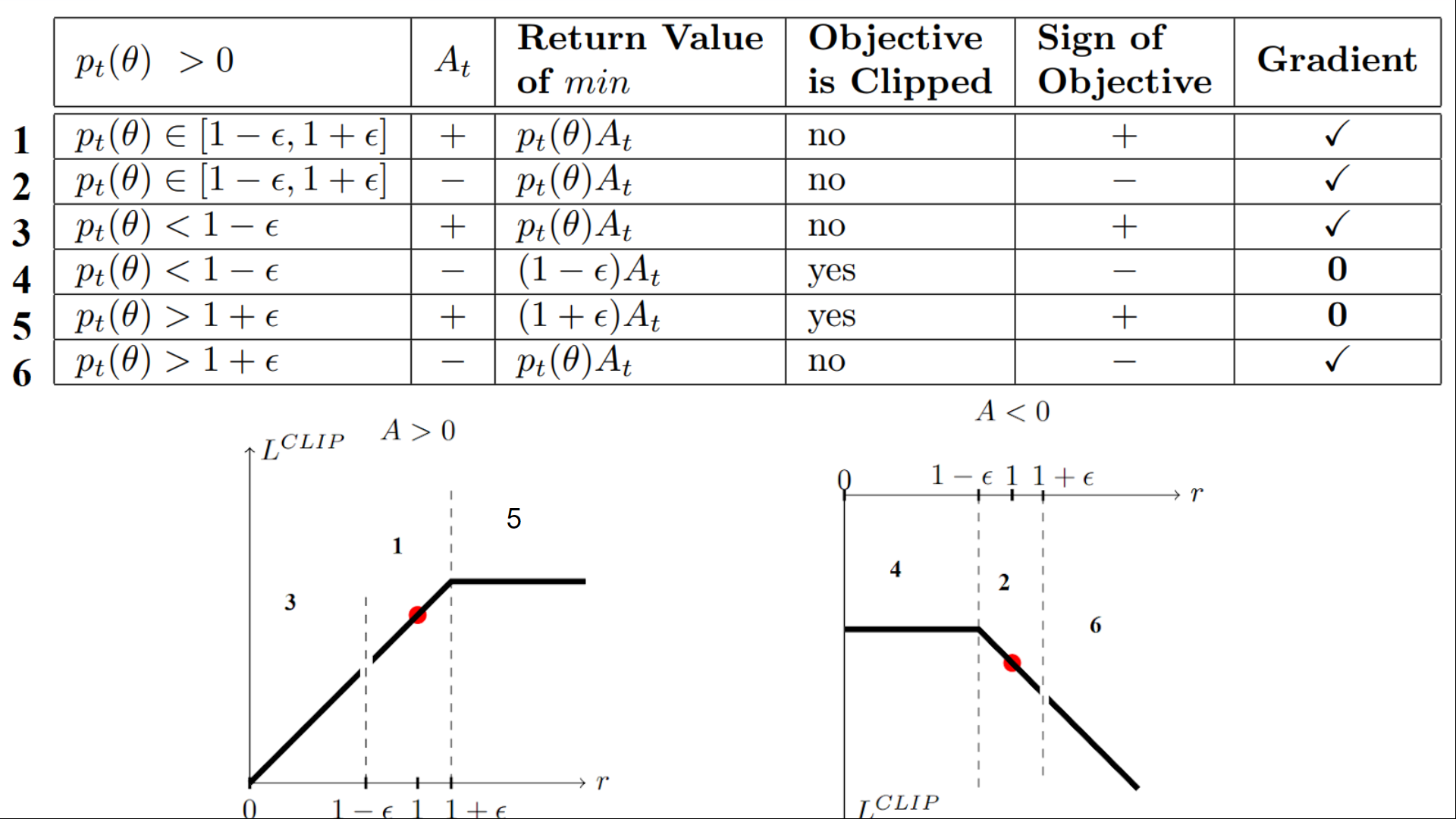

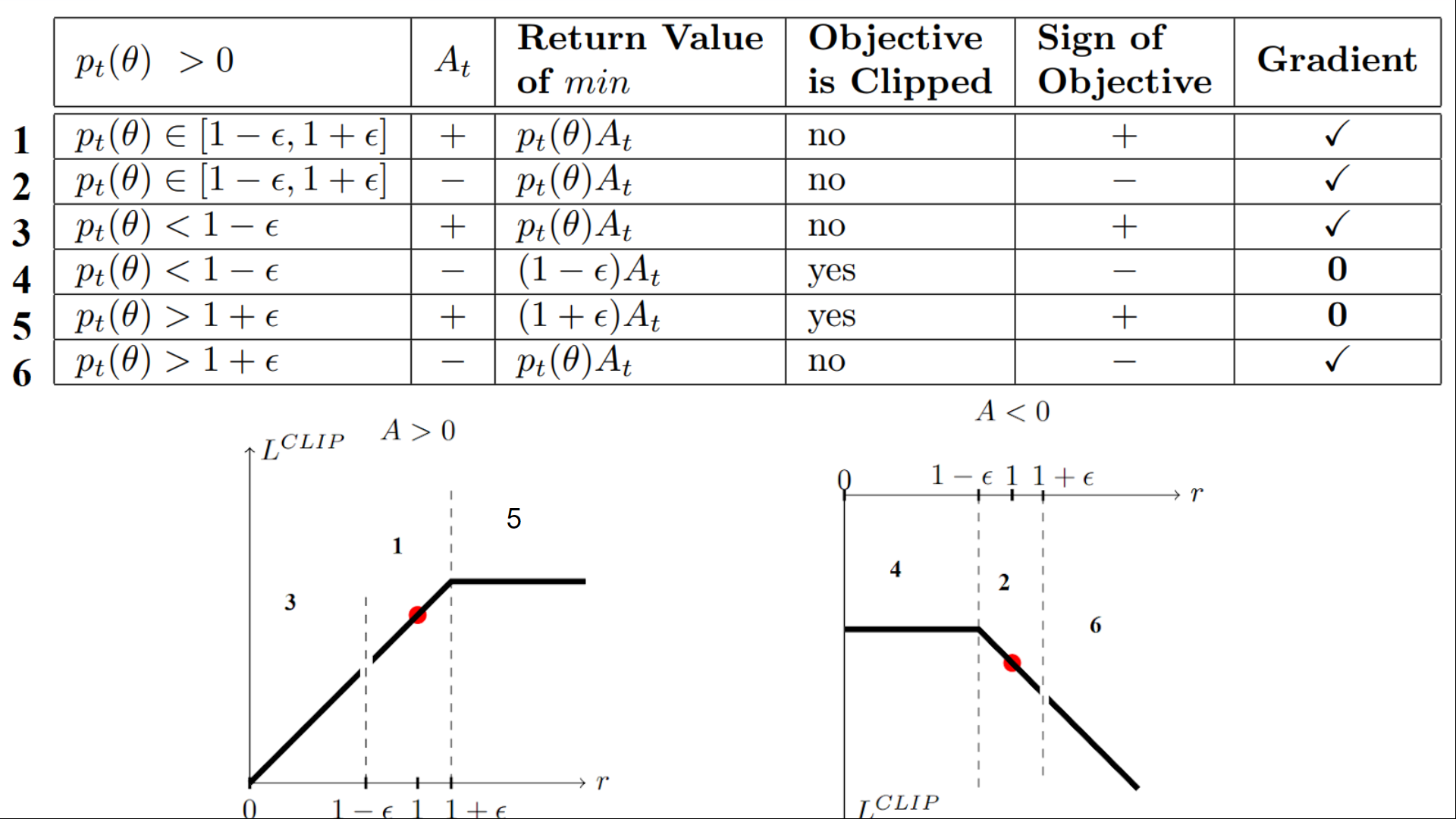

Visualize the Clipped Surrogate Objective

Don’t worry. It’s normal if this seems complex to handle right now. But we’re going to see what this Clipped Surrogate Objective Function looks like, and this will help you visualize better what’s going on.

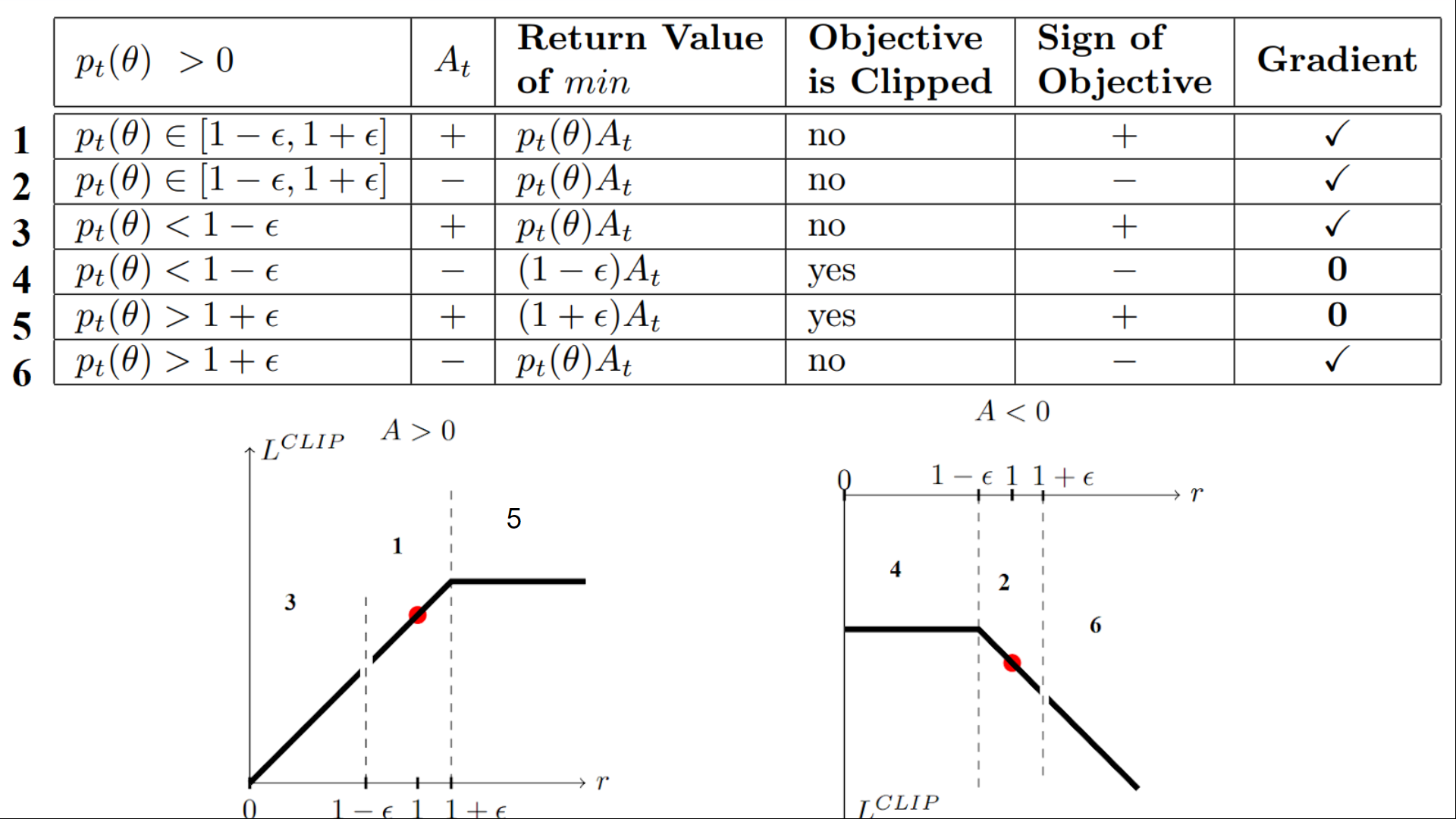

Table from “Towards Delivering a Coherent Self-Contained Explanation of Proximal Policy Optimization” by Daniel Bick

We have six different situations. Remember first that we take the minimum between the clipped and unclipped objectives.

Case 1 and 2: the ratio is within the range

In situations 1 and 2, the clipping does not apply since the ratio is within the range $[1 - \epsilon, 1 + \epsilon]$.

In situation 1, we have a positive advantage: the action is better than the average of all the actions in that state. Therefore, we should encourage our current policy to increase the probability of taking that action in that state.

Since the ratio is within the interval, we can increase our policy’s probability of taking that action at that state.

In situation 2, we have a negative advantage: the action is worse than the average of all actions at that state. Therefore, we should discourage our current policy from taking that action in that state.

Since the ratio is within the interval, we can decrease the probability that our policy takes that action at that state.

Case 3 and 4: the ratio is below the range

If the probability ratio is lower than $1 - \epsilon$, the probability of taking that action at that state is much lower than with the old policy.

If, like in situation 3, the advantage estimate is positive (A>0), then we want to increase the probability of taking that action at that state.

But if, like in situation 4, the advantage estimate is negative, we don’t want to decrease further the probability of taking that action at that state. Therefore, the gradient is 0 (since we’re on a flat line), so we don’t update our weights.

Case 5 and 6: the ratio is above the range

If the probability ratio is higher than $1 + \epsilon$, the probability of taking that action at that state in the current policy is much higher than in the former policy.

If, like in situation 5, the advantage is positive, we don’t want to get too greedy. We already have a higher probability of taking that action at that state than the former policy. Therefore, the gradient is 0 (since we’re on a flat line), so we don’t update our weights.

If, like in situation 6, the advantage is negative, we want to decrease the probability of taking that action at that state.

So if we recap, we only update the policy with the unclipped objective part. When the minimum is the clipped objective part, we don’t update our policy weights since the gradient will equal 0.

So we update our policy only if:

- Our ratio is in the range $[1 - \epsilon, 1 + \epsilon]$

- Our ratio is outside the range, but the advantage leads to getting closer to the range:

  - The ratio is below the range but the advantage is > 0

  - The ratio is above the range but the advantage is < 0

You might wonder why, when the minimum is the clipped ratio, the gradient is 0. When the ratio is clipped, the derivative in this case is not the derivative of $r_t(\theta)\,\hat{A}_t$ but the derivative of either $(1 - \epsilon)\,\hat{A}_t$ or $(1 + \epsilon)\,\hat{A}_t$, which are both 0 because these terms are constant with respect to $\theta$.
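The six situations can be checked numerically. The sketch below estimates the slope of the per-sample objective with respect to the ratio using a central finite difference; the function names, the sample ratios (1.0, 0.5, 1.5), and `epsilon=0.2` are assumptions made for illustration. The gradient with respect to the policy weights is this slope multiplied by the derivative of the ratio, so a slope of 0 means no update from that sample.

```python
def clipped_surrogate(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """Per-sample clipped surrogate objective."""
    clipped_ratio = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped_ratio * advantage)


def slope_wrt_ratio(ratio: float, advantage: float, epsilon: float = 0.2, h: float = 1e-6) -> float:
    """Central finite-difference estimate of d(objective) / d(ratio)."""
    up = clipped_surrogate(ratio + h, advantage, epsilon)
    down = clipped_surrogate(ratio - h, advantage, epsilon)
    return (up - down) / (2.0 * h)


# situation number -> (ratio, advantage)
cases = {
    1: (1.0, +1.0),  # inside the range, positive advantage
    2: (1.0, -1.0),  # inside the range, negative advantage
    3: (0.5, +1.0),  # below the range, positive advantage
    4: (0.5, -1.0),  # below the range, negative advantage
    5: (1.5, +1.0),  # above the range, positive advantage
    6: (1.5, -1.0),  # above the range, negative advantage
}

slopes = {case: slope_wrt_ratio(r, a) for case, (r, a) in cases.items()}

for case, slope in slopes.items():
    updates = abs(slope) > 1e-6
    print(f"situation {case}: slope = {slope:+.1f}, policy updated = {updates}")
```

Only situations 4 and 5 sit on the flat part of the objective, which matches the cases where the text says the weights are not updated.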

To summarize, thanks to this clipped surrogate objective, we restrict how far the current policy can move from the old one, because clipping removes the incentive for the probability ratio to move outside the interval $[1 - \epsilon, 1 + \epsilon]$. If the ratio is $> 1 + \epsilon$ or $< 1 - \epsilon$ and the advantage would push it even further outside, the gradient is equal to 0.

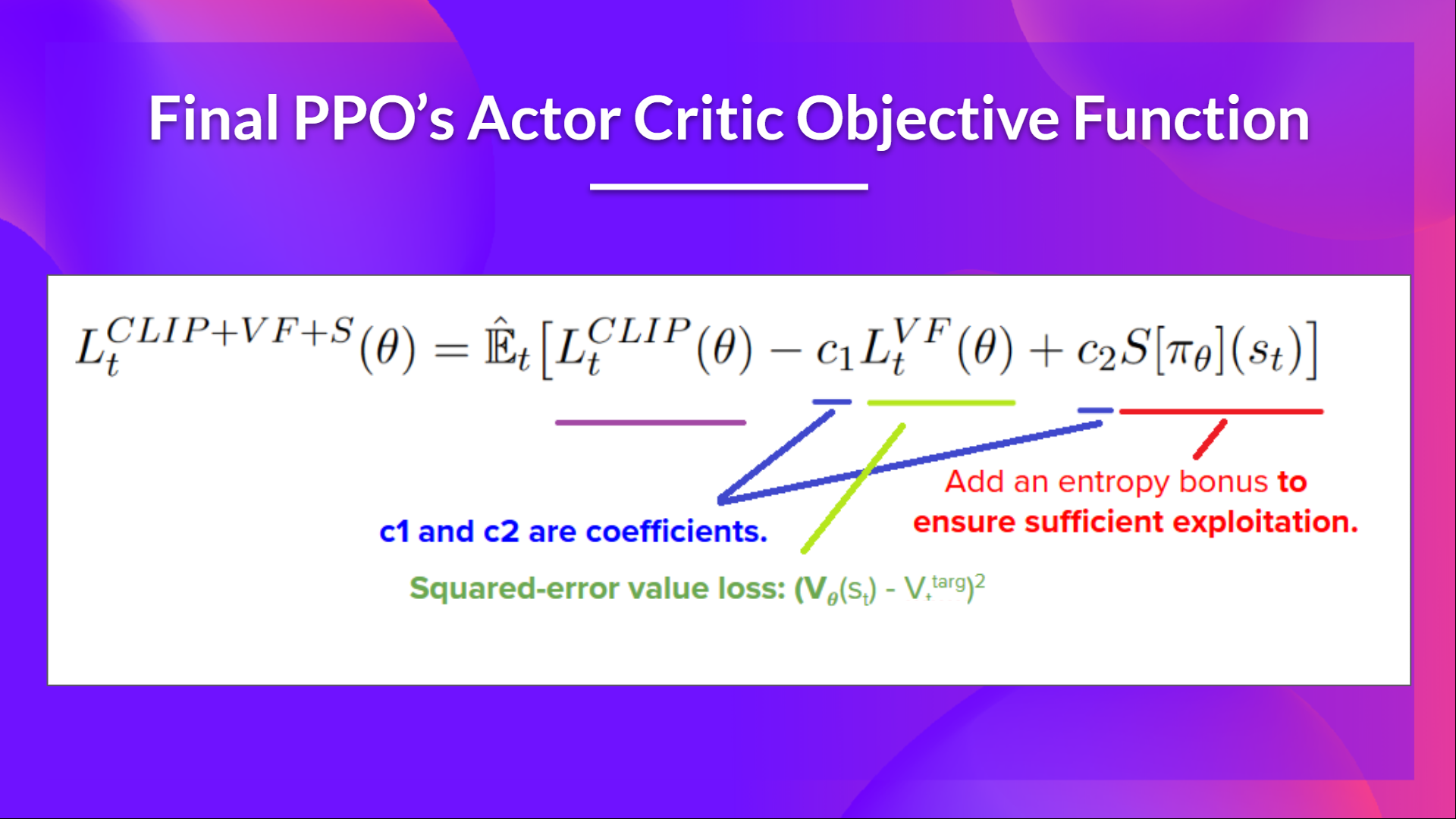

The final Clipped Surrogate Objective Loss for PPO Actor-Critic style looks like this. It’s a combination of the Clipped Surrogate Objective function, the Value Loss Function, and an Entropy bonus:
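In the PPO paper’s notation (assumed here: $c_1$ and $c_2$ are weighting coefficients, $L_t^{VF}$ is the squared-error value loss, and $S$ is the entropy of the policy at state $s_t$), this combined objective is:

$$
L_t^{CLIP+VF+S}(\theta) = \hat{\mathbb{E}}_t\left[L_t^{CLIP}(\theta) - c_1\, L_t^{VF}(\theta) + c_2\, S[\pi_\theta](s_t)\right]
$$

The value loss enters with a minus sign because this expression is maximized, while the entropy term is added to encourage exploration.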

That was quite complex. Take time to understand these situations by looking at the table and the graph. You must understand why this makes sense. If you want to go deeper, the best resource is the article “Towards Delivering a Coherent Self-Contained Explanation of Proximal Policy Optimization” by Daniel Bick, especially part 3.4.

Let’s code our PPO Agent

Now that we’ve studied the theory behind PPO, the best way to understand how it works is to implement it from scratch.

Implementing an architecture from scratch is also a good habit. We have already done it for a value-based method with Q-Learning and a policy-based method with Reinforce.

So, to be able to code it, we’re going to use two resources:

Then, to test its robustness, we’re going to train it in two classical environments, CartPole-v1 and LunarLander-v2.

And finally, we will push the trained model to the Hub to evaluate and visualize your agent playing.

LunarLander-v2 is the first environment you used when you started this course. At that time, you didn’t know how it worked, and now you can code it from scratch and train it. How incredible is that 🤩.

Start the tutorial here 👉 https://github.com/huggingface/deep-rl-class/blob/main/unit8/unit8.ipynb

Congrats on finishing this chapter! There was a lot of information. And congrats on finishing the tutorial 🥳, this was one of the hardest of the course.

Don’t hesitate to train your agent in other environments. The best way to learn is to try things on your own!

I want you to think about your progress since the first Unit. With these eight units, you’ve built a strong background in Deep Reinforcement Learning. Congratulations!

But this is not the end. Even if the foundations part of the course is finished, the journey continues. We’re working on new elements:

- Adding new environments and tutorials.

- A section about multi-agents (self-play, collaboration, competition).

- Another one about offline RL and Decision Transformers.

- Paper explained articles.

- And more to come.

The best way to keep in touch is to sign up for the course so that we keep you updated 👉 http://eepurl.com/h1pElX

And don’t forget to share with your friends who want to learn 🤗!

Finally, with your feedback, we want to improve and update the course iteratively. If you have some, please fill in this form 👉 https://forms.gle/3HgA7bEHwAmmLfwh9

See you next time!