At Hugging Face, we’re working on tackling various problems in open-source machine learning, including hosting models securely and openly, enabling reproducibility, explainability, and collaboration. We’re thrilled to introduce you to our latest library: Skops! With Skops, you can host your scikit-learn models on the Hugging Face Hub, create model cards for model documentation, and collaborate with others.

Let’s go through an end-to-end example: first train a model, then see step by step how to leverage Skops for sklearn in production.

```python
import sklearn
from sklearn.datasets import load_breast_cancer
from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(as_frame=True, return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42
)

model = DecisionTreeClassifier().fit(X_train, y_train)
```

You can use any model filename and serialization method, like pickle or joblib. At the moment, our backend uses joblib to load the model. `hub_utils.init` creates a local folder containing the model in the given path, and the configuration file containing the specifications of the environment the model is trained in. The data and the task passed to `init` will help the Hugging Face Hub enable the inference widget on the model page, as well as discoverability features to find the model.

```python
import pickle

from skops import hub_utils

model_path = "example.pkl"
local_repo = "my-awesome-model"

# Serialize the fitted model with pickle.
with open(model_path, mode="wb") as f:
    pickle.dump(model, file=f)

# Create the local repository with the model file and its configuration.
hub_utils.init(
    model=model_path,
    requirements=[f"scikit-learn={sklearn.__version__}"],
    dst=local_repo,
    task="tabular-classification",
    data=X_test,
)
```
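Since `init` receives the model as a path to an already serialized file, the filename and extension are up to you. The sketch below uses only the standard library to round-trip a stand-in "model" through pickle under an arbitrary filename; the dict stand-in and the name `any-name-you-like.bin` are illustrative assumptions, and a fitted estimator would be pickled the same way.

```python
import pickle
import tempfile
from pathlib import Path


def pickle_round_trip(obj, filename):
    """Pickle `obj` into a file called `filename`, then load it back.

    The filename and extension are arbitrary; pickle does not inspect them.
    """
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / filename
        with open(path, mode="wb") as f:
            pickle.dump(obj, f)
        with open(path, mode="rb") as f:
            return pickle.load(f)


# Illustrative stand-in for a fitted model's state; not a real estimator.
stand_in_model = {"classes": [0, 1], "max_depth": 3}
restored = pickle_round_trip(stand_in_model, "any-name-you-like.bin")
print(restored == stand_in_model)  # True
```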

The repository now contains the serialized model and the configuration file.

The configuration contains the following:

- features of the model,
- the requirements of the model,
- an example input taken from `X_test` that we've passed,
- name of the model file,
- name of the task to be solved here.
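To see how those five pieces fit together, here is a rough sketch that reads them back out of a `config.json`. The layout of `EXAMPLE_CONFIG` (a top-level `"sklearn"` key with `columns`, `environment`, `example_input`, `model`, and `task` fields) and the version string in it are assumptions for illustration; open the file that `hub_utils.init` wrote in your repository to check the actual structure.

```python
import json
import tempfile
from pathlib import Path

# Illustrative config.json content; the key names are assumptions for this sketch.
EXAMPLE_CONFIG = {
    "sklearn": {
        "columns": ["mean radius", "mean texture"],
        "environment": ["scikit-learn=1.1.1"],
        "example_input": {"mean radius": [17.99], "mean texture": [10.38]},
        "model": {"file": "example.pkl"},
        "task": "tabular-classification",
    }
}


def summarize_config(config_path):
    """Collect features, requirements, example input, model file and task."""
    with open(config_path) as f:
        section = json.load(f)["sklearn"]
    return {
        "features": section["columns"],
        "requirements": section["environment"],
        "example_input": section["example_input"],
        "model_file": section["model"]["file"],
        "task": section["task"],
    }


with tempfile.TemporaryDirectory() as tmp:
    config_path = Path(tmp) / "config.json"
    config_path.write_text(json.dumps(EXAMPLE_CONFIG))
    summary = summarize_config(config_path)

print(summary["model_file"], summary["task"])  # example.pkl tabular-classification
```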

We will now create the model card. The card should match the expected Hugging Face Hub format: a markdown part and a metadata section, which is a YAML section at the top. The keys to the metadata section are defined here and are used for the discoverability of the models.
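The two-part layout can be sketched in plain Python: YAML metadata fenced by `---` lines at the very top of `README.md`, followed by free markdown. `render_card` is a hypothetical helper that only handles flat values and lists of strings; a real card should be serialized with a proper YAML library, and the metadata values shown are examples.

```python
def render_card(metadata, body):
    """Return README text: YAML metadata between '---' lines, then markdown.

    Only flat scalar values and lists of strings are supported in this sketch.
    """
    lines = ["---"]
    for key, value in metadata.items():
        if isinstance(value, list):
            lines.append(f"{key}:")
            lines.extend(f"- {item}" for item in value)
        else:
            lines.append(f"{key}: {value}")
    lines.append("---")
    return "\n".join(lines) + "\n\n" + body


readme = render_card(
    {"license": "mit", "library_name": "sklearn", "tags": ["sklearn", "tabular-classification"]},
    "# Model description\n\nThis is a DecisionTreeClassifier model.",
)
print(readme)
```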

The content of the model card is determined by a template that has a:

- `yaml` section on top for metadata (e.g. model license, library name, and more),
- markdown section with free text and sections to be filled (e.g. simple description of the model).

The following sections are extracted by `skops` to fill in the model card:

- Hyperparameters of the model,
- Interactive diagram of the model,
- For metadata, library name, task identifier (e.g. tabular-classification), and data required by the inference widget are filled.
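To picture the hyperparameter section, here is a rough sketch that turns a parameter dict into a markdown table. With scikit-learn you would get the dict from `model.get_params()`; the hard-coded values are a subset of `DecisionTreeClassifier` defaults so the snippet runs on its own, and the table layout is an assumption rather than skops' exact output.

```python
def hyperparameter_table(params):
    """Render a {name: value} mapping as a two-column markdown table."""
    rows = ["| Hyperparameter | Value |", "|---|---|"]
    for name, value in sorted(params.items()):
        rows.append(f"| {name} | {value} |")
    return "\n".join(rows)


# Subset of DecisionTreeClassifier defaults; in practice use model.get_params().
params = {"criterion": "gini", "max_depth": None, "min_samples_split": 2, "splitter": "best"}
table = hyperparameter_table(params)
print(table)
```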

We will walk you through how to programmatically pass information to fill the model card. You can check out our documentation on the default template provided by skops, and its sections here, to see what the template expects and what it looks like here.

You can create the model card by instantiating the `Card` class from skops. During model serialization, the task name and library name are written to the configuration file. This information is also needed in the card's metadata, so you can use the `metadata_from_config` method to extract the metadata from the configuration file and pass it to the card when you create it. You can add information and metadata using `add`.

```python
from pathlib import Path

from skops import card

# Read the task and library name from the configuration file written by hub_utils.init.
model_card = card.Card(model, metadata=card.metadata_from_config(Path(local_repo)))

limitations = "This model is not ready to be used in production."
model_description = "This is a DecisionTreeClassifier model trained on breast cancer dataset."
model_card_authors = "skops_user"
get_started_code = "import pickle\nwith open(dtc_pkl_filename, 'rb') as file:\n    clf = pickle.load(file)"
citation_bibtex = "bibtex\n@inproceedings{...,year={2020}}"

model_card.add(
    citation_bibtex=citation_bibtex,
    get_started_code=get_started_code,
    model_card_authors=model_card_authors,
    limitations=limitations,
    model_description=model_description,
)

model_card.metadata.license = "mit"
```
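The `Card` workflow above boils down to: start from metadata, collect named sections with `add`, and adjust metadata afterwards. The class below is a hypothetical, heavily simplified stand-in written only to illustrate that pattern; it is not skops' implementation, and the name `MiniCard` and its `render` method are inventions for this sketch.

```python
class MiniCard:
    """Hypothetical simplified model card; illustrates the usage pattern only."""

    def __init__(self, metadata=None):
        # Metadata (e.g. extracted from config.json) is provided up front.
        self.metadata = dict(metadata or {})
        self.sections = {}

    def add(self, **kwargs):
        # Each keyword becomes a named section; re-adding a name overwrites it.
        self.sections.update(kwargs)
        return self

    def render(self):
        # Turn snake_case section names into headings followed by their text.
        parts = [
            f"## {name.replace('_', ' ').capitalize()}\n\n{text}"
            for name, text in self.sections.items()
        ]
        return "\n\n".join(parts)


mini = MiniCard(metadata={"library_name": "sklearn"})
mini.add(model_description="A decision tree.", limitations="Not for production.")
mini.metadata["license"] = "mit"
print(mini.render())
```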

We will now evaluate the model and add a description of the evaluation method with `add`. The metrics are added by `add_metrics`, which will be parsed into a table.

```python
from sklearn.metrics import (ConfusionMatrixDisplay, confusion_matrix,
                             accuracy_score, f1_score)

y_pred = model.predict(X_test)

model_card.add(eval_method="The model is evaluated using test split, on accuracy and F1 score with macro average.")
model_card.add_metrics(accuracy=accuracy_score(y_test, y_pred))
# Use macro averaging to match the evaluation description above.
model_card.add_metrics(**{"f1 score": f1_score(y_test, y_pred, average="macro")})
```
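To make the two metrics concrete, here is a plain-Python sketch of accuracy and macro-averaged F1 (per-class F1 scores averaged with equal weight), followed by a metrics table of the kind `add_metrics` produces. It is illustrative only: in the tutorial the values come from `sklearn.metrics`, the table layout is an assumption, and this sketch treats an undefined precision or recall as 0.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(scores) / len(scores)


def metrics_table(metrics):
    """Render {metric name: value} as a markdown table."""
    rows = ["| Metric | Value |", "|---|---|"]
    rows += [f"| {name} | {value:.4f} |" for name, value in metrics.items()]
    return "\n".join(rows)


y_true = [0, 1, 1, 0, 1]
y_pred = [0, 1, 0, 0, 1]
table = metrics_table({"accuracy": accuracy(y_true, y_pred),
                       "f1 score": macro_f1(y_true, y_pred)})
print(table)
```

Here both classes get an F1 of 0.8 (class 0: precision 2/3, recall 1; class 1: precision 1, recall 2/3), so the macro average is also 0.8.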

We can also add any plot of our choice to the card using `add_plot`, like below.

```python
import matplotlib.pyplot as plt
from pathlib import Path

cm = confusion_matrix(y_test, y_pred, labels=model.classes_)
disp = ConfusionMatrixDisplay(confusion_matrix=cm, display_labels=model.classes_)
disp.plot()

plt.savefig(Path(local_repo) / "confusion_matrix.png")
model_card.add_plot(confusion_matrix="confusion_matrix.png")
```
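In the example, the plot is saved inside `local_repo` and passed to `add_plot` by its filename relative to that folder, presumably so that the image link in `README.md` still resolves once the folder is pushed. The hypothetical helper below computes such a relative path and emits a markdown image link; raising an error for images outside the repository is a design choice of this sketch, not documented skops behavior.

```python
from pathlib import Path


def plot_reference(name, image_path, repo_root):
    """Return a markdown image link for a plot stored inside repo_root.

    Path.relative_to raises ValueError if the image lies outside the
    repository, since such a file would not be pushed with README.md.
    """
    relative = Path(image_path).resolve().relative_to(Path(repo_root).resolve())
    return f"![{name}]({relative.as_posix()})"


link = plot_reference("confusion_matrix", "my-awesome-model/confusion_matrix.png", "my-awesome-model")
print(link)  # ![confusion_matrix](confusion_matrix.png)
```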

Let’s save the model card in the local repository. The file name here should be README.md, since that is what the Hugging Face Hub expects.

```python
model_card.save(Path(local_repo) / "README.md")
```

We can now push the repository to the Hugging Face Hub. For this, we will use `push` from `hub_utils`. The Hugging Face Hub requires tokens for authentication, so you need to pass your token either through `notebook_login` if you’re logging in from a notebook, or `huggingface-cli login` if you’re logging in from the CLI.

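```python
import os

repo_id = "skops-user/my-awesome-model"
# Assumption: your Hugging Face access token is stored in the HF_TOKEN environment variable.
token = os.environ.get("HF_TOKEN")

hub_utils.push(
    repo_id=repo_id,
    source=local_repo,
    token=token,
    commit_message="pushing files to the repo from the example!",
    create_remote=True,
)
```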
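If you would rather not paste a token into your code, one option is to look it up from the environment. The helper below is a hypothetical sketch; the `HF_TOKEN` variable name is an assumption (use whatever variable your setup defines), and when it returns `None` you would fall back to the login methods described above.

```python
import os


def resolve_token(explicit=None, environ=None):
    """Hypothetical helper: prefer an explicit token, else the HF_TOKEN variable.

    Returns None when neither is available.
    """
    environ = os.environ if environ is None else environ
    return explicit or environ.get("HF_TOKEN")


print(resolve_token(environ={"HF_TOKEN": "example-token"}))  # example-token
```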

Once we push the model to the Hub, anyone can use it unless the repository is private. You can download the models using `download`. Apart from the model file, the repository contains the model configuration and the environment requirements.

```python
download_repo = "downloaded-model"
hub_utils.download(repo_id=repo_id, dst=download_repo)
```
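After downloading, a quick sanity check is to confirm that the expected files arrived. This sketch assumes the configuration file is named `config.json` and the card `README.md`; `missing_files` is a hypothetical helper, and the model filename is whatever you chose at `init` time (`example.pkl` in this example).

```python
from pathlib import Path


def missing_files(repo_dir, model_file, extra=("config.json", "README.md")):
    """Return the names of expected files that are absent from repo_dir."""
    repo = Path(repo_dir)
    return [name for name in (model_file, *extra) if not (repo / name).is_file()]


print(missing_files("downloaded-model", "example.pkl"))
```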

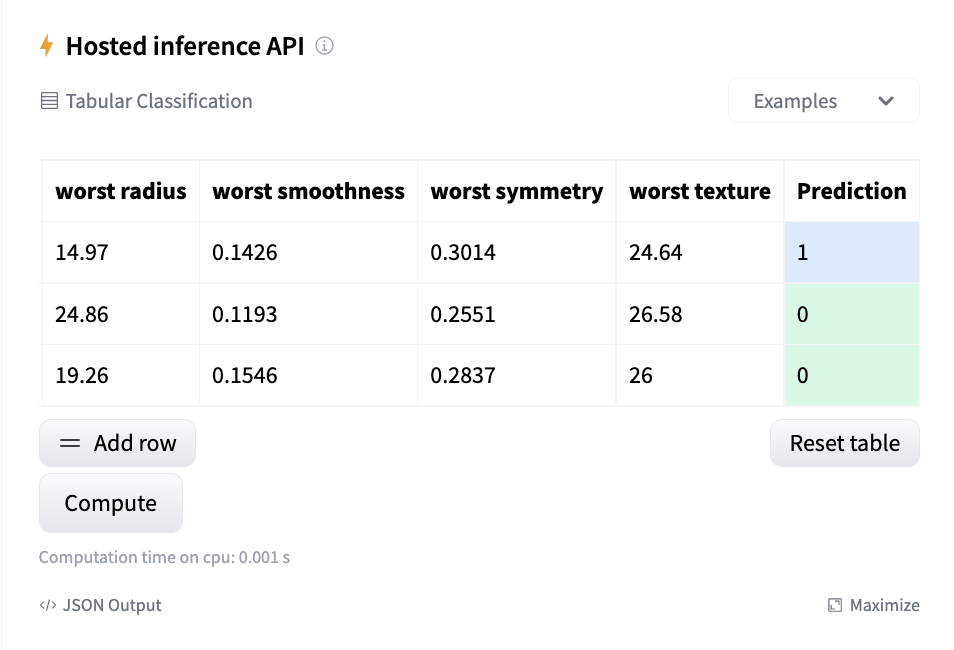

The inference widget is enabled to make predictions in the repository.

If the requirements of your project have changed, you can use `update_env` to update the environment.

```python
hub_utils.update_env(path=local_repo, requirements=["scikit-learn"])
```
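`update_env` above is called with an unpinned requirement. If you would rather pin the versions currently installed, a small helper can build the strings. This is a hypothetical sketch built on `importlib.metadata.version`; the single `=` separator follows the `scikit-learn={version}` style passed to `init` earlier, whereas pip's requirement syntax uses `==`.

```python
from importlib import metadata


def pinned_requirements(packages, version_of=metadata.version, sep="="):
    """Return ['name<sep>installed_version', ...] for each package.

    version_of can be swapped out (e.g. in tests); by default it queries the
    installed distribution and raises PackageNotFoundError if it is missing.
    """
    return [f"{name}{sep}{version_of(name)}" for name in packages]


# Example with a fake version lookup, so the output is predictable.
fake_versions = {"scikit-learn": "1.2.0", "numpy": "1.24.0"}
print(pinned_requirements(["scikit-learn", "numpy"], version_of=fake_versions.get))
# ['scikit-learn=1.2.0', 'numpy=1.24.0']
```

The result could then be passed as `requirements=pinned_requirements(["scikit-learn"])` to `update_env`.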

You can see the example repository pushed with the above code here.

We have prepared two examples to show how to save your models and use model card utilities. You can find them in the resources section below.