This blog post will show how easy it is to fine-tune pre-trained Transformer models on your dataset using the Hugging Face Optimum library on Graphcore Intelligence Processing Units (IPUs). As an example, we will give a step-by-step guide and provide a notebook that takes a large, widely-used chest X-ray dataset and trains a vision transformer (ViT) model.

Introducing vision transformer (ViT) models

In 2017 a group of Google AI researchers published a paper introducing the transformer model architecture. Characterised by a novel self-attention mechanism, transformers were proposed as a new and efficient family of models for language applications. Indeed, over the last five years, transformers have seen explosive popularity and are now accepted as the de facto standard for natural language processing (NLP).

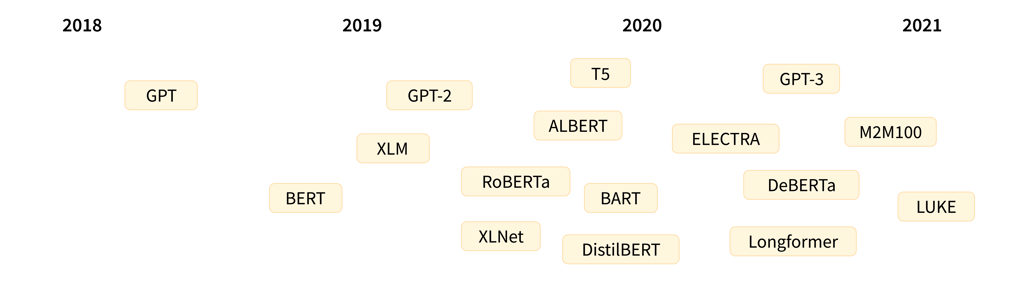

Transformers for language are perhaps most notably represented by the rapidly evolving GPT and BERT model families. Both can run easily and efficiently on Graphcore IPUs as part of the growing Hugging Face Optimum Graphcore library.

A timeline showing releases of notable transformer language models (credit: Hugging Face)

An in-depth explainer about the transformer model architecture (with a focus on NLP) can be found on the Hugging Face website.

While transformers first found success in language, they are extremely versatile and can be used for a range of other purposes, including computer vision (CV), as we will cover in this blog post.

CV is an area where convolutional neural networks (CNNs) are without doubt the most popular architecture. However, the vision transformer (ViT) architecture, first introduced in a 2021 paper from Google Research, represents a breakthrough in image recognition and uses the same self-attention mechanism as BERT and GPT as its main component.

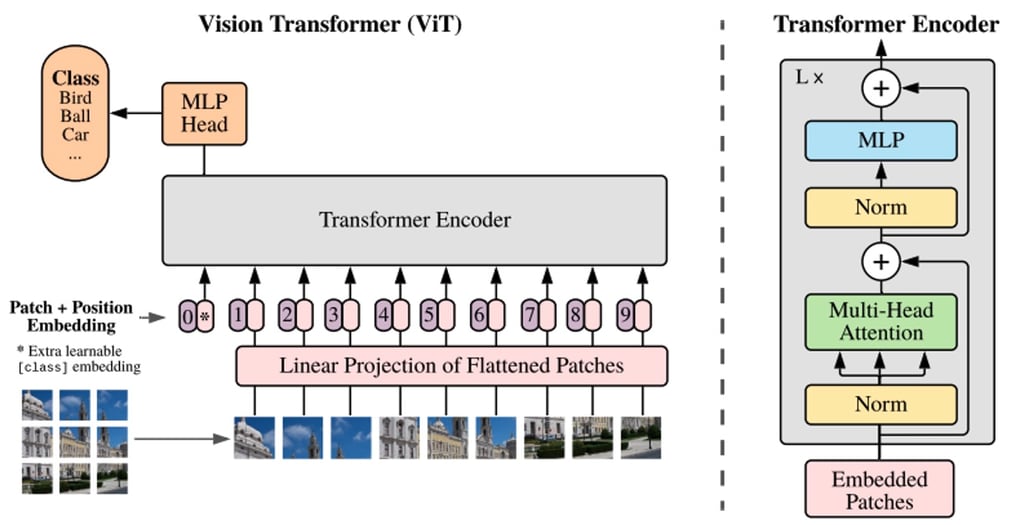

Whereas BERT and other transformer-based language processing models take a sentence (i.e., a list of words) as input, ViT models divide an input image into several small patches, the equivalent of individual words in language processing. Each patch is linearly encoded by the transformer model into a vector representation that can be processed individually. This approach of splitting images into patches, or visual tokens, stands in contrast to the pixel arrays used by CNNs.
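To make the patching step concrete, here is a minimal NumPy sketch, assuming ViT-Base/16 sizes (224×224 RGB input, 16×16 patches, hidden size 768). The projection weights are random placeholders rather than pre-trained values, the [CLS] token is a zero placeholder for what is a learned vector in the real model, and the learned position embeddings that ViT adds are omitted for brevity.

```python
import numpy as np

# Assumed ViT-Base/16 sizes; weights below are random, not pre-trained.
IMAGE_SIZE, PATCH_SIZE, CHANNELS, HIDDEN = 224, 16, 3, 768

def image_to_patches(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into a sequence of flattened patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must divide into whole patches"
    # (H/p, p, W/p, p, C) -> (H/p, W/p, p, p, C) -> (num_patches, p*p*C)
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)
    return grid.reshape(-1, patch * patch * c)

rng = np.random.default_rng(0)
image = rng.random((IMAGE_SIZE, IMAGE_SIZE, CHANNELS), dtype=np.float32)

patches = image_to_patches(image, PATCH_SIZE)  # 14 x 14 = 196 patches of 768 values

# One shared linear projection turns every patch into a token embedding.
w_proj = rng.normal(0, 0.02, (PATCH_SIZE * PATCH_SIZE * CHANNELS, HIDDEN)).astype(np.float32)
tokens = patches @ w_proj

# Prepend a [CLS] token (learned in the real model, zeros here).
cls_token = np.zeros((1, HIDDEN), dtype=np.float32)
sequence = np.concatenate([cls_token, tokens], axis=0)
print(patches.shape, sequence.shape)  # (196, 768) (197, 768)
```

The resulting 197-token sequence is what the transformer encoder consumes, in the same way BERT consumes a sequence of word embeddings.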

Thanks to pre-training, the ViT model learns an inner representation of images that can then be used to extract visual features useful for downstream tasks. For instance, you can train a classifier on a new dataset of labelled images by placing a linear layer on top of the pre-trained visual encoder. One typically places this linear layer on top of the [CLS] token, because the last hidden state of this token can be seen as a representation of the entire image.
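A linear head on the [CLS] token amounts to one matrix multiply on the first token of the encoder's output. The sketch below assumes hypothetical shapes (a batch of 4 images, 197 tokens, hidden size 768, 15 labels) and uses random arrays in place of a real encoder output and trained head weights.

```python
import numpy as np

# Hypothetical shapes; random values stand in for the encoder output and head weights.
batch, seq_len, hidden, num_labels = 4, 197, 768, 15
rng = np.random.default_rng(0)
last_hidden_state = rng.normal(size=(batch, seq_len, hidden)).astype(np.float32)

# Linear classification head: logits = h_cls @ W + b
W = rng.normal(0, 0.02, size=(hidden, num_labels)).astype(np.float32)
b = np.zeros(num_labels, dtype=np.float32)

cls_representation = last_hidden_state[:, 0, :]  # [CLS] is the first token
logits = cls_representation @ W + b              # one score per label per image
print(logits.shape)  # (4, 15)
```

Only W and b are new; everything feeding cls_representation comes from the pre-trained encoder, which is why fine-tuning needs far less data than training from scratch.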

An overview of the ViT model structure as introduced in Google Research's original 2021 paper

Compared with CNNs, ViT models have displayed higher recognition accuracy at lower computational cost, and have been applied to a range of tasks including image classification, object detection, and segmentation. Use cases in the healthcare domain alone include detection and classification for COVID-19, femur fractures, emphysema, breast cancer, and Alzheimer's disease, among many others.

ViT models – an ideal fit for IPU

Graphcore IPUs are particularly well-suited to ViT models due to their ability to parallelise training using a combination of data pipelining and model parallelism. Accelerating this massively parallel process is made possible by the IPU's MIMD architecture and its scale-out solution centred on the IPU-Fabric.

Introducing pipeline parallelism increases the batch size that can be processed per data-parallel instance, improves the access efficiency of the memory area handled by each IPU, and reduces the communication time spent aggregating parameters for data-parallel training.

Thanks to the addition of a range of pre-optimised transformer models to the open-source Hugging Face Optimum Graphcore library, it is easy to achieve a high degree of performance and efficiency when running and fine-tuning models such as ViT on IPUs.

Through Hugging Face Optimum, Graphcore has released ready-to-use IPU-trained model checkpoints and configuration files to make it easy to train models with maximum efficiency. This is particularly helpful since ViT models generally require pre-training on a large amount of data. This integration lets you use the checkpoints released by the original authors themselves within the Hugging Face model hub, so you won't have to train them yourself. By letting users plug and play any public dataset, Optimum shortens the overall development lifecycle of AI models and allows seamless integration with Graphcore's state-of-the-art hardware, giving a quicker time-to-value.

For this blog post, we will use a ViT model pre-trained on ImageNet-21k, based on the paper An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale by Dosovitskiy et al. As an example, we will walk you through the process of using Optimum to fine-tune ViT on the ChestX-ray14 dataset.

The value of ViT models for X-ray classification

As with all medical imaging tasks, radiologists spend many years learning to reliably and efficiently detect problems and make tentative diagnoses on the basis of X-ray images. To a large degree, this difficulty arises from the very minute differences and spatial limitations of the images, which is why computer-aided detection and diagnosis (CAD) techniques have shown such great potential for improving clinician workflows and patient outcomes.

At the same time, developing any model for X-ray classification (ViT or otherwise) will entail its fair share of challenges:

- Training a model from scratch takes an enormous amount of labelled data;

- The high resolution and volume requirements mean powerful compute is needed to train such models; and

- The complexity of multi-class and multi-label problems such as pulmonary diagnosis is compounded by the number of disease categories.

As mentioned above, for the purpose of our demonstration using Hugging Face Optimum, we don't need to train ViT from scratch. Instead, we will use model weights hosted in the Hugging Face model hub.

As an X-ray image can show multiple diseases, we will work with a multi-label classification model. The model in question uses the google/vit-base-patch16-224-in21k checkpoint, which was converted from the TIMM repository and pre-trained on 14 million images from ImageNet-21k. To parallelise and optimise the job for the IPU, the configuration has been made available through the Graphcore-ViT model card.
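Multi-label classification differs from ordinary classification in that each label gets its own independent sigmoid probability instead of all labels competing in a softmax. In Hugging Face Transformers, setting problem_type="multi_label_classification" on the model config makes image classification heads such as ViT's use a binary cross-entropy loss on the logits. The NumPy sketch below shows that computation on a hypothetical three-label example; it is an illustration, not the library's implementation.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def bce_with_logits(logits, targets):
    """Mean binary cross-entropy, computed stably from raw logits."""
    # max(x, 0) - x*y + log(1 + exp(-|x|)) == -[y*log(s(x)) + (1-y)*log(1-s(x))]
    return np.mean(np.maximum(logits, 0) - logits * targets + np.log1p(np.exp(-np.abs(logits))))

# Hypothetical example: one image, three labels, the first and third present.
logits = np.array([[2.0, -1.0, 0.5]])
targets = np.array([[1.0, 0.0, 1.0]])

probs = sigmoid(logits)  # each label scored independently, no softmax
predicted = probs > 0.5  # an image can receive several labels at once
loss = bce_with_logits(logits, targets)
```

The 0.5 threshold is only a convenient default; the AUC_ROC metric used later evaluates the scores across all thresholds.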

If this is your first time using IPUs, read the IPU Programmer's Guide to learn the basic concepts. To run your own PyTorch model on the IPU, see the PyTorch basics tutorial, and learn how to use Optimum through our Hugging Face Optimum Notebooks.

Training ViT on the ChestX-ray14 dataset

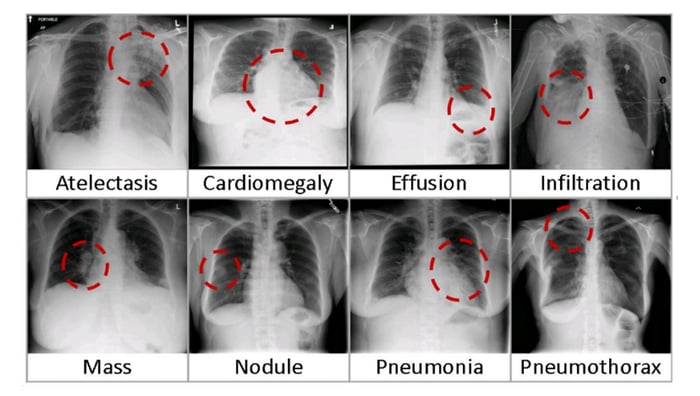

First, we need to download the National Institutes of Health (NIH) Clinical Center's Chest X-ray dataset. This dataset contains 112,120 de-identified frontal-view X-rays from 30,805 patients over a period from 1992 to 2015. The dataset covers 14 common diseases, with labels mined from the text of radiology reports using NLP techniques.

Eight visual examples of common thorax diseases (Credit: NIC)

Setting up the environment

Here are the requirements to run this walkthrough:

The Graphcore Tutorials repository contains the step-by-step tutorial notebook and Python script discussed in this guide. Clone the repository and launch the walkthrough.ipynb notebook found in tutorials/tutorials/pytorch/vit_model_training/.

We've made it even easier by creating the HF Optimum Gradient environment, so you can launch the getting started tutorial on free IPUs. Sign up and launch the runtime.

Getting the dataset

Download the dataset's /images directory. You can use bash to extract the files: for f in images*.tar.gz; do tar xfz "$f"; done.
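If you prefer to stay inside the notebook, the bash loop can be mirrored with Python's standard tarfile and glob modules. This is a sketch; the default pattern and destination are assumptions that match the loop's defaults, and extractall should only be used on archives from a trusted source such as the official dataset download.

```python
import glob
import tarfile

def extract_archives(pattern: str = "images*.tar.gz", dest: str = ".") -> int:
    """Extract every archive matching `pattern` into `dest`; return how many were found."""
    archives = sorted(glob.glob(pattern))
    for path in archives:
        # "r:gz" reads gzip-compressed tarballs, like `tar xfz`.
        with tarfile.open(path, "r:gz") as tar:
            tar.extractall(dest)
    return len(archives)
```

Calling extract_archives() from the download folder extracts all the image archives in one step and returns the number processed, which is a quick sanity check that every part was downloaded.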

Next, download the Data_Entry_2017_v2020.csv file, which contains the labels. By default, the tutorial expects the /images folder and the .csv file to be in the same folder as the script being run.

Once your Jupyter environment has the datasets, you need to install and import the latest Hugging Face Optimum Graphcore package and the other dependencies listed in requirements.txt:

%pip install -r requirements.txt

The examinations contained in the Chest X-ray dataset consist of X-ray images (greyscale, 224×224 pixels) with corresponding metadata: Finding Labels, Follow-up #, Patient ID, Patient Age, Patient Gender, View Position, OriginalImage[Width Height] and OriginalImagePixelSpacing[x y].

Next, we define the locations of the images and the labels file downloaded in Getting the dataset.
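A minimal sketch of this step using only the standard library. The paths follow the tutorial's default of keeping everything next to the script, and we assume the CSV's image file name column is called "Image Index" (the NIH file's first column, which is not in the metadata list above); both are assumptions to adjust for your setup. An in-memory sample stands in for the real file so the example runs on its own.

```python
import csv
import io

# Assumed locations, matching the tutorial's default layout.
images_dir = "./images"
label_file = "./Data_Entry_2017_v2020.csv"

def read_findings(csv_source) -> dict:
    """Map each image file name to its raw "Finding Labels" string."""
    reader = csv.DictReader(csv_source)
    return {row["Image Index"]: row["Finding Labels"] for row in reader}

# For the real file:
#     with open(label_file, newline="") as f:
#         findings = read_findings(f)
sample = io.StringIO(
    "Image Index,Finding Labels,Follow-up #,Patient ID\n"
    "00000001_000.png,Cardiomegaly,0,1\n"
    "00000001_001.png,Cardiomegaly|Emphysema,1,1\n"
    "00000002_000.png,No Finding,0,2\n"
)
findings = read_findings(sample)
```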

We are going to train the Graphcore Optimum ViT model to predict diseases (defined by "Finding Labels") from the images. "Finding Labels" can contain any number of the 14 diseases, or a "No Finding" label, which indicates that no disease was detected. To be compatible with the Hugging Face library, the text labels need to be transformed into N-hot encoded arrays representing the multiple labels needed to classify each image. An N-hot encoded array represents the labels as a list of booleans: true if the label applies to the image and false if not.

First, we identify the unique labels in the dataset.
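A sketch of collecting the unique labels, assuming (as in the NIH CSV) that multiple findings for one image are joined with "|". The input strings below are a hypothetical sample rather than the full dataset.

```python
# Hypothetical sample of raw "Finding Labels" strings.
raw_findings = [
    "Cardiomegaly",
    "Cardiomegaly|Emphysema",
    "No Finding",
    "Effusion|Infiltration|Cardiomegaly",
]

def unique_labels(findings):
    """Return the sorted set of individual labels across all rows."""
    labels = set()
    for entry in findings:
        labels.update(entry.split("|"))
    return sorted(labels)

label_names = unique_labels(raw_findings)
print(label_names)
# ['Cardiomegaly', 'Effusion', 'Emphysema', 'Infiltration', 'No Finding']
```

Sorting gives a fixed label order, which matters because every N-hot array must use the same position for the same disease.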

Now we transform the labels into N-hot encoded arrays.
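The encoding itself is a membership test against a fixed, ordered label list. In this sketch, to_n_hot is a hypothetical helper and the five-label list is a shortened stand-in for the real 14 diseases plus "No Finding"; it raises an error on labels it does not know rather than silently dropping them.

```python
def to_n_hot(finding: str, label_names: list) -> list:
    """Encode a "|"-separated finding string as one boolean per known label."""
    present = set(finding.split("|"))
    unknown = present.difference(label_names)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    return [name in present for name in label_names]

# Shortened, hypothetical label list.
label_names = ["Cardiomegaly", "Effusion", "Emphysema", "Infiltration", "No Finding"]
print(to_n_hot("Cardiomegaly|Emphysema", label_names))
# [True, False, True, False, False]
```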

When loading data with the datasets.load_dataset function, labels can be provided either by having a folder for each label (see the "ImageFolder" documentation) or by having a metadata.jsonl file (see the "ImageFolder with metadata" documentation). As the images in this dataset can have multiple labels, we have chosen to use a metadata.jsonl file, to which we write the image file names and their associated labels.
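A metadata.jsonl file holds one JSON object per line. The ImageFolder loader uses the "file_name" key to match each row to an image in the same folder and turns any other keys into extra dataset columns. The sketch below names that extra column "labels", which is our assumption, and writes to a temporary folder with made-up N-hot values so it runs standalone; in the tutorial the file would sit next to the extracted images.

```python
import json
import os
import tempfile

def write_metadata(folder: str, labels_by_file: dict) -> str:
    """Write one JSON object per line, as the ImageFolder loader expects."""
    path = os.path.join(folder, "metadata.jsonl")
    with open(path, "w") as f:
        for file_name, n_hot in labels_by_file.items():
            # "file_name" links the row to an image; "labels" becomes a column.
            f.write(json.dumps({"file_name": file_name, "labels": n_hot}) + "\n")
    return path

# Usage with hypothetical N-hot labels in a temporary folder.
demo_dir = tempfile.mkdtemp()
metadata_path = write_metadata(demo_dir, {
    "00000001_000.png": [True, False, False],
    "00000001_001.png": [True, False, True],
})
```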

Creating the dataset

We are now ready to create the PyTorch dataset and split it into training and validation sets. This step converts the dataset to the Arrow file format, which allows data to be loaded quickly during training and validation (about Arrow and Hugging Face). Because the entire dataset is being loaded and pre-processed, this may take a few minutes.

We are going to import the ViT model from the checkpoint google/vit-base-patch16-224-in21k. The checkpoint is a standard model hosted by Hugging Face and is not managed by Graphcore.

To fine-tune a pre-trained model, the new dataset must have the same properties as the original dataset used for pre-training. In Hugging Face, the original dataset information is provided in a config file loaded using the AutoImageProcessor. For this model, the X-ray images are resized to the correct resolution (224×224), converted from greyscale to RGB, and normalised across the RGB channels with a mean of (0.5, 0.5, 0.5) and a standard deviation of (0.5, 0.5, 0.5).
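The three transformations can be written out by hand to show what the image processor does to each X-ray. This NumPy sketch is not the AutoImageProcessor itself. It uses nearest-neighbour resizing as a simple stand-in for the processor's resampling, assumes pixels are first rescaled from [0, 255] to [0, 1], and feeds in a random 1024×1024 array as a hypothetical input. With mean and standard deviation both 0.5, the output lands in [-1, 1].

```python
import numpy as np

TARGET_SIZE = 224
MEAN = np.array([0.5, 0.5, 0.5], dtype=np.float32)
STD = np.array([0.5, 0.5, 0.5], dtype=np.float32)

def preprocess(gray: np.ndarray) -> np.ndarray:
    """Turn an (H, W) uint8 greyscale X-ray into a (3, 224, 224) float32 array."""
    h, w = gray.shape
    # Nearest-neighbour resize: pick evenly spaced source rows and columns.
    rows = np.arange(TARGET_SIZE) * h // TARGET_SIZE
    cols = np.arange(TARGET_SIZE) * w // TARGET_SIZE
    resized = gray[rows[:, None], cols[None, :]]
    # Greyscale -> RGB by repeating the single channel three times.
    rgb = np.repeat(resized[None, :, :], 3, axis=0).astype(np.float32)
    # Rescale to [0, 1], then normalise each channel: (x - mean) / std.
    rgb /= 255.0
    return (rgb - MEAN[:, None, None]) / STD[:, None, None]

xray = np.random.default_rng(0).integers(0, 256, size=(1024, 1024), dtype=np.uint8)
pixel_values = preprocess(xray)
print(pixel_values.shape)  # (3, 224, 224)
```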

For the model to run efficiently, images have to be batched. To do that, we define the vit_data_collator function that returns batches of images and labels in a dictionary, following the default_data_collator pattern in Transformers Data Collator.
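A collator of this kind stacks per-example arrays along a new batch dimension and returns them under the keys the model's forward pass expects. The sketch below reuses the vit_data_collator name from the text but is our own NumPy stand-in. In the PyTorch pipeline, torch.stack and torch.tensor would take the place of the NumPy calls so the model receives tensors. Labels are cast to float32 because the multi-label loss compares them with per-label probabilities.

```python
import numpy as np

def vit_data_collator(examples):
    """Stack a list of examples into one batch dictionary.

    Each example is assumed to hold "pixel_values" of shape (3, 224, 224)
    and an N-hot "labels" list of booleans.
    """
    pixel_values = np.stack([ex["pixel_values"] for ex in examples])
    labels = np.stack([np.asarray(ex["labels"], dtype=np.float32) for ex in examples])
    return {"pixel_values": pixel_values, "labels": labels}

# Two hypothetical pre-processed examples with three labels each.
examples = [
    {"pixel_values": np.zeros((3, 224, 224), dtype=np.float32), "labels": [True, False, True]},
    {"pixel_values": np.ones((3, 224, 224), dtype=np.float32), "labels": [False, False, True]},
]
batch = vit_data_collator(examples)
print(batch["pixel_values"].shape, batch["labels"].shape)  # (2, 3, 224, 224) (2, 3)
```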

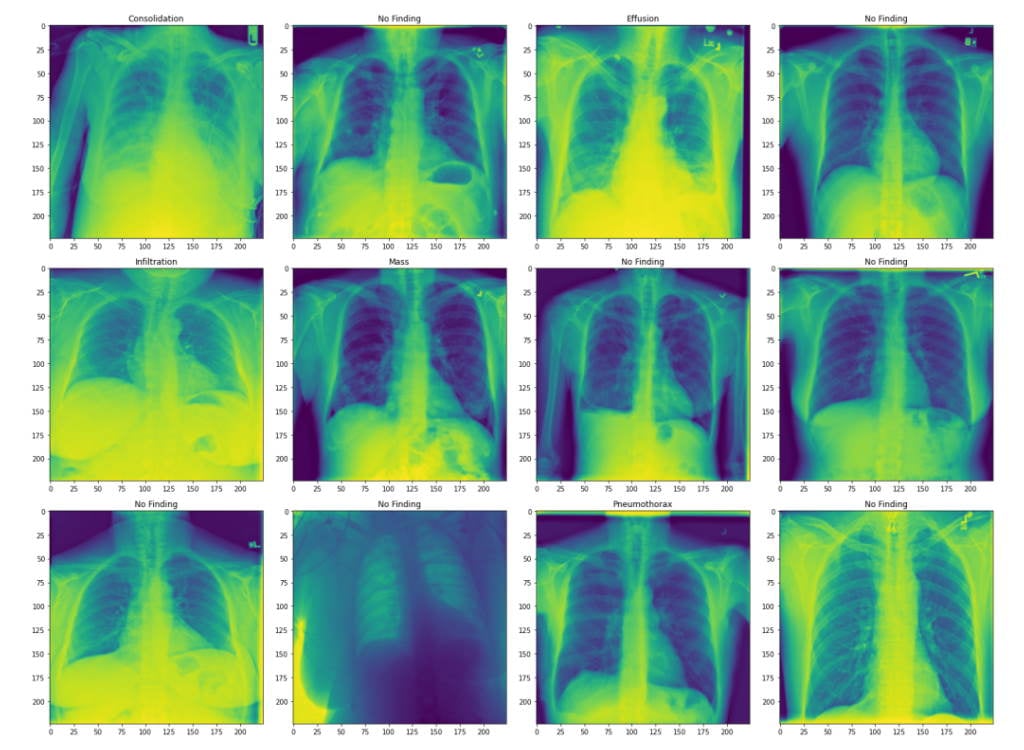

Visualising the dataset

To examine the dataset, we display the first 10 rows of metadata.

Let’s also plot some images from the validation set with their associated labels.

The images are chest X-rays labelled with the lung diseases the patient was diagnosed with. Here, we show the transformed images.

Our dataset is now ready to be used.

Preparing the model

To train a model on the IPU, we need to import it from the Hugging Face Hub and define a trainer using the IPUTrainer class. The IPUTrainer class takes the same arguments as the original Transformers Trainer and works in tandem with the IPUConfig object, which specifies the behaviour for compilation and execution on the IPU.

Now we import the ViT model from Hugging Face.

To use this model on the IPU, we need to load the IPU configuration, IPUConfig, which gives control over all the parameters specific to Graphcore IPUs (existing IPU configs can be found here). We are going to use Graphcore/vit-base-ipu.

Let’s set our training hyperparameters using IPUTrainingArguments. This subclasses the Hugging Face TrainingArguments class, adding parameters specific to the IPU and its execution characteristics.

Implementing a custom performance metric for evaluation

The performance of multi-label classification models can be assessed using the area under the ROC (receiver operating characteristic) curve (AUC_ROC). The ROC curve plots the true positive rate (TPR) against the false positive rate (FPR) of each class at different threshold values, and AUC_ROC is the area under that curve. It is a commonly used performance metric for multi-label classification tasks because it is insensitive to class imbalance and easy to interpret.
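The area under the ROC curve has an equivalent pairwise reading that makes scores easier to interpret. For a class $c$, let $P_c$ be the images that carry label $c$, $N_c$ the images that do not, and $s_i$ the model's score for label $c$ on image $i$. Then $\mathrm{AUC}_c$ is the fraction of positive/negative pairs the model ranks correctly, with ties counted as half. Averaging equally over the $C$ labels (macro averaging) is our assumption for how a single multi-label figure is reported:

$$
\mathrm{AUC}_c = \frac{1}{|P_c|\,|N_c|} \sum_{i \in P_c} \sum_{j \in N_c} \left( \mathbb{1}[s_i > s_j] + \tfrac{1}{2}\,\mathbb{1}[s_i = s_j] \right),
\qquad
\mathrm{AUC\_ROC} = \frac{1}{C} \sum_{c=1}^{C} \mathrm{AUC}_c
$$

A model that ranks images at random gets each pair right with probability 1/2, so its expected $\mathrm{AUC}_c$ is 0.5. A model that scores every positive above every negative gets exactly 1.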

For this dataset, the AUC_ROC represents the ability of the model to separate the different diseases. A score of 0.5 means the model separates them no better than chance, and a score of 1 means it can separate them perfectly. This metric is not available in Datasets, so we need to implement it ourselves. The Hugging Face Datasets package allows custom metric calculation through the load_metric() function. We define a compute_metrics function and expose it to the Transformers evaluation function, just like the other metrics supported through the Datasets package. The compute_metrics function takes the labels predicted by the ViT model and computes the area under the ROC curve. It receives an EvalPrediction object (a named tuple with predictions and label_ids fields) and must return a dictionary mapping strings to floats.
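One way to implement this without extra dependencies is to count correctly ordered positive/negative pairs per label and average across labels. The sketch below is not the tutorial's own implementation. It assumes predictions are raw logits of shape (num_images, num_labels) and label_ids are the matching N-hot arrays, and it uses a small named tuple in place of Transformers' EvalPrediction, which exposes the same two fields. Labels with no positive or no negative examples are skipped, because AUC is undefined for them.

```python
from collections import namedtuple

import numpy as np

def roc_auc(scores: np.ndarray, labels: np.ndarray) -> float:
    """ROC AUC for one label via pairwise (Mann-Whitney) counting."""
    pos, neg = scores[labels == 1], scores[labels == 0]
    if len(pos) == 0 or len(neg) == 0:
        return float("nan")  # undefined when only one class is present
    # Fraction of (positive, negative) pairs ranked correctly; ties count half.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))

def compute_metrics(eval_pred) -> dict:
    """Return {"roc_auc": macro-averaged AUC} from predictions and label_ids."""
    logits = np.asarray(eval_pred.predictions)
    labels = np.asarray(eval_pred.label_ids)
    # Sigmoid for readability only: AUC depends on ranking, which it preserves.
    scores = 1.0 / (1.0 + np.exp(-logits))
    per_label = [roc_auc(scores[:, c], labels[:, c]) for c in range(labels.shape[1])]
    return {"roc_auc": float(np.nanmean(per_label))}

# Stand-in for transformers' EvalPrediction with the same field names.
EvalPredictionStub = namedtuple("EvalPredictionStub", ["predictions", "label_ids"])
demo = EvalPredictionStub(
    predictions=np.array([[3.0, -2.0], [1.0, 0.5], [-1.0, 2.0], [-2.0, -1.0]]),
    label_ids=np.array([[1, 0], [1, 1], [0, 1], [0, 0]]),
)
print(compute_metrics(demo))  # {'roc_auc': 1.0}
```

The pairwise comparison uses memory proportional to positives times negatives for each label. That is fine for a sketch, but a sort-based rank computation scales better to the full validation set.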

To train the model, we define a trainer using the IPUTrainer class, which takes care of compiling the model to run on IPUs and of performing training and evaluation. The IPUTrainer class works just like the Hugging Face Trainer class, but takes the additional ipu_config argument.

Running the training

To speed up training we are going to load the last checkpoint if it exists.
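Transformers already ships a helper for this, get_last_checkpoint in transformers.trainer_utils. The standard-library sketch below shows the same idea: the Trainer saves checkpoints as checkpoint-<step> folders, and we pick the highest step numerically rather than alphabetically, since "checkpoint-500" sorts after "checkpoint-1500" as a string. On the standard Trainer, the result is passed as trainer.train(resume_from_checkpoint=last_checkpoint), where None means starting from scratch. We assume IPUTrainer behaves the same way because it mirrors the Trainer API.

```python
import os
import re
import tempfile

# Matches folder names such as "checkpoint-1500".
_CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")

def find_last_checkpoint(output_dir: str):
    """Return the path of the highest-numbered checkpoint-<step> folder, or None."""
    if not os.path.isdir(output_dir):
        return None
    steps = []
    for name in os.listdir(output_dir):
        match = _CHECKPOINT_RE.match(name)
        if match and os.path.isdir(os.path.join(output_dir, name)):
            steps.append((int(match.group(1)), name))
    if not steps:
        return None
    return os.path.join(output_dir, max(steps)[1])

# Usage with a temporary folder holding two hypothetical checkpoints.
demo_dir = tempfile.mkdtemp()
for step in (500, 1500):
    os.makedirs(os.path.join(demo_dir, f"checkpoint-{step}"))
last_checkpoint = find_last_checkpoint(demo_dir)
```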

Now we are ready to train.

Plotting convergence

Now that we have completed training, we can format and plot the trainer output to evaluate the training behaviour.

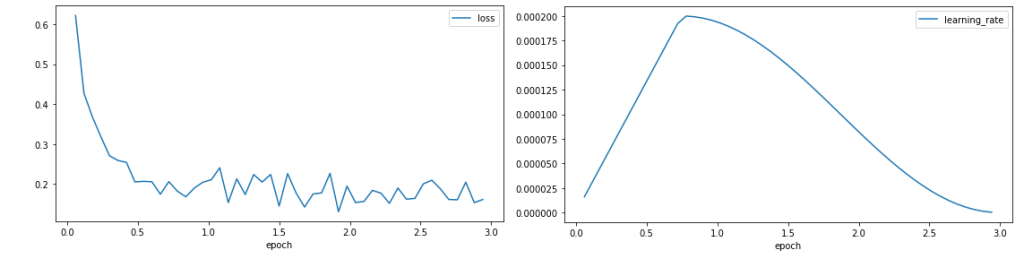

We plot the training loss and the learning rate.

The loss curve shows a rapid reduction in the loss at the start of training before stabilising around 0.1, showing that the model is learning. The learning rate increases during a warm-up covering the first 25% of the training period, then follows a cosine decay.
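The schedule described above, a linear warm-up over the first 25% of steps followed by a cosine decay to zero, can be written as a small function. This sketch assumes the same shape as Transformers' get_cosine_schedule_with_warmup with its default half-cycle. The 1,000-step run and 3e-4 peak learning rate are hypothetical values for illustration, not the tutorial's settings.

```python
import math

def lr_at(step: int, total_steps: int, peak_lr: float, warmup_ratio: float = 0.25) -> float:
    """Linear warm-up to peak_lr, then cosine decay to zero."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    # Fraction of the post-warm-up phase completed, from 0 to 1.
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))

# Hypothetical run: 1,000 steps with a peak learning rate of 3e-4.
schedule = [lr_at(s, 1000, 3e-4) for s in range(1001)]
```

Plotting schedule should reproduce the learning-rate curve's shape: a straight ramp peaking at step 250, half the peak at step 625, and zero at the end.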

Running the evaluation

Now that we have trained the model, we can evaluate its ability to predict the labels of unseen data using the validation dataset.

The metrics show the validation AUC_ROC score the tutorial achieves after 3 epochs.

There are several directions to explore to improve the accuracy of the model, including longer training. The validation performance might also be improved by changing the optimiser, learning rate, learning rate schedule, or loss scaling, or by using automatic loss scaling.

Try Hugging Face Optimum on IPUs for free

In this post, we have introduced ViT models and provided a tutorial for training a Hugging Face Optimum model on the IPU using a local dataset.

The entire process outlined above can now be run end-to-end within minutes for free, thanks to Graphcore's new partnership with Paperspace. Launching today, the service will provide access to a selection of Hugging Face Optimum models powered by Graphcore IPUs within Gradient, Paperspace's web-based Jupyter notebooks.

If you're interested in trying Hugging Face Optimum with IPUs on Paperspace Gradient, including ViT, BERT, RoBERTa and more, you can sign up here and find a getting started guide here.

More Resources for Hugging Face Optimum on IPUs

This deep dive would not have been possible without extensive support, guidance, and insights from Eva Woodbridge, James Briggs, Jinchen Ge, Alexandre Payot, Thorin Farnsworth, and all others contributing from Graphcore, as well as Jeff Boudier, Julien Simon, and Michael Benayoun from Hugging Face.