Large Language Models (LLMs) based on the transformer architecture, like GPT, T5, and BERT have achieved state-of-the-art ends in various Natural Language Processing (NLP) tasks. They’ve also began foraying into other domains, comparable to Computer Vision (CV) (VIT, Stable Diffusion, LayoutLM) and Audio (Whisper, XLS-R). The traditional paradigm is large-scale pretraining on generic web-scale data, followed by fine-tuning to downstream tasks. Positive-tuning these pretrained LLMs on downstream datasets ends in huge performance gains compared to using the pretrained LLMs out-of-the-box (zero-shot inference, for instance).

Nevertheless, as models get larger and bigger, full fine-tuning becomes infeasible to coach on consumer hardware. As well as, storing and deploying fine-tuned models independently for every downstream task becomes very expensive, because fine-tuned models are the identical size as the unique pretrained model. Parameter-Efficient Positive-tuning (PEFT) approaches are meant to deal with each problems!

PEFT approaches only fine-tune a small variety of (extra) model parameters while freezing most parameters of the pretrained LLMs, thereby greatly decreasing the computational and storage costs. This also overcomes the problems of catastrophic forgetting, a behaviour observed through the full finetuning of LLMs. PEFT approaches have also shown to be higher than fine-tuning within the low-data regimes and generalize higher to out-of-domain scenarios. It may be applied to numerous modalities, e.g., image classification and stable diffusion dreambooth.

It also helps in portability wherein users can tune models using PEFT methods to get tiny checkpoints value just a few MBs in comparison with the big checkpoints of full fine-tuning, e.g., bigscience/mt0-xxl takes up 40GB of storage and full fine-tuning will result in 40GB checkpoints for every downstream dataset whereas using PEFT methods it might be just just a few MBs for every downstream dataset all of the while achieving comparable performance to full fine-tuning. The small trained weights from PEFT approaches are added on top of the pretrained LLM. So the identical LLM will be used for multiple tasks by adding small weights without having to exchange the whole model.

In brief, PEFT approaches enable you to get performance comparable to full fine-tuning while only having a small variety of trainable parameters.

Today, we’re excited to introduce the 🤗 PEFT library, which provides the most recent Parameter-Efficient Positive-tuning techniques seamlessly integrated with 🤗 Transformers and 🤗 Speed up. This allows using the preferred and performant models from Transformers coupled with the simplicity and scalability of Speed up. Below are the currently supported PEFT methods, with more coming soon:

- LoRA: LORA: LOW-RANK ADAPTATION OF LARGE LANGUAGE MODELS

- Prefix Tuning: P-Tuning v2: Prompt Tuning Can Be Comparable to Positive-tuning Universally Across Scales and Tasks

- Prompt Tuning: The Power of Scale for Parameter-Efficient Prompt Tuning

- P-Tuning: GPT Understands, Too

Use Cases

We explore many interesting use cases here. These are just a few of essentially the most interesting ones:

-

Using 🤗 PEFT LoRA for tuning

bigscience/T0_3Bmodel (3 Billion parameters) on consumer hardware with 11GB of RAM, comparable to Nvidia GeForce RTX 2080 Ti, Nvidia GeForce RTX 3080, etc using 🤗 Speed up’s DeepSpeed integration: peft_lora_seq2seq_accelerate_ds_zero3_offload.py. This implies you possibly can tune such large LLMs in Google Colab. -

Taking the previous example a notch up by enabling INT8 tuning of the

OPT-6.7bmodel (6.7 Billion parameters) in Google Colab using 🤗 PEFT LoRA and bitsandbytes: -

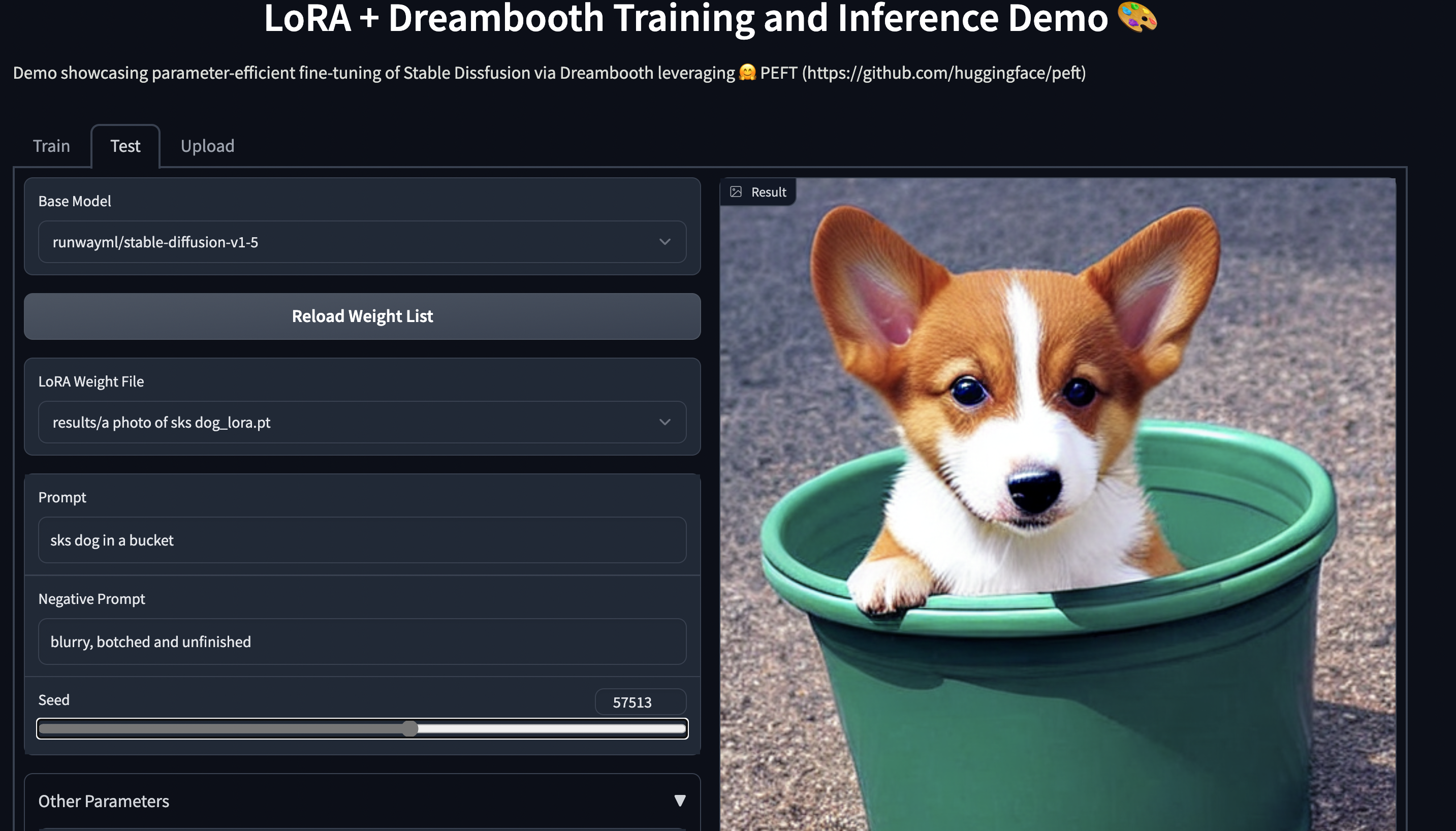

Stable Diffusion Dreambooth training using 🤗 PEFT on consumer hardware with 11GB of RAM, comparable to Nvidia GeForce RTX 2080 Ti, Nvidia GeForce RTX 3080, etc. Check out the Space demo, which should run seamlessly on a T4 instance (16GB GPU): smangrul/peft-lora-sd-dreambooth.

PEFT LoRA Dreambooth Gradio Space

Training your model using 🤗 PEFT

Let’s consider the case of fine-tuning bigscience/mt0-large using LoRA.

- Let’s get the crucial imports

from transformers import AutoModelForSeq2SeqLM

+ from peft import get_peft_model, LoraConfig, TaskType

model_name_or_path = "bigscience/mt0-large"

tokenizer_name_or_path = "bigscience/mt0-large"

- Creating config corresponding to the PEFT method

peft_config = LoraConfig(

task_type=TaskType.SEQ_2_SEQ_LM, inference_mode=False, r=8, lora_alpha=32, lora_dropout=0.1

)

- Wrapping base 🤗 Transformers model by calling

get_peft_model

model = AutoModelForSeq2SeqLM.from_pretrained(model_name_or_path)

+ model = get_peft_model(model, peft_config)

+ model.print_trainable_parameters()

# output: trainable params: 2359296 || all params: 1231940608 || trainable%: 0.19151053100118282

That is it! The remainder of the training loop stays the identical. Please refer example peft_lora_seq2seq.ipynb for an end-to-end example.

- If you end up ready to avoid wasting the model for inference, just do the next.

model.save_pretrained("output_dir")

This may only save the incremental PEFT weights that were trained. For instance, yow will discover the bigscience/T0_3B tuned using LoRA on the twitter_complaints raft dataset here: smangrul/twitter_complaints_bigscience_T0_3B_LORA_SEQ_2_SEQ_LM. Notice that it only accommodates 2 files: adapter_config.json and adapter_model.bin with the latter being just 19MB.

- To load it for inference, follow the snippet below:

from transformers import AutoModelForSeq2SeqLM

+ from peft import PeftModel, PeftConfig

peft_model_id = "smangrul/twitter_complaints_bigscience_T0_3B_LORA_SEQ_2_SEQ_LM"

config = PeftConfig.from_pretrained(peft_model_id)

model = AutoModelForSeq2SeqLM.from_pretrained(config.base_model_name_or_path)

+ model = PeftModel.from_pretrained(model, peft_model_id)

tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

model = model.to(device)

model.eval()

inputs = tokenizer("Tweet text : @HondaCustSvc Your customer support has been horrible through the recall process. I won't ever purchase a Honda again. Label :", return_tensors="pt")

with torch.no_grad():

outputs = model.generate(input_ids=inputs["input_ids"].to("cuda"), max_new_tokens=10)

print(tokenizer.batch_decode(outputs.detach().cpu().numpy(), skip_special_tokens=True)[0])

# 'criticism'

Next steps

We have released PEFT as an efficient way of tuning large LLMs on downstream tasks and domains, saving numerous compute and storage while achieving comparable performance to full finetuning. In the approaching months, we’ll be exploring more PEFT methods, comparable to (IA)3 and bottleneck adapters. Also, we’ll deal with recent use cases comparable to INT8 training of whisper-large model in Google Colab and tuning of RLHF components comparable to policy and ranker using PEFT approaches.

Within the meantime, we’re excited to see how industry practitioners apply PEFT to their use cases – if you have got any questions or feedback, open a difficulty on our GitHub repo 🤗.

Pleased Parameter-Efficient Positive-Tuning!