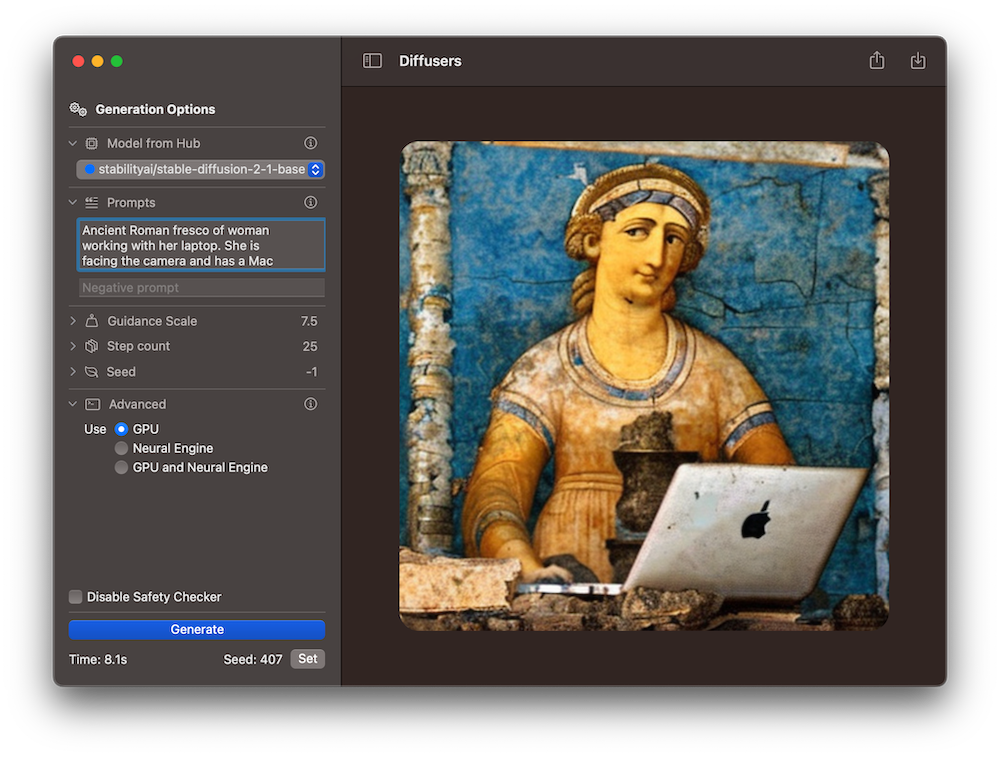

Transform your text into stunning images with ease using Diffusers for Mac, a native app powered by state-of-the-art diffusion models. It leverages a bouquet of SoTA text-to-image models contributed by the community to the Hugging Face Hub and converted to Core ML for blazingly fast performance. Our latest version, 1.1, is now available on the Mac App Store with significant performance upgrades and user-friendly interface tweaks. It is a solid foundation for future feature updates. Plus, the app is fully open source with a permissive license, so you can build on it too! Check out our GitHub repository at https://github.com/huggingface/swift-coreml-diffusers for more information.

What exactly is 🧨Diffusers for Mac anyway?

The Diffusers app (App Store, source code) is the Mac counterpart to our 🧨diffusers library. This library is written in Python with PyTorch, and uses a modular design to train and run diffusion models. It supports many different models and tasks, and is highly configurable and well optimized. It runs on Mac, too, using PyTorch’s mps accelerator, which is the Apple Silicon alternative to cuda.

Why would you want to run a native Mac app then? There are many reasons:
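As a minimal sketch of what that means on the Python side, the snippet below picks a PyTorch device string, preferring cuda, then mps, then the CPU. The helper name `pick_device` is hypothetical and used only for illustration; `torch.cuda.is_available()` and `torch.backends.mps.is_available()` are the PyTorch calls it relies on, and the code falls back to the CPU if PyTorch is missing or too old to include the mps backend.

```python
def pick_device(has_cuda: bool, has_mps: bool) -> str:
    """Return a PyTorch device string (hypothetical helper, for illustration)."""
    if has_cuda:
        return "cuda"  # NVIDIA GPUs
    if has_mps:
        return "mps"   # Apple Silicon GPU through Metal Performance Shaders
    return "cpu"


try:
    import torch

    device = pick_device(
        torch.cuda.is_available(),
        torch.backends.mps.is_available(),
    )
except (ImportError, AttributeError):
    # PyTorch is not installed, or this build predates the mps backend.
    device = pick_device(False, False)

print(f"Selected device: {device}")
```

The resulting string is what you would typically pass to `.to(device)` on a model or pipeline.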

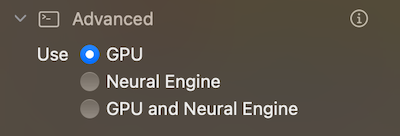

- It uses Core ML models instead of the original PyTorch ones. This is important because they allow for additional optimizations relevant to the specifics of Apple hardware, and because Core ML models can run on all the compute devices in your system: the CPU, the GPU and the Neural Engine. The Core ML framework decides which portions of your model run on each device to make it as fast as possible. PyTorch’s mps device cannot use the Neural Engine.

- It is a Mac app! We try to follow Apple’s design language and guidelines so it feels at home on your Mac. No need to use the command line, create virtual environments or fix dependencies.

- It’s local and private. You don’t need credits for online services and won’t experience long queues: just generate all the images you want and use them for fun or work. Privacy is guaranteed: your prompts and images are yours to use, and will never leave your computer (unless you choose to share them).

- It’s open source, and it uses Swift, SwiftUI and the latest languages and technologies for Mac and iOS development. If you are technically inclined, you can use Xcode to extend the code as you like. We welcome your contributions, too!

Performance Benchmarks

TL;DR: Depending on your computer, text-to-image generation can be up to twice as fast on Diffusers 1.1. ⚡️

We’ve done a lot of testing on several Macs to determine the best combinations of compute devices that yield optimum performance. For some computers it’s best to use the GPU, while others work better when the Neural Engine, or ANE, is engaged.

Check out our benchmarks. All the combinations use the CPU in addition to either the GPU or the ANE.

| Model name | Benchmark | M1 8 GB | M1 16 GB | M2 24 GB | M1 Max 64 GB |
|---|---|---|---|---|---|
| Cores (performance/GPU/ANE) | | 4/8/16 | 4/8/16 | 4/8/16 | 8/32/16 |
| Stable Diffusion 1.5 | GPU | 32.9 | 32.8 | 21.9 | 9 |
| | ANE | 18.8 | 18.7 | 13.1 | 20.4 |
| Stable Diffusion 2 Base | GPU | 30.2 | 30.2 | 19.4 | 8.3 |
| | ANE | 14.5 | 14.4 | 10.5 | 15.3 |
| Stable Diffusion 2.1 Base | GPU | 29.6 | 29.4 | 19.5 | 8.3 |
| | ANE | 14.3 | 14.3 | 10.5 | 15.3 |
| OFA-Sys/small-stable-diffusion-v0 | GPU | 22.1 | 22.5 | 14.5 | 6.3 |
| | ANE | 12.3 | 12.7 | 9.1 | 13.2 |

We found that the amount of memory does not seem to play a big factor in performance, but the number of CPU and GPU cores does. For example, on an M1 Max laptop, generation with the GPU is a lot faster than with the ANE. That’s likely because it has 4 times the number of GPU cores (and twice as many CPU performance cores) as the standard M1 processor, for the same number of Neural Engine cores. Conversely, the standard M1 processors found in Mac Minis are twice as fast using the ANE than the GPU. Interestingly, we tested using both the GPU and ANE accelerators together, and found that it does not improve performance with respect to the best results obtained with just one of them. The cut point seems to be around the hardware characteristics of the M1 Pro chip (8 performance cores, 14 or 16 GPU cores), which we don’t have access to at the moment.
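To make the comparison concrete, here is a small sketch that works out, for each machine, which accelerator wins and by what factor. The numbers are copied from the benchmark table; reading them as generation times where lower is faster is our assumption, consistent with the discussion above. The names `BENCHMARKS`, `MACHINES` and `best_units` are hypothetical and exist only for this example.

```python
# Values copied from the benchmark table (assumed: lower is faster).
MACHINES = ["M1 8 GB", "M1 16 GB", "M2 24 GB", "M1 Max 64 GB"]

BENCHMARKS = {
    "Stable Diffusion 1.5": {
        "GPU": [32.9, 32.8, 21.9, 9.0],
        "ANE": [18.8, 18.7, 13.1, 20.4],
    },
    "Stable Diffusion 2 Base": {
        "GPU": [30.2, 30.2, 19.4, 8.3],
        "ANE": [14.5, 14.4, 10.5, 15.3],
    },
}


def best_units(model: str) -> dict:
    """Map each machine to (faster accelerator, speedup over the slower one)."""
    rows = BENCHMARKS[model]
    result = {}
    for i, machine in enumerate(MACHINES):
        gpu, ane = rows["GPU"][i], rows["ANE"][i]
        winner = "GPU" if gpu < ane else "ANE"
        speedup = max(gpu, ane) / min(gpu, ane)
        result[machine] = (winner, round(speedup, 2))
    return result


for model in BENCHMARKS:
    print(model)
    for machine, (winner, speedup) in best_units(model).items():
        print(f"  {machine}: {winner} is {speedup}x faster")
```

On the standard M1, the ANE comes out roughly 1.75x to 2x ahead depending on the model, while on the M1 Max the GPU leads by a similar margin.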

🧨Diffusers version 1.1 automatically selects the best accelerator based on the computer where the app runs. Some device configurations, like the “Pro” variants, are not offered by any cloud services we know of, so our heuristics could be improved for them. If you’d like to help us gather data to keep improving the out-of-the-box experience of our app, read on!
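One way such a selection could look is sketched below. This is not the app’s actual code: the `Chip` type, the `pick_compute_units` function and, above all, the `GPU_CORE_THRESHOLD` value are assumptions made for illustration. The threshold is a guess placed near the M1 Pro’s GPU core count, which the benchmarks above did not cover. The returned strings mirror the names of Core ML’s `MLComputeUnits` cases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    """Hypothetical description of an Apple Silicon chip."""
    name: str
    performance_cores: int
    gpu_cores: int
    ane_cores: int


# ASSUMPTION: an untested guess. The measured data only shows that
# 8 GPU cores favor the ANE and 32 GPU cores favor the GPU.
GPU_CORE_THRESHOLD = 16


def pick_compute_units(chip: Chip) -> str:
    """Pick GPU or Neural Engine (the CPU is always used alongside)."""
    if chip.gpu_cores >= GPU_CORE_THRESHOLD:
        return "cpuAndGPU"
    return "cpuAndNeuralEngine"


m1 = Chip("M1", performance_cores=4, gpu_cores=8, ane_cores=16)
m1_max = Chip("M1 Max", performance_cores=8, gpu_cores=32, ane_cores=16)

print(m1.name, "->", pick_compute_units(m1))
print(m1_max.name, "->", pick_compute_units(m1_max))
```

A rule this simple matches the two measured extremes, but where exactly the boundary falls for the “Pro” chips is the open question that community benchmarks can answer.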

Community Call for Benchmark Data

We are interested in running more comprehensive performance benchmarks on Mac devices. If you’d like to help, we’ve created this GitHub issue where you can post your results. We’ll use them to optimize performance in an upcoming version of the app. We are particularly interested in M1 Pro, M2 Pro and M2 Max architectures 🤗

Other Improvements in Version 1.1

In addition to the performance optimization and fixing a few bugs, we have focused on adding new features while trying to keep the UI as simple and clean as possible. Most of them are obvious (guidance scale, optionally disabling the safety checker, allowing generations to be canceled). Our favorite ones are the model download indicators, and a shortcut to reuse the seed from a previous generation in order to tweak the generation parameters.

Version 1.1 also includes additional information about what the different generation settings do. We want 🧨Diffusers for Mac to make image generation as approachable as possible to all Mac users, not just technologists.
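Why is reusing a seed useful? A seeded random generator always produces the same starting noise, so any change in the output comes from the parameters you tweaked rather than from chance. The toy sketch below illustrates the idea with Python’s standard `random` module. It is a stand-in, not the app’s implementation, and `initial_noise` is a hypothetical helper.

```python
import random


def initial_noise(seed: int, size: int = 4) -> list:
    """Draw `size` Gaussian samples from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


first = initial_noise(seed=42)
again = initial_noise(seed=42)  # same seed: identical starting noise
other = initial_noise(seed=43)  # different seed: different starting noise

print("Same seed reproduces the noise:", first == again)
print("Different seed changes it:", first != other)
```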

Next Steps

We believe there’s a lot of untapped potential for image generation in the Apple ecosystem. In future updates we want to focus on the following:

- Easy access to additional models from the Hub. Run any Dreambooth or fine-tuned model from the app, in a Mac-like way.

- Release a version for iOS and iPadOS.

There are many more ideas that we are considering. If you’d like to suggest your own, you are most welcome to do so in our GitHub repo.