Ever since Stable Diffusion took the world by storm, people have been looking for ways to have more control over the results of the generation process. ControlNet provides a minimal interface allowing users to customize the generation process to a great extent. With ControlNet, users can easily condition the generation with different spatial contexts such as a depth map, a segmentation map, a scribble, keypoints, and so on!

We can turn a cartoon drawing into a realistic photo with incredible coherence.

| Realistic Lofi Girl |

|---|

|

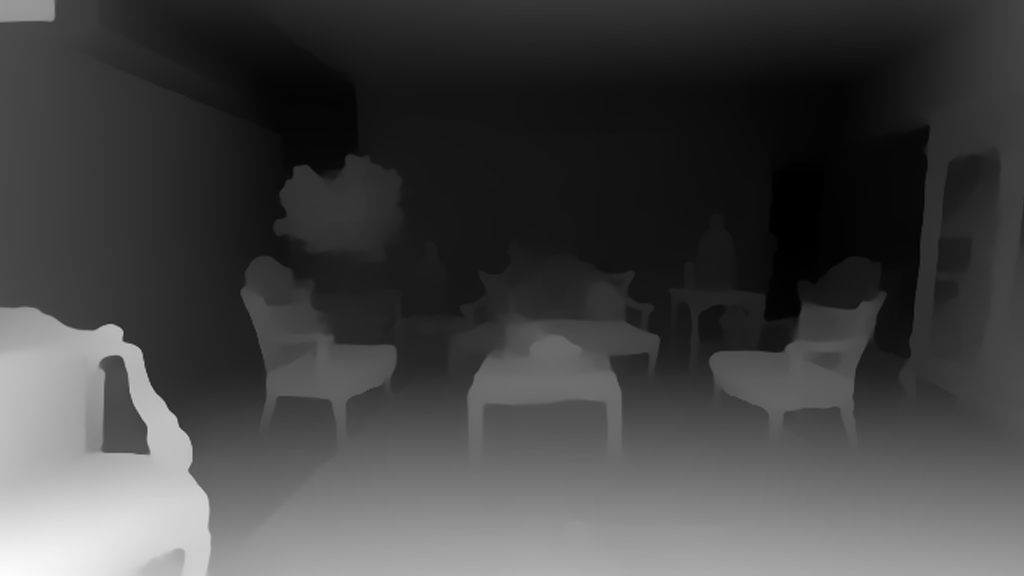

And even use it as your interior designer.

| Before | After |
|---|---|
| | |

You can turn your sketch scribble into an artistic drawing.

| Before | After |
|---|---|
| | |

Also, make some of the famous logos come to life.

| Before | After |
|---|---|
| | |

With ControlNet, the sky is the limit 🌠

In this blog post, we first introduce the StableDiffusionControlNetPipeline and then show how it can be applied for various control conditionings. Let’s get controlling!

ControlNet: TL;DR

ControlNet was introduced in Adding Conditional Control to Text-to-Image Diffusion Models by Lvmin Zhang and Maneesh Agrawala.

It introduces a framework that supports various spatial contexts that can serve as additional conditionings to Diffusion models such as Stable Diffusion.

The diffusers implementation is adapted from the original source code.

Training ControlNet consists of the following steps:

- Cloning the pre-trained parameters of a Diffusion model, such as Stable Diffusion’s latent UNet (referred to as the “trainable copy”), while also maintaining the pre-trained parameters separately (the “locked copy”). This is done so that the locked parameter copy can preserve the vast knowledge learned from a large dataset, whereas the trainable copy is employed to learn task-specific aspects.

- The trainable and locked copies of the parameters are connected via “zero convolution” layers (see here for more information), which are optimized as part of the ControlNet framework. This is a training trick to preserve the semantics already learned by the frozen model as the new conditions are trained.
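The effect of the zero-initialized layers can be illustrated with a toy sketch. This is a minimal pure-Python illustration, not the diffusers implementation: the dense `linear` layers stand in for real network blocks, and `make_layer` and `controlnet_block` are hypothetical names. It assumes the combination rule described in the ControlNet paper, where the conditioning enters the trainable copy through one zero-initialized layer and the trainable copy's output is added back to the locked output through another.

```python
import random


def make_layer(dim, zero_init=False, seed=0):
    """Return (weights, bias) for a dim x dim layer; all zeros if zero_init."""
    if zero_init:
        return [[0.0] * dim for _ in range(dim)], [0.0] * dim
    rng = random.Random(seed)
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]
    bias = [rng.uniform(-1, 1) for _ in range(dim)]
    return weights, bias


def linear(layer, x):
    """Apply y = W x + b (a 1x1 convolution acting on a single pixel)."""
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def controlnet_block(x, cond, locked, trainable, zero_in, zero_out):
    """Locked output plus the trainable copy's output, routed through zero layers."""
    locked_out = linear(locked, x)
    cond_in = linear(zero_in, cond)
    trainable_out = linear(trainable, [a + b for a, b in zip(x, cond_in)])
    residual = linear(zero_out, trainable_out)
    return [a + b for a, b in zip(locked_out, residual)]


dim = 4
locked = make_layer(dim, seed=1)
trainable = make_layer(dim, seed=1)  # the trainable copy starts as a clone of the locked weights
zero_in = make_layer(dim, zero_init=True)
zero_out = make_layer(dim, zero_init=True)

x = [0.5, -1.0, 2.0, 0.0]
cond = [1.0, 1.0, 0.0, -1.0]

# Before any training step, the zero layers cancel the ControlNet branch entirely.
initial = controlnet_block(x, cond, locked, trainable, zero_in, zero_out)
print(initial == linear(locked, x))
```

In this sketch the combined output equals the locked model's output before training starts, which is why adding a new condition does not disturb what the frozen model already knows at the beginning of training.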

Pictorially, training a ControlNet looks like so:

The diagram is taken from here.

A sample from the training set for ControlNet-like training looks like this (additional conditioning is via edge maps):

| Prompt | Original Image | Conditioning |
|---|---|---|
| “bird” | | |

Similarly, if we were to condition ControlNet with semantic segmentation maps, a training sample would look like so:

| Prompt | Original Image | Conditioning |
|---|---|---|
| “big house” | | |

Every new type of conditioning requires training a new copy of ControlNet weights.

The paper proposed 8 different conditioning models which are all supported in Diffusers!

For inference, both the pre-trained diffusion model weights and the trained ControlNet weights are needed. For example, using Stable Diffusion v1-5 with a ControlNet checkpoint requires roughly 700 million more parameters compared to just using the original Stable Diffusion model, which makes ControlNet a bit more memory-expensive for inference.
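As a rough back-of-the-envelope check, the extra parameter count can be translated into memory for the weights alone. The sketch below takes the roughly 700 million figure quoted above at face value and assumes 4 bytes per parameter for float32 and 2 bytes for float16; `weights_size_gb` is a hypothetical helper, and activations or other runtime overhead are not counted.

```python
def weights_size_gb(num_params, bytes_per_param):
    """Approximate memory needed to hold the weights alone, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


extra_params = 700_000_000  # rough figure quoted in the text

extra_fp32_gb = weights_size_gb(extra_params, 4)  # float32: 4 bytes per parameter
extra_fp16_gb = weights_size_gb(extra_params, 2)  # float16: 2 bytes per parameter
print(f"float32: ~{extra_fp32_gb:.1f} GB, float16: ~{extra_fp16_gb:.1f} GB")
```

Under these assumptions the additional weights take about 2.8 GB in float32 and about 1.4 GB in float16.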

Since the pre-trained diffusion models are locked during training, one only needs to switch out the ControlNet parameters when using a different conditioning. This makes it fairly simple to deploy multiple ControlNet weights in a single application, as we’ll see below.

The StableDiffusionControlNetPipeline

Before we begin, we want to give a huge shout-out to the community contributor Takuma Mori for having led the integration of ControlNet into Diffusers ❤️ .

To experiment with ControlNet, Diffusers exposes the StableDiffusionControlNetPipeline, similar to the other Diffusers pipelines. Central to the StableDiffusionControlNetPipeline is the controlnet argument, which lets us provide a particular trained ControlNetModel instance while keeping the pre-trained diffusion model weights the same.

We’ll explore different use cases with the StableDiffusionControlNetPipeline in this blog post. The first ControlNet model we’re going to walk through is the Canny model. This is one of the most popular models, and it generated some of the amazing images you are likely seeing on the internet.

We welcome you to run the code snippets shown in the sections below with this Colab Notebook.

Before we begin, let’s make sure we have all the necessary libraries installed:

pip install diffusers==0.14.0 transformers xformers git+https://github.com/huggingface/accelerate.git

To process different conditionings depending on the chosen ControlNet, we also need to install some additional dependencies:

pip install opencv-contrib-python

pip install controlnet_aux

We’ll use the famous painting “Girl With A Pearl” for this example. So, let’s download the image and take a look:

from diffusers.utils import load_image

image = load_image(

"https://hf.co/datasets/huggingface/documentation-images/resolve/main/diffusers/input_image_vermeer.png"

)

image

Next, we’ll put the image through the canny pre-processor:

import cv2

from PIL import Image

import numpy as np

image = np.array(image)

low_threshold = 100

high_threshold = 200

image = cv2.Canny(image, low_threshold, high_threshold)

image = image[:, :, None]

image = np.concatenate([image, image, image], axis=2)

canny_image = Image.fromarray(image)

canny_image

As we can see, it is essentially edge detection:

Now, we load runwayml/stable-diffusion-v1-5 as well as the ControlNet model for canny edges.

The models are loaded in half-precision (torch.float16) to allow for fast and memory-efficient inference.

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

import torch

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16)

pipe = StableDiffusionControlNetPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16

)

Instead of using Stable Diffusion’s default PNDMScheduler, we use one of the currently fastest diffusion model schedulers, called UniPCMultistepScheduler.

Choosing an improved scheduler can drastically reduce inference time. In our case, we are able to reduce the number of inference steps from 50 to 20 while more or less keeping the same image generation quality. More information regarding schedulers can be found here.

from diffusers import UniPCMultistepScheduler

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

Instead of loading our pipeline directly to GPU, we enable smart CPU offloading, which can be achieved with the enable_model_cpu_offload function.

Remember that during inference, diffusion models such as Stable Diffusion require not only one but multiple model components that are run sequentially.

In the case of Stable Diffusion with ControlNet, we first use the CLIP text encoder, then the diffusion model UNet and ControlNet, then the VAE decoder, and finally run a safety checker.

Most components are only run once during the diffusion process and are thus not required to occupy GPU memory all the time. By enabling smart model offloading, we make sure that each component is only loaded into GPU when it’s needed, so that we can significantly reduce memory consumption without significantly slowing down inference.

Note: When running enable_model_cpu_offload, do not manually move the pipeline to GPU with .to("cuda"). Once CPU offloading is enabled, the pipeline automatically takes care of GPU memory management.

pipe.enable_model_cpu_offload()
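To see why running components sequentially with offloading lowers peak memory, consider the toy accounting below. It is only a sketch: the component sizes are made-up placeholder numbers (not measurements), and `peak_memory` is a hypothetical helper, not a Diffusers API. It assumes that, with offloading, only the components needed for the current stage sit on the GPU, and that the UNet and ControlNet are needed together.

```python
# Hypothetical weight sizes in GB, chosen only for illustration.
component_gb = {
    "text_encoder": 0.25,
    "unet": 1.75,
    "controlnet": 0.75,
    "vae": 0.25,
    "safety_checker": 0.5,
}

# Stages run one after another; the UNet and ControlNet are used together.
stages = [
    ["text_encoder"],
    ["unet", "controlnet"],
    ["vae"],
    ["safety_checker"],
]


def peak_memory(stages, sizes, offload):
    """Peak GPU memory for weights: everything at once, or the largest single stage."""
    if not offload:
        return sum(sizes[name] for stage in stages for name in stage)
    return max(sum(sizes[name] for name in stage) for stage in stages)


print("all on GPU:", peak_memory(stages, component_gb, offload=False))
print("with offloading:", peak_memory(stages, component_gb, offload=True))
```

With these placeholder sizes, keeping everything resident costs the sum of all components, while offloading costs only as much as the most demanding stage.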

Finally, we want to take full advantage of the amazing FlashAttention/xformers attention layer acceleration, so let’s enable this! If this command does not work for you, you might not have xformers correctly installed. In this case, you can just skip the following line of code.

pipe.enable_xformers_memory_efficient_attention()

Now we’re ready to run the ControlNet pipeline!

We still provide a prompt to guide the image generation process, just as we would normally do with a Stable Diffusion image-to-image pipeline. However, ControlNet allows a lot more control over the generated image because we can control the exact composition of the generated image with the canny edge image we just created.

It will be fun to see some images where contemporary celebrities pose for this exact same painting from the 17th century. And it’s really easy to do that with ControlNet: all we have to do is include the names of these celebrities in the prompt!

Let’s first create a simple helper function to display images as a grid.

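def image_grid(imgs, rows, cols):
    # The grid must be filled completely: one image per cell.
    assert len(imgs) == rows * cols

    # All images are assumed to share the size of the first one.
    w, h = imgs[0].size
    grid = Image.new("RGB", size=(cols * w, rows * h))

    # Paste row by row: the column index is i % cols, the row index is i // cols.
    for i, img in enumerate(imgs):
        grid.paste(img, box=(i % cols * w, i // cols * h))
    return grid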
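The only non-obvious part of the helper is how an image index is turned into a paste position. The sketch below isolates that arithmetic in a hypothetical function, `grid_boxes`, which is not part of the original helper; it assumes images of 512 by 512 pixels purely as an example.

```python
def grid_boxes(n, rows, cols, w, h):
    """Top-left paste coordinates for n images laid out row by row."""
    assert n == rows * cols
    return [(i % cols * w, i // cols * h) for i in range(n)]


boxes = grid_boxes(4, rows=2, cols=2, w=512, h=512)
print(boxes)
```

For a 2 by 2 grid this gives (0, 0), (512, 0), (0, 512) and (512, 512): the first two images fill the top row and the last two fill the bottom row.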

Next, we define the input prompts and set a seed for reproducibility.

prompt = ", highest quality, extremely detailed"

prompt = [t + prompt for t in ["Sandra Oh", "Kim Kardashian", "rihanna", "taylor swift"]]

generator = [torch.Generator(device="cpu").manual_seed(2) for i in range(len(prompt))]
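One detail worth noting is that every generator above is created with the same seed, so each prompt starts from the same random state and differences between the resulting images should come from the prompts. The sketch below illustrates the idea with Python's `random.Random` as a stand-in for `torch.Generator` (an assumption made so the example runs without PyTorch); `fake_noise` is a hypothetical helper and does not reproduce how the pipeline actually samples its latents.

```python
import random

names = ["Sandra Oh", "Kim Kardashian", "rihanna", "taylor swift"]
suffix = ", highest quality, extremely detailed"
prompts = [name + suffix for name in names]


def fake_noise(rng, n=3):
    """Stand-in for sampling initial noise from a generator."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


# One independent generator per prompt, all seeded identically.
generators = [random.Random(2) for _ in prompts]
noises = [fake_noise(g) for g in generators]

print(prompts[0])
print(all(noise == noises[0] for noise in noises))
```

Using a different seed per generator would instead give each prompt its own starting noise.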

Finally, we can run the pipeline and display the image!

output = pipe(

prompt,

canny_image,

negative_prompt=["monochrome, lowres, bad anatomy, worst quality, low quality"] * 4,

num_inference_steps=20,

generator=generator,

)

image_grid(output.images, 2, 2)

We can effortlessly combine ControlNet with fine-tuning too! For example, we can fine-tune a model with DreamBooth and use it to render ourselves into different scenes.

In this post, we are going to use our beloved Mr Potato Head as an example to show how to use ControlNet with DreamBooth.

We can use the same ControlNet. However, instead of using Stable Diffusion 1.5, we are going to load the Mr Potato Head model into our pipeline. Mr Potato Head is a Stable Diffusion model fine-tuned with the Mr Potato Head concept using DreamBooth 🥔

Let’s run the above commands again, keeping the same controlnet though!

model_id = "sd-dreambooth-library/mr-potato-head"

pipe = StableDiffusionControlNetPipeline.from_pretrained(

model_id,

controlnet=controlnet,

torch_dtype=torch.float16,

)

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

pipe.enable_model_cpu_offload()

pipe.enable_xformers_memory_efficient_attention()

Now let’s make Mr Potato pose for Johannes Vermeer!

generator = torch.manual_seed(2)

prompt = "a photograph of sks mr potato head, highest quality, extremely detailed"

output = pipe(

prompt,

canny_image,

negative_prompt="monochrome, lowres, bad anatomy, worst quality, low quality",

num_inference_steps=20,

generator=generator,

)

output.images[0]

It is noticeable that Mr Potato Head is not the best candidate, but he tried his best and did a pretty good job of capturing some of the essence 🍟

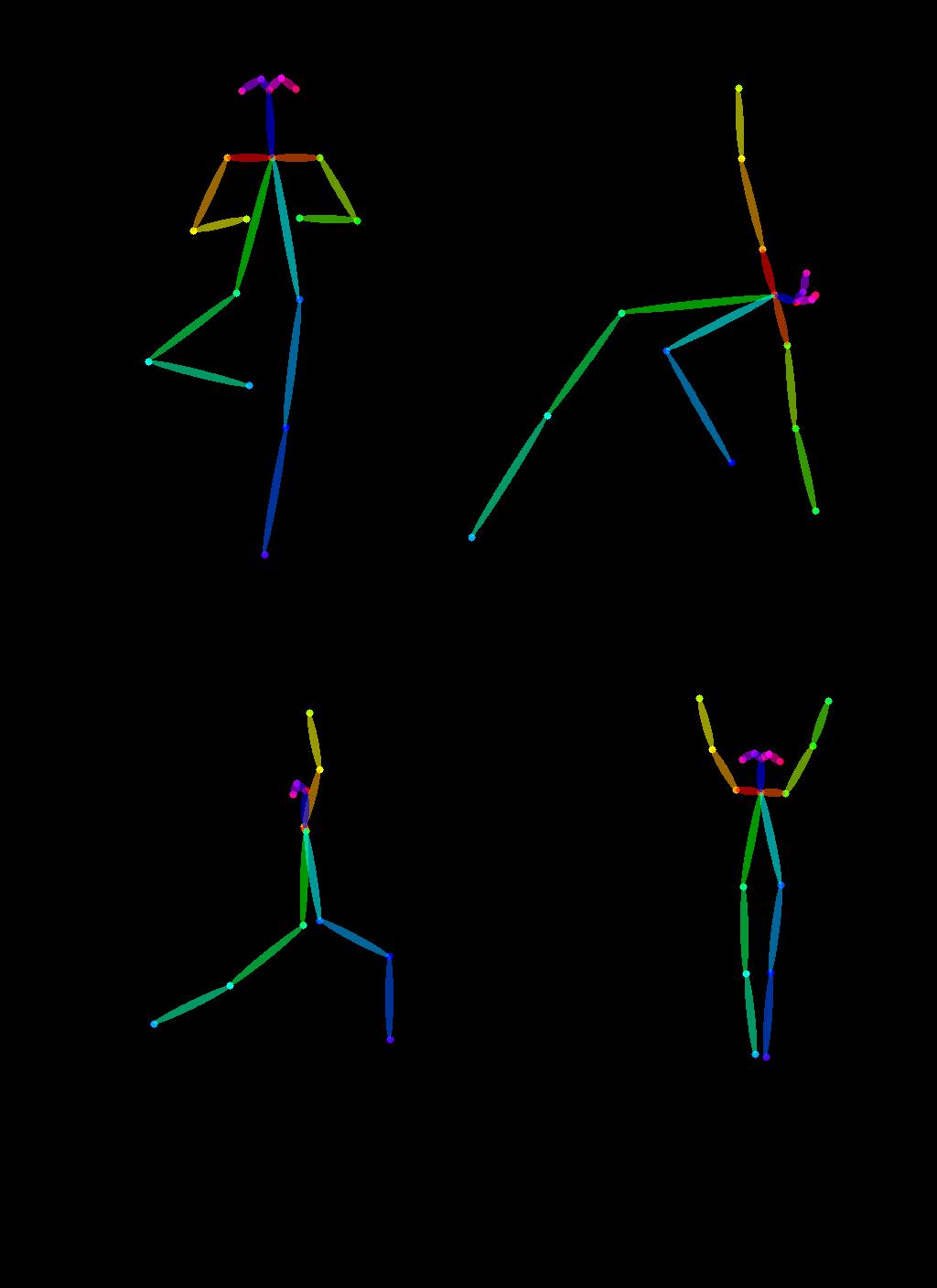

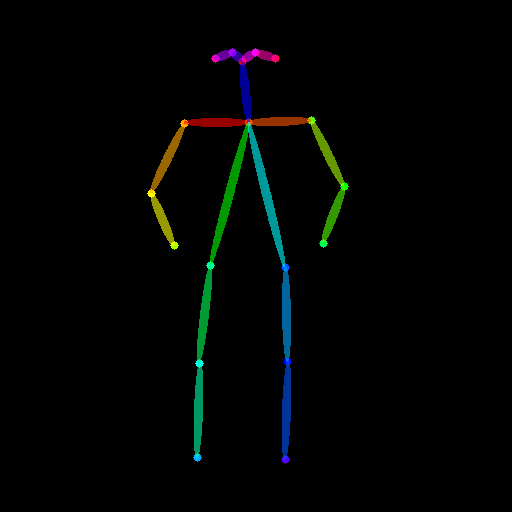

Another exclusive application of ControlNet is that we can take a pose from one image and reuse it to generate a different image with the exact same pose. So in this next example, we are going to teach superheroes how to do yoga using Open Pose ControlNet!

First, we will need to get some images of people doing yoga:

urls = "yoga1.jpeg", "yoga2.jpeg", "yoga3.jpeg", "yoga4.jpeg"

imgs = [

load_image("https://huggingface.co/datasets/YiYiXu/controlnet-testing/resolve/main/" + url)

for url in urls

]

image_grid(imgs, 2, 2)

Now let’s extract yoga poses using the OpenPose pre-processors which are handily available via controlnet_aux.

from controlnet_aux import OpenposeDetector

model = OpenposeDetector.from_pretrained("lllyasviel/ControlNet")

poses = [model(img) for img in imgs]

image_grid(poses, 2, 2)

To use these yoga poses to generate new images, let’s create an Open Pose ControlNet. We’ll generate some super-hero images but in the yoga poses shown above. Let’s go 🚀

controlnet = ControlNetModel.from_pretrained(

"fusing/stable-diffusion-v1-5-controlnet-openpose", torch_dtype=torch.float16

)

model_id = "runwayml/stable-diffusion-v1-5"

pipe = StableDiffusionControlNetPipeline.from_pretrained(

model_id,

controlnet=controlnet,

torch_dtype=torch.float16,

)

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

pipe.enable_model_cpu_offload()

Now it’s yoga time!

generator = [torch.Generator(device="cpu").manual_seed(2) for i in range(4)]

prompt = "super-hero character, highest quality, extremely detailed"

output = pipe(

[prompt] * 4,

poses,

negative_prompt=["monochrome, lowres, bad anatomy, worst quality, low quality"] * 4,

generator=generator,

num_inference_steps=20,

)

image_grid(output.images, 2, 2)

Combining multiple conditionings

Multiple ControlNet conditionings can be combined for a single image generation. Pass a list of ControlNets to the pipeline’s constructor and a corresponding list of conditionings to __call__.

When combining conditionings, it is helpful to mask conditionings such that they do not overlap. In the example, we mask the middle of the canny map where the pose conditioning is located.

It can also be helpful to vary the controlnet_conditioning_scale values to emphasize one conditioning over the other.
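Conceptually, each ControlNet contributes residual features that are weighted by its conditioning scale and then summed. The toy sketch below illustrates that weighting with plain lists; `combine_residuals` is a hypothetical helper and the numbers are invented, so treat it as an illustration of the idea rather than the Diffusers internals.

```python
def combine_residuals(residuals, scales):
    """Scale each ControlNet's residual by its conditioning scale and sum them."""
    assert len(residuals) == len(scales)
    length = len(residuals[0])
    return [
        sum(scale * res[i] for res, scale in zip(residuals, scales))
        for i in range(length)
    ]


pose_residual = [1.0, 2.0, 0.0]
canny_residual = [0.5, 0.0, 1.0]

# The second conditioning is down-weighted relative to the first.
combined = combine_residuals([pose_residual, canny_residual], scales=[1.0, 0.5])
print(combined)
```

Lowering a scale shrinks that conditioning's contribution, which is how one conditioning can be emphasized over the other.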

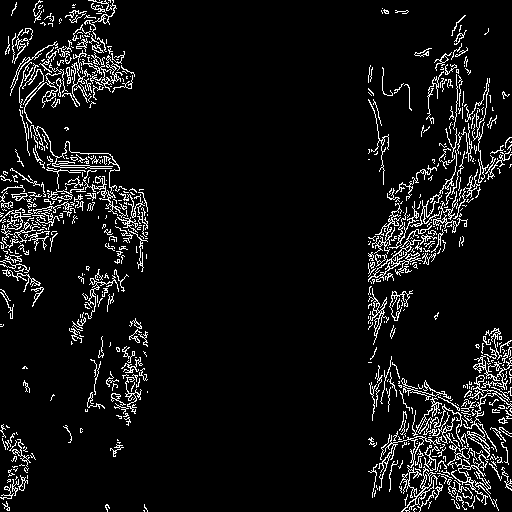

Canny conditioning

The original image

Prepare the conditioning

from diffusers.utils import load_image

from PIL import Image

import cv2

import numpy as np

canny_image = load_image(

"https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/landscape.png"

)

canny_image = np.array(canny_image)

low_threshold = 100

high_threshold = 200

canny_image = cv2.Canny(canny_image, low_threshold, high_threshold)

zero_start = canny_image.shape[1] // 4

zero_end = zero_start + canny_image.shape[1] // 2

canny_image[:, zero_start:zero_end] = 0

canny_image = canny_image[:, :, None]

canny_image = np.concatenate([canny_image, canny_image, canny_image], axis=2)

canny_image = Image.fromarray(canny_image)
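The masking step in the snippet above blanks out the central half of the edge map so that it does not compete with the pose conditioning. The following sketch reproduces only the column arithmetic; `center_mask_range` is a hypothetical helper, and the 512-pixel width is an assumed example value rather than the actual size of the landscape image.

```python
def center_mask_range(width):
    """Column range [start, end) that the masking code sets to zero."""
    zero_start = width // 4
    zero_end = zero_start + width // 2
    return zero_start, zero_end


print(center_mask_range(512))
```

For a width of 512, columns 128 up to (but not including) 384 are zeroed, leaving a quarter of the edge map on each side.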

Openpose conditioning

The original image

Prepare the conditioning

from controlnet_aux import OpenposeDetector

from diffusers.utils import load_image

openpose = OpenposeDetector.from_pretrained("lllyasviel/ControlNet")

openpose_image = load_image(

"https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/person.png"

)

openpose_image = openpose(openpose_image)

Running ControlNet with multiple conditionings

from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler

import torch

controlnet = [

ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-openpose", torch_dtype=torch.float16),

ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny", torch_dtype=torch.float16),

]

pipe = StableDiffusionControlNetPipeline.from_pretrained(

"runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float16

)

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

pipe.enable_xformers_memory_efficient_attention()

pipe.enable_model_cpu_offload()

prompt = "a giant standing in a fantasy landscape, highest quality"

negative_prompt = "monochrome, lowres, bad anatomy, worst quality, low quality"

generator = torch.Generator(device="cpu").manual_seed(1)

images = [openpose_image, canny_image]

image = pipe(

prompt,

images,

num_inference_steps=20,

generator=generator,

negative_prompt=negative_prompt,

controlnet_conditioning_scale=[1.0, 0.8],

).images[0]

image.save("./multi_controlnet_output.png")

Throughout the examples, we explored multiple facets of the StableDiffusionControlNetPipeline to show how easy and intuitive it is to play around with ControlNet via Diffusers. However, we didn’t cover all types of conditionings supported by ControlNet. To learn more about those, we encourage you to check out the respective model documentation pages:

We welcome you to combine these different elements and share your results with @diffuserslib. Be sure to check out the Colab Notebook to take some of the above examples for a spin!

We also showed some techniques to make the generation process faster and more memory-friendly by using a fast scheduler, smart model offloading, and xformers. With these techniques combined, the generation process takes only ~3 seconds on a V100 GPU and consumes just ~4 GB of VRAM for a single image ⚡️ On free services like Google Colab, generation takes about 5s on the default GPU (T4), whereas the original implementation requires 17s to create the same result! Combining all the pieces in the diffusers toolbox is a real superpower 💪

Conclusion

We’ve been playing a lot with StableDiffusionControlNetPipeline, and our experience has been fun so far! We’re excited to see what the community builds on top of this pipeline. If you want to check out other pipelines and techniques supported in Diffusers that allow for controlled generation, check out our official documentation.

If you cannot wait to try out ControlNet directly, we’ve got you covered as well! Simply click on one of the following spaces to play around with ControlNet: