ChatGPT and chatbot-powered applications have captured significant attention within the Natural Language Processing (NLP) domain. The community is constantly looking for strong, reliable and open-source models for their applications and use cases.

The rise of these powerful models stems from the democratization and widespread adoption of transformer-based models, first introduced by Vaswani et al. in 2017. These models significantly outperformed previous SoTA NLP models based on Recurrent Neural Networks (RNNs), which were considered dead after that paper.

In this blog post, we introduce a new architecture, RWKV, that combines the advantages of both RNNs and transformers, and that has recently been integrated into the Hugging Face transformers library.

Overview of the RWKV project

The RWKV project was kicked off and is being led by Bo Peng, who is actively contributing to and maintaining the project. The community, organized in the official Discord channel, is constantly enhancing the project’s artifacts on various topics such as performance (RWKV.cpp, quantization, etc.), scalability (dataset processing & scraping) and research (chat fine-tuning, multi-modal fine-tuning, etc.). The GPUs for training RWKV models are donated by Stability AI.

You can get involved by joining the official Discord channel and learn more about the general ideas behind RWKV in these two blog posts: https://johanwind.github.io/2023/03/23/rwkv_overview.html / https://johanwind.github.io/2023/03/23/rwkv_details.html

Transformer Architecture vs RNNs

The RNN architecture is one of the first widely used neural network architectures for processing a sequence of data, in contrast to classic architectures that take a fixed-size input. It takes as input the current “token” (i.e. the current data point of the data stream) and the previous “state”, and computes the predicted next token and the predicted next state. The new state is then used to compute the prediction of the next token, and so on.
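The loop described above can be sketched in a few lines of plain Python. This is a toy illustration under our own assumptions (random weights, a `tanh` state update, one-hot token inputs, and the hypothetical helper names `rnn_step` and `random_matrix`); it is not how RWKV or any particular library implements an RNN.

```python
import math
import random

def rnn_step(token_vec, state, w_in, w_state, w_out):
    """One recurrent step: (current token, previous state) -> (prediction, new state)."""
    hidden = len(state)
    # The new state mixes the current token with the previous state.
    new_state = [
        math.tanh(
            sum(w_in[i][j] * token_vec[j] for j in range(len(token_vec)))
            + sum(w_state[i][j] * state[j] for j in range(hidden))
        )
        for i in range(hidden)
    ]
    # Scores for the next token are computed from the new state only.
    prediction = [
        sum(w_out[i][j] * new_state[j] for j in range(hidden))
        for i in range(len(w_out))
    ]
    return prediction, new_state

def random_matrix(rows, cols, rng):
    """Hypothetical helper: small random weights, for illustration only."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
vocab_size, hidden_size = 4, 3
w_in = random_matrix(hidden_size, vocab_size, rng)
w_state = random_matrix(hidden_size, hidden_size, rng)
w_out = random_matrix(vocab_size, hidden_size, rng)

state = [0.0] * hidden_size  # the state has a fixed size, whatever the sequence length
for token_id in [0, 2, 1, 3]:
    one_hot = [1.0 if i == token_id else 0.0 for i in range(vocab_size)]
    prediction, state = rnn_step(one_hot, state, w_in, w_state, w_out)
```

Note that the same three weight matrices are reused at every step, and that only `state` is carried from one token to the next.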

An RNN can also be used in different “modes”, which makes it possible to apply RNNs to different scenarios, as described in Andrej Karpathy’s blog post, such as one-to-one (image classification), one-to-many (image captioning), many-to-one (sequence classification), many-to-many (sequence generation), etc.

Because RNNs use the same weights to compute predictions at every step, they struggle to memorize information for long-range sequences due to the vanishing gradient issue. Efforts have been made to address this limitation by introducing new architectures such as LSTMs or GRUs. However, the transformer architecture has proved to be the most effective so far in resolving this issue.
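A rough piece of arithmetic shows why reusing the same weights at every step hurts long-range learning. The sketch below assumes a heavily simplified scalar model in which the gradient is multiplied by the same factor at each step; the factor `0.9` and the function name `gradient_scale` are arbitrary choices for illustration.

```python
def gradient_scale(per_step_factor, num_steps):
    """Magnitude of a gradient after being multiplied by the same factor at every step."""
    return per_step_factor ** num_steps

# With a per-step factor slightly below 1, the signal fades quickly with distance.
short_range = gradient_scale(0.9, 10)    # roughly a third of the signal survives
long_range = gradient_scale(0.9, 100)    # only a tiny fraction survives
print(short_range, long_range)
```

In this simplified picture, a token 100 steps in the past contributes almost nothing to the weight update, which is the intuition behind the vanishing gradient issue.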

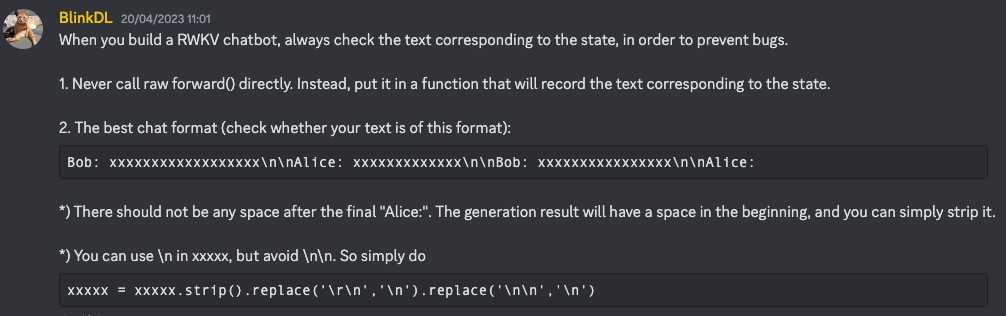

In the transformer architecture, the input tokens are processed simultaneously in the self-attention module. The tokens are first linearly projected into different spaces using the query, key and value weights. The resulting matrices are directly used to compute the attention scores (through softmax, as shown below), which are then multiplied by the value hidden states to obtain the final hidden states. This design enables the architecture to effectively mitigate the long-range sequence issue, and also to perform faster inference and training compared to RNN models.

Formulation of attention scores in RWKV models. Source: RWKV blogpost
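The self-attention computation described above (query, key and value vectors, softmax scores, weighted sum of values) can be sketched in plain Python. This is a minimal single-head illustration that assumes the vectors have already been projected; it omits the causal mask and multi-head logic, and the scaling by the square root of the key dimension follows Vaswani et al. The function names are ours.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    shifted = [s - max(scores) for s in scores]
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Single-head attention over already-projected query, key and value vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Every query is scored against every key: this is the quadratic part.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # The final hidden state is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return outputs

queries = [[1.0, 0.0], [0.0, 1.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
hidden_states = self_attention(queries, keys, values)
```

Each query attends to all keys at once, which is what lets the model relate distant tokens directly, and also why the cost grows with the square of the sequence length.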

During training, the Transformer architecture has several advantages over traditional RNNs and CNNs. One of the most significant advantages is its ability to learn contextual representations. Unlike RNNs and CNNs, which process input sequences one word at a time, the Transformer architecture processes input sequences as a whole. This allows it to capture long-range dependencies between words in the sequence, which is particularly useful for tasks such as language translation and question answering.

During inference, RNNs have some advantages in speed and memory efficiency. These advantages include simplicity, since only matrix-vector operations are needed, and memory efficiency, since the memory requirements don’t grow during inference. Furthermore, the computation speed stays the same regardless of the context window length, because computations only act on the current token and the state.

The RWKV architecture

RWKV is inspired by Apple’s Attention Free Transformer. The architecture has been carefully simplified and optimized such that it can be transformed into an RNN. In addition, a number of tricks have been added, such as TokenShift & SmallInitEmb (the full list is in the README of the official GitHub repository), to boost its performance to match GPT. Without these, the model would not be as performant.

For training, there is an infrastructure to scale the training up to 14B parameters as of now, and some issues, such as numerical instability, have been iteratively fixed in RWKV-4 (the latest version as of today).

RWKV as a combination of RNNs and transformers

How can we combine the best of transformers and RNNs? The main drawback of transformer-based models is that it can become difficult to run a model with a context window that’s larger than a certain value, as the attention scores are computed simultaneously for the entire sequence.

RNNs natively support very long context lengths, limited only by the context length seen in training, but this can be extended to tens of millions of tokens with careful coding. Currently, there are RWKV models trained on a context length of 8192 (ctx8192), and they are as fast as ctx1024 models and require the same amount of RAM.
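To make the scaling argument concrete, here is a small back-of-the-envelope sketch. It only counts entries: one full attention score matrix for a single head versus a fixed-size recurrent state. The state dimension of `768` is a hypothetical value chosen for illustration and does not correspond to a specific RWKV checkpoint.

```python
def attention_score_count(context_length):
    """Entries in one head's full attention score matrix (every token vs. every token)."""
    return context_length * context_length

def recurrent_state_size(state_dim):
    """An RNN carries a fixed-size state, whatever the context length."""
    return state_dim

for ctx in (1024, 8192):
    # Attention grows quadratically with ctx; the recurrent state does not grow at all.
    print(ctx, attention_score_count(ctx), recurrent_state_size(state_dim=768))
```

Going from a context of 1024 to 8192 multiplies the number of attention scores by 64, while the recurrent state stays the same size, which is consistent with ctx8192 RWKV models needing the same RAM as ctx1024 ones.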

The main drawbacks of traditional RNN models and how RWKV is different:

- Traditional RNN models are unable to utilize very long contexts (LSTM can only manage ~100 tokens when used as a LM). However, RWKV can utilize thousands of tokens and beyond, as shown below:

- Traditional RNN models can’t be parallelized when training. RWKV is similar to a “linearized GPT” and it trains faster than GPT.

By combining both advantages into a single architecture, the hope is that RWKV can grow to become more than the sum of its parts.

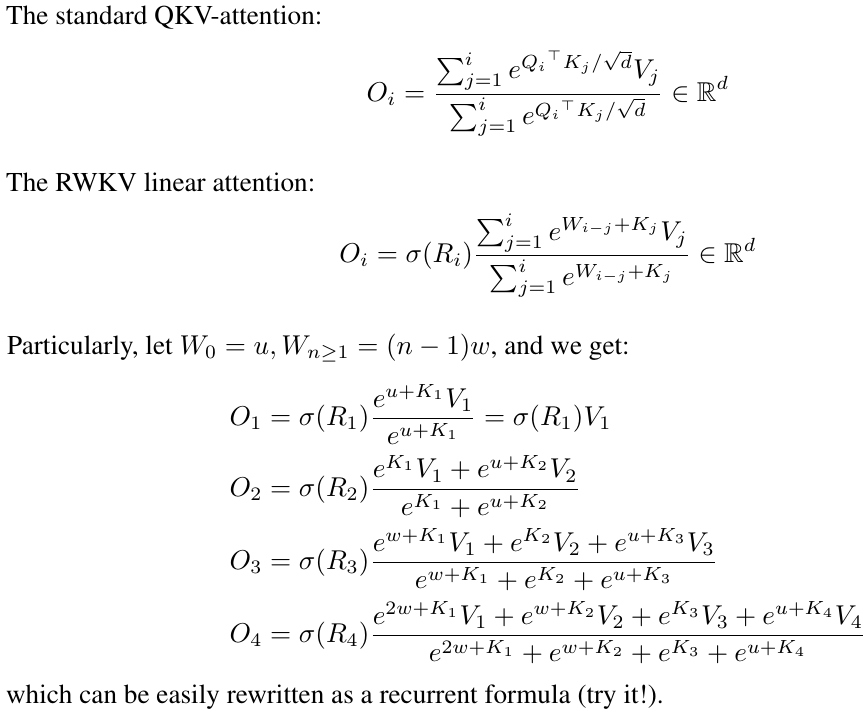

RWKV attention formulation

The model architecture is very similar to classic transformer-based models (i.e. an embedding layer, multiple identical layers, layer normalization, and a Causal Language Modeling head to predict the next token). The only difference is in the attention layer, which is completely different from that of traditional transformer-based models.

To gain a more comprehensive understanding of the attention layer, we recommend reading the detailed explanation provided in a blog post by Johan Sokrates Wind.
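To give a flavour of why such an attention layer can be run as an RNN, the sketch below computes a simplified, single-channel version of the WKV weighted average in two ways: directly over the whole history, and recurrently with two running sums. This is our reading of the formulation in the RWKV paper, written as an assumption-laden illustration: the decay `w`, the bonus `u`, and the function names are ours, and the sketch leaves out receptance gating, token shift, and the numerical stabilization that a real implementation needs. It is not the code used in the transformers library.

```python
import math

def wkv_parallel(w, u, k, v):
    """Direct form: each output is an explicit weighted average over the past."""
    outputs = []
    for t in range(len(k)):
        # The current token gets an extra bonus u instead of a decay.
        num = math.exp(u + k[t]) * v[t]
        den = math.exp(u + k[t])
        for i in range(t):
            # Older tokens are exponentially decayed by their distance.
            weight = math.exp(-(t - 1 - i) * w + k[i])
            num += weight * v[i]
            den += weight
        outputs.append(num / den)
    return outputs

def wkv_recurrent(w, u, k, v):
    """RNN form: the same outputs from two running sums carried as state."""
    num_state, den_state = 0.0, 0.0
    outputs = []
    for k_t, v_t in zip(k, v):
        bonus = math.exp(u + k_t)
        outputs.append((num_state + bonus * v_t) / (den_state + bonus))
        # Decay the past once more and fold in the current token.
        num_state = math.exp(-w) * num_state + math.exp(k_t) * v_t
        den_state = math.exp(-w) * den_state + math.exp(k_t)
    return outputs

k = [0.1, -0.3, 0.5, 0.2]
v = [1.0, 2.0, -1.0, 0.5]
parallel = wkv_parallel(w=0.5, u=0.3, k=k, v=v)
recurrent = wkv_recurrent(w=0.5, u=0.3, k=k, v=v)
```

Both functions return the same values, but the recurrent form only needs the two running sums from the previous step, which is the property that lets the model be trained like a transformer and run like an RNN.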

Existing checkpoints

Pure language models: RWKV-4 models

The most adopted RWKV models range from ~170M parameters to 14B parameters. According to the RWKV overview blog post, these models have been trained on the Pile dataset and evaluated against other SoTA models on different benchmarks, and they seem to perform quite well, with very comparable results against them.

Instruction Fine-tuned/Chat Version: RWKV-4 Raven

Bo has also trained a “chat” version of the RWKV architecture, the RWKV-4 Raven model. It is an RWKV-4 pile (RWKV model pretrained on The Pile dataset) model fine-tuned on ALPACA, CodeAlpaca, Guanaco, GPT4All, ShareGPT and more. The model is available in multiple versions, with models trained on different languages (English only, English + Chinese + Japanese, English + Japanese, etc.) and different sizes (1.5B parameters, 7B parameters, 14B parameters).

All the HF converted models are available on the Hugging Face Hub, in the RWKV organization.

🤗 Transformers integration

The architecture has been added to the transformers library thanks to this Pull Request. As of the time of writing, you can use it by installing transformers from source, or by using the main branch of the library. The architecture is tightly integrated with the library, and you can use it as you would any other architecture.

Let us walk through some examples below.

Text Generation Example

To generate text given an input prompt, you can use pipeline:

from transformers import pipeline

model_id = "RWKV/rwkv-4-169m-pile"

prompt = "\nIn a shocking finding, scientist discovered a herd of dragons living in a remote, previously unexplored valley, in Tibet. Even more surprising to the researchers was the fact that the dragons spoke perfect Chinese."

pipe = pipeline("text-generation", model=model_id)

print(pipe(prompt, max_new_tokens=20))

>>> [{'generated_text': '\nIn a shocking finding, scientist discovered a herd of dragons living in a remote, previously unexplored valley, in Tibet. Even more surprising to the researchers was the fact that the dragons spoke perfect Chinese.\n\nThe researchers found that the dragons were able to communicate with each other, and that they were'}]

Or you can run and start from the snippet below:

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("RWKV/rwkv-4-169m-pile")

tokenizer = AutoTokenizer.from_pretrained("RWKV/rwkv-4-169m-pile")

prompt = "\nIn a shocking finding, scientist discovered a herd of dragons living in a remote, previously unexplored valley, in Tibet. Even more surprising to the researchers was the fact that the dragons spoke perfect Chinese."

inputs = tokenizer(prompt, return_tensors="pt")

output = model.generate(inputs["input_ids"], max_new_tokens=20)

print(tokenizer.decode(output[0].tolist()))

>>> In a shocking finding, scientist discovered a herd of dragons living in a remote, previously unexplored valley, in Tibet. Even more surprising to the researchers was the fact that the dragons spoke perfect Chinese.\n\nThe researchers found that the dragons were able to communicate with each other, and that they were

Use the raven models (chat models)

You can prompt the chat model in the Alpaca style. Here is an example:

from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "RWKV/rwkv-raven-1b5"

model = AutoModelForCausalLM.from_pretrained(model_id).to(0)

tokenizer = AutoTokenizer.from_pretrained(model_id)

query = "Tell me about ravens"

prompt = f"### Instruction: {query}\n### Response:"

inputs = tokenizer(prompt, return_tensors="pt").to(0)

output = model.generate(inputs["input_ids"], max_new_tokens=100)

print(tokenizer.decode(output[0].tolist(), skip_special_tokens=True))

>>>
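If you prompt the model repeatedly, it can help to wrap the prompt format in a small helper. The function below is a hypothetical convenience of our own, not part of transformers; it only reproduces the instruction/response layout used in the example above.

```python
def build_alpaca_prompt(query):
    """Format a user query in the instruction/response layout used above."""
    # The newline separates the instruction from the response marker.
    return f"### Instruction: {query}\n### Response:"

prompt = build_alpaca_prompt("Tell me about ravens")
print(prompt)
```

The resulting string can be passed to the tokenizer exactly like the hand-written prompt.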

According to Bo, better instruction techniques are detailed in this Discord message (make sure to join the channel before clicking).

Weights conversion

Any user can easily convert the original RWKV weights to the HF format by running the conversion script provided in the transformers library. First, push the “raw” weights to the Hugging Face Hub (let’s denote that repo as RAW_HUB_REPO, and the raw file as RAW_FILE), then run the conversion script:

python convert_rwkv_checkpoint_to_hf.py --repo_id RAW_HUB_REPO --checkpoint_file RAW_FILE --output_dir OUTPUT_DIR

If you want to push the converted model to the Hub (for instance, under dummy_user/converted-rwkv), don’t forget to log in with huggingface-cli login first, then run:

python convert_rwkv_checkpoint_to_hf.py --repo_id RAW_HUB_REPO --checkpoint_file RAW_FILE --output_dir OUTPUT_DIR --push_to_hub --model_name dummy_user/converted-rwkv
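If you need to convert several checkpoints, the two commands above can be assembled programmatically. The helper below is a hypothetical sketch of our own: it only builds the argument list from the flags shown above and prints it, without running anything.

```python
import shlex

def build_conversion_command(repo_id, checkpoint_file, output_dir, model_name=None):
    """Assemble the conversion command; pass model_name to also push to the Hub."""
    command = [
        "python", "convert_rwkv_checkpoint_to_hf.py",
        "--repo_id", repo_id,
        "--checkpoint_file", checkpoint_file,
        "--output_dir", output_dir,
    ]
    if model_name is not None:
        # Pushing requires being logged in to the Hub beforehand.
        command += ["--push_to_hub", "--model_name", model_name]
    return command

command = build_conversion_command(
    "RAW_HUB_REPO", "RAW_FILE", "OUTPUT_DIR", model_name="dummy_user/converted-rwkv"
)
print(shlex.join(command))
```

The returned list can then be handed to `subprocess.run(command, check=True)` from the directory that contains the conversion script.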

Future work

Multi-lingual RWKV

Bo is currently working on a multilingual corpus to train RWKV models. A new multilingual tokenizer has recently been released.

Community-oriented and research projects

The RWKV community is very active and working on several follow-up directions. A list of cool projects can be found in a dedicated channel on Discord (make sure to join the channel before clicking the link).

There is also a channel dedicated to research around this architecture, feel free to join and contribute!

Model Compression and Acceleration

Because it only needs matrix-vector operations, RWKV is an ideal candidate for non-standard and experimental computing hardware, such as photonic processors/accelerators.

The architecture can therefore also naturally benefit from classic acceleration and compression techniques (such as ONNX, 4-bit/8-bit quantization, etc.), and we hope this will be democratized for developers and practitioners together with the transformers integration of the architecture.

RWKV can also benefit from the acceleration techniques proposed by the optimum library in the near future.

Some of these techniques are highlighted in the rwkv.cpp repository or the rwkv-cpp-cuda repository.

Acknowledgements

The Hugging Face team would like to thank Bo and the RWKV community for their time and for answering our questions about the architecture. We would also like to thank them for their help and support, and we look forward to seeing more adoption of RWKV models in the HF ecosystem.

We would also like to acknowledge the work of Johan Wind for his blog post on RWKV, which helped us a lot to understand the architecture and its potential.

And finally, we would like to highlight and acknowledge the work of ArEnSc for starting the initial transformers PR.

Also big kudos to Merve Noyan, Maria Khalusova and Pedro Cuenca for kindly reviewing this blog post to make it much better!

Citation

If you use RWKV in your work, please use the following cff citation.