Today, we’re thrilled to announce that Hugging Face is expanding its collaboration with Microsoft to bring open-source models from the Hugging Face Hub to Azure Machine Learning. Together, we built a new Hugging Face Hub Model Catalog, available directly inside Azure Machine Learning Studio and populated with hundreds of the most popular Transformers models from the Hugging Face Hub. With this new integration, you can now deploy Hugging Face models in just a few clicks on managed endpoints running on secure and scalable Azure infrastructure.

This new experience builds on the strategic partnership we announced last year, when we launched Azure Machine Learning Endpoints as a new managed app in Azure Marketplace to simplify deploying large language models on Azure. Although our previous marketplace solution was a promising first step, it had some limitations we could only overcome through a native integration within Azure Machine Learning. To address these challenges and improve the customer experience, we collaborated with Microsoft to offer a fully integrated experience for Hugging Face users within Azure Machine Learning Studio.

Hosting over 200,000 open-source models and serving over 1 million model downloads a day, Hugging Face is the go-to destination for all of Machine Learning. But deploying Transformers to production remains a challenge today.

One of the main problems developers and organizations face is how difficult it is to deploy and scale production-grade inference APIs. Of course, an easy option is to rely on cloud-based AI services. Although they’re extremely simple to use, these services are usually powered by a limited set of models that may not support the task type you need, and that cannot be deeply customized, if at all. Alternatively, cloud-based ML services or in-house platforms give you full control, but at the expense of more time, complexity, and cost. In addition, many companies have strict security, compliance, and privacy requirements mandating that they only deploy models on infrastructure over which they have administrative control.

“With the new Hugging Face Hub model catalog, natively integrated within Azure Machine Learning, we’re opening a new page in our partnership with Microsoft, offering a super easy way for enterprise customers to deploy Hugging Face models for real-time inference, all within their secure Azure environment,” said Julien Simon, Chief Evangelist at Hugging Face.

“The integration of Hugging Face’s open-source models into Azure Machine Learning represents our commitment to empowering developers with industry-leading AI tools,” said John Montgomery, Corporate Vice President, Azure AI Platform at Microsoft. “This collaboration not only simplifies the deployment process of large language models but also provides a secure and scalable environment for real-time inferencing. It’s an exciting milestone in our mission to accelerate AI initiatives and bring innovative solutions to the market swiftly and securely, backed by the power of Azure infrastructure.”

Deploying Hugging Face models on Azure Machine Learning has never been easier:

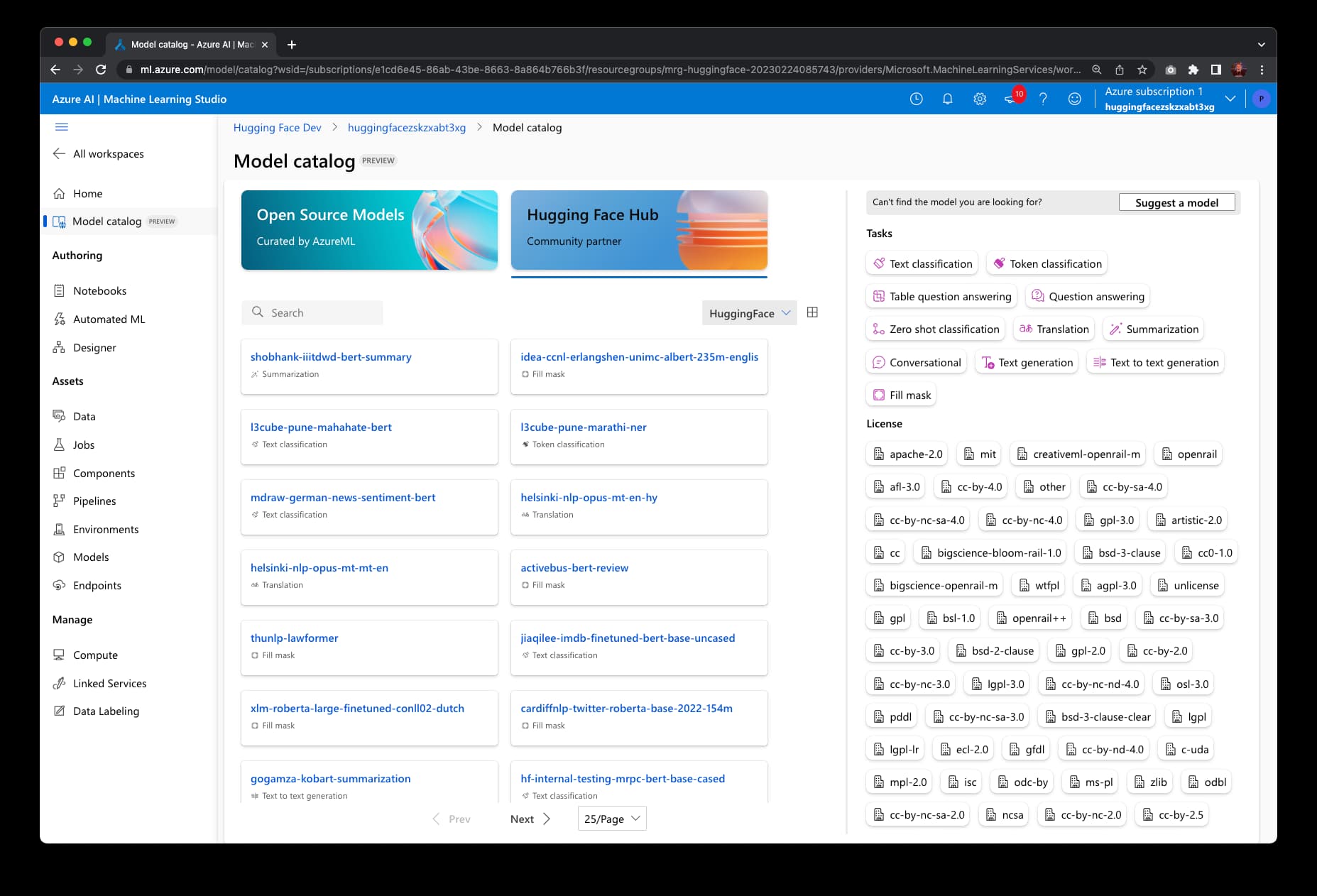

- Open the Hugging Face registry in Azure Machine Learning Studio.

- Click on the Hugging Face Model Catalog.

- Filter by task or license and search the models.

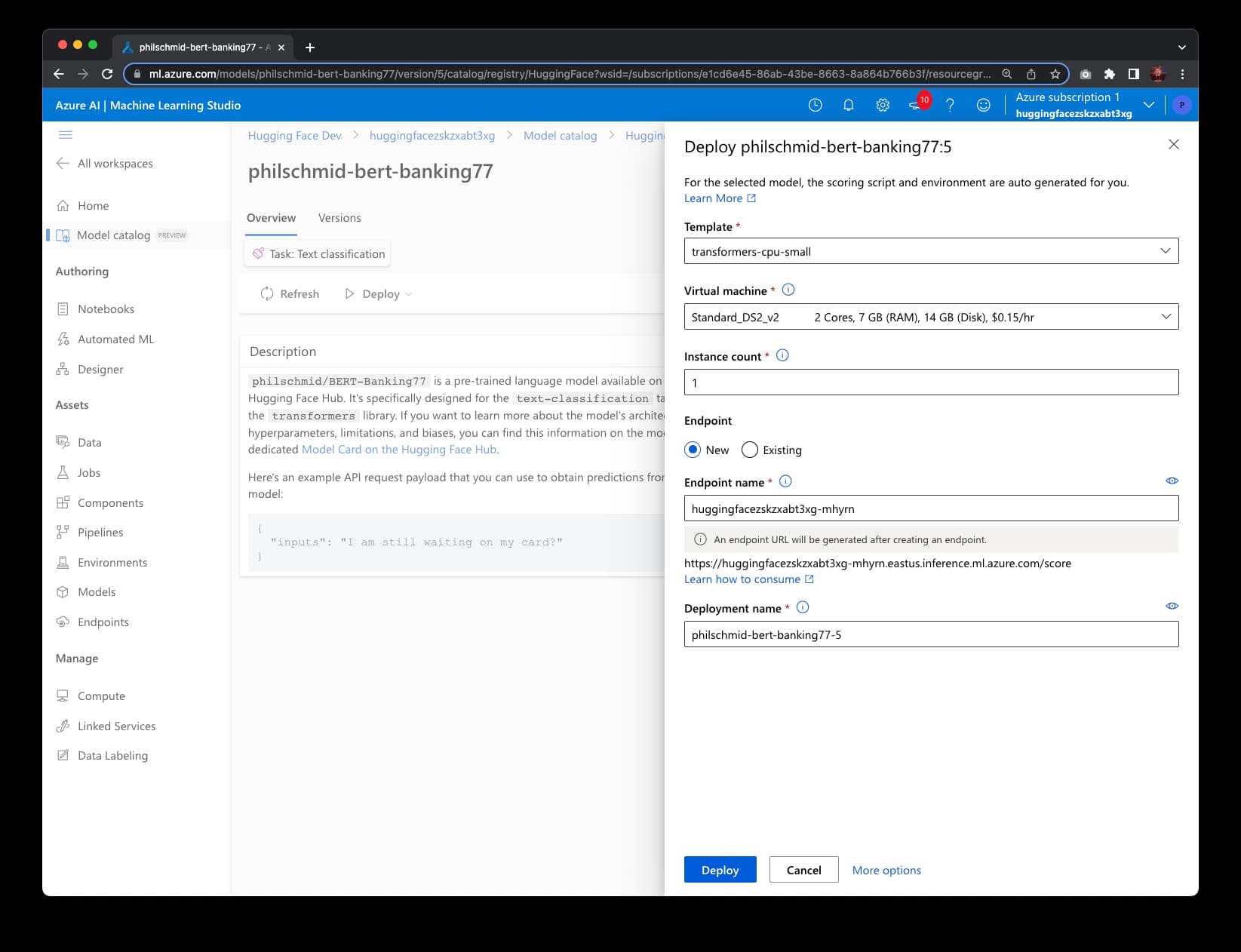

- Click the model tile to open the model page, then choose the real-time deployment option to deploy the model.

- Select an Azure instance type and click Deploy.

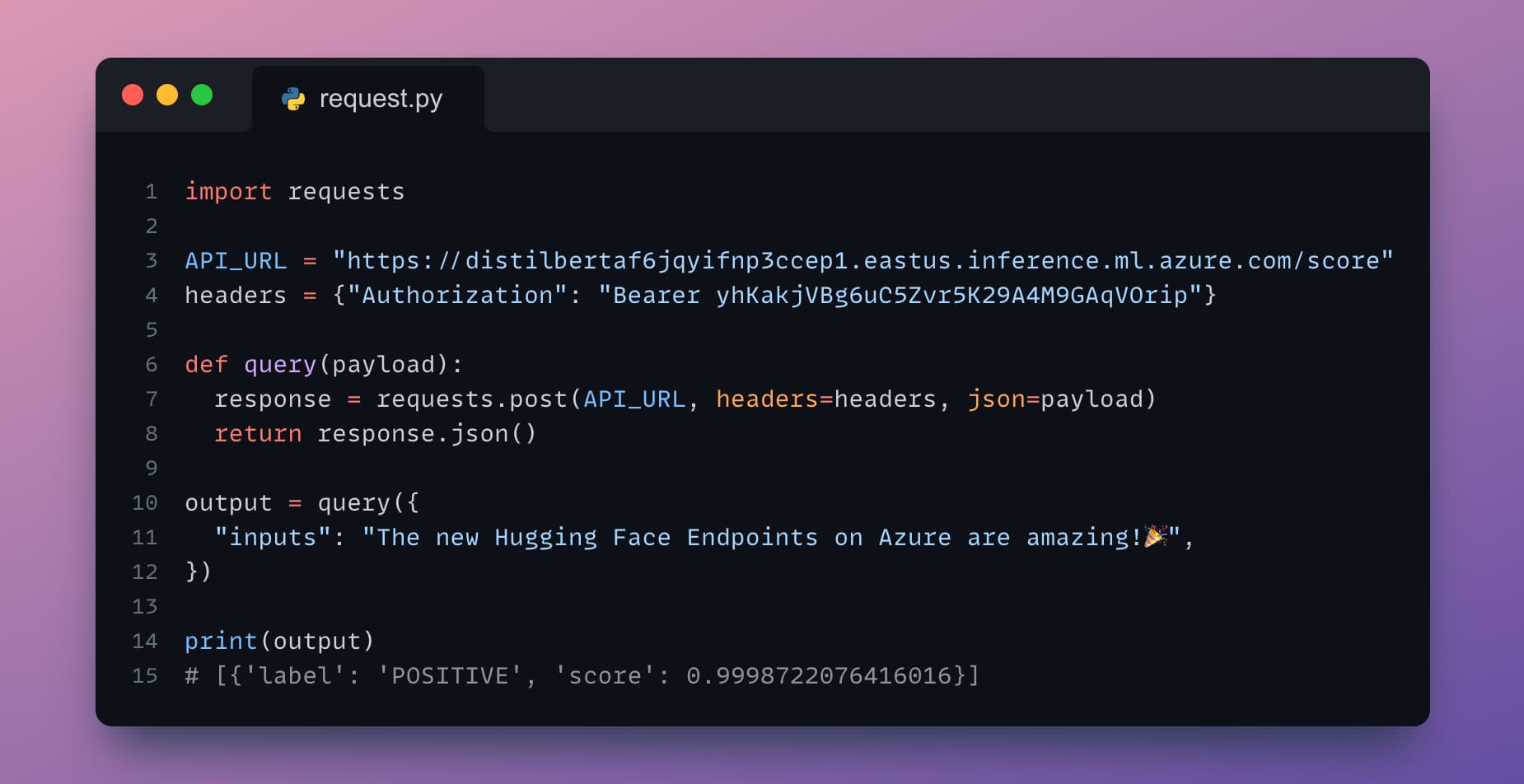

Within minutes, you can test your endpoint and add its inference API to your application. It’s never been easier!

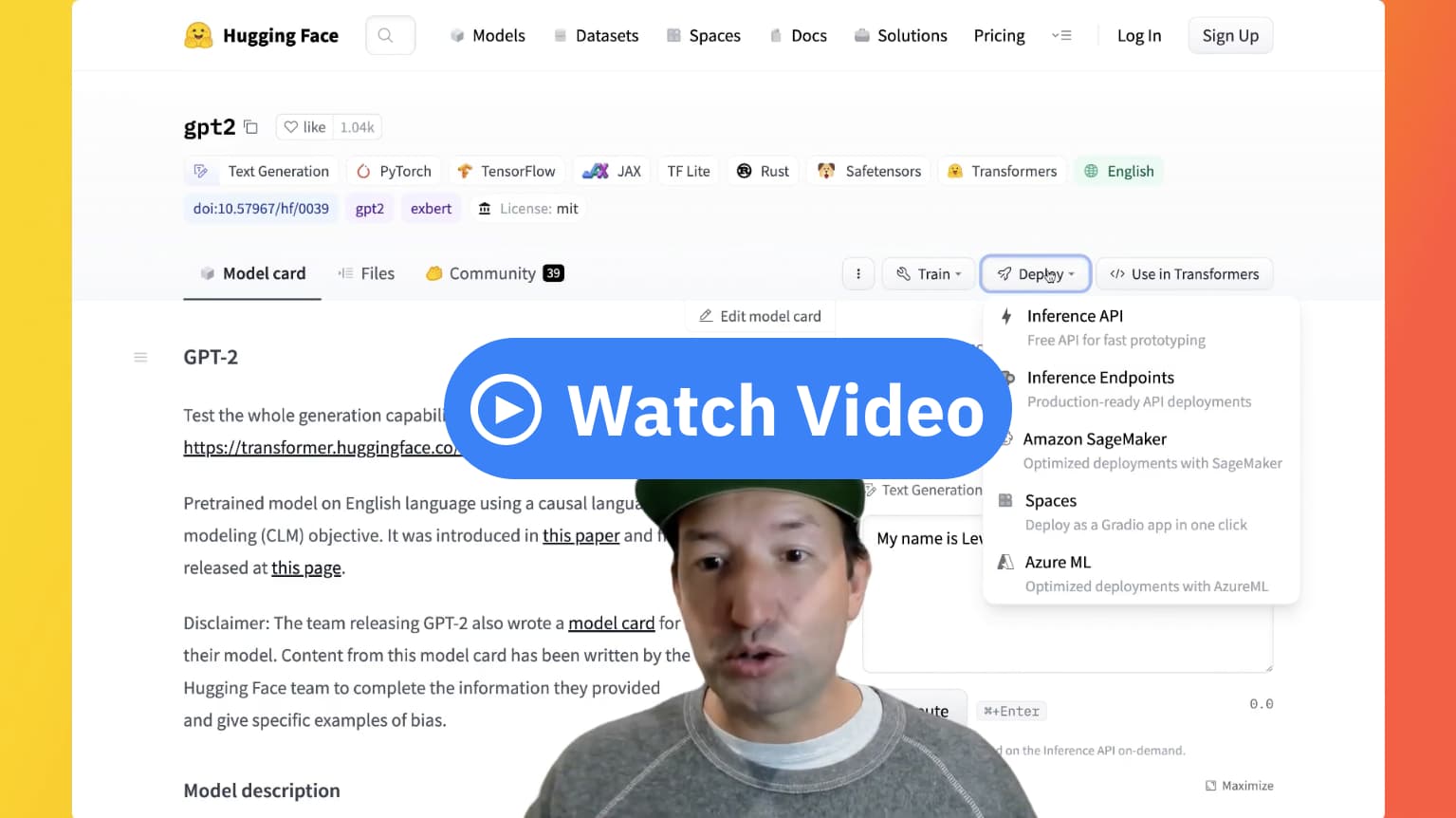
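To give a rough idea of what adding the endpoint to an application can look like, here is a minimal Python sketch that calls a deployed endpoint using only the standard library. It assumes key-based authentication (the endpoint key sent as a `Bearer` token) and a JSON request body with an `inputs` field. The scoring URI and key below are hypothetical placeholders, and the exact payload schema depends on the model’s task, so check your endpoint’s details page in the Studio for the real values.

```python
import json
import urllib.request

# Hypothetical placeholders: replace with the scoring URI and key shown
# on your own endpoint's details page in Azure Machine Learning Studio.
SCORING_URI = "https://<your-endpoint>.<region>.inference.ml.azure.com/score"
API_KEY = "<your-endpoint-key>"


def build_request(scoring_uri: str, api_key: str, inputs: str) -> urllib.request.Request:
    """Build a POST request for a managed online endpoint.

    Assumes key-based auth (Bearer header) and a JSON body with an
    "inputs" field; the schema your model expects may differ by task.
    """
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        scoring_uri,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def query_endpoint(request: urllib.request.Request, timeout: float = 30.0):
    """Send the request over the network and decode the JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Once the placeholders are replaced, a call looks like `query_endpoint(build_request(SCORING_URI, API_KEY, "I love this!"))`. Keeping request construction separate from the network call makes it easy to inspect the payload and headers before anything is sent.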

If you’d like to see the service in action, you can click on the image below to launch a video walkthrough.

Hugging Face Model Catalog on Azure Machine Learning is available today in public preview in all Azure regions where Azure Machine Learning is available. Give the service a try and let us know your feedback and questions in the forum!