The Hugging Face Hub is dedicated to providing open access to datasets for everyone and giving users the tools to explore and understand them. You can find many of the datasets used to train popular large language models (LLMs) like Falcon, Dolly, MPT, and StarCoder. There are tools for addressing fairness and bias in datasets, like Disaggregators, and tools for previewing examples inside a dataset, like the Dataset Viewer.

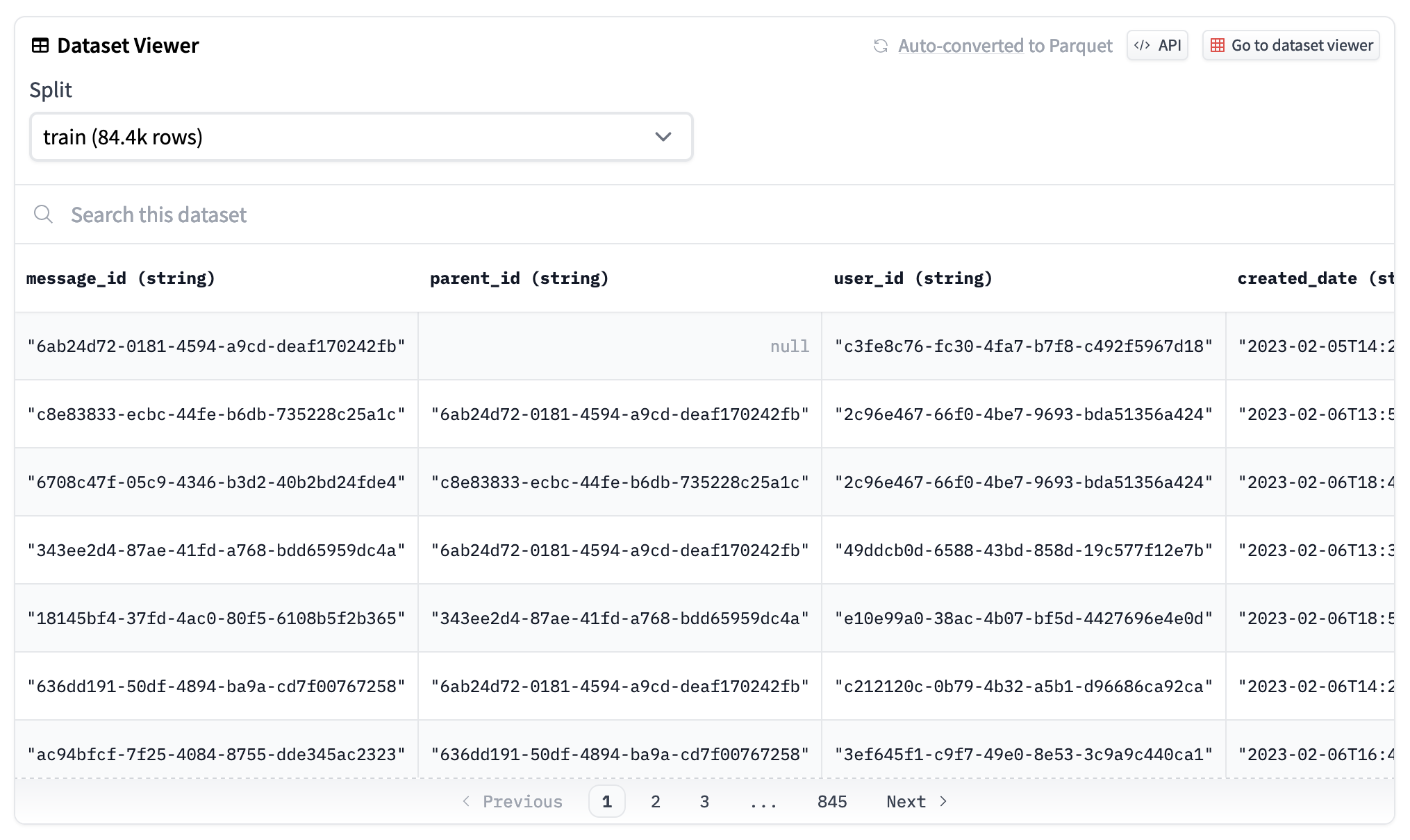

A preview of the OpenAssistant dataset with the Dataset Viewer.

We’re happy to share that we recently added another feature to help you analyze datasets on the Hub: you can run SQL queries with DuckDB on any dataset stored on the Hub! According to the 2022 StackOverflow Developer Survey, SQL is the third most popular programming language. We also wanted a fast database management system (DBMS) designed for running analytical queries, which is why we’re excited about integrating with DuckDB. We hope this allows even more users to access and analyze datasets on the Hub!

TLDR

The dataset viewer automatically converts all public datasets on the Hub to Parquet files, which you can see by clicking on the “Auto-converted to Parquet” button at the top of a dataset page. You can also access the list of Parquet file URLs with a simple HTTP call.

import requests

r = requests.get("https://datasets-server.huggingface.co/parquet?dataset=blog_authorship_corpus")

j = r.json()

urls = [f['url'] for f in j['parquet_files'] if f['split'] == 'train']

urls

['https://huggingface.co/datasets/blog_authorship_corpus/resolve/refs%2Fconvert%2Fparquet/blog_authorship_corpus/blog_authorship_corpus-train-00000-of-00002.parquet',

'https://huggingface.co/datasets/blog_authorship_corpus/resolve/refs%2Fconvert%2Fparquet/blog_authorship_corpus/blog_authorship_corpus-train-00001-of-00002.parquet']

Create a connection to DuckDB, then install and load the httpfs extension to allow reading and writing remote files:

import duckdb

url = "https://huggingface.co/datasets/blog_authorship_corpus/resolve/refs%2Fconvert%2Fparquet/blog_authorship_corpus/blog_authorship_corpus-train-00000-of-00002.parquet"

con = duckdb.connect()

con.execute("INSTALL httpfs;")

con.execute("LOAD httpfs;")

Once you’re connected, you can start writing SQL queries!

con.sql(f"""SELECT horoscope,

count(*),

AVG(LENGTH(text)) AS avg_blog_length

FROM '{url}'

GROUP BY horoscope

ORDER BY avg_blog_length DESC

LIMIT 5"""

)

To learn more, check out the documentation.

From dataset to Parquet

Parquet files are columnar, making them more efficient to store, load and analyze. This is especially important when you’re working with large datasets, which we’re seeing more and more of in the LLM era. To support this, the dataset viewer automatically converts and publishes any public dataset on the Hub as Parquet files. The URLs of the Parquet files can be retrieved with the /parquet endpoint.
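As a minimal sketch of working with the /parquet response, the helper below groups Parquet URLs by split. It assumes only the `parquet_files`, `split`, and `url` fields used in the TLDR snippet; the function name `group_urls_by_split` and the sample payload (with example.com URLs) are hypothetical and exist only for illustration.

```python
from collections import defaultdict


def group_urls_by_split(payload):
    """Group Parquet file URLs by split name.

    Assumes `payload` is the parsed JSON of a /parquet response with a
    `parquet_files` list whose entries have `split` and `url` keys
    (the fields used in the TLDR snippet).
    """
    urls_by_split = defaultdict(list)
    for entry in payload.get("parquet_files", []):
        urls_by_split[entry["split"]].append(entry["url"])
    return dict(urls_by_split)


# Hypothetical payload, shaped like the response in the TLDR example.
sample_payload = {
    "parquet_files": [
        {"split": "train", "url": "https://example.com/train-00000-of-00002.parquet"},
        {"split": "train", "url": "https://example.com/train-00001-of-00002.parquet"},
        {"split": "validation", "url": "https://example.com/validation-00000-of-00001.parquet"},
    ]
}

urls_by_split = group_urls_by_split(sample_payload)
print(sorted(urls_by_split))
print(len(urls_by_split["train"]))
```

With a live response, `group_urls_by_split(r.json())` could take the place of the list comprehension in the TLDR when you want every split at once.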

Analyze with DuckDB

DuckDB offers super impressive performance for running complex analytical queries. It can execute a SQL query directly on a remote Parquet file without any overhead. With the httpfs extension, DuckDB can query remote files, such as datasets stored on the Hub, using the URLs provided by the /parquet endpoint. DuckDB also supports querying multiple Parquet files, which is really convenient because the dataset viewer shards big datasets into smaller 500MB chunks.
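To illustrate querying several shards at once, here is a sketch that builds a single query over a list of Parquet URLs by passing a list to DuckDB's `read_parquet`. The helper names `sql_string`, `build_sharded_query`, and `run_on_hub` are hypothetical, the example.com URLs are placeholders, and the column names (`horoscope`, `text`) are taken from the blog_authorship_corpus query in the TLDR. Treat it as one possible approach under those assumptions.

```python
def sql_string(value):
    """Quote a Python string as a SQL string literal (single quotes doubled)."""
    return "'" + value.replace("'", "''") + "'"


def build_sharded_query(urls):
    """Build one SQL query that reads every Parquet shard in `urls`."""
    if not urls:
        raise ValueError("need at least one Parquet URL")
    file_list = ", ".join(sql_string(u) for u in urls)
    return (
        "SELECT horoscope, count(*) AS n_posts, "
        "AVG(LENGTH(text)) AS avg_blog_length "
        f"FROM read_parquet([{file_list}]) "
        "GROUP BY horoscope "
        "ORDER BY avg_blog_length DESC "
        "LIMIT 5"
    )


def run_on_hub(urls):
    """Run the query with DuckDB; needs the duckdb package and network access."""
    import duckdb  # imported here so the query builder works without it

    con = duckdb.connect()
    con.execute("INSTALL httpfs;")
    con.execute("LOAD httpfs;")
    return con.sql(build_sharded_query(urls)).fetchall()


# Placeholder URLs for illustration only; real ones come from the /parquet endpoint.
example_urls = [
    "https://example.com/train-00000-of-00002.parquet",
    "https://example.com/train-00001-of-00002.parquet",
]
query = build_sharded_query(example_urls)
print(query)
```

Calling `run_on_hub(urls)` with the `urls` list from the TLDR would aggregate across both train shards in a single query instead of querying one file at a time.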

Looking forward

Knowing what’s inside a dataset is important for developing models because it can impact model quality in all sorts of ways! Allowing users to write and execute any SQL query on Hub datasets is another way for us to enable open access to datasets and help users be more aware of a dataset’s contents. We’re excited for you to try this out, and we’re looking forward to seeing what kinds of insights your analysis uncovers!