A few months ago, we introduced the Informer model (Zhou, Haoyi, et al., 2021), which is a Time Series Transformer that won the AAAI 2021 best paper award. We also provided an example for multivariate probabilistic forecasting with Informer. In this post, we discuss the question: Are Transformers Effective for Time Series Forecasting? (AAAI 2023). As we will see, they are.

First, we will provide empirical evidence that Transformers are indeed effective for time series forecasting. Our comparison shows that the simple linear model, known as DLinear, is not better than Transformers as claimed. When compared against equivalent-sized models in the same setting as the linear models, the Transformer-based models perform better on the test set metrics we consider.

Afterwards, we will introduce the Autoformer model (Wu, Haixu, et al., 2021), which was published in NeurIPS 2021 after the Informer model. The Autoformer model is now available in 🤗 Transformers. Finally, we will discuss the DLinear model, which is a simple feedforward network that uses the decomposition layer from Autoformer. The DLinear model was first introduced in Are Transformers Effective for Time Series Forecasting? and claimed to outperform Transformer-based models in time-series forecasting.

Let’s go!

Benchmarking – Transformers vs. DLinear

In the paper Are Transformers Effective for Time Series Forecasting?, published recently in AAAI 2023, the authors claim that Transformers are not effective for time series forecasting. They compare the Transformer-based models against a simple linear model, which they call DLinear.

The DLinear model uses the decomposition layer from the Autoformer model, which we will introduce later in this post. The authors claim that the DLinear model outperforms the Transformer-based models in time-series forecasting.

Is that so? Let's find out.

| Dataset | Autoformer (uni.) MASE | DLinear MASE |
|---|---|---|
| Traffic | 0.910 | 0.965 |
| Exchange-Rate | 1.087 | 1.690 |
| Electricity | 0.751 | 0.831 |

The table above shows the results of the comparison between the Autoformer and DLinear models on the three datasets used in the paper.

The results show that the Autoformer model outperforms the DLinear model on all three datasets.

Next, we will present the new Autoformer model along with the DLinear model. We will show how to compare them on the Traffic dataset from the table above, and provide explanations for the results we obtained.

TL;DR: A simple linear model, while advantageous in certain cases, has no capacity to incorporate covariates, unlike more complex models such as transformers in the univariate setting.

Autoformer – Under The Hood

Autoformer builds upon the traditional method of decomposing time series into seasonality and trend-cycle components. This is achieved through the incorporation of a Decomposition Layer, which enhances the model's ability to capture these components accurately. Moreover, Autoformer introduces an innovative auto-correlation mechanism that replaces the standard self-attention used in the vanilla transformer. This mechanism enables the model to utilize period-based dependencies in the attention, thus improving the overall performance.

In the upcoming sections, we will delve into the two key contributions of Autoformer: the Decomposition Layer and the Attention (Autocorrelation) Mechanism. We will also provide code examples to illustrate how these components function within the Autoformer architecture.

Decomposition Layer

Decomposition has long been a popular method in time series analysis, but it had not been extensively incorporated into deep learning models until the introduction of the Autoformer paper. Following a brief explanation of the concept, we will demonstrate how the idea is applied in Autoformer using PyTorch code.

Decomposition of Time Series

In time series analysis, decomposition is a method of breaking down a time series into three systematic components: trend-cycle, seasonal variation, and random fluctuations.

The trend component represents the long-term direction of the time series, which can be increasing, decreasing, or stable over time. The seasonal component represents the recurring patterns that occur within the time series, such as yearly or quarterly cycles. Finally, the random (sometimes called "irregular") component represents the random noise in the data that cannot be explained by the trend or seasonal components.

Two main types of decomposition are additive and multiplicative decomposition, which are implemented in the great statsmodels library. By decomposing a time series into these components, we can better understand and model the underlying patterns in the data.
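statsmodels offers this ready-made (`seasonal_decompose`, with an additive or multiplicative `model`). To show what the classical additive version actually computes, here is a minimal NumPy sketch: a centered moving average gives the trend, averaging the detrended values at each position of the cycle gives the seasonal part, and whatever is left is the residual. It assumes an odd period so a plain centered window works, and the toy series (a linear trend plus a zero-mean weekly pattern) is made up for illustration. The multiplicative form, trend × seasonal × residual, is often handled by decomposing the logarithm of a positive series additively.

```python
import numpy as np


def additive_decompose(y: np.ndarray, period: int):
    """Classical additive decomposition: y = trend + seasonal + residual.

    Sketch only: assumes an odd `period` so a plain centered moving average works;
    the trend (and residual) are NaN at the edges, where the window does not fit.
    """
    assert period % 2 == 1, "this sketch uses an odd period for a centered window"
    half = period // 2
    trend = np.full(len(y), np.nan)
    trend[half : len(y) - half] = np.convolve(y, np.ones(period) / period, mode="valid")

    # average the detrended values at each position within the cycle
    detrended = y - trend
    cycle = np.array([np.nanmean(detrended[i::period]) for i in range(period)])
    cycle -= cycle.mean()  # the seasonal part sums to zero over one cycle
    seasonal = np.resize(cycle, len(y))  # repeat the cycle up to the series length

    residual = y - trend - seasonal
    return trend, seasonal, residual


# toy daily series: linear trend plus a zero-mean weekly pattern (period 7)
t = np.arange(70)
pattern = np.array([3.0, 1.0, -1.0, -2.0, -1.0, 0.0, 0.0])
y = 10 + 0.5 * t + np.resize(pattern, len(t))
trend, seasonal, residual = additive_decompose(y, period=7)
```

On this noise-free toy series the moving average recovers the linear trend exactly away from the edges, and the residual is (numerically) zero there.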

But how can we incorporate decomposition into the Transformer architecture? Let’s examine how Autoformer does it.

Decomposition in Autoformer

Autoformer incorporates a decomposition block as an inner operation of the model, as presented in the Autoformer's architecture above. As can be seen, the encoder and decoder use a decomposition block to aggregate the trend-cyclical part and extract the seasonal part from the series progressively. The concept of inner decomposition has demonstrated its usefulness since the publication of Autoformer. Subsequently, it has been adopted in several other time series papers, such as FEDformer (Zhou, Tian, et al., ICML 2022) and DLinear (Zeng, Ailing, et al., AAAI 2023), highlighting its significance in time series modeling.

Now, let’s define the decomposition layer formally:

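For an input series $\mathcal{X} \in \mathbb{R}^{L \times d}$ with length $L$, the decomposition layer returns $\mathcal{X}_\textrm{trend}$ and $\mathcal{X}_\textrm{seasonal}$, defined as:

$$\mathcal{X}_\textrm{trend} = \textrm{AvgPool}(\textrm{Padding}(\mathcal{X}))$$

$$\mathcal{X}_\textrm{seasonal} = \mathcal{X} - \mathcal{X}_\textrm{trend}$$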

And the implementation in PyTorch:

```python
import torch
from torch import nn


class DecompositionLayer(nn.Module):
    """
    Returns the trend and the seasonal parts of the time series.
    """

    def __init__(self, kernel_size):
        super().__init__()
        self.kernel_size = kernel_size
        self.avg = nn.AvgPool1d(kernel_size=kernel_size, stride=1, padding=0)

    def forward(self, x):
        """Input shape: Batch x Time x EMBED_DIM"""
        # pad both ends of the series by repeating the first and last values;
        # with an odd kernel_size the output keeps the input length
        num_of_pads = (self.kernel_size - 1) // 2
        front = x[:, 0:1, :].repeat(1, num_of_pads, 1)
        end = x[:, -1:, :].repeat(1, num_of_pads, 1)
        x_padded = torch.cat([front, x, end], dim=1)

        # the moving average is the trend; the remainder is the seasonal part
        x_trend = self.avg(x_padded.permute(0, 2, 1)).permute(0, 2, 1)
        x_seasonal = x - x_trend
        return x_seasonal, x_trend
```

As you can see, the implementation is quite simple and can be used in other models, as we will see with DLinear. Now, let's explain the second contribution: the Attention (Autocorrelation) Mechanism.

Attention (Autocorrelation) Mechanism

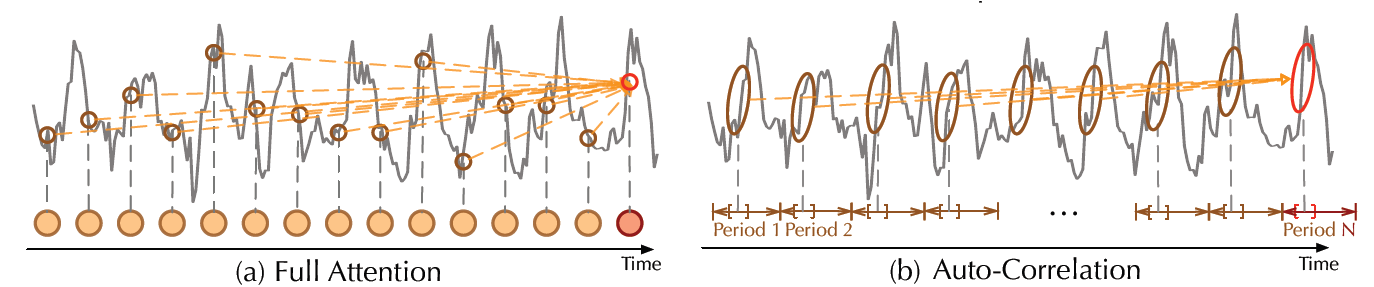

*Vanilla self attention vs Autocorrelation mechanism, from the paper*

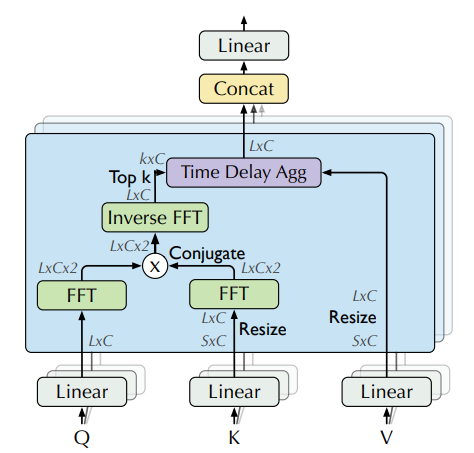

In addition to the decomposition layer, Autoformer employs a novel auto-correlation mechanism which replaces the self-attention seamlessly. In the vanilla Time Series Transformer, attention weights are computed in the time domain and point-wise aggregated. On the other hand, as can be seen in the figure above, Autoformer computes them in the frequency domain (using the fast Fourier transform) and aggregates them by time delay.

In the following sections, we will dive into these topics in detail and explain them with code examples.

Frequency Domain Attention

*Attention weights computation in frequency domain using FFT, from the paper*

In theory, given a time lag $\tau$, autocorrelation for a single discrete variable $y$ is used to measure the "relationship" (Pearson correlation) between the variable's current value at time $t$ and its past value at time $t-\tau$:

$$\textrm{Autocorrelation}(\tau) = \textrm{Corr}(y_t, y_{t-\tau})$$

Using autocorrelation, Autoformer extracts frequency-based dependencies from the queries and keys, instead of the standard dot-product between them. You can think of it as a replacement for the $QK^T$ term in the self-attention.
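To make the definition concrete, here is a small NumPy sketch that computes the lag-$\tau$ autocorrelation as a Pearson correlation between the series and a shifted copy of itself. The signal is a synthetic hourly sine with a 24-step (daily) cycle, an illustrative assumption rather than the traffic data; the autocorrelation peaks at the lag equal to the period, which is exactly the kind of period-based dependency Autoformer tries to exploit.

```python
import numpy as np


def autocorrelation(y: np.ndarray, lag: int) -> float:
    """Pearson correlation between y_t and y_{t-lag}, for lag >= 1."""
    return float(np.corrcoef(y[lag:], y[:-lag])[0, 1])


# ten days of a toy hourly signal with a daily (24-step) cycle
t = np.arange(24 * 10)
y = np.sin(2 * np.pi * t / 24)

lags = np.arange(1, 48)
scores = np.array([autocorrelation(y, int(lag)) for lag in lags])
best_lag = int(lags[np.argmax(scores)])  # the lag with the strongest dependency
```

Half a period away (lag 12) the series is perfectly anti-correlated with itself, so the value there is close to -1.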

In practice, the autocorrelation of the queries and keys for all lags is calculated at once by FFT. By doing so, the autocorrelation mechanism achieves $O(L \log L)$ time complexity (where $L$ is the input time length), similar to Informer's ProbSparse attention. Note that the theory behind computing autocorrelation using FFT is based on the Wiener–Khinchin theorem, which is outside the scope of this blog post.

Now, we are ready to see the code in PyTorch:
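The FFT shortcut can be checked numerically. The NumPy sketch below computes the circular cross-correlation $R(\tau) = \sum_t x_{(t+\tau) \bmod L}\, y_t$ twice: once directly for every lag in $O(L^2)$, and once by multiplying the spectrum of one signal with the conjugate spectrum of the other and transforming back in $O(L \log L)$. With `x == y` this is the (unnormalized) autocorrelation, the case covered by the Wiener–Khinchin theorem. Passing `n=L` to `irfft` matters: without it, an odd-length input would come back one sample shorter.

```python
import numpy as np


def cross_correlation_direct(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """O(L^2): R(tau) = sum_t x[(t + tau) mod L] * y[t], for every lag tau."""
    L = len(x)
    return np.array([np.dot(np.roll(x, -tau), y) for tau in range(L)])


def cross_correlation_fft(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """O(L log L): the same circular cross-correlation computed via the FFT."""
    L = len(x)
    # multiply by the conjugate spectrum, then transform back; n=L keeps odd lengths intact
    return np.fft.irfft(np.fft.rfft(x) * np.conj(np.fft.rfft(y)), n=L)


rng = np.random.default_rng(0)
q, k = rng.normal(size=97), rng.normal(size=97)  # odd length on purpose
r = cross_correlation_fft(q, k)  # one value per lag, shape (97,)
```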

```python
import torch


def autocorrelation(query_states, key_states):
    """
    Computes autocorrelation(Q,K) using `torch.fft`.
    Think about it as a replacement for the QK^T in the self-attention.

    Assumption: states are resized to same shape of [batch_size, time_length, embedding_dim].
    """
    time_length = query_states.size(1)
    query_states_fft = torch.fft.rfft(query_states, dim=1)
    key_states_fft = torch.fft.rfft(key_states, dim=1)
    attn_weights = query_states_fft * torch.conj(key_states_fft)
    # passing n restores the original time length, also when it is odd
    attn_weights = torch.fft.irfft(attn_weights, n=time_length, dim=1)  # Autocorrelation(Q,K)
    return attn_weights
```

Quite simple! 😎 Please be aware that this is only a partial implementation of autocorrelation(Q,K), and the full implementation can be found in 🤗 Transformers.

Next, we will see how to aggregate our attn_weights with the values by time delay, a process termed Time Delay Aggregation.

Time Delay Aggregation

Let's consider the autocorrelations (referred to as attn_weights) as $\textrm{R}_{Q,K}$. The question arises: how do we aggregate $\textrm{R}_{Q,K}(\tau_1), \textrm{R}_{Q,K}(\tau_2), \ldots, \textrm{R}_{Q,K}(\tau_k)$ with $\mathcal{V}$? In the standard self-attention mechanism, this aggregation is accomplished through a dot-product. However, in Autoformer, we employ a different approach. First, we align $\mathcal{V}$ by calculating its value for each time delay $\tau_1, \tau_2, \ldots, \tau_k$, which is also known as Rolling. Subsequently, we conduct element-wise multiplication between the aligned $\mathcal{V}$ and the autocorrelations. In the provided figure, you can observe the left side showcasing the rolling of $\mathcal{V}$ by time delay, while the right side illustrates the element-wise multiplication with the autocorrelations.

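It can be summarized with the following equations:

$$\tau_1, \tau_2, \ldots, \tau_k = \textrm{arg Top-k}(\textrm{R}_{Q,K}(\tau))$$

$$\hat{\textrm{R}}_{Q,K}(\tau_1), \hat{\textrm{R}}_{Q,K}(\tau_2), \ldots, \hat{\textrm{R}}_{Q,K}(\tau_k) = \textrm{Softmax}(\textrm{R}_{Q,K}(\tau_1), \textrm{R}_{Q,K}(\tau_2), \ldots, \textrm{R}_{Q,K}(\tau_k))$$

$$\textrm{Autocorrelation-Attention} = \sum_{i=1}^{k} \textrm{Roll}(\mathcal{V}, \tau_i) \cdot \hat{\textrm{R}}_{Q,K}(\tau_i)$$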

And that's it! Note that $k$ is controlled by a hyperparameter called autocorrelation_factor (similar to sampling_factor in Informer): the code below uses $k = \lfloor \textrm{autocorrelation\_factor} \cdot \ln L \rfloor$. Softmax is applied to the selected autocorrelations before the multiplication.

Now, we are ready to see the final code:
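As a toy illustration of the rolling-and-weighting procedure described above, the NumPy sketch below handles a single channel with no batch or heads: it computes the autocorrelation for every lag via the FFT, keeps the top-k lags, turns their scores into softmax weights, and sums the correspondingly rolled copies of the values. The function name `time_delay_aggregation_1d` and the direct `top_k` argument are illustrative choices, not the 🤗 Transformers API. On a signal with a 24-step cycle, the four strongest lags over a 96-step window are the multiples of 24, so every rolled copy lines up with the original and the output reproduces the input.

```python
import numpy as np


def time_delay_aggregation_1d(q: np.ndarray, k: np.ndarray, v: np.ndarray, top_k: int):
    """Toy single-channel version of Autoformer-style time delay aggregation."""
    L = len(q)
    # R_{Q,K}(tau) for every lag at once, via the FFT
    r = np.fft.irfft(np.fft.rfft(q) * np.conj(np.fft.rfft(k)), n=L)
    # arg Top-k over the lags
    delays = np.argsort(r)[::-1][:top_k]
    # softmax over the selected autocorrelations (shifted by the max for stability)
    weights = np.exp(r[delays] - r[delays].max())
    weights /= weights.sum()
    # Roll(V, tau) moves v[t + tau] to position t; then weight and sum
    out = np.zeros(L)
    for tau, w in zip(delays, weights):
        out += w * np.roll(v, -tau)
    return out, delays, weights


# four days of a toy hourly signal with a daily cycle
L = 96
x = np.sin(2 * np.pi * np.arange(L) / 24)
out, delays, weights = time_delay_aggregation_1d(x, x, x, top_k=4)
```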

```python
import math

import torch


def time_delay_aggregation(attn_weights, value_states, autocorrelation_factor=2):
    """
    Computes aggregation as value_states.roll(delay) * top_k_autocorrelations(delay).
    The final result is the autocorrelation-attention output.
    Think about it as a replacement of the dot-product between attn_weights and value states.

    The autocorrelation_factor is used to find top k autocorrelations delays.
    Assumption: value_states and attn_weights shape: [batch_size, time_length, embedding_dim]
    """
    bsz, num_heads, tgt_len, channel = ...  # taken from the surrounding attention module (elided)
    time_length = value_states.size(1)
    autocorrelations = attn_weights.view(bsz, num_heads, tgt_len, channel)

    # find top k autocorrelations delays
    top_k = int(autocorrelation_factor * math.log(time_length))
    autocorrelations_mean = torch.mean(autocorrelations, dim=(1, -1))  # bsz x tgt_len
    # pick delays shared across the batch, keeping each sample's autocorrelation at those delays
    _, top_k_delays = torch.topk(torch.mean(autocorrelations_mean, dim=0), top_k)
    top_k_autocorrelations = torch.stack(
        [autocorrelations_mean[:, top_k_delays[i]] for i in range(top_k)], dim=-1
    )  # bsz x top_k
    top_k_autocorrelations = torch.softmax(top_k_autocorrelations, dim=-1)

    # compute aggregation: value_states.roll(delay) * top_k_autocorrelations(delay)
    delays_agg = torch.zeros_like(value_states).float()
    for i in range(top_k):
        value_states_roll_delay = value_states.roll(shifts=-int(top_k_delays[i]), dims=1)
        top_k_at_delay = top_k_autocorrelations[:, i]
        # broadcast the per-sample weight over heads, time steps and channels
        top_k_resized = top_k_at_delay.view(-1, 1, 1).repeat(num_heads, tgt_len, channel)
        delays_agg += value_states_roll_delay * top_k_resized

    attn_output = delays_agg.contiguous()
    return attn_output
```

We did it! The Autoformer model is now available in the 🤗 Transformers library, and simply called AutoformerModel.

Our strategy with this model is to show the performance of the univariate Transformer models in comparison to the DLinear model, which is inherently univariate, as will be shown next. We will also present the results from two multivariate Transformer models trained on the same data.

DLinear – Under The Hood

Actually, DLinear is conceptually simple: it's just a fully connected layer combined with Autoformer's DecompositionLayer.

It uses the DecompositionLayer above to decompose the input time series into the residual (the seasonality) and trend parts. In the forward pass, each part is passed through its own linear layer, which projects the signal to an appropriate prediction_length-sized output. The final output is the sum of the two corresponding outputs in the point-forecasting model:

```python
def forward(self, context):
    seasonal, trend = self.decomposition(context)
    seasonal_output = self.linear_seasonal(seasonal)
    trend_output = self.linear_trend(trend)
    return seasonal_output + trend_output
```
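For a single univariate series, the whole point-forecasting model fits in a few lines of NumPy. The sketch below is a hypothetical stand-in, not the GluonTS implementation: `moving_average_decompose` mirrors the DecompositionLayer above for one channel (edge replication followed by a box filter), and `DLinearSketch` holds one weight matrix per component mapping `context_length` inputs to `prediction_length` outputs. The kernel size of 25 and the random initialization are illustrative assumptions, and no training is shown.

```python
import numpy as np


def moving_average_decompose(x: np.ndarray, kernel_size: int):
    """Single-channel analogue of DecompositionLayer: returns (seasonal, trend)."""
    assert kernel_size % 2 == 1, "an odd kernel keeps the output length equal to the input"
    pad = (kernel_size - 1) // 2
    # replicate the first/last value, as the PyTorch layer does
    padded = np.concatenate([np.repeat(x[:1], pad), x, np.repeat(x[-1:], pad)])
    # a 'valid' box-filter convolution matches AvgPool1d(stride=1, padding=0)
    trend = np.convolve(padded, np.ones(kernel_size) / kernel_size, mode="valid")
    return x - trend, trend


class DLinearSketch:
    """Hypothetical NumPy point-forecast DLinear: one linear map per component."""

    def __init__(self, context_length: int, prediction_length: int, kernel_size: int = 25, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.kernel_size = kernel_size
        # each weight matrix maps context_length inputs to prediction_length outputs
        self.w_seasonal = rng.normal(scale=0.1, size=(prediction_length, context_length))
        self.w_trend = rng.normal(scale=0.1, size=(prediction_length, context_length))
        self.b_seasonal = np.zeros(prediction_length)
        self.b_trend = np.zeros(prediction_length)

    def forward(self, context: np.ndarray) -> np.ndarray:
        seasonal, trend = moving_average_decompose(context, self.kernel_size)
        seasonal_output = self.w_seasonal @ seasonal + self.b_seasonal
        trend_output = self.w_trend @ trend + self.b_trend
        return seasonal_output + trend_output


model = DLinearSketch(context_length=48, prediction_length=24)
forecast = model.forward(np.sin(2 * np.pi * np.arange(48) / 24))  # shape (24,)
```

Since seasonal + trend reconstructs the context exactly, two identical weight matrices would collapse the model into a single linear layer; keeping them separate is what lets DLinear treat the smooth and the oscillating parts differently.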

In the probabilistic setting, one can project the context-length arrays to prediction_length * hidden dimensions via the linear_seasonal and linear_trend layers. The resulting outputs are added and reshaped to (prediction_length, hidden). Finally, a probabilistic head maps the latent representations of size hidden to the parameters of some distribution.
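The shape bookkeeping of that probabilistic variant can be sketched in NumPy for one series. Everything here is an illustrative assumption: the weight names, the random values, and especially the choice of a Normal distribution whose scale is made positive with a softplus; the head used by the GluonTS estimator may differ. `hidden = 2` mirrors the `hidden_dimension=2` used later in the benchmark.

```python
import numpy as np


def softplus(x: np.ndarray) -> np.ndarray:
    """Numerically stable log(1 + exp(x)); keeps the scale strictly positive."""
    return np.maximum(x, 0.0) + np.log1p(np.exp(-np.abs(x)))


def gaussian_head(seasonal, trend, params, prediction_length, hidden):
    """Hypothetical probabilistic DLinear head for a single series.

    seasonal, trend: arrays of shape (context_length,)
    params: weight arrays (names and shapes are illustrative assumptions)
    Returns (loc, scale), each of shape (prediction_length,).
    """
    # project each component to prediction_length * hidden values and add them
    latent = params["w_seasonal"] @ seasonal + params["w_trend"] @ trend
    latent = latent.reshape(prediction_length, hidden)
    # map every hidden vector to the parameters of a Normal distribution
    loc = latent @ params["w_loc"]
    scale = softplus(latent @ params["w_scale"])
    return loc, scale


rng = np.random.default_rng(0)
context_length, prediction_length, hidden = 48, 24, 2
params = {
    "w_seasonal": rng.normal(scale=0.1, size=(prediction_length * hidden, context_length)),
    "w_trend": rng.normal(scale=0.1, size=(prediction_length * hidden, context_length)),
    "w_loc": rng.normal(size=hidden),
    "w_scale": rng.normal(size=hidden),
}
loc, scale = gaussian_head(rng.normal(size=context_length), rng.normal(size=context_length),
                           params, prediction_length, hidden)
samples = rng.normal(loc, scale, size=(100, prediction_length))  # 100 sample paths
```

Drawing many samples per time step in this way is what yields the (number of samples, prediction length) forecasts used for evaluation.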

In our benchmark, we use the implementation of DLinear from GluonTS.

Example: Traffic Dataset

We want to show empirically the performance of Transformer-based models in the library by benchmarking on the traffic dataset, a dataset with 862 time series. We will train a shared model on each of the individual time series (i.e. univariate setting).

Each time series represents the occupancy value of a sensor and is in the range [0, 1]. We will keep the following hyperparameters fixed for all the models:

```python
prediction_length = 24
context_length = prediction_length * 2
batch_size = 128
num_batches_per_epoch = 100
epochs = 50
scaling = "std"
```

The transformer models are all relatively small with:

```python
encoder_layers = 2
decoder_layers = 2
d_model = 16
```
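A quick back-of-the-envelope check of what these fixed settings imply, assuming one optimizer step per batch: every model trains for 100 × 50 = 5000 steps, which matches the `global step 5000` reported in the DLinear training log further down, and each forecast sees 48 hours (two days) of history, less than one weekly cycle of 168 hourly steps.

```python
prediction_length = 24  # hours ahead
context_length = prediction_length * 2
batch_size = 128
num_batches_per_epoch = 100
epochs = 50

total_training_steps = num_batches_per_epoch * epochs  # one optimizer step per batch
windows_seen = total_training_steps * batch_size  # training windows sampled in total
context_in_days = context_length / 24  # how much history each forecast sees
hours_per_week = 7 * 24
```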

Instead of showing how to train a model using Autoformer, one can just replace the model in the previous two blog posts (TimeSeriesTransformer and Informer) with the new Autoformer model and train it on the traffic dataset. In order not to repeat ourselves, we have already trained the models and pushed them to the HuggingFace Hub. We will use those models for evaluation.

Load Dataset

Let's first install the necessary libraries:

```
!pip install -q transformers datasets evaluate accelerate "gluonts[torch]" ujson tqdm
```

The traffic dataset, used by Lai et al. (2017), contains the San Francisco Traffic data. It contains 862 hourly time series showing the road occupancy rates in the range [0, 1] on the San Francisco Bay Area freeways from 2015 to 2016.

```python
from gluonts.dataset.repository.datasets import get_dataset

dataset = get_dataset("traffic")
freq = dataset.metadata.freq
prediction_length = dataset.metadata.prediction_length
```

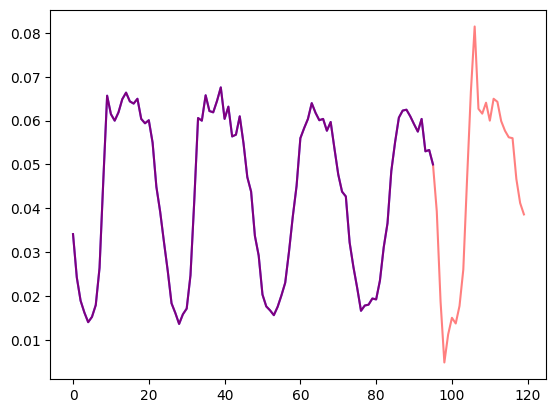

Let's visualize a time series in the dataset and plot the train/test split:

```python
import matplotlib.pyplot as plt

train_example = next(iter(dataset.train))
test_example = next(iter(dataset.test))

num_of_samples = 4 * prediction_length

figure, axes = plt.subplots()
axes.plot(train_example["target"][-num_of_samples:], color="blue")
axes.plot(
    test_example["target"][-num_of_samples - prediction_length :],
    color="red",
    alpha=0.5,
)

plt.show()
```

Let's define the train/test splits:

```python
train_dataset = dataset.train
test_dataset = dataset.test
```

Define Transformations

Next, we define the transformations for the data, in particular for the creation of the time features (based on the dataset or universal ones).

We define a Chain of transformations from GluonTS (which is a bit comparable to torchvision.transforms.Compose for images). It allows us to combine several transformations into a single pipeline.

The transformations below are annotated with comments to explain what they do. At a high level, we will iterate over the individual time series of our dataset and add/remove fields or features:

```python
from transformers import PretrainedConfig
from gluonts.time_feature import time_features_from_frequency_str
from gluonts.dataset.field_names import FieldName
from gluonts.transform import (
    AddAgeFeature,
    AddObservedValuesIndicator,
    AddTimeFeatures,
    AsNumpyArray,
    Chain,
    ExpectedNumInstanceSampler,
    RemoveFields,
    SelectFields,
    SetField,
    TestSplitSampler,
    Transformation,
    ValidationSplitSampler,
    VstackFeatures,
    RenameFields,
)


def create_transformation(freq: str, config: PretrainedConfig) -> Transformation:
    # collect the fields the config does not use, so they can be removed
    remove_field_names = []
    if config.num_static_real_features == 0:
        remove_field_names.append(FieldName.FEAT_STATIC_REAL)
    if config.num_dynamic_real_features == 0:
        remove_field_names.append(FieldName.FEAT_DYNAMIC_REAL)
    if config.num_static_categorical_features == 0:
        remove_field_names.append(FieldName.FEAT_STATIC_CAT)

    return Chain(
        # step 1: remove static/dynamic fields that are not used
        [RemoveFields(field_names=remove_field_names)]
        # step 2: convert the static features (if any) to NumPy arrays
        + (
            [
                AsNumpyArray(
                    field=FieldName.FEAT_STATIC_CAT,
                    expected_ndim=1,
                    dtype=int,
                )
            ]
            if config.num_static_categorical_features > 0
            else []
        )
        + (
            [
                AsNumpyArray(
                    field=FieldName.FEAT_STATIC_REAL,
                    expected_ndim=1,
                )
            ]
            if config.num_static_real_features > 0
            else []
        )
        + [
            AsNumpyArray(
                field=FieldName.TARGET,
                # the target has an extra dimension in the multivariate case
                expected_ndim=1 if config.input_size == 1 else 2,
            ),
            # step 3: fill NaNs in the target and record a mask of observed values
            AddObservedValuesIndicator(
                target_field=FieldName.TARGET,
                output_field=FieldName.OBSERVED_VALUES,
            ),
            # step 4: add time features derived from the frequency of the dataset
            AddTimeFeatures(
                start_field=FieldName.START,
                target_field=FieldName.TARGET,
                output_field=FieldName.FEAT_TIME,
                time_features=time_features_from_frequency_str(freq),
                pred_length=config.prediction_length,
            ),
            # step 5: add an "age" feature, a log-scaled running counter along the series
            AddAgeFeature(
                target_field=FieldName.TARGET,
                output_field=FieldName.FEAT_AGE,
                pred_length=config.prediction_length,
                log_scale=True,
            ),
            # step 6: stack all temporal features into the FEAT_TIME field
            VstackFeatures(
                output_field=FieldName.FEAT_TIME,
                input_fields=[FieldName.FEAT_TIME, FieldName.FEAT_AGE]
                + (
                    [FieldName.FEAT_DYNAMIC_REAL]
                    if config.num_dynamic_real_features > 0
                    else []
                ),
            ),
            # step 7: rename the fields to the names the 🤗 Transformers model expects
            RenameFields(
                mapping={
                    FieldName.FEAT_STATIC_CAT: "static_categorical_features",
                    FieldName.FEAT_STATIC_REAL: "static_real_features",
                    FieldName.FEAT_TIME: "time_features",
                    FieldName.TARGET: "values",
                    FieldName.OBSERVED_VALUES: "observed_mask",
                }
            ),
        ]
    )
```
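Conceptually, Chain threads each dataset entry (a dict of fields) through the listed transformations in order. The toy, pure-Python sketch below mimics that idea with two hypothetical helpers, `remove_fields` and `rename_fields`, standing in for RemoveFields and RenameFields. It is not GluonTS's implementation, and the field names are plain strings chosen for illustration; generators keep the pipeline lazy, so entries are transformed one at a time as they are consumed.

```python
from typing import Callable, Dict, Iterable, Iterator, List

# a "transformation" here is any function from a stream of entries to a stream of entries
Entry = Dict[str, object]
Transform = Callable[[Iterable[Entry]], Iterator[Entry]]


def chain(transforms: List[Transform]) -> Transform:
    """Apply the transforms left to right, lazily, like a Compose for streams."""
    def apply(data: Iterable[Entry]) -> Iterator[Entry]:
        for transform in transforms:
            data = transform(data)
        return iter(data)
    return apply


def remove_fields(names: List[str]) -> Transform:
    """Hypothetical helper: drop the listed keys from every entry."""
    def transform(data):
        for entry in data:
            yield {key: value for key, value in entry.items() if key not in names}
    return transform


def rename_fields(mapping: Dict[str, str]) -> Transform:
    """Hypothetical helper: rename keys according to mapping, keep the rest."""
    def transform(data):
        for entry in data:
            yield {mapping.get(key, key): value for key, value in entry.items()}
    return transform


pipeline = chain([remove_fields(["feat_static_real"]), rename_fields({"target": "values"})])
out = list(pipeline([{"start": "2015-01-01 00:00", "target": [0.1, 0.2], "feat_static_real": [1.0]}]))
```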

Define InstanceSplitter

For training/validation/testing we next create an InstanceSplitter, which is used to sample windows from the dataset (as, remember, we can't pass the entire history of values to the model due to time and memory constraints).

The instance splitter samples random context_length sized and subsequent prediction_length sized windows from the data, and appends a past_ or future_ key to any temporal keys in time_series_fields for the respective windows. The instance splitter can be configured into three different modes:

- mode="train": Here we sample the context and prediction length windows randomly from the dataset given to it (the training dataset).
- mode="validation": Here we sample the very last context length window and prediction window from the dataset given to it (for the back-testing or validation likelihood calculations).
- mode="test": Here we sample the very last context length window only (for the prediction use case).

```python
from gluonts.transform import InstanceSplitter
from gluonts.transform.sampler import InstanceSampler
from typing import Optional


def create_instance_splitter(
    config: PretrainedConfig,
    mode: str,
    train_sampler: Optional[InstanceSampler] = None,
    validation_sampler: Optional[InstanceSampler] = None,
) -> Transformation:
    assert mode in ["train", "validation", "test"]

    instance_sampler = {
        "train": train_sampler
        or ExpectedNumInstanceSampler(
            num_instances=1.0, min_future=config.prediction_length
        ),
        "validation": validation_sampler
        or ValidationSplitSampler(min_future=config.prediction_length),
        "test": TestSplitSampler(),
    }[mode]

    return InstanceSplitter(
        target_field="values",
        is_pad_field=FieldName.IS_PAD,
        start_field=FieldName.START,
        forecast_start_field=FieldName.FORECAST_START,
        instance_sampler=instance_sampler,
        past_length=config.context_length + max(config.lags_sequence),
        future_length=config.prediction_length,
        time_series_fields=["time_features", "observed_mask"],
    )
```
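To see what the three modes select, here is a simplified, hypothetical stand-in for a single series, written in plain NumPy. It ignores padding, lag features (the real splitter above uses a longer `past_length = context_length + max(config.lags_sequence)`) and the exact sampling distribution of the GluonTS samplers, and it assumes the series has at least `context_length + prediction_length` values.

```python
import numpy as np


def split_window(target, context_length, prediction_length, mode, rng=None):
    """Hypothetical, simplified stand-in for the three InstanceSplitter modes.

    Returns (past, future); future is None in "test" mode.
    """
    n = len(target)
    if mode == "train":
        rng = np.random.default_rng() if rng is None else rng
        # random split point t such that both windows fit inside the series
        t = int(rng.integers(context_length, n - prediction_length + 1))
    elif mode == "validation":
        t = n - prediction_length  # last context window followed by the last prediction window
    elif mode == "test":
        t = n  # last context window only; the future is unknown
    else:
        raise ValueError(f"unknown mode: {mode}")
    past = target[t - context_length : t]
    future = None if mode == "test" else target[t : t + prediction_length]
    return past, future


series = np.arange(100)
past, future = split_window(series, context_length=48, prediction_length=24, mode="validation")
```

In "validation" mode the prediction window is the final 24 values of the series, which is why the back-testing evaluation later compares forecasts against `ts["target"][-prediction_length:]`.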

Create PyTorch DataLoaders

Next, it's time to create PyTorch DataLoaders, which allow us to have batches of (input, output) pairs, or in other words (past_values, future_values).

```python
from typing import Iterable

import torch
from gluonts.itertools import Cyclic, Cached
from gluonts.dataset.loader import as_stacked_batches


def create_train_dataloader(
    config: PretrainedConfig,
    freq,
    data,
    batch_size: int,
    num_batches_per_epoch: int,
    shuffle_buffer_length: Optional[int] = None,
    cache_data: bool = True,
    **kwargs,
) -> Iterable:
    PREDICTION_INPUT_NAMES = [
        "past_time_features",
        "past_values",
        "past_observed_mask",
        "future_time_features",
    ]
    if config.num_static_categorical_features > 0:
        PREDICTION_INPUT_NAMES.append("static_categorical_features")

    if config.num_static_real_features > 0:
        PREDICTION_INPUT_NAMES.append("static_real_features")

    TRAINING_INPUT_NAMES = PREDICTION_INPUT_NAMES + [
        "future_values",
        "future_observed_mask",
    ]

    transformation = create_transformation(freq, config)
    transformed_data = transformation.apply(data, is_train=True)
    if cache_data:
        transformed_data = Cached(transformed_data)

    # the training splitter samples random windows from an endless (cyclic)
    # stream over the transformed time series
    instance_splitter = create_instance_splitter(config, "train")
    stream = Cyclic(transformed_data).stream()
    training_instances = instance_splitter.apply(stream)

    return as_stacked_batches(
        training_instances,
        batch_size=batch_size,
        shuffle_buffer_length=shuffle_buffer_length,
        field_names=TRAINING_INPUT_NAMES,
        output_type=torch.tensor,
        num_batches_per_epoch=num_batches_per_epoch,
    )


def create_backtest_dataloader(
    config: PretrainedConfig,
    freq,
    data,
    batch_size: int,
    **kwargs,
):
    PREDICTION_INPUT_NAMES = [
        "past_time_features",
        "past_values",
        "past_observed_mask",
        "future_time_features",
    ]
    if config.num_static_categorical_features > 0:
        PREDICTION_INPUT_NAMES.append("static_categorical_features")

    if config.num_static_real_features > 0:
        PREDICTION_INPUT_NAMES.append("static_real_features")

    transformation = create_transformation(freq, config)
    transformed_data = transformation.apply(data)

    # the validation splitter takes the last context window before the final prediction window
    instance_sampler = create_instance_splitter(config, "validation")

    # we apply the transformations in train mode
    testing_instances = instance_sampler.apply(transformed_data, is_train=True)

    return as_stacked_batches(
        testing_instances,
        batch_size=batch_size,
        output_type=torch.tensor,
        field_names=PREDICTION_INPUT_NAMES,
    )


def create_test_dataloader(
    config: PretrainedConfig,
    freq,
    data,
    batch_size: int,
    **kwargs,
):
    PREDICTION_INPUT_NAMES = [
        "past_time_features",
        "past_values",
        "past_observed_mask",
        "future_time_features",
    ]
    if config.num_static_categorical_features > 0:
        PREDICTION_INPUT_NAMES.append("static_categorical_features")

    if config.num_static_real_features > 0:
        PREDICTION_INPUT_NAMES.append("static_real_features")

    transformation = create_transformation(freq, config)
    transformed_data = transformation.apply(data, is_train=False)

    # the test splitter takes only the last context window of each series
    instance_sampler = create_instance_splitter(config, "test")
    testing_instances = instance_sampler.apply(transformed_data, is_train=False)

    return as_stacked_batches(
        testing_instances,
        batch_size=batch_size,
        output_type=torch.tensor,
        field_names=PREDICTION_INPUT_NAMES,
    )
```

Evaluate on Autoformer

We have already pre-trained an Autoformer model on this dataset, so we can just fetch the model and evaluate it on the test set:

```python
from transformers import AutoformerConfig, AutoformerForPrediction

config = AutoformerConfig.from_pretrained("kashif/autoformer-traffic-hourly")
model = AutoformerForPrediction.from_pretrained("kashif/autoformer-traffic-hourly")

test_dataloader = create_backtest_dataloader(
    config=config,
    freq=freq,
    data=test_dataset,
    batch_size=64,
)
```

At inference time, we will use the model's generate() method to predict prediction_length steps into the future from the last context window preceding the final prediction_length values of each time series in the test set.

```python
from accelerate import Accelerator

accelerator = Accelerator()
device = accelerator.device

model.to(device)
model.eval()

forecasts_ = []

for batch in test_dataloader:
    outputs = model.generate(
        static_categorical_features=batch["static_categorical_features"].to(device)
        if config.num_static_categorical_features > 0
        else None,
        static_real_features=batch["static_real_features"].to(device)
        if config.num_static_real_features > 0
        else None,
        past_time_features=batch["past_time_features"].to(device),
        past_values=batch["past_values"].to(device),
        future_time_features=batch["future_time_features"].to(device),
        past_observed_mask=batch["past_observed_mask"].to(device),
    )
    forecasts_.append(outputs.sequences.cpu().numpy())
```

The model outputs a tensor of shape (batch_size, number of samples, prediction length, input_size); in the univariate case shown here, the last dimension is absent.

In this case, we get 100 possible values for the next 24 hours for each of the time series in the test dataloader batch, which, if you recall from above, is 64:

```python
forecasts_[0].shape

>>> (64, 100, 24)
```

We'll stack them vertically to get forecasts for all time series in the test dataset. We have 7 rolling windows in the test set, which is why we end up with a total of 7 * 862 = 6034 predictions:

```python
import numpy as np

forecasts = np.vstack(forecasts_)
print(forecasts.shape)

>>> (6034, 100, 24)
```

We can evaluate the resulting forecast with respect to the ground truth out-of-sample values present in the test set. For that, we'll use the 🤗 Evaluate library, which includes the MASE metric.

We calculate the metric for each time series in the dataset and return the average:
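For reference, here is a minimal NumPy sketch of the standard MASE definition, which we assume the Evaluate metric follows: the mean absolute error of the forecast divided by the in-sample mean absolute error of the seasonal-naive forecast $\hat{y}_t = y_{t-m}$ on the training part of the series. A value below 1 means the forecast beats that naive baseline on average. The evaluation loop passes `periodicity=get_seasonality(freq)`, presumably 24 (one day) for this hourly data.

```python
import numpy as np


def mase(predictions, references, training, periodicity=1):
    """Mean Absolute Scaled Error for one series (standard definition).

    The forecast MAE is divided by the in-sample MAE of the seasonal-naive
    forecast y_hat_t = y_{t - periodicity} on the training part of the series.
    """
    predictions = np.asarray(predictions, dtype=float)
    references = np.asarray(references, dtype=float)
    training = np.asarray(training, dtype=float)
    forecast_mae = np.mean(np.abs(references - predictions))
    naive_mae = np.mean(np.abs(training[periodicity:] - training[:-periodicity]))
    return forecast_mae / naive_mae


# the training part changes by 1 per step, so the one-step naive MAE is 1
print(mase(predictions=[6.0, 9.0], references=[6.0, 7.0], training=[1, 2, 3, 4, 5]))  # 1.0
```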

```python
from tqdm.autonotebook import tqdm
from evaluate import load
from gluonts.time_feature import get_seasonality

mase_metric = load("evaluate-metric/mase")

forecast_median = np.median(forecasts, 1)

mase_metrics = []
for item_id, ts in enumerate(tqdm(test_dataset)):
    training_data = ts["target"][:-prediction_length]
    ground_truth = ts["target"][-prediction_length:]
    mase = mase_metric.compute(
        predictions=forecast_median[item_id],
        references=np.array(ground_truth),
        training=np.array(training_data),
        periodicity=get_seasonality(freq))
    mase_metrics.append(mase["mase"])
```

So the result for the Autoformer model is:

```python
print(f"Autoformer univariate MASE: {np.mean(mase_metrics):.3f}")

>>> Autoformer univariate MASE: 0.910
```

To plot the prediction for any time series with respect to the ground truth test data, we define the following helper:

```python
import matplotlib.dates as mdates
import pandas as pd

test_ds = list(test_dataset)


def plot(ts_index):
    fig, ax = plt.subplots()

    index = pd.period_range(
        start=test_ds[ts_index][FieldName.START],
        periods=len(test_ds[ts_index][FieldName.TARGET]),
        freq=test_ds[ts_index][FieldName.START].freq,
    ).to_timestamp()

    ax.plot(
        index[-5 * prediction_length :],
        test_ds[ts_index]["target"][-5 * prediction_length :],
        label="actual",
    )

    plt.plot(
        index[-prediction_length:],
        np.median(forecasts[ts_index], axis=0),
        label="median",
    )

    plt.gcf().autofmt_xdate()
    plt.legend(loc="best")
    plt.show()
```

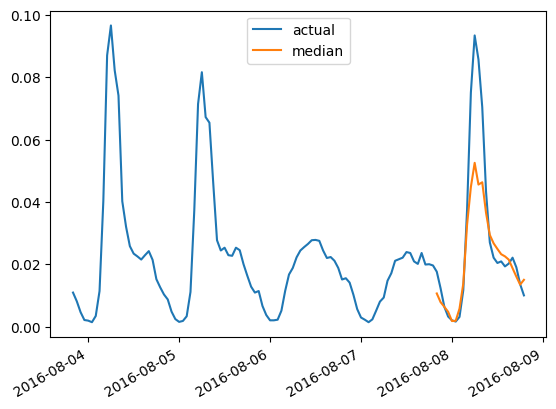

For example, for the time series in the test set with index 4:

```python
plot(4)
```

Evaluate on DLinear

A probabilistic DLinear is implemented in gluonts, and thus we can train and evaluate it relatively quickly here:

```python
from gluonts.torch.model.d_linear.estimator import DLinearEstimator

estimator = DLinearEstimator(
    prediction_length=dataset.metadata.prediction_length,
    context_length=dataset.metadata.prediction_length * 2,
    scaling=scaling,
    hidden_dimension=2,
    batch_size=batch_size,
    num_batches_per_epoch=num_batches_per_epoch,
    trainer_kwargs=dict(max_epochs=epochs),
)
```

Train the model:

```python
predictor = estimator.train(
    training_data=train_dataset,
    cache_data=True,
    shuffle_buffer_length=1024,
)
```

```
>>> INFO:pytorch_lightning.callbacks.model_summary:
  | Name | Type | Params
---------------------------------------
0 | model | DLinearModel | 4.7 K
---------------------------------------
4.7 K Trainable params
0 Non-trainable params
4.7 K Total params
0.019 Total estimated model params size (MB)

Training: 0it [00:00, ?it/s]
...
INFO:pytorch_lightning.utilities.rank_zero:Epoch 49, global step 5000: 'train_loss' was not in top 1
INFO:pytorch_lightning.utilities.rank_zero:`Trainer.fit` stopped: `max_epochs=50` reached.
```

And evaluate it on the test set:

```python
from gluonts.evaluation import make_evaluation_predictions, Evaluator

forecast_it, ts_it = make_evaluation_predictions(
    dataset=dataset.test,
    predictor=predictor,
)

d_linear_forecasts = list(forecast_it)
d_linear_tss = list(ts_it)

evaluator = Evaluator()

agg_metrics, _ = evaluator(iter(d_linear_tss), iter(d_linear_forecasts))
```

So the result for the DLinear model is:

```python
dlinear_mase = agg_metrics["MASE"]
print(f"DLinear MASE: {dlinear_mase:.3f}")

>>> DLinear MASE: 0.965
```

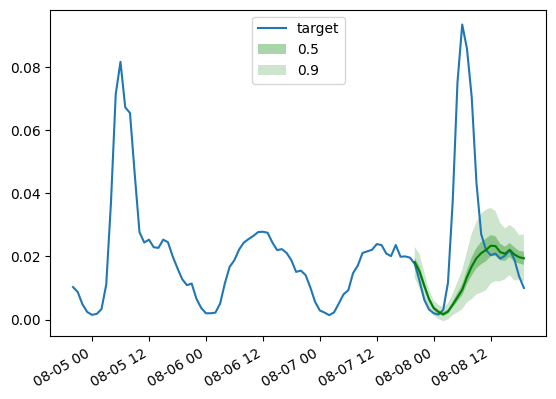

As before, we plot the predictions from our trained DLinear model via this helper:

```python
def plot_gluonts(index):
    plt.plot(d_linear_tss[index][-4 * dataset.metadata.prediction_length:].to_timestamp(), label="target")
    d_linear_forecasts[index].plot(show_label=True, color='g')
    plt.legend()
    plt.gcf().autofmt_xdate()
    plt.show()


plot_gluonts(4)
```

The traffic dataset has a distributional shift in the sensor patterns between weekdays and weekends. So what is happening here? Since the DLinear model has no capacity to incorporate covariates, in particular any date-time features, the context window we give it does not contain enough information to figure out whether the prediction is for a weekend or a weekday. Thus, the model will predict the more common of the patterns, namely the weekdays, leading to poorer performance on weekends. Of course, given a larger context window, a linear model will figure out the weekly pattern, but perhaps there is a monthly or quarterly pattern in the data, which would require ever larger contexts.
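The ambiguity can be reproduced with a tiny synthetic example. The sketch below builds two weeks of noise-free hourly data from two made-up daily profiles, one for weekdays and one for weekends (purely illustrative, not fitted to the traffic data). With the 48-hour context used in the benchmark, the window preceding a Wednesday (Monday + Tuesday) is identical to the window preceding a Saturday (Thursday + Friday), yet the days to be predicted differ. Any model that sees only the context must give both days the same forecast, while a model that also receives a day-of-week covariate can tell them apart.

```python
import numpy as np

HOURS = 24
hours = np.arange(HOURS)
# hypothetical occupancy profiles in [0, 1]: a morning peak on weekdays, a small midday bump on weekends
weekday_profile = 0.2 + 0.6 * np.exp(-((hours - 8) ** 2) / 8.0)
weekend_profile = 0.2 + 0.2 * np.exp(-((hours - 14) ** 2) / 18.0)


def day_profile(day_of_week: int) -> np.ndarray:
    # 0 = Monday, ..., 5 = Saturday, 6 = Sunday
    return weekend_profile if day_of_week >= 5 else weekday_profile


# two weeks of noise-free hourly data starting on a Monday
series = np.concatenate([day_profile(day % 7) for day in range(14)])
context_length, prediction_length = 2 * HOURS, HOURS


def window(forecast_day: int):
    """(context, target) when forecasting the day with index forecast_day."""
    t = forecast_day * HOURS
    return series[t - context_length : t], series[t : t + prediction_length]


ctx_wed, target_wed = window(2)  # forecast Wednesday from Monday + Tuesday
ctx_sat, target_sat = window(5)  # forecast Saturday from Thursday + Friday
print(np.array_equal(ctx_wed, ctx_sat), np.allclose(target_wed, target_sat))  # True False
```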

Conclusion

How do Transformer-based models compare against the above linear baseline? The test set MASE metrics from the different models we have are below:

| Dataset | Transformer (uni.) | Transformer (mv.) | Informer (uni.) | Informer (mv.) | Autoformer (uni.) | DLinear |
|---|---|---|---|---|---|---|
| Traffic | 0.876 | 1.046 | 0.924 | 1.131 | 0.910 | 0.965 |

As one can observe, the vanilla Transformer, which we introduced last year, gets the best results here. Secondly, multivariate models are typically worse than the univariate ones, the reason being the difficulty in estimating the cross-series correlations/relationships. The additional variance added by the estimates often harms the resulting forecasts, or the model learns spurious correlations. Recent papers like CrossFormer (ICLR 23) and CARD try to address this problem in Transformer models.

Multivariate models usually perform well when trained on large amounts of data. However, when compared to univariate models, especially on smaller open datasets, the univariate models tend to provide better metrics. When the linear model is compared with equivalent-sized univariate transformers, or in fact any other neural univariate model, the neural models will typically perform better.

To summarize, Transformers are definitely far from being outdated when it comes to time-series forecasting!

Yet the availability of large-scale datasets is crucial for maximizing their potential. Unlike in CV and NLP, the field of time series lacks publicly accessible large-scale datasets. Most existing pre-trained models for time series are trained on small sample sizes from archives like UCR and UEA, which contain only a few thousand or even a few hundred samples. Although these benchmark datasets have been instrumental in the progress of the time series community, their limited sample sizes and lack of generality pose challenges for pre-training deep learning models.

Therefore, the development of large-scale, generic time series datasets (like ImageNet in CV) is of the utmost importance. Creating such datasets will greatly facilitate further research on pre-trained models specifically designed for time series analysis, and it will improve the applicability of pre-trained models in time series forecasting.

Acknowledgements

We express our appreciation to Lysandre Debut and Pedro Cuenca for their insightful comments and help during this project ❤️.