TL;DR: We need better ways of evaluating bias in text-to-image models

Introduction

Text-to-image (TTI) generation is all the rage these days, and thousands of TTI models are being uploaded to the Hugging Face Hub. Each modality is potentially susceptible to separate sources of bias, which raises the question: how do we uncover biases in these models? In this blog post, we share our thoughts on sources of bias in TTI systems as well as tools and potential solutions to address them, showcasing both our own projects and those from the broader community.

Values and bias encoded in image generations

There is a very close relationship between bias and values, particularly when these are embedded in the language or images used to train and query a given text-to-image model; this phenomenon heavily influences the outputs we see in the generated images. Although this relationship is known in the broader AI research field and considerable efforts are underway to address it, the complexity of trying to represent the evolving nature of a given population’s values in a single model still persists. This presents an enduring ethical challenge to uncover and address adequately.

For example, if the training data are mainly in English, they probably convey rather Western values. As a result, we get stereotypical representations of different or distant cultures. This phenomenon is noticeable when we compare the results of ERNIE ViLG (left) and Stable Diffusion v 2.1 (right) for the same prompt, “a house in Beijing”:

Sources of Bias

Recent years have seen much important research on bias detection in AI systems with single modalities, in both Natural Language Processing (Abid et al., 2021) and Computer Vision (Buolamwini and Gebru, 2018). To the extent that ML models are constructed by people, biases are present in all ML models (and, indeed, technology in general). This can manifest itself as an over- or under-representation of certain visual characteristics in images (e.g., all images of office workers having ties), or as the presence of cultural and geographical stereotypes (e.g., all images of brides wearing white dresses and veils, as opposed to more representative images of brides around the world, such as brides with red saris). Given that AI systems are being widely deployed in sociotechnical contexts across different sectors and tools (e.g. Firefly, Shutterstock), they are particularly likely to amplify existing societal biases and inequities. We aim to provide a non-exhaustive list of bias sources below:

Biases in training data: Popular multimodal datasets such as LAION-5B for text-to-image, MS-COCO for image captioning, and VQA v2.0 for visual question answering have been found to contain numerous biases and harmful associations (Zhao et al 2017, Prabhu and Birhane, 2021, Hirota et al, 2022), which can percolate into the models trained on these datasets. For example, initial results from the Hugging Face Stable Bias project show a lack of diversity in image generations, as well as a perpetuation of common stereotypes of cultures and identity groups. Comparing Dall-E 2 generations of CEOs (right) and managers (left), we can see that both are lacking diversity:

Biases in pre-training data filtering: There is often some form of filtering carried out on datasets before they are used for training models; this introduces different biases. For instance, in their blog post, the creators of Dall-E 2 found that filtering training data can actually amplify biases – they hypothesize that this may be due to the existing dataset bias towards representing women in more sexualized contexts or due to inherent biases of the filtering approaches that they use.

Biases in inference: The CLIP model used for guiding the training and inference of text-to-image models like Stable Diffusion and Dall-E 2 has a number of well-documented biases surrounding age, gender, and race or ethnicity, for instance treating images that had been labeled as white, middle-aged, and male as the default. This can impact the generations of models that use it for prompt encoding, for instance by interpreting unspecified or underspecified gender and identity groups to signify white and male.

Biases in the models’ latent space: Initial work has been done on exploring the latent space of the model and guiding image generation along different axes such as gender to make generations more representative (see the images below). However, more work is necessary to better understand the structure of the latent space of different types of diffusion models and the factors that can influence the bias reflected in generated images.

Biases in post-hoc filtering: Many image generation models come with built-in safety filters that aim to flag problematic content. However, the extent to which these filters work and how robust they are to different kinds of content has yet to be determined – for instance, efforts to red-team the Stable Diffusion safety filter have shown that it mostly identifies sexual content, and fails to flag other types of violent, gory or disturbing content.

Detecting Bias

Most of the issues that we describe above cannot be solved with a single solution – indeed, bias is a complex topic that cannot be meaningfully addressed with technology alone. Bias is deeply intertwined with the broader social, cultural, and historical context in which it exists. Therefore, addressing bias in AI systems is not only a technological challenge but also a socio-technical one that demands multidisciplinary attention. However, a combination of approaches including tools, red-teaming and evaluations can help glean important insights that can inform both model creators and downstream users about the biases contained in TTI and other multimodal models.

We present some of these approaches below:

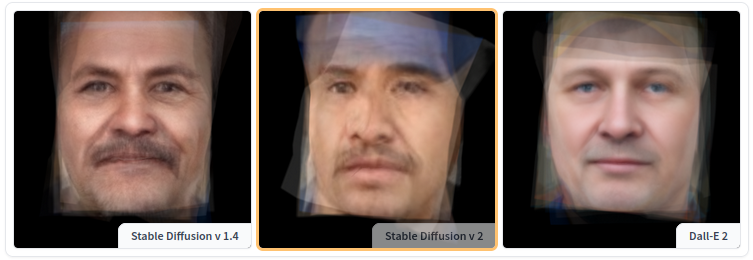

Tools for exploring bias: As part of the Stable Bias project, we created a series of tools to explore and compare the visual manifestation of biases in different text-to-image models. For instance, the Average Diffusion Faces tool lets you compare the average representations for different professions and different models – like for ‘janitor’, shown below, for Stable Diffusion v1.4, v2, and Dall-E 2:

Other tools, such as the Face Clustering tool and the Colorfulness Occupation Explorer tool, allow users to explore patterns in the data and identify similarities and stereotypes without ascribing labels or identity characteristics. In fact, it is important to remember that generated images of individuals are not actual people but artificial creations, so they should not be treated as if they were real humans. Depending on the context and the use case, tools like these can be used both for storytelling and for auditing.
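To make the idea of an "average representation" concrete, here is a minimal sketch of pixel-wise averaging over a set of generations for one prompt. It is not the implementation behind the Average Diffusion Faces tool: the `average_image` helper and the toy 2x2 grayscale inputs are assumptions made for illustration, and a real pipeline would most likely also detect and align faces before averaging.

```python
from typing import List

# Assumption: a grayscale image is a list of rows of pixel intensities.
Image = List[List[float]]


def average_image(images: List[Image]) -> Image:
    """Return the pixel-wise mean of equally sized grayscale images."""
    if not images:
        raise ValueError("need at least one image")
    height, width = len(images[0]), len(images[0][0])
    for img in images:
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError("all images must share the same dimensions")
    n = len(images)
    return [
        [sum(img[y][x] for img in images) / n for x in range(width)]
        for y in range(height)
    ]


# Toy 2x2 "generations" for a single prompt; real inputs would be decoded images.
generations = [
    [[0.0, 100.0], [50.0, 200.0]],
    [[100.0, 100.0], [150.0, 0.0]],
]
mean_face = average_image(generations)
```

Averaging many generations washes out individual variation and leaves whatever the model produces most consistently for that prompt, which is why it can surface a model's default depiction of a profession.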

Red-teaming: ‘Red-teaming’ consists of stress testing AI models for potential vulnerabilities, biases, and weaknesses by prompting them and analyzing results. While it has been employed in practice for evaluating language models (including the upcoming Generative AI Red Teaming event at DEFCON, which we are participating in), there are no established and systematic ways of red-teaming AI models and it remains relatively ad hoc. In fact, there are so many potential types of failure modes and biases in AI models that it is hard to anticipate them all, and the stochastic nature of generative models makes it hard to reproduce failure cases. Red-teaming gives actionable insights into model limitations and can be used to add guardrails and document model limitations. There are currently no red-teaming benchmarks or leaderboards, highlighting the need for more work on open source red-teaming resources. Anthropic’s red-teaming dataset is the only open source resource of red-teaming prompts, but it is limited to English natural language text.
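One practical response to the reproducibility problem is to log the random seed alongside every prompt, so that a failure case can be replayed later. The sketch below illustrates that bookkeeping only. Everything in it is hypothetical: `toy_generate` is a stand-in that returns a text tag instead of an image, and `Finding` and `red_team` are names invented for this example rather than part of any existing red-teaming library.

```python
import random
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Finding:
    """A failure case, stored with everything needed to replay it."""
    prompt: str
    seed: int
    output: str


def toy_generate(prompt: str, seed: int) -> str:
    """Hypothetical stand-in for a TTI model: returns a text tag, not an image."""
    rng = random.Random(f"{prompt}|{seed}")  # same prompt and seed, same output
    return rng.choice(["ok", "ok", "ok", "flagged"])


def red_team(
    generate: Callable[[str, int], str],
    prompts: Iterable[str],
    seeds: Iterable[int],
    is_failure: Callable[[str], bool],
) -> List[Finding]:
    """Try every (prompt, seed) pair and record the outputs judged as failures."""
    seed_list = list(seeds)
    findings = []
    for prompt in prompts:
        for seed in seed_list:
            output = generate(prompt, seed)
            if is_failure(output):
                findings.append(Finding(prompt, seed, output))
    return findings


prompts = ["a photo of a CEO", "a photo of a nurse"]
findings = red_team(toy_generate, prompts, range(20), lambda out: out == "flagged")

# Replaying each finding with its recorded seed reproduces the same output.
replayed = [toy_generate(f.prompt, f.seed) for f in findings]
```

With a real model, the seed would be passed to the sampler, and `is_failure` would typically be a human judgment or a classifier rather than a string comparison.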

Evaluating and documenting bias: At Hugging Face, we are big proponents of model cards and other forms of documentation (e.g., datasheets, READMEs, etc). In the case of text-to-image (and other multimodal) models, the results of explorations made using explorer tools and red-teaming efforts such as the ones described above can be shared alongside model checkpoints and weights. One of the issues is that we currently don’t have standard benchmarks or datasets for measuring the bias in multimodal models (and indeed, in text-to-image generation systems specifically), but as more work in this direction is carried out by the community, different bias metrics can be reported in parallel in model documentation.
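As an illustration of what reporting a bias metric in model documentation could look like, the sketch below scores how evenly the generations for each prompt spread over a set of unlabeled visual clusters, then renders the scores as a table that could be pasted into a model card. This is an assumption-laden example, not a standard benchmark: the normalized-entropy metric, the cluster assignments, and the helper names are all chosen for illustration.

```python
import math
from collections import Counter
from typing import Dict, List


def normalized_entropy(assignments: List[int], num_clusters: int) -> float:
    """Shannon entropy of cluster assignments, scaled to the range [0, 1]."""
    if num_clusters < 2 or not assignments:
        raise ValueError("need at least two clusters and one assignment")
    total = len(assignments)
    shares = [count / total for count in Counter(assignments).values()]
    entropy = sum(p * math.log2(1 / p) for p in shares)
    return entropy / math.log2(num_clusters)


def to_markdown_table(scores: Dict[str, float]) -> str:
    """Render per-prompt scores as a small markdown table."""
    lines = ["| Prompt | Cluster diversity (0-1) |", "| --- | --- |"]
    for prompt, score in scores.items():
        lines.append(f"| {prompt} | {score:.2f} |")
    return "\n".join(lines)


# Hypothetical cluster IDs for four generations per prompt (no identity labels).
assignments_by_prompt = {
    "CEO": [0, 0, 0, 0],      # every image lands in the same cluster
    "manager": [0, 1, 2, 3],  # images spread evenly over four clusters
}
scores = {
    prompt: normalized_entropy(ids, num_clusters=4)
    for prompt, ids in assignments_by_prompt.items()
}
table = to_markdown_table(scores)
```

A score of 0 means every generation fell into one cluster, and a score of 1 means the generations were spread evenly across all clusters. Working with cluster IDs rather than named groups keeps the metric in line with exploring patterns without ascribing identity characteristics.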

Values and Bias

All of the approaches listed above are part of detecting and understanding the biases embedded in image generation models. But how do we actively engage with them?

One approach is to develop new models that represent society as we wish it to be. This means creating AI systems that don’t just mimic the patterns in our data, but actively promote more equitable and fair perspectives. However, this approach raises a crucial question: whose values are we programming into these models? Values differ across cultures, societies, and individuals, making it a complex task to define what an “ideal” society should look like within an AI model. The question is indeed complex and multifaceted. If we avoid reproducing existing societal biases in our AI models, we are faced with the challenge of defining an “ideal” representation of society. Society is not a static entity, but a dynamic and ever-changing construct. Should AI models, then, adapt to the changes in societal norms and values over time? If so, how do we ensure that these shifts genuinely represent all groups within society, especially those often underrepresented?

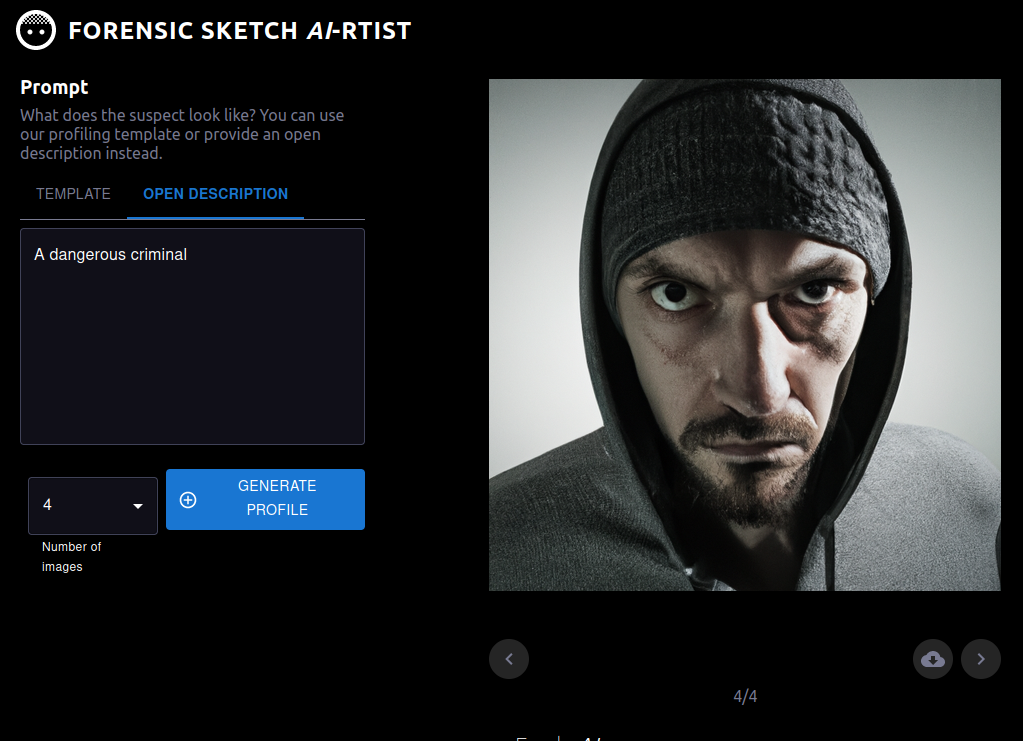

Also, as we have mentioned in a previous newsletter, there is no single way to develop machine learning systems, and any of the steps in the development and deployment process can present opportunities to tackle bias, from who is included at the start, to defining the task, to curating the dataset, training the model, and more. This also applies to multimodal models and the ways in which they are ultimately deployed or productionized in society, since the consequences of bias in multimodal models will depend on their downstream use. For instance, if a model is used in a human-in-the-loop setting for graphic design (such as those created by RunwayML), the user has numerous occasions to detect and correct bias, for instance by changing the prompt or the generation options. However, if a model is used as part of a tool to help forensic artists create police sketches of potential suspects (see image below), then the stakes are much higher, since this can reinforce stereotypes and racial biases in a high-risk setting.

Other updates

We are also continuing work on other fronts of ethics and society, including:

- Content moderation:

- We made a major update to our Content Policy. It has been almost a year since our last update and the Hugging Face community has grown massively since then, so we felt it was time. In this update we emphasize consent as one of Hugging Face’s core values. To read more about our thought process, check out the announcement blog.

- AI Accountability Policy:

- We submitted a response to the NTIA request for comments on AI accountability policy, where we stressed the importance of documentation and transparency mechanisms, as well as the necessity of leveraging open collaboration and promoting access to external stakeholders. You can find a summary of our response and a link to the full document in our blog post!

Closing Remarks

As you can tell from our discussion above, the issue of detecting and engaging with bias and values in multimodal models, such as text-to-image models, is very much an open question. Apart from the work cited above, we are also engaging with the community at large on these issues – we recently co-led a CRAFT session at the FAccT conference on the topic and are continuing to pursue data- and model-centric research on it. One particular direction we are excited to explore is a more in-depth probing of the values instilled in text-to-image models and what they represent (stay tuned!).