Open-source LLMs like Falcon, (Open-)LLaMA, X-Gen, StarCoder or RedPajama have come a long way in recent months and can compete with closed-source models like ChatGPT or GPT-4 for certain use cases. However, deploying these models in an efficient and optimized way still presents a challenge.

In this blog post, we will show you how to deploy open-source LLMs to Hugging Face Inference Endpoints, our managed SaaS solution that makes it easy to deploy models. Additionally, we will teach you how to stream responses and test the performance of our endpoints. So let's get started!

- How to deploy Falcon 40B instruct

- Test the LLM endpoint

- Stream responses in Javascript and Python

Before we start, let’s refresh our knowledge about Inference Endpoints.

What’s Hugging Face Inference Endpoints

Hugging Face Inference Endpoints offers an easy and secure way to deploy Machine Learning models for use in production. Inference Endpoints empower developers and data scientists alike to create AI applications without managing infrastructure: simplifying the deployment process to a few clicks, including handling large volumes of requests with autoscaling, reducing infrastructure costs with scale-to-zero, and offering advanced security.

Here are some of the most important features for LLM deployment:

- Easy Deployment: Deploy models as production-ready APIs with just a few clicks, eliminating the need to manage infrastructure or MLOps.

- Cost Efficiency: Benefit from automatic scale-to-zero capability, reducing costs by scaling down the infrastructure when the endpoint is not in use, while paying based on the uptime of the endpoint.

- Enterprise Security: Deploy models in secure offline endpoints accessible only through direct VPC connections, backed by SOC2 Type 2 certification, and offering BAA and GDPR data processing agreements for enhanced data security and compliance.

- LLM Optimization: Optimized for LLMs, enabling high throughput with Paged Attention and low latency through custom transformers code and Flash Attention, powered by Text Generation Inference.

- Comprehensive Task Support: Out-of-the-box support for 🤗 Transformers, Sentence-Transformers, and Diffusers tasks and models, and easy customization to enable advanced tasks like speaker diarization or any Machine Learning task and library.

You can get started with Inference Endpoints at: https://ui.endpoints.huggingface.co/

1. How to deploy Falcon 40B instruct

To get started, you need to be logged in with a User or Organization account with a payment method on file (you can add one here), then access Inference Endpoints at https://ui.endpoints.huggingface.co

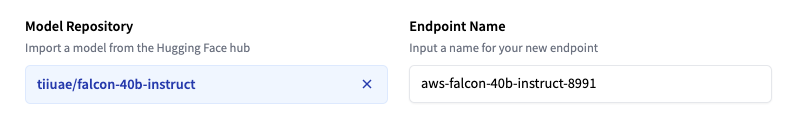

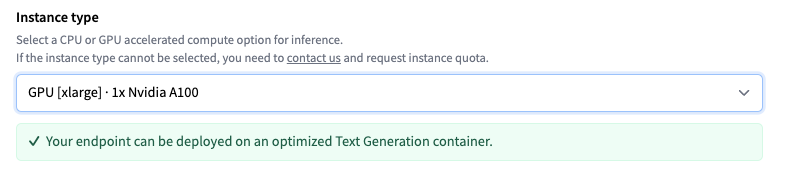

Then, click on “New endpoint”. Select the repository, the cloud, and the region, adjust the instance and security settings, and deploy the model, in our case tiiuae/falcon-40b-instruct.

Inference Endpoints suggests an instance type based on the model size, which should be big enough to run the model. Here, that is 4x NVIDIA T4 GPUs. To get the best performance for the LLM, change the instance to GPU [xlarge] · 1x NVIDIA A100.

Note: If the instance type cannot be selected, you need to contact us and request an instance quota.
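The instance has to be large enough because, at minimum, the model weights must fit into GPU memory. A common back-of-the-envelope estimate is parameter count times bytes per parameter. The sketch below is our illustration of that rule of thumb, not the sizing logic Inference Endpoints uses; it assumes a nominal 40 billion parameters for Falcon 40B and counts only the weights, ignoring the KV cache, activations, and runtime overhead.

```python
# Rough estimate of the GPU memory needed just to hold a model's weights.
# Assumption: memory ≈ number of parameters × bytes per parameter.
# KV cache, activations, and framework overhead are NOT included.

BYTES_PER_PARAM = {
    "float32": 4,
    "bfloat16": 2,
    "float16": 2,
    "int8": 1,
}


def weight_memory_gb(num_params: float, dtype: str = "bfloat16") -> float:
    """Return the approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    falcon_40b = 40e9  # nominal parameter count implied by the model name
    for dtype in ("bfloat16", "int8"):
        print(f"{dtype}: ~{weight_memory_gb(falcon_40b, dtype):.0f} GB")
```

At 16-bit precision this gives roughly 80 GB for the weights alone (about 40 GB at 8 bits), which illustrates why a model of this size needs a correspondingly large GPU configuration.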

You can then deploy your model with a click on “Create Endpoint”. After 10 minutes, the Endpoint should be online and available to serve requests.

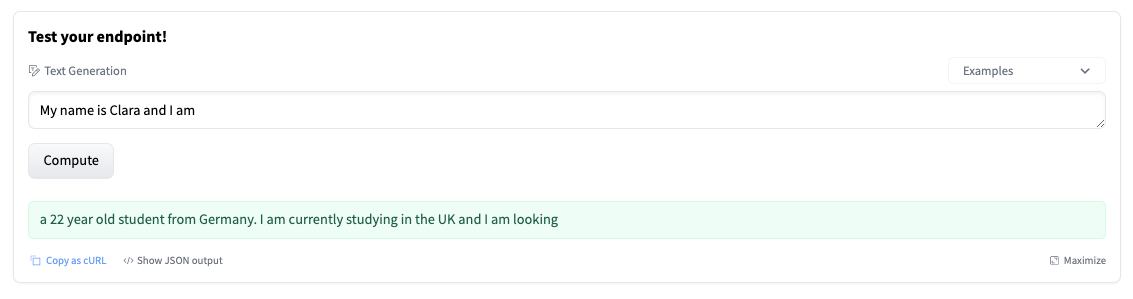
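Because the Endpoint takes several minutes to come online, a script that creates or resumes it may want to wait before sending requests. The following is a minimal, library-agnostic polling sketch: `get_status` is a hypothetical callable you would supply yourself (for example, one that looks up your Endpoint's status), and treating the string `"running"` as the ready state is an assumption.

```python
import time
from typing import Callable


def wait_until_running(
    get_status: Callable[[], str],
    timeout_s: float = 15 * 60,
    poll_interval_s: float = 30.0,
) -> bool:
    """Poll get_status() until it returns "running" or the timeout expires.

    get_status is a hypothetical, user-supplied callable; "running" is an
    assumed status string. Returns True if ready, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        if get_status() == "running":
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval_s)


if __name__ == "__main__":
    # Simulated status sequence standing in for a real status lookup.
    fake_statuses = iter(["pending", "initializing", "running"])
    print(wait_until_running(lambda: next(fake_statuses), timeout_s=5, poll_interval_s=0))
```

Injecting the status lookup as a callable keeps the waiting logic independent of any particular client library and easy to test offline.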

2. Test the LLM endpoint

The Endpoint overview provides access to the Inference Widget, which can be used to manually send requests. This allows you to quickly test your Endpoint with different inputs and share it with team members. Those widgets do not support parameters; in this case, this results in a “short” generation.

The widget also generates a cURL command you can use. Just add your hf_xxx token and test.

```bash
curl https://j4xhm53fxl9ussm8.us-east-1.aws.endpoints.huggingface.cloud \
  -X POST \
  -d '{"inputs":"Once upon a time,"}' \
  -H "Authorization: Bearer hf_xxx" \
  -H "Content-Type: application/json"
```
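The same request can be assembled in Python using only the standard library. This is a sketch that mirrors the cURL command: a JSON body with an `inputs` field, optionally a `parameters` field, and bearer-token authentication. The function name `build_generation_request` is our own, and the URL and token are placeholders; the request is built but not sent, and the shape of the response is not assumed here.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def build_generation_request(
    endpoint_url: str,
    hf_token: str,
    prompt: str,
    parameters: Optional[Dict[str, Any]] = None,
) -> urllib.request.Request:
    """Build (but do not send) the same POST request as the cURL command."""
    payload: Dict[str, Any] = {"inputs": prompt}
    if parameters:
        # Generation parameters go under the "parameters" key of the payload.
        payload["parameters"] = parameters
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {hf_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_generation_request(
        "https://YOUR_ENDPOINT.endpoints.huggingface.cloud",  # placeholder URL
        "hf_YOUR_TOKEN",  # placeholder token
        "Once upon a time,",
        {"max_new_tokens": 50},
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To send it to a real endpoint:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.read().decode("utf-8"))
```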

You can use different parameters to control the generation, defining them in the parameters attribute of the payload. As of today, the following parameters are supported:

- temperature: Controls randomness in the model. Lower values make the model more deterministic and higher values make it more random. Default value is 1.0.

- max_new_tokens: The maximum number of tokens to generate. Default value is 20, max value is 512.

- repetition_penalty: Controls the likelihood of repetition. Default is null.

- seed: The seed to use for random generation. Default is null.

- stop: A list of tokens to stop the generation. The generation will stop when one of the tokens is generated.

- top_k: The number of highest-probability vocabulary tokens to keep for top-k filtering. Default value is null, which disables top-k filtering.

- top_p: The cumulative probability of the highest-probability vocabulary tokens to keep for nucleus sampling. Default is null.

- do_sample: Whether or not to use sampling; use greedy decoding otherwise. Default value is false.

- best_of: Generate best_of sequences and return the one with the highest token logprobs. Default is null.

- details: Whether or not to return details about the generation. Default value is false.

- return_full_text: Whether to return the full text or only the generated part. Default value is false.

- truncate: Whether or not to truncate the input to the maximum length of the model. Default value is true.

- typical_p: The typical probability of a token. Default value is null.

- watermark: The watermark to use for the generation. Default value is false.
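To build intuition for how temperature, top_k, and top_p interact, here is a toy sketch on a made-up set of logits. It applies temperature scaling and a softmax, p_i = exp(z_i / T) / Σ_j exp(z_j / T), then top-k filtering, then nucleus (top-p) filtering, and renormalizes. This is an illustration of the general techniques under our own assumptions (including the order in which filters are applied), not Text Generation Inference's actual implementation.

```python
import math
from typing import Dict, Optional


def next_token_distribution(
    logits: Dict[str, float],
    temperature: float = 1.0,
    top_k: Optional[int] = None,
    top_p: Optional[float] = None,
) -> Dict[str, float]:
    """Toy illustration of how sampling parameters reshape a distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0")
    # 1. Temperature: divide logits by T, then softmax (max-subtracted for stability).
    #    Lower T sharpens the distribution; higher T flattens it.
    scaled = {tok: z / temperature for tok, z in logits.items()}
    m = max(scaled.values())
    exps = {tok: math.exp(v - m) for tok, v in scaled.items()}
    total = sum(exps.values())
    probs = sorted(((tok, e / total) for tok, e in exps.items()),
                   key=lambda kv: kv[1], reverse=True)
    # 2. top_k: keep only the k most likely tokens.
    if top_k is not None:
        probs = probs[:top_k]
    # 3. top_p: keep the smallest prefix whose cumulative probability reaches top_p.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, p in probs:
            kept.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break
        probs = kept
    # Renormalize the surviving tokens so they sum to 1.
    norm = sum(p for _, p in probs)
    return {tok: p / norm for tok, p in probs}


if __name__ == "__main__":
    logits = {"castle": 3.0, "museum": 2.0, "market": 1.0, "zoo": 0.0}  # made-up values
    print(next_token_distribution(logits, temperature=0.5, top_k=2))
    print(next_token_distribution(logits, temperature=2.0, top_p=0.9))
```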

3. Stream responses in Javascript and Python

Requesting and generating text with LLMs can be a time-consuming and iterative process. A great way to improve the user experience is to stream tokens to the user as they are generated. Below are two examples of how to stream tokens using Python and JavaScript. For Python, we are going to use the InferenceClient from the huggingface_hub library, and for JavaScript, the HuggingFace.js library.
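Both clients hide the transport details, but it can help to see what a token stream looks like on the wire. The sketch below assumes the server-sent-events style used by Text Generation Inference, where each event is a line beginning with `data:` followed by a JSON object that carries a `token` field; the sample lines are hand-written for illustration, not captured output, and `iter_stream_tokens` is our own helper name.

```python
import json
from typing import Any, Dict, Iterable, Iterator


def iter_stream_tokens(lines: Iterable[str]) -> Iterator[Dict[str, Any]]:
    """Yield the "token" object from each server-sent event line.

    Assumes TGI-style events of the form 'data:{"token": {...}, ...}';
    other lines (blank separators, comments) are skipped.
    """
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue
        event = json.loads(line[len("data:"):])
        yield event["token"]


if __name__ == "__main__":
    # Hand-written sample events for illustration only.
    sample = [
        'data:{"token": {"id": 1, "text": "Visit", "special": false}}',
        "",
        'data:{"token": {"id": 2, "text": " the castle", "special": false}}',
    ]
    for token in iter_stream_tokens(sample):
        print(token["text"], end="", flush=True)
    print()
```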

Streaming requests with Python

First, you need to install the huggingface_hub library:

```bash
pip install -U huggingface_hub
```

We can create an InferenceClient by providing our endpoint URL and credential, along with the hyperparameters we want to use:

```python
from huggingface_hub import InferenceClient

# Replace with your own endpoint URL and Hugging Face token
endpoint_url = "https://YOUR_ENDPOINT.endpoints.huggingface.cloud"
hf_token = "hf_YOUR_TOKEN"

# Client pointed at the Inference Endpoint
client = InferenceClient(endpoint_url, token=hf_token)

# Generation parameters
gen_kwargs = dict(
    max_new_tokens=512,
    top_k=30,
    top_p=0.9,
    temperature=0.2,
    repetition_penalty=1.02,
    stop_sequences=["\nUser:", "<|endoftext|>"],
)

prompt = "What can you do in Nuremberg, Germany? Give me 3 Suggestions"

stream = client.text_generation(prompt, stream=True, details=True, **gen_kwargs)

for r in stream:
    # Skip special tokens
    if r.token.special:
        continue
    # Stop if we encounter a stop sequence
    if r.token.text in gen_kwargs["stop_sequences"]:
        break
    # Print the generated token without a newline
    print(r.token.text, end="")
```

Replace the print call with yield or with a function you want to stream the tokens to.
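For example, the loop can be wrapped in a generator that yields token texts instead of printing them. The sketch below keeps the same skip and stop logic as the loop above; to exercise it offline it uses a hypothetical `fake_token` helper built from `SimpleNamespace`, which only imitates the `r.token.text` and `r.token.special` attributes the loop reads.

```python
from types import SimpleNamespace
from typing import Any, Iterable, Iterator, Sequence


def stream_text(stream: Iterable[Any], stop_sequences: Sequence[str]) -> Iterator[str]:
    """Yield token texts from a streamed response, mirroring the loop above."""
    for r in stream:
        if r.token.special:  # skip special tokens such as end-of-text markers
            continue
        if r.token.text in stop_sequences:  # stop when a stop token arrives
            break
        yield r.token.text


def fake_token(text: str, special: bool = False) -> SimpleNamespace:
    """Hypothetical stand-in for a streamed item, for offline testing only."""
    return SimpleNamespace(token=SimpleNamespace(text=text, special=special))


if __name__ == "__main__":
    fake_stream = [
        fake_token("Visit"),
        fake_token(" the"),
        fake_token(" castle"),
        fake_token("<|endoftext|>", special=True),
    ]
    print("".join(stream_text(fake_stream, ["\nUser:", "<|endoftext|>"])))
```

With a real endpoint, you would pass `client.text_generation(prompt, stream=True, details=True, **gen_kwargs)` and `gen_kwargs["stop_sequences"]` instead of the fake stream, and consume the generator wherever the tokens are needed.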

Streaming requests with JavaScript

First, you need to install the @huggingface/inference library:

```bash
npm install @huggingface/inference
```

We can create an HfInferenceEndpoint by providing our endpoint URL and credential, along with the hyperparameters we want to use:

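```javascript
import { HfInferenceEndpoint } from '@huggingface/inference'

// Replace with your own endpoint URL and Hugging Face token
const hf = new HfInferenceEndpoint('https://YOUR_ENDPOINT.endpoints.huggingface.cloud', 'hf_YOUR_TOKEN')

// Generation parameters (same values as in the Python example)
const gen_kwargs = {
  max_new_tokens: 512,
  top_k: 30,
  top_p: 0.9,
  temperature: 0.2,
  repetition_penalty: 1.02,
  stop_sequences: ['\nUser:', '<|endoftext|>'],
}

const prompt = 'What can you do in Nuremberg, Germany? Give me 3 Suggestions'

const stream = hf.textGenerationStream({ inputs: prompt, parameters: gen_kwargs })

for await (const r of stream) {
  // Skip special tokens
  if (r.token.special) {
    continue
  }
  // Stop if we encounter a stop sequence
  if (gen_kwargs['stop_sequences'].includes(r.token.text)) {
    break
  }
  // Write the generated token to stdout
  process.stdout.write(r.token.text)
}
```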

Replace the process.stdout call with yield or with a function you want to stream the tokens to.

Conclusion

In this blog post, we showed you how to deploy open-source LLMs using Hugging Face Inference Endpoints, how to control the text generation with advanced parameters, and how to stream responses to a Python or JavaScript client to improve the user experience. By using Hugging Face Inference Endpoints, you can deploy models as production-ready APIs with just a few clicks, reduce your costs with automatic scale-to-zero, and deploy models into secure offline endpoints backed by SOC2 Type 2 certification.

Thanks for reading! If you have any questions, feel free to contact me on Twitter or LinkedIn.