In this blog post, I’ll show you how I made Doodle Dash, a real-time ML-powered web game that runs completely in your browser (thanks to Transformers.js). The goal of this tutorial is to show you how easy it is to make your own ML-powered web game… just in time for the upcoming Open Source AI Game Jam (7-9 July 2023). Join the game jam if you haven’t already!


Overview

Before we start, let’s talk about what we’ll be creating. The game is inspired by Google’s Quick, Draw! game, where you’re given a word and a neural network has 20 seconds to guess what you’re drawing (repeated 6 times). In fact, we’ll be using their training data to train our own sketch detection model! Don’t you just love open source? 😍

In our version, you’ll have one minute to draw as many items as you can, one prompt at a time. If the model predicts the correct label, the canvas will be cleared and you’ll be given a new word. Keep doing this until the timer runs out! Since the game runs locally in your browser, we don’t have to worry about server latency at all. The model is able to make real-time predictions as you draw, to the tune of over 60 predictions a second… 🤯 WOW!

This tutorial is split into 3 sections: training the neural network, running it in the browser with Transformers.js, and game design.

1. Training the neural network

Training data

We’ll be training our model using a subset of Google’s Quick, Draw! dataset, which contains over 5 million drawings across 345 categories. Here are some samples from the dataset:

Model architecture

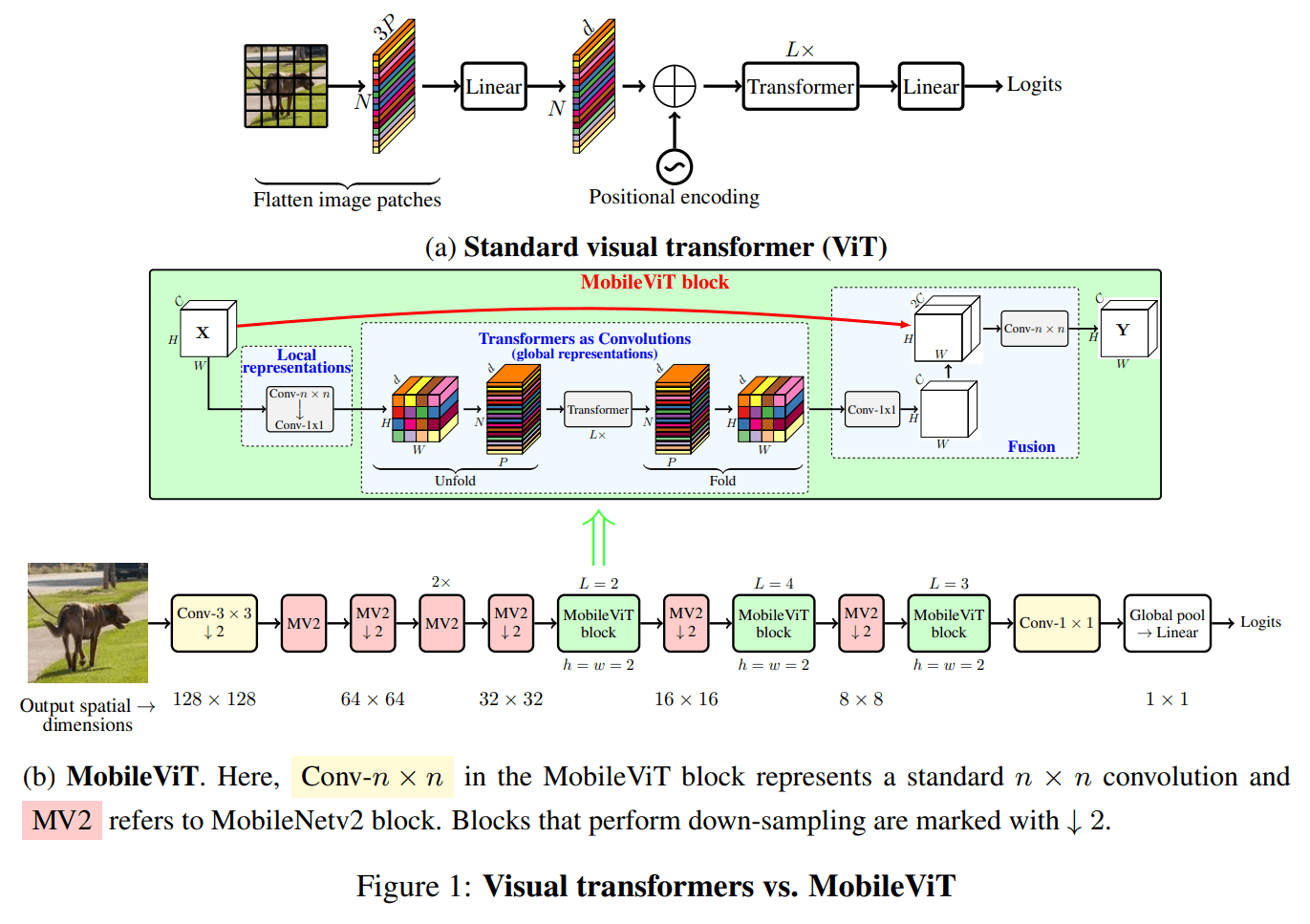

We’ll be finetuning apple/mobilevit-small, a lightweight and mobile-friendly Vision Transformer that has been pre-trained on ImageNet-1k. It has only 5.6M parameters (~20 MB file size), making it a perfect candidate for running in the browser! For more information, check out the MobileViT paper and the model architecture diagram.
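The ~20 MB figure follows from simple arithmetic: a weight file is roughly the parameter count times the number of bytes stored per parameter. A minimal sketch, assuming 32-bit floats (4 bytes) for the unquantized model and 1 byte per weight for a hypothetical 8-bit quantized variant, and ignoring file-format overhead:

```javascript
// Rough estimate of a model's weight file size from its parameter count.
// Ignores file-format overhead and any non-weight metadata.
function estimateSizeMB(numParams, bytesPerParam) {
  return (numParams * bytesPerParam) / 1e6; // decimal megabytes
}

const MOBILEVIT_SMALL_PARAMS = 5.6e6;

// fp32 weights take 4 bytes per parameter; 8-bit quantized weights take 1.
const fp32MB = estimateSizeMB(MOBILEVIT_SMALL_PARAMS, 4);
const int8MB = estimateSizeMB(MOBILEVIT_SMALL_PARAMS, 1);

console.log(`fp32: ~${fp32MB.toFixed(1)} MB, int8: ~${int8MB.toFixed(1)} MB`);
// fp32: ~22.4 MB, int8: ~5.6 MB
```

At 4 bytes per parameter this gives about 22.4 MB (roughly 21 MiB), which is in line with the ~20 MB estimate, given that 5.6M is itself a rounded figure.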

Finetuning

To keep this blog post (relatively) short, we’ve prepared a Colab notebook that shows you the exact steps we took to finetune apple/mobilevit-small on our dataset. At a high level, this involves:

- Loading the “Quick, Draw!” dataset.
- Transforming the dataset using a MobileViTImageProcessor.
- Defining our collate function and evaluation metric.
- Loading the pre-trained MobileViT model using MobileViTForImageClassification.from_pretrained.
- Training the model using the Trainer and TrainingArguments helper classes.
- Evaluating the model using 🤗 Evaluate.

NOTE: You can find our finetuned model on the Hugging Face Hub.
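The notebook handles evaluation in Python with 🤗 Evaluate. Assuming the evaluation metric is accuracy (a common choice for image classification, although the post doesn’t name the metric), the computation is simple: take the highest-scoring class (the argmax) for each example and compare it with the true label. Here it is sketched in JavaScript to match the rest of this post; the logits and labels are made up, and this is not the notebook’s code.

```javascript
// Index of the largest value in an array (argmax).
function argmax(values) {
  let best = 0;
  for (let i = 1; i < values.length; i++) {
    if (values[i] > values[best]) best = i;
  }
  return best;
}

// Accuracy: fraction of examples whose highest-scoring class matches the label.
function accuracy(logits, labels) {
  if (logits.length !== labels.length || labels.length === 0) {
    throw new Error("logits and labels must be non-empty and the same length");
  }
  let correct = 0;
  for (let i = 0; i < labels.length; i++) {
    if (argmax(logits[i]) === labels[i]) correct++;
  }
  return correct / labels.length;
}

// Three examples over three classes; the first two predictions are correct.
const logits = [
  [2.0, 0.1, -1.0],
  [0.3, 1.5, 0.2],
  [0.9, 0.1, 0.4],
];
const labels = [0, 1, 2];
console.log(accuracy(logits, labels)); // 0.6666666666666666
```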

2. Running in the browser with Transformers.js

What’s Transformers.js?

Transformers.js is a JavaScript library that lets you run 🤗 Transformers directly in your browser (no need for a server)! It’s designed to be functionally equivalent to the Python library, meaning you can run the same pre-trained models using a very similar API.

Behind the scenes, Transformers.js uses ONNX Runtime, so we need to convert our finetuned PyTorch model to ONNX.

Converting our model to ONNX

Fortunately, the 🤗 Optimum library makes it super simple to convert your finetuned model to ONNX! The easiest (and recommended) way is to:

- Clone the Transformers.js repository and install the necessary dependencies:

```bash
git clone https://github.com/xenova/transformers.js.git
cd transformers.js
pip install -r scripts/requirements.txt
```

- Run the conversion script (it uses Optimum under the hood):

```bash
python -m scripts.convert --model_id <model_id>
```

where <model_id> is the name of the model you want to convert (e.g., Xenova/quickdraw-mobilevit-small).

Setting up our project

Let’s start by scaffolding a simple React app using Vite:

```bash
npm create vite@latest doodle-dash -- --template react
```

Next, enter the project directory and install the necessary dependencies:

```bash
cd doodle-dash
npm install
npm install @xenova/transformers
```

You can then start the development server by running:

```bash
npm run dev
```

Running the model in the browser

Running machine learning models is computationally intensive, so it’s important to perform inference in a separate thread. This way we won’t block the main thread, which is used for rendering the UI and reacting to your drawing gestures 😉. The Web Workers API makes this super simple!

Create a new file (e.g., worker.js) in the src directory and add the following code:

```javascript
import { pipeline, RawImage } from "@xenova/transformers";

// Load the image-classification pipeline with our finetuned (unquantized) model.
const classifier = await pipeline("image-classification", 'Xenova/quickdraw-mobilevit-small', { quantized: false });

// Load a sample doodle to check that the model works.
const image = await RawImage.read('https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/blog/ml-web-games/skateboard.png');

// Convert the image to grayscale, then classify it.
const output = await classifier(image.grayscale());

console.log(output);
```
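As written, worker.js classifies a single test image when it loads. For the game, the worker also needs to respond to drawings sent from the main thread. Below is a minimal sketch of one way to structure that, assuming a hypothetical message shape ({ id, image } in, { id, status, output } out); createMessageHandler is an illustrative helper, not part of Transformers.js. The classifier is passed in as a function, so the handler can be exercised here with a stand-in instead of the real model.

```javascript
// Builds a 'message' event handler for a worker.
// classify: async (image) => [{ label, score }, ...]
// postMessage: function used to send replies back to the main thread
function createMessageHandler(classify, postMessage) {
  return async (event) => {
    const { id, image } = event.data;
    try {
      const output = await classify(image);
      postMessage({ id, status: "result", output });
    } catch (err) {
      // Report failures instead of letting the worker fail silently.
      postMessage({ id, status: "error", message: String(err) });
    }
  };
}

// Example with a stand-in classifier, so this runs outside a browser.
const messages = [];
const fakeClassify = async () => [{ label: "skateboard", score: 0.99 }];
const handle = createMessageHandler(fakeClassify, (msg) => messages.push(msg));

handle({ data: { id: 1, image: null } }).then(() => {
  console.log(messages);
});
```

Inside worker.js, this could be registered with self.addEventListener('message', createMessageHandler((image) => classifier(image.grayscale()), (msg) => self.postMessage(msg))). Keep in mind that postMessage copies data using the structured clone algorithm, which does not preserve class prototypes: a RawImage sent from the main thread would arrive without its methods, so sending the raw pixel data and rebuilding the image inside the worker avoids this.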

We can now use this worker in our App.jsx file by adding the following code to the App component:

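```jsx
import { useEffect, useRef } from 'react';

function App() {
  // Keep a reference to the worker so it persists across re-renders.
  const worker = useRef(null);

  // Set up the worker once, when the App component is first mounted.
  useEffect(() => {
    if (!worker.current) {
      // Create the worker if it does not exist yet.
      worker.current = new Worker(new URL('./worker.js', import.meta.url), {
        type: 'module',
      });
    }

    // Callback for messages posted back from the worker thread.
    const onMessageReceived = (e) => {
      // Handle e.data here (e.g., update the game state with the prediction).
    };

    worker.current.addEventListener('message', onMessageReceived);

    // Remove the listener when the component unmounts.
    return () => worker.current.removeEventListener('message', onMessageReceived);
  }, []);

  return <div>{/* Your game components go here */}</div>;
}

export default App;
```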
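Once the game sends drawings to the worker while the user is still drawing, it helps to avoid queueing more requests than the worker can answer. One simple approach, assumed here rather than taken from the Doodle Dash source, is to keep at most one request in flight and, while the worker is busy, remember only the latest drawing. LatestOnlyScheduler and its send callback are hypothetical names for this sketch.

```javascript
// Keeps at most one inference request in flight. While the worker is busy,
// only the most recent drawing is remembered; older ones are dropped.
class LatestOnlyScheduler {
  constructor(send) {
    this.send = send; // e.g. (image) => worker.current.postMessage({ image })
    this.busy = false;
    this.pending = null;
  }

  // Call whenever the drawing changes.
  request(image) {
    if (this.busy) {
      this.pending = image; // overwrite any older pending drawing
      return;
    }
    this.busy = true;
    this.send(image);
  }

  // Call when the worker posts a result back (e.g. from onMessageReceived).
  onResult() {
    this.busy = false;
    if (this.pending !== null) {
      const next = this.pending;
      this.pending = null;
      this.request(next);
    }
  }
}

const sent = [];
const scheduler = new LatestOnlyScheduler((image) => sent.push(image));
scheduler.request("stroke 1"); // sent immediately
scheduler.request("stroke 2"); // worker busy: queued
scheduler.request("stroke 3"); // replaces "stroke 2"
scheduler.onResult();          // sends "stroke 3"
console.log(sent); // [ 'stroke 1', 'stroke 3' ]
```

Dropping stale drawings is a deliberate choice: only the current state of the canvas matters for the next prediction, so there is no point classifying intermediate strokes the user has already drawn over.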

You can test that everything is working by running the development server (with npm run dev), visiting the local website (usually http://localhost:5173/), and opening the browser console. You should see the output of the model being logged to the console.

```javascript
[{ label: "skateboard", score: 0.9980043172836304 }]
```
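The pipeline returns an array of { label, score } objects, as logged above. Deciding whether the player’s doodle was recognized then comes down to comparing the highest-scoring label with the current prompt. A minimal sketch; isCorrectGuess and its optional minScore threshold are hypothetical, not taken from the Doodle Dash source:

```javascript
// Returns true if the highest-scoring prediction matches the target word.
// output: [{ label, score }, ...] as returned by the classifier.
// minScore: optional confidence threshold (hypothetical, defaults to 0).
function isCorrectGuess(output, target, minScore = 0) {
  if (output.length === 0) return false;
  const top = output.reduce((best, p) => (p.score > best.score ? p : best));
  return top.label === target && top.score >= minScore;
}

const output = [{ label: "skateboard", score: 0.9980043172836304 }];
console.log(isCorrectGuess(output, "skateboard")); // true
console.log(isCorrectGuess(output, "surfboard"));  // false
```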

Woohoo! 🥳 Although the above code is just a small part of the final product, it shows how simple the machine-learning side of it is! The rest is just making it look nice and adding some game logic.

3. Game Design

In this section, I’ll briefly discuss the game design process. As a reminder, you can find the full source code for the project on GitHub, so I won’t go into detail about the code itself.

Taking advantage of real-time performance

One of the main advantages of performing in-browser inference is that we can make predictions in real time (over 60 times a second). In the original Quick, Draw! game, the model only makes a new prediction every couple of seconds. We could do the same in our game, but then we wouldn’t be taking advantage of its real-time performance! So, I decided to redesign the main game loop:

- Instead of six 20-second rounds (where each round corresponds to a new word), our version tasks the player with correctly drawing as many doodles as they can in 60 seconds (one prompt at a time).

- If you come across a word you are unable to draw, you can skip it (but this will cost you 3 seconds of your remaining time).

- In the original game, since the model would make a guess every few seconds, it could slowly cross labels off the list until it eventually guessed correctly. In our version, we instead decrease the model’s scores for the first n incorrect labels, with n increasing over time as the user continues drawing.
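The two rule changes above can be sketched as a few pure functions. Everything here is an assumption for illustration: the rate at which n grows, the choice to scale penalized scores by a fixed factor, and the helper names penaltyCount, applyPenalty and remainingMs are hypothetical, not values or functions from the Doodle Dash source.

```javascript
// All constants below are hypothetical values chosen for this sketch.
const ROUND_MS = 60_000;       // one 60-second game
const SKIP_PENALTY_MS = 3_000; // each skip costs 3 seconds
const MS_PER_PENALTY = 1_000;  // n grows by one per second spent on a prompt
const PENALTY_FACTOR = 0.1;    // penalized scores are scaled by this factor

// Number of incorrect labels to penalize after drawing the current prompt
// for `elapsedMs`.
function penaltyCount(elapsedMs) {
  return Math.floor(elapsedMs / MS_PER_PENALTY);
}

// Scale down the scores of the first `n` incorrect labels (highest score
// first), then re-sort so the best remaining guess comes first.
// Returns a new array; the input is not mutated.
function applyPenalty(predictions, target, n) {
  const sorted = [...predictions].sort((a, b) => b.score - a.score);
  let penalized = 0;
  const adjusted = sorted.map((p) => {
    if (p.label !== target && penalized < n) {
      penalized++;
      return { ...p, score: p.score * PENALTY_FACTOR };
    }
    return p;
  });
  return adjusted.sort((a, b) => b.score - a.score);
}

// Time left in the game, given total elapsed time and the number of skips.
function remainingMs(elapsedMs, skips) {
  return Math.max(0, ROUND_MS - elapsedMs - skips * SKIP_PENALTY_MS);
}

const predictions = [
  { label: "dog", score: 0.5 },
  { label: "cat", score: 0.3 },
  { label: "lion", score: 0.15 },
  { label: "tiger", score: 0.05 },
];

// After 1.5 s on the prompt "cat", n = 1: only "dog" is penalized.
const adjusted = applyPenalty(predictions, "cat", penaltyCount(1_500));
console.log(adjusted[0].label); // cat

// 20 s elapsed with two skips: 60 - 20 - 2 * 3 = 34 s left.
console.log(remainingMs(20_000, 2)); // 34000
```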

Quality of life improvements

The original dataset contains 345 different classes, and since our model is relatively small (~20MB), it is sometimes unable to correctly guess some of the classes. To solve this problem, we removed some words that are either:

- Too similar to other labels (e.g., “barn” vs. “house”)

- Too obscure (e.g., “animal migration”)

- Too difficult to draw in sufficient detail (e.g., “brain”)

- Ambiguous (e.g., “bat”)

After filtering, we were still left with over 300 different classes!
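One way to apply this filtering, assuming the words are simply removed from the pool of prompts the player can be given (the post doesn’t say whether they were also dropped from the training data), is sketched below. The exclusion set only contains the unambiguous examples from the list above; the game’s full list isn’t reproduced here, and buildPromptPool and nextPrompt are hypothetical helpers.

```javascript
// Hypothetical exclusion set, using only the examples named above.
const EXCLUDED = new Set(["animal migration", "brain", "bat"]);

// Keep only labels the player can reasonably be asked to draw.
function buildPromptPool(allLabels, excluded = EXCLUDED) {
  return allLabels.filter((label) => !excluded.has(label));
}

// Pick a random prompt, avoiding an immediate repeat of the previous one.
// `random` is injectable so the choice can be made deterministic in tests.
function nextPrompt(pool, previous, random = Math.random) {
  const candidates = pool.length > 1 ? pool.filter((l) => l !== previous) : pool;
  return candidates[Math.floor(random() * candidates.length)];
}

const labels = ["cat", "bat", "brain", "skateboard", "animal migration"];
const pool = buildPromptPool(labels);
console.log(pool); // [ 'cat', 'skateboard' ]
```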

BONUS: Coming up with the name

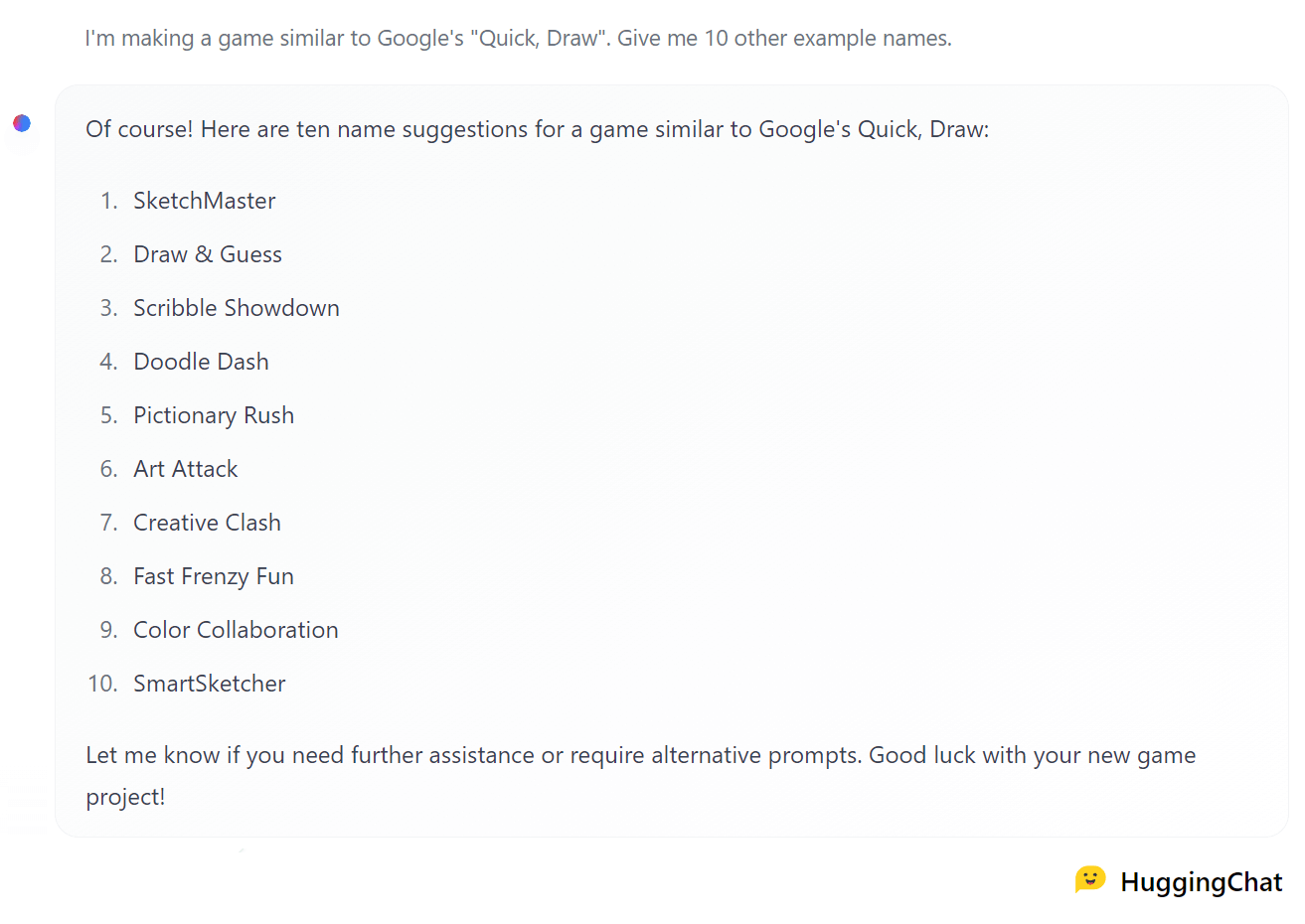

In the spirit of open-source development, I decided to ask Hugging Chat for some game name ideas… and needless to say, it didn’t disappoint!

I liked the alliteration of “Doodle Dash” (suggestion #4), so I decided to go with that. Thanks Hugging Chat! 🤗

I hope you enjoyed building this game with me! If you have any questions or suggestions, you can find me on Twitter, GitHub, or the 🤗 Hub. Also, if you want to improve the game (game modes? power-ups? animations? sound effects?), feel free to fork the project and submit a pull request! I’d love to see what you come up with!

PS: Don’t forget to join the Open Source AI Game Jam! Hopefully this blog post inspires you to build your own web game with Transformers.js! 😉 See you at the Game Jam! 🚀