Stable Diffusion XL was released yesterday and it’s awesome. It can generate large (1024×1024) high-quality images; adherence to prompts has been improved with some new tricks; it can effortlessly produce very dark or very bright images thanks to the latest research on noise schedulers; and it’s open source!

The downside is that the model is much bigger, and therefore slower and more difficult to run on consumer hardware. Using the latest release of the Hugging Face diffusers library, you can run Stable Diffusion XL on CUDA hardware in 16 GB of GPU RAM, making it possible to use it on Colab’s free tier.

The past few months have shown that people are very clearly interested in running ML models locally for a variety of reasons, including privacy, convenience, easier experimentation, or unmetered use. We’ve been working hard at both Apple and Hugging Face to explore this space. We’ve shown how to run Stable Diffusion on Apple Silicon, and how to leverage the latest advancements in Core ML to improve size and performance with 6-bit palettization.

For Stable Diffusion XL we’ve done a number of things:

- Ported the base model to Core ML so you can use it in your native Swift apps.

- Updated Apple’s conversion and inference repo so you can convert the models yourself, including any fine-tunes you’re interested in.

- Updated Hugging Face’s demo app to show how to use the new Core ML Stable Diffusion XL models downloaded from the Hub.

- Explored mixed-bit palettization, an advanced compression technique that achieves important size reductions while minimizing and controlling the quality loss you incur. You can apply the same technique to your own models too!

Everything is open source and available today, so let’s get on with it.


Using SD XL Models from the Hugging Face Hub

As part of this release, we published two different versions of Stable Diffusion XL in Core ML.

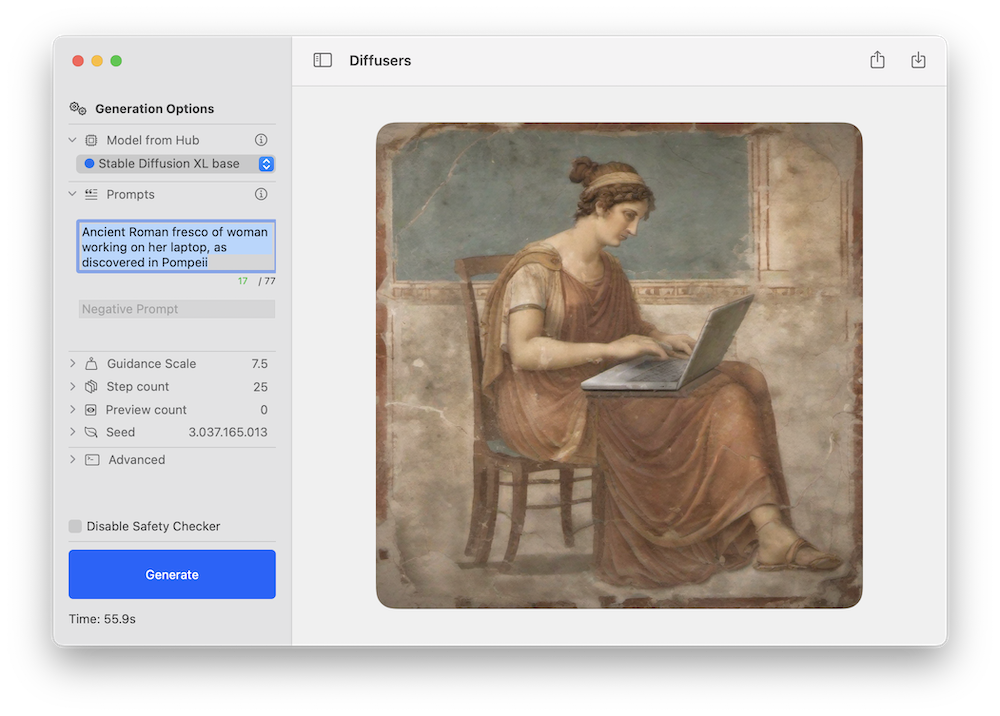

Either model can be tested using Apple’s Swift command-line inference app, or Hugging Face’s demo app. This is an example of the latter using the new Stable Diffusion XL pipeline:

As with previous Stable Diffusion releases, we expect the community to come up with novel fine-tuned versions for different domains, and many of them will be converted to Core ML. You can keep an eye on this filter in the Hub to explore!

Stable Diffusion XL works on Apple Silicon Macs running the public beta of macOS 14. It currently uses the ORIGINAL attention implementation, which is intended for CPU + GPU compute units. Note that the refiner stage has not been ported yet.

For reference, these are the performance figures we achieved on different devices:

| Device | --compute-unit | --attention-implementation | End-to-End Latency (s) | Diffusion Speed (iter/s) |
|---|---|---|---|---|
| MacBook Pro (M1 Max) | CPU_AND_GPU | ORIGINAL | 46 | 0.46 |
| MacBook Pro (M2 Max) | CPU_AND_GPU | ORIGINAL | 37 | 0.57 |
| Mac Studio (M1 Ultra) | CPU_AND_GPU | ORIGINAL | 25 | 0.89 |
| Mac Studio (M2 Ultra) | CPU_AND_GPU | ORIGINAL | 20 | 1.11 |

What’s Mixed-Bit Palettization?

Last month we discussed 6-bit palettization, a post-training quantization method that converts 16-bit weights to just 6 bits per parameter. This achieves an important reduction in model size, but going beyond that is tricky because model quality degrades more and more as the number of bits is decreased.
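
To make the idea concrete, here is a minimal sketch of palettization on a flat list of weights. It assumes a plain 1-D k-means to build the lookup table, which is a stand-in and not Core ML’s actual implementation; the helper names `palettize` and `depalettize` are hypothetical. With an n-bit palette, each weight is replaced by an index into a table of at most 2^n values.

```python
import random


def nearest(palette, w):
    """Index of the palette entry closest to weight w."""
    return min(range(len(palette)), key=lambda c: abs(w - palette[c]))


def palettize(weights, nbits, iterations=10):
    """Build a palette of at most 2**nbits values with 1-D k-means.

    Returns (palette, indices), where indices[i] points at the palette
    entry that stands in for weights[i].
    """
    k = min(2 ** nbits, len(set(weights)))
    lo, hi = min(weights), max(weights)
    # Start with entries spread evenly over the weight range.
    palette = [lo + (hi - lo) * i / max(k - 1, 1) for i in range(k)]
    for _ in range(iterations):
        indices = [nearest(palette, w) for w in weights]
        # Move each entry to the mean of the weights assigned to it.
        for c in range(k):
            members = [w for w, i in zip(weights, indices) if i == c]
            if members:
                palette[c] = sum(members) / len(members)
    # Final assignment against the updated palette.
    indices = [nearest(palette, w) for w in weights]
    return palette, indices


def depalettize(palette, indices):
    """Reconstruct approximate weights from the palette and indices."""
    return [palette[i] for i in indices]


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(500)]  # toy "layer"

errors = {}
for nbits in (2, 6):
    palette, indices = palettize(weights, nbits)
    errors[nbits] = mean_squared_error(weights, depalettize(palette, indices))
    print(f"{nbits}-bit palette: {len(palette)} entries, MSE {errors[nbits]:.6f}")
```

Storing one small index per weight plus a short table is where the size reduction comes from, and the growing reconstruction error at lower bit depths is the quality loss discussed above.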

One option to decrease model size further is to use training-time quantization, which consists of learning the quantization tables while we fine-tune the model. This works great, but you need to run a fine-tuning phase for every model you want to convert.

We explored a different alternative instead: mixed-bit palettization. Instead of using 6 bits per parameter, we examine the model and decide how many quantization bits to use per layer. We make the decision based on how much each layer contributes to the overall quality degradation, which we measure by comparing the PSNR between the quantized model and the original model in float16 mode, for a set of a few inputs. We explore several bit depths, per layer: 1 (!), 2, 4 and 8. If a layer degrades significantly when using, say, 2 bits, we move to 4 and so on. Some layers might be kept in 16-bit mode if they are critical to preserving quality.
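
The quality metric can be sketched as follows. This assumes the common definition of PSNR as 10·log10(peak² / MSE), with the peak taken from the reference signal; the exact variant used in the evaluation scripts may differ, and `psnr` is a hypothetical helper name.

```python
import math


def psnr(reference, test):
    """Peak signal-to-noise ratio in dB between two equal-length sequences."""
    if len(reference) != len(test):
        raise ValueError("inputs must have the same length")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    peak = max(abs(r) for r in reference)
    if peak == 0:
        return -math.inf  # all-zero reference, any error dominates
    return 10 * math.log10(peak ** 2 / mse)


reference = [0.0, 0.5, 1.0, -1.0]           # stands in for float16 outputs
mild = [r + 0.01 for r in reference]        # small quantization error
aggressive = [r + 0.1 for r in reference]   # larger quantization error

print(f"mild:       {psnr(reference, mild):.1f} dB")
print(f"aggressive: {psnr(reference, aggressive):.1f} dB")
```

A higher value means the quantized output is closer to the reference, so a layer whose palettization lowers PSNR sharply is a candidate for more bits.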

Using this method, we can achieve effective quantizations of, for example, 2.8 bits on average, and we measure the impact on degradation for every combination we try. This allows us to be better informed about the best quantization to use for our target quality and size budgets.
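
The “bits on average” figure is a parameter-weighted mean over the layers. A minimal sketch, assuming made-up layer names and parameter counts (they are not taken from the real model) and ignoring the small overhead of storing the palettes themselves:

```python
def average_bits(param_counts, recipe, default_bits=16):
    """Parameter-weighted mean bit depth.

    param_counts maps layer name -> number of parameters.
    recipe maps layer name -> bits; layers missing from the recipe are
    assumed to stay in float16 (default_bits).
    """
    total_params = sum(param_counts.values())
    total_bits = sum(
        count * recipe.get(name, default_bits)
        for name, count in param_counts.items()
    )
    return total_bits / total_params


# Hypothetical layers, for illustration only.
param_counts = {
    "down_block.conv": 4_000_000,
    "mid_block.attn": 2_000_000,
    "up_block.conv": 2_000_000,
}
recipe = {"down_block.conv": 2, "mid_block.attn": 4, "up_block.conv": 8}

avg = average_bits(param_counts, recipe)
print(f"average bits per parameter: {avg:.2f}")
print(f"approximate size vs float16: {avg / 16:.0%}")
```

Because large layers dominate the average, assigning few bits to a big, tolerant layer saves far more than doing the same for a small one.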

To illustrate the method, let’s consider the following quantization “recipes” that we got from one of our evaluation runs (we’ll explain later how they were generated):

    {
      "model_version": "stabilityai/stable-diffusion-xl-base-1.0",
      "baselines": {
        "original": 82.2,
        "linear_8bit": 66.025,
        "recipe_6.55_bit_mixedpalette": 79.9,
        "recipe_4.50_bit_mixedpalette": 75.8,
        "recipe_3.41_bit_mixedpalette": 71.7
      }
    }

What this tells us is that the original model quality, as measured by PSNR in float16, is about 82 dB. Performing a naïve 8-bit linear quantization drops it to 66 dB. But then we have a recipe that compresses to 6.55 bits per parameter, on average, while keeping PSNR at 80 dB. The second and third recipes further reduce the model size, while still maintaining a PSNR higher than that of the 8-bit linear quantization.
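
The same reading can be done programmatically. The sketch below embeds the baseline figures quoted above (written as strict JSON) and assumes the average bit depth can be parsed from the recipe names; it is only an illustration of how to compare recipes, not part of the provided scripts.

```python
import json

report = json.loads("""
{
  "model_version": "stabilityai/stable-diffusion-xl-base-1.0",
  "baselines": {
    "original": 82.2,
    "linear_8bit": 66.025,
    "recipe_6.55_bit_mixedpalette": 79.9,
    "recipe_4.50_bit_mixedpalette": 75.8,
    "recipe_3.41_bit_mixedpalette": 71.7
  }
}
""")

baselines = report["baselines"]
original = baselines["original"]
linear_8bit = baselines["linear_8bit"]

# Collect (average bits, PSNR) for every mixed-bit recipe.
recipes = {
    float(name.split("_")[1]): value
    for name, value in baselines.items()
    if name.startswith("recipe_")
}

for bits, value in sorted(recipes.items(), reverse=True):
    print(
        f"{bits:.2f} bits: {value} dB, "
        f"{original - value:.1f} dB below the original, "
        f"{value - linear_8bit:+.1f} dB vs linear 8-bit"
    )
```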

For visual examples, these are the results for the prompt “a high quality photo of a surfing dog”, running each one of the three recipes with the same seed:

Some initial conclusions:

- In our opinion, all the images have good quality in terms of how realistic they look. The 6.55 and 4.50 versions are close to the 16-bit version in this respect.

- The same seed produces an equivalent composition, but will not preserve the same details. Dog breeds may be different, for example.

- Adherence to the prompt may degrade as we increase compression. In this example, the aggressive 3.41 version loses the board. PSNR only compares how much pixels differ overall, but doesn’t care about the subjects in the images. You need to examine results and assess them for your use case.

This technique is great for Stable Diffusion XL because we can keep about the same UNet size even though the number of parameters tripled with respect to the previous version. But it’s not exclusive to it! You can apply the method to any Stable Diffusion Core ML model.

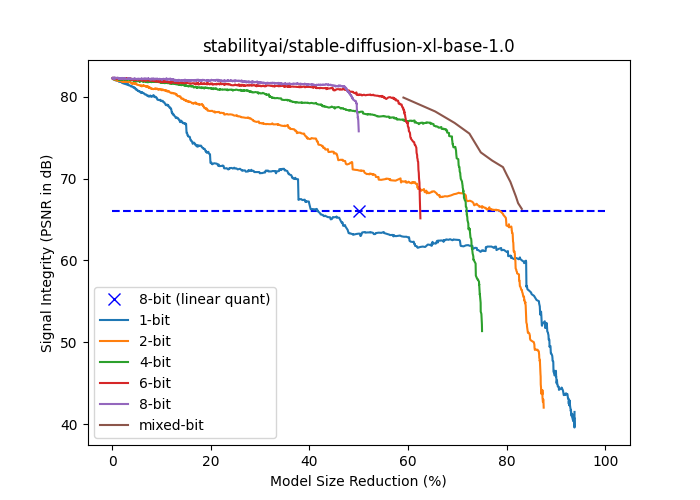

How are Mixed-Bit Recipes Created?

The following plot shows the signal strength (PSNR in dB) versus model size reduction (% of float16 size) for stabilityai/stable-diffusion-xl-base-1.0. The {1,2,4,6,8}-bit curves are generated by progressively palettizing more layers using a palette with a fixed number of bits. The layers were ordered in ascending order of their isolated impact on end-to-end signal strength, so the cumulative compression’s impact is delayed as much as possible. The mixed-bit curve is based on falling back to a higher number of bits as soon as a layer’s isolated impact on end-to-end signal integrity drops below a threshold. Note that all curves based on palettization outperform linear 8-bit quantization at the same model size, except for 1-bit.

Mixed-bit palettization runs in two phases: evaluation and application.

The goal of the evaluation phase is to find points in the mixed-bit curve (the brown one above all the others in the figure) so we can choose our desired quality-vs-size tradeoff. As mentioned in the previous section, we iterate through the layers and select the lowest bit depths that yield results above a given PSNR threshold. We repeat the process for various thresholds to get different quantization strategies. The result of the process is thus a set of quantization recipes, where each recipe is just a JSON dictionary detailing the number of bits to use for each layer in the model. Layers with few parameters are ignored and kept in float16 for simplicity.
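
The selection loop described above can be sketched as follows. This assumes the end-to-end PSNR for each layer and candidate bit depth has already been measured; the layer names and dB values below are invented purely for illustration, and `build_recipe` is a hypothetical helper rather than the API of the provided scripts.

```python
CANDIDATE_BITS = (1, 2, 4, 8)  # bit depths explored per layer, lowest first
FLOAT16_BITS = 16


def build_recipe(layer_psnr, threshold_db):
    """Pick, for every layer, the lowest bit depth that meets the threshold.

    layer_psnr maps layer name -> {nbits: end-to-end PSNR in dB when only
    that layer is palettized with nbits}. Layers that never reach the
    threshold are kept in float16.
    """
    recipe = {}
    for layer, psnr_by_bits in layer_psnr.items():
        recipe[layer] = FLOAT16_BITS
        for nbits in CANDIDATE_BITS:
            if psnr_by_bits.get(nbits, float("-inf")) >= threshold_db:
                recipe[layer] = nbits
                break
    return recipe


# Made-up measurements for three hypothetical layers.
layer_psnr = {
    "layer_a": {1: 55.0, 2: 68.0, 4: 77.0, 8: 81.0},
    "layer_b": {1: 72.0, 2: 78.0, 4: 80.5, 8: 81.5},
    "layer_c": {1: 40.0, 2: 52.0, 4: 61.0, 8: 69.0},
}

# One recipe per threshold: stricter thresholds keep more bits.
recipes_by_threshold = {
    threshold: build_recipe(layer_psnr, threshold)
    for threshold in (70.0, 75.0, 80.0)
}
for threshold, recipe in recipes_by_threshold.items():
    print(f"threshold {threshold} dB -> {recipe}")
```

Each resulting dictionary plays the role of one recipe: a mapping from layer to bit depth that the application phase can walk through.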

The application phase simply goes over the recipe and applies palettization with the number of bits specified in the JSON structure.

Evaluation is a lengthy process and requires a GPU (mps or cuda), as we have to run inference multiple times. Once it’s done, recipe application can be performed in a few minutes.

We provide scripts for each one of these phases:

Converting Fine-Tuned Models

If you’ve previously converted Stable Diffusion models to Core ML, the process for XL using the command line converter is very similar. There’s a new flag to indicate whether the model belongs to the XL family, and you have to use --attention-implementation ORIGINAL if that’s the case.
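
As a rough illustration, the sketch below assembles such a conversion command and prints it without running it. Only --attention-implementation ORIGINAL comes from this post; the module name, the --xl-version flag and the remaining flags are assumptions based on our recollection of Apple’s conversion repo, and the output directory is a placeholder. Please check the repo’s README or the converter’s help output before relying on any of them.

```python
import shlex

command = [
    "python", "-m", "python_coreml_stable_diffusion.torch2coreml",  # assumed module name
    "--convert-unet",            # assumed flag
    "--convert-vae-decoder",     # assumed flag
    "--convert-text-encoder",    # assumed flag
    "--xl-version",              # assumed name of the new XL flag
    "--model-version", "stabilityai/stable-diffusion-xl-base-1.0",
    "--attention-implementation", "ORIGINAL",  # required for XL, per this post
    "-o", "output-dir",          # placeholder output directory
]

# Print a copy-pasteable shell command; nothing is executed here.
print(shlex.join(command))

# To actually run the conversion once the flags are verified:
# import subprocess
# subprocess.run(command, check=True)
```

For a fine-tuned model, the value passed after --model-version would be replaced with that model’s identifier.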

For an introduction to the process, check the instructions in the repo or one of our previous blog posts, and make sure you use the flags above.

Running Mixed-Bit Palettization

After converting Stable Diffusion or Stable Diffusion XL models to Core ML, you can optionally apply mixed-bit palettization using the scripts mentioned above.

Because the evaluation process is slow, we have prepared recipes for the most popular models:

You can download and apply them locally to experiment.

In addition, we applied the three best recipes from the Stable Diffusion XL evaluation to the Core ML version of the UNet, and published them here. Feel free to play with them and see how they work for you!

Finally, as mentioned in the introduction, we created a complete Stable Diffusion XL Core ML pipeline that uses a 4.5-bit recipe.