AudioLDM 2 was proposed in AudioLDM 2: Learning Holistic Audio Generation with Self-supervised Pretraining by Haohe Liu et al. AudioLDM 2 takes a text prompt as input and predicts the corresponding audio. It can generate realistic sound effects, human speech and music.

While the generated audio is of high quality, running inference with the original implementation is very slow: a 10 second audio sample takes upwards of 30 seconds to generate. This is due to a combination of factors, including a deep multi-stage modelling approach, large checkpoint sizes, and un-optimised code.

In this blog post, we showcase how to use AudioLDM 2 in the Hugging Face 🧨 Diffusers library, exploring a range of code optimisations such as half-precision, flash attention, and compilation, and model optimisations such as scheduler choice and negative prompting, to reduce the inference time by over 10 times, with minimal degradation in quality of the output audio. The blog post is also accompanied by a more streamlined Colab notebook that contains all the code but fewer explanations.

Read to the end to find out how to generate a 10 second audio sample in just 1 second!

Model overview

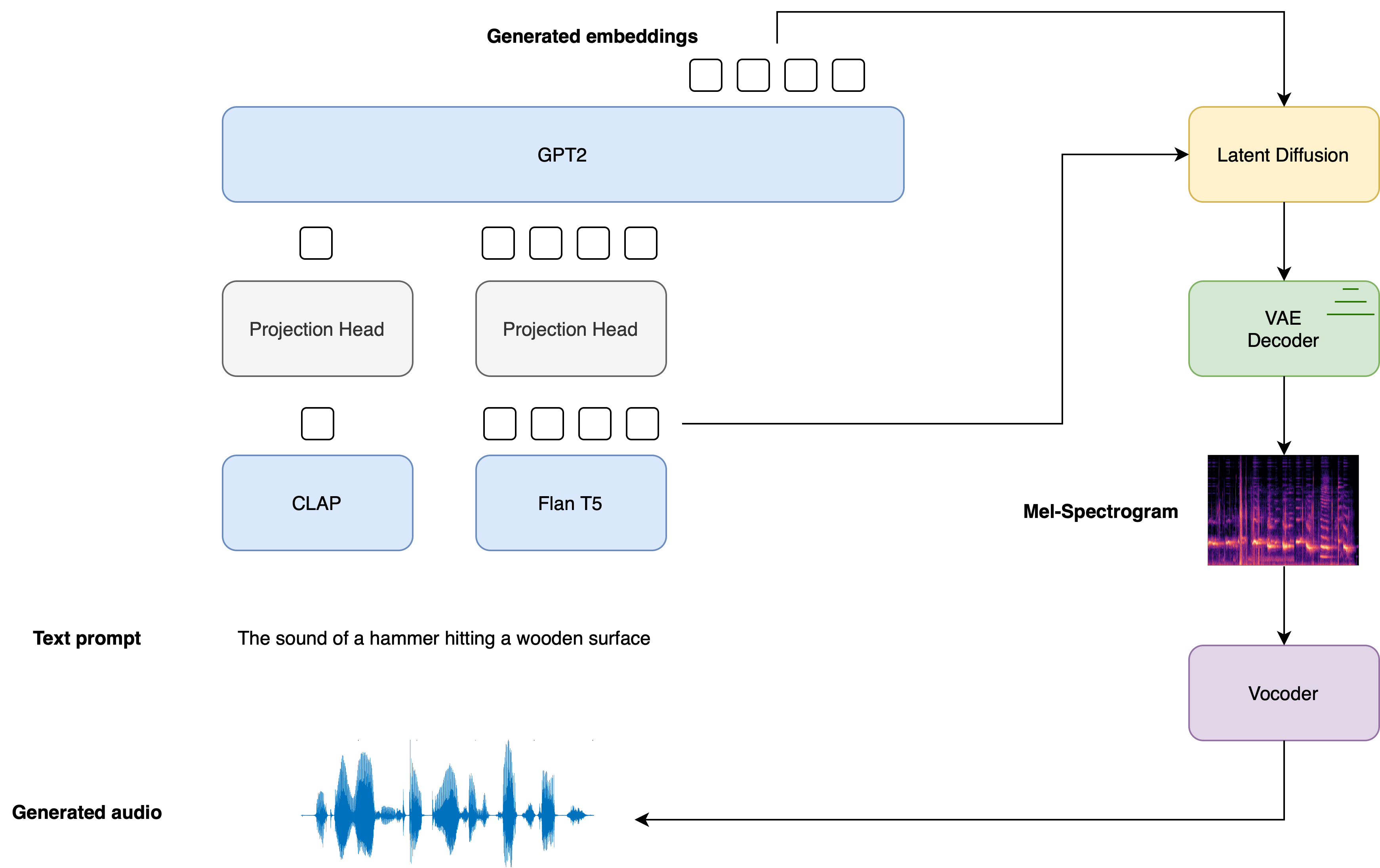

Inspired by Stable Diffusion, AudioLDM 2 is a text-to-audio latent diffusion model (LDM) that learns continuous audio representations from text embeddings.

The overall generation process is summarised as follows:

- Given a text input, two text encoder models are used to compute the text embeddings: the text-branch of CLAP, and the text-encoder of Flan-T5. The CLAP text embeddings are trained to be aligned with the embeddings of the corresponding audio sample, whereas the Flan-T5 embeddings give a better representation of the semantics of the text.

- These text embeddings are projected to a shared embedding space through individual linear projections. In the diffusers implementation, these projections are defined by the AudioLDM2ProjectionModel.

- A GPT2 language model (LM) is used to auto-regressively generate a sequence of new embedding vectors, conditional on the projected CLAP and Flan-T5 embeddings.

- The generated embedding vectors and Flan-T5 text embeddings are used as cross-attention conditioning in the LDM, which de-noises a random latent via a reverse diffusion process. The LDM is run in the reverse diffusion process for a fixed number of inference steps, where the initial latent variable is drawn from a normal distribution. The UNet of the LDM is unique in the sense that it takes two sets of cross-attention embeddings, from the GPT2 language model and from Flan-T5, as opposed to one cross-attention conditioning as in most other LDMs.

- The final de-noised latents are passed to the VAE decoder to recover the Mel spectrogram.

- The Mel spectrogram is passed to the vocoder to obtain the output audio waveform.

The diagram above shows how a text input is passed through the text conditioning models, with the two prompt embeddings used as cross-conditioning in the LDM.

For full details on how the AudioLDM 2 model is trained, the reader is referred to the AudioLDM 2 paper.
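The generation steps listed above can be written compactly as follows. The symbols are shorthand introduced here for illustration and should be read as assumptions rather than fixed notation: $x$ is the text input, $\mathbf{E}_1, \mathbf{E}_2$ the CLAP and Flan-T5 embeddings, $\mathbf{P}_1, \mathbf{P}_2$ their projections, $\tilde{\mathbf{E}}_{1:N}$ the $N$ embeddings generated by GPT2, $\mathbf{z}_t$ the latent after $t$ of $T$ de-noising steps, $\mathbf{S}$ the Mel spectrogram and $\mathbf{y}$ the waveform.

```latex
\begin{aligned}
\mathbf{E}_1 &= \text{CLAP}(x), \qquad \mathbf{E}_2 = \text{T5}(x) \\
\mathbf{P}_1 &= \mathbf{W}_{\text{CLAP}} \mathbf{E}_1, \qquad \mathbf{P}_2 = \mathbf{W}_{\text{T5}} \mathbf{E}_2 \\
\tilde{\mathbf{E}}_i &= \text{GPT2}\left(\mathbf{P}_1, \mathbf{P}_2, \tilde{\mathbf{E}}_{1:i-1}\right), \qquad i = 1, \dots, N \\
\mathbf{z}_0 &\sim \mathcal{N}(\mathbf{0}, \mathbf{I}) \\
\mathbf{z}_t &= \text{LDM}\left(\mathbf{z}_{t-1} \mid \tilde{\mathbf{E}}_{1:N}, \mathbf{E}_2\right), \qquad t = 1, \dots, T \\
\mathbf{S} &= \text{VAE}_{\text{dec}}(\mathbf{z}_T) \\
\mathbf{y} &= \text{Vocoder}(\mathbf{S})
\end{aligned}
```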

Hugging Face 🧨 Diffusers provides an end-to-end inference pipeline class AudioLDM2Pipeline that wraps this multi-stage generation process into a single callable object, enabling you to generate audio samples from text in just a few lines of code.

AudioLDM 2 comes in three variants. Two of these checkpoints are applicable to the general task of text-to-audio generation. The third checkpoint is trained exclusively on text-to-music generation. See the table below for details on the three official checkpoints, which can all be found on the Hugging Face Hub:

Now that we have covered a high-level overview of how the AudioLDM 2 generation process works, let’s put this theory into practice!

Load the pipeline

For the purposes of this tutorial, we'll initialise the pipeline with the pre-trained weights from the base checkpoint, cvssp/audioldm2. We can load the entirety of the pipeline using the .from_pretrained method, which will instantiate the pipeline and load the pre-trained weights:

from diffusers import AudioLDM2Pipeline

model_id = "cvssp/audioldm2"

pipe = AudioLDM2Pipeline.from_pretrained(model_id)

Output:

Loading pipeline components...: 100%|███████████████████████████████████████████| 11/11 [00:01<00:00, 7.62it/s]

The pipeline can be moved to the GPU in much the same way as a standard PyTorch nn module:

pipe.to("cuda");

Great! We'll define a Generator and set a seed for reproducibility. This will allow us to tweak our prompts and observe the effect that they have on the generations by fixing the starting latents in the LDM model:

import torch

generator = torch.Generator("cuda").manual_seed(0)

Now we're ready to perform our first generation! We'll use the same running example throughout this notebook, where we'll condition the audio generations on a fixed text prompt and use the same seed throughout. The audio_length_in_s argument controls the length of the generated audio. It defaults to the audio length that the LDM was trained on (10.24 seconds):

prompt = "The sound of Brazilian samba drums with waves gently crashing in the background"

audio = pipe(prompt, audio_length_in_s=10.24, generator=generator).audios[0]

Output:

100%|███████████████████████████████████████████| 200/200 [00:13<00:00, 15.27it/s]

Cool! That run took about 13 seconds to generate. Let's have a listen to the output audio:

from IPython.display import Audio

Audio(audio, rate=16000)

Sounds much like our text prompt! The quality is good, but still has artefacts of background noise. We can provide the pipeline with a negative prompt to discourage the pipeline from generating certain features. In this case, we'll pass a negative prompt that discourages the model from generating low quality audio in the outputs. We'll omit the audio_length_in_s argument and leave it to take its default value:

negative_prompt = "Low quality, average quality."

audio = pipe(prompt, negative_prompt=negative_prompt, generator=generator.manual_seed(0)).audios[0]

Output:

100%|███████████████████████████████████████████| 200/200 [00:12<00:00, 16.50it/s]

The inference time is unchanged when using a negative prompt; we simply replace the unconditional input to the LDM with the negative input. That means any gains we get in audio quality we get for free.
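To see why the negative prompt adds no cost, it helps to recall how classifier-free guidance combines two noise predictions at every step. The following is a minimal pure-Python sketch, assuming the standard guidance formula; the function name, the toy numbers and the guidance scale value are illustrative assumptions, not code taken from the pipeline.

```python
def guided_noise(pred_uncond, pred_text, guidance_scale):
    """Classifier-free guidance: move the prediction away from the
    unconditional (or negative-prompt) branch, towards the text branch."""
    return [u + guidance_scale * (t - u) for u, t in zip(pred_uncond, pred_text)]


# Toy per-element noise predictions (hypothetical numbers).
pred_text = [0.8, -0.2, 0.5]     # conditioned on the prompt
pred_empty = [0.6, -0.1, 0.1]    # conditioned on the empty prompt
pred_negative = [0.4, 0.0, 0.3]  # conditioned on the negative prompt

# In both cases two predictions are combined per step; the negative prompt
# only changes what the second branch is conditioned on.
without_negative = guided_noise(pred_empty, pred_text, guidance_scale=3.5)
with_negative = guided_noise(pred_negative, pred_text, guidance_scale=3.5)
```

Since the number of forward passes per step is the same in both cases, the run time is expected to match, which is consistent with the two progress bars above.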

Let's take a listen to the resulting audio:

Audio(audio, rate=16000)

There's definitely an improvement in the overall audio quality: there are fewer noise artefacts and the audio generally sounds sharper.
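Outside a notebook, the generated waveform can be written to disk rather than played inline. Below is a minimal sketch using only the Python standard library. A synthetic sine tone stands in for the pipeline output so that the snippet runs without a GPU; the helper name `save_wav` and the output path are our own choices.

```python
import math
import os
import struct
import tempfile
import wave

SAMPLE_RATE = 16000  # same rate as passed to Audio(...) above


def save_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write float samples in [-1, 1] to a mono 16-bit PCM WAV file."""
    # Clip to the valid range, then scale to signed 16-bit integers.
    pcm = [int(max(-1.0, min(1.0, float(s))) * 32767) for s in samples]
    with wave.open(path, "wb") as f:
        f.setnchannels(1)
        f.setsampwidth(2)  # 2 bytes = 16 bits per sample
        f.setframerate(sample_rate)
        f.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))


# Stand-in for `audio`: one second of a 440 Hz tone (hypothetical data).
fake_audio = [0.5 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]

out_path = os.path.join(tempfile.gettempdir(), "audioldm2_sample.wav")
save_wav(out_path, fake_audio)
```

With the real pipeline output, the call would be `save_wav(out_path, audio)`, since the helper accepts any sequence of floats.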

Note that in practice, we typically see a reduction in inference time going from our first generation to our second. This is due to a CUDA "warm-up" that occurs the first time we run the computation. The second generation is a better benchmark for our actual inference time.
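One way to keep the warm-up out of a measurement is to discard the first call before timing. The helper below is a small sketch of that idea; `benchmark` is a hypothetical name, and a trivial CPU workload stands in for the pipeline call so the snippet runs anywhere.

```python
import time


def benchmark(fn, warmup=1, runs=3):
    """Return the mean wall-clock time of `fn` over `runs` calls,
    after discarding `warmup` initial calls."""
    for _ in range(warmup):
        fn()  # warm-up calls are executed but not timed
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return sum(timings) / len(timings)


# Stand-in workload; in the notebook this would be a lambda wrapping pipe(...).
mean_seconds = benchmark(lambda: sum(range(100_000)))
```

When timing raw CUDA kernels directly, a call to `torch.cuda.synchronize()` before reading the clock is usually needed as well, because GPU work is launched asynchronously.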

Optimisation 1: Flash Attention

PyTorch 2.0 and upwards includes an optimised and memory-efficient implementation of the attention operation through the torch.nn.functional.scaled_dot_product_attention (SDPA) function. This function automatically applies several in-built optimisations depending on the inputs, and runs faster and more memory-efficiently than the vanilla attention implementation. Overall, the SDPA function gives similar behaviour to flash attention, as proposed in the paper Fast and Memory-Efficient Exact Attention with IO-Awareness by Dao et al.

These optimisations will be enabled by default in Diffusers if PyTorch 2.0 is installed and if torch.nn.functional.scaled_dot_product_attention is available. To use it, just install torch 2.0 or higher as per the official instructions, and then use the pipeline as is 🚀

audio = pipe(prompt, negative_prompt=negative_prompt, generator=generator.manual_seed(0)).audios[0]

Output:

100%|███████████████████████████████████████████| 200/200 [00:12<00:00, 16.60it/s]

For more details on using SDPA in diffusers, refer to the corresponding documentation.
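For reference, the operation that SDPA computes is standard scaled dot-product attention. With queries $Q$, keys $K$, values $V$ and key dimension $d_k$:

```latex
\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

A vanilla implementation materialises the full $Q K^{\top}$ score matrix, whose size grows with the square of the sequence length. Flash-attention style kernels compute the same result in tiles without storing that matrix, which is where the memory saving comes from.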

Optimisation 2: Half-Precision

By default, the AudioLDM2Pipeline loads the model weights in float32 (full) precision. All the model computations are also performed in float32 precision. For inference, we can safely convert the model weights and computations to float16 (half) precision, which will give us an improvement to inference time and GPU memory, with an imperceptible change to generation quality.

We can load the weights in float16 precision by passing the torch_dtype argument to .from_pretrained:

pipe = AudioLDM2Pipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe.to("cuda");

Let's run generation in float16 precision and listen to the audio outputs:

audio = pipe(prompt, negative_prompt=negative_prompt, generator=generator.manual_seed(0)).audios[0]

Audio(audio, rate=16000)

Output:

100%|███████████████████████████████████████████| 200/200 [00:09<00:00, 20.94it/s]

The audio quality is largely unchanged from the full precision generation, with an inference speed-up of about 2 seconds.

In our experience, we've not seen any significant audio degradation using diffusers pipelines with float16 precision, but consistently reap a substantial inference speed-up. Thus, we recommend using float16 precision by default.
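The memory side of this saving is simple arithmetic: float16 stores each parameter in 2 bytes instead of 4. The rough sketch below applies this to the 350M-parameter UNet of the base checkpoint (the figure quoted in the memory section of this post). It counts weights only, ignoring activations and the other sub-models, and the helper name is our own.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_memory_gb(num_params, dtype):
    """Memory needed to hold the weights alone, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


unet_params = 350_000_000  # base-checkpoint UNet size quoted in this post

fp32_gb = weight_memory_gb(unet_params, "float32")  # about 1.4 GB
fp16_gb = weight_memory_gb(unet_params, "float16")  # about 0.7 GB
```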

Optimisation 3: Torch Compile

To get an additional speed-up, we can use the new torch.compile feature. Since the UNet of the pipeline is usually the most computationally expensive, we wrap the unet with torch.compile, leaving the rest of the sub-models (text encoders and VAE) as is:

pipe.unet = torch.compile(pipe.unet, mode="reduce-overhead", fullgraph=True)

After wrapping the UNet with torch.compile, the first inference step we run is typically going to be slow, due to the overhead of compiling the forward pass of the UNet. Let's run the pipeline forward with the compilation step to get this longer run out of the way. Note that the first inference step might take up to 2 minutes to compile, so be patient!

audio = pipe(prompt, negative_prompt=negative_prompt, generator=generator.manual_seed(0)).audios[0]

Output:

100%|███████████████████████████████████████████| 200/200 [01:23<00:00, 2.39it/s]

Great! Now that the UNet is compiled, we can run the full diffusion process and reap the benefits of faster inference:

audio = pipe(prompt, negative_prompt=negative_prompt, generator=generator.manual_seed(0)).audios[0]

Output:

100%|███████████████████████████████████████████| 200/200 [00:04<00:00, 48.98it/s]

Only 4 seconds to generate! In practice, you will only have to compile the UNet once, and then get faster inference for all successive generations. This means that the time taken to compile the model is amortised by the gains in subsequent inference time. For more information and options regarding torch.compile, refer to the torch compile docs.
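The amortisation argument can be made concrete with the timings logged above: roughly 9 seconds per generation in float16 without compilation, 83 seconds for the first compiled run, and 4 seconds for each run after that. The sketch below is a rough estimate only; the helper name is our own, and the figures are read off the progress bars, so they cover the de-noising loop rather than the whole pipeline.

```python
import math

eager_s = 9            # float16 run without compilation
first_compiled_s = 83  # first run after torch.compile (01:23 in the log)
compiled_s = 4         # subsequent compiled runs


def break_even_runs(eager, first_compiled, compiled):
    """Smallest number of generations n for which compiling is faster overall.

    Solves eager * n > first_compiled + compiled * (n - 1) for integer n.
    """
    n = (first_compiled - compiled) / (eager - compiled)
    return math.floor(n) + 1


runs_needed = break_even_runs(eager_s, first_compiled_s, compiled_s)  # 16
```

Under these numbers, compilation pays for itself from the 16th generation onwards, so it is most worthwhile for batch jobs or long-running services.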

Optimisation 4: Scheduler

Another option is to reduce the number of inference steps. Choosing a more efficient scheduler can help decrease the number of steps without sacrificing the output audio quality. You can find which schedulers are compatible with the AudioLDM2Pipeline by accessing the scheduler.compatibles attribute:

pipe.scheduler.compatibles

Output:

[diffusers.schedulers.scheduling_lms_discrete.LMSDiscreteScheduler,

diffusers.schedulers.scheduling_k_dpm_2_discrete.KDPM2DiscreteScheduler,

diffusers.schedulers.scheduling_dpmsolver_multistep.DPMSolverMultistepScheduler,

diffusers.schedulers.scheduling_unipc_multistep.UniPCMultistepScheduler,

diffusers.schedulers.scheduling_euler_discrete.EulerDiscreteScheduler,

diffusers.schedulers.scheduling_pndm.PNDMScheduler,

diffusers.schedulers.scheduling_dpmsolver_singlestep.DPMSolverSinglestepScheduler,

diffusers.schedulers.scheduling_heun_discrete.HeunDiscreteScheduler,

diffusers.schedulers.scheduling_ddpm.DDPMScheduler,

diffusers.schedulers.scheduling_deis_multistep.DEISMultistepScheduler,

diffusers.utils.dummy_torch_and_torchsde_objects.DPMSolverSDEScheduler,

diffusers.schedulers.scheduling_ddim.DDIMScheduler,

diffusers.schedulers.scheduling_k_dpm_2_ancestral_discrete.KDPM2AncestralDiscreteScheduler,

diffusers.schedulers.scheduling_euler_ancestral_discrete.EulerAncestralDiscreteScheduler]

Alright! We've got a long list of schedulers to choose from 📝. By default, AudioLDM 2 uses the DDIMScheduler, and requires 200 inference steps to get good quality audio generations. However, more performant schedulers, like DPMSolverMultistepScheduler, require only 20-25 inference steps to achieve similar results.

Let's see how we can switch the AudioLDM 2 scheduler from DDIM to DPM Multistep. We'll use the ConfigMixin.from_config() method to load a DPMSolverMultistepScheduler from the configuration of our original DDIMScheduler:

from diffusers import DPMSolverMultistepScheduler

pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)

Let's set the number of inference steps to 20 and re-run the generation with the new scheduler. Since the shape of the LDM latents is unchanged, we don't have to repeat the compilation step:

audio = pipe(prompt, negative_prompt=negative_prompt, num_inference_steps=20, generator=generator.manual_seed(0)).audios[0]

Output:

100%|███████████████████████████████████████████| 20/20 [00:00<00:00, 49.14it/s]

That took less than 1 second to generate the audio! Let's have a listen to the resulting generation:

Audio(audio, rate=16000)

More or less the same as our original audio sample, but only a fraction of the generation time! 🧨 Diffusers pipelines are designed to be composable, allowing you to swap out schedulers and other components for more performant counterparts with ease.
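Comparing the two compiled progress bars shows where this gain comes from: the per-step throughput is essentially the same, so the step count drives the run time. The back-of-the-envelope sketch below uses the logged rates (the helper name is ours; it covers the de-noising loop only, not the text encoders, GPT2 or vocoder).

```python
def denoising_seconds(num_steps, steps_per_second):
    """Estimated duration of the de-noising loop."""
    return num_steps / steps_per_second


ddim_s = denoising_seconds(200, 48.98)  # DDIM, 200 steps, compiled UNet
dpm_s = denoising_seconds(20, 49.14)    # DPM Multistep, 20 steps, compiled UNet
speed_up = ddim_s / dpm_s               # close to the 10x reduction in steps
```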

What about memory?

The length of the audio sample we want to generate dictates the width of the latent variables we de-noise in the LDM. Since the memory of the attention layers in the UNet scales with sequence length (width) squared, generating very long audio samples may lead to out-of-memory errors. Our batch size also governs our memory usage, controlling the number of samples that we generate.

We've already mentioned that loading the model in float16 half precision gives strong memory savings. Using PyTorch 2.0 SDPA also gives a memory improvement, but this might not be sufficient for extremely large sequence lengths.
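To get a feel for how quickly this grows, the sketch below takes the quadratic scaling stated above at face value and compares a long, batched request against the default 10.24 second single-sample run. It is an upper-bound style estimate for a naive attention implementation, not a measurement; the function name and the example request (150 seconds, batch of 4) are chosen for illustration.

```python
def relative_attention_memory(length_s, batch, ref_length_s=10.24, ref_batch=1):
    """Attention memory relative to a reference run, assuming it grows with
    the square of the latent width and linearly with the batch size."""
    return (length_s / ref_length_s) ** 2 * (batch / ref_batch)


# A 150 second request with 4 samples, relative to one 10.24 second sample.
ratio = relative_attention_memory(150, 4)  # several hundred times larger
```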

Let's try generating an audio sample 2.5 minutes (150 seconds) in duration. We'll also generate 4 candidate audios by setting num_waveforms_per_prompt=4. Once num_waveforms_per_prompt>1, automatic scoring is performed between the generated audios and the text prompt: the audios and text prompts are embedded in the CLAP audio-text embedding space, and then ranked based on their cosine similarity scores. We can access the 'best' waveform as that in position 0.
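The ranking step described above can be illustrated with a toy example. The sketch below ranks candidates by cosine similarity to a text embedding in pure Python; the three-dimensional vectors are made-up stand-ins for CLAP embeddings, and the function names are hypothetical rather than part of the pipeline.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(text_embedding, audio_embeddings):
    """Return candidate indices sorted from most to least similar to the text."""
    scores = [cosine_similarity(text_embedding, e) for e in audio_embeddings]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


text_emb = [1.0, 0.0, 1.0]
audio_embs = [
    [0.0, 1.0, 0.0],    # orthogonal to the text embedding
    [1.0, 0.1, 0.9],    # nearly parallel, so the best match
    [1.0, 1.0, 0.0],    # partially aligned
    [-1.0, 0.0, -1.0],  # opposite direction, so the worst match
]

order = rank_by_similarity(text_emb, audio_embs)  # [1, 2, 0, 3]
best_index = order[0]
```

Re-ordering the candidates by `order` puts the best match in position 0, which mirrors how the pipeline output is indexed.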

Since we've changed the width of the latent variables in the UNet, we'll have to perform another torch compilation step with the new latent variable shapes. In the interest of time, we'll re-load the pipeline without torch compile, such that we're not hit with a lengthy compilation step first up:

pipe = AudioLDM2Pipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe.to("cuda")

audio = pipe(prompt, negative_prompt=negative_prompt, num_waveforms_per_prompt=4, audio_length_in_s=150, num_inference_steps=20, generator=generator.manual_seed(0)).audios[0]

Output:

```
OutOfMemoryError                          Traceback (most recent call last)
in
      3 pipe.to("cuda")
      4
----> 5 audio = pipe(prompt, negative_prompt=negative_prompt, num_waveforms_per_prompt=4, audio_length_in_s=150, num_inference_steps=20, generator=generator.manual_seed(0)).audios[0]

23 frames
/usr/local/lib/python3.10/dist-packages/torch/nn/modules/linear.py in forward(self, input)
    112
    113     def forward(self, input: Tensor) -> Tensor:
--> 114         return F.linear(input, self.weight, self.bias)
    115
    116     def extra_repr(self) -> str:

OutOfMemoryError: CUDA out of memory. Tried to allocate 1.95 GiB. GPU 0 has a total capacity of 14.75 GiB of which 1.66 GiB is free. Process 414660 has 13.09 GiB memory in use. Of the allocated memory 10.09 GiB is allocated by PyTorch, and 1.92 GiB is reserved by PyTorch but unallocated. If reserved but unallocated memory is large try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
```

Unless you have a GPU with high RAM, the code above probably returned an OOM error. While the AudioLDM 2 pipeline involves several components, only the model being used has to be on the GPU at any one time. The remainder of the modules can be offloaded to the CPU. This technique, called *CPU offload*, can reduce memory usage, with a very low penalty to inference time.

We can enable CPU offload on our pipeline with the function [enable_model_cpu_offload()](https://huggingface.co/docs/diffusers/main/en/api/pipelines/audioldm2#diffusers.AudioLDM2Pipeline.enable_model_cpu_offload):

```python
pipe.enable_model_cpu_offload()
```

Running generation with CPU offload is then the same as before:

audio = pipe(prompt, negative_prompt=negative_prompt, num_waveforms_per_prompt=4, audio_length_in_s=150, num_inference_steps=20, generator=generator.manual_seed(0)).audios[0]

Output:

100%|███████████████████████████████████████████| 20/20 [00:36<00:00, 1.82s/it]

And with that, we can generate four samples, each of 150 seconds in duration, all in one call to the pipeline! Using the large AudioLDM 2 checkpoint will result in higher overall memory usage than the base checkpoint, since the UNet is over twice the size (750M parameters compared to 350M), so this memory saving trick is particularly beneficial here.

Conclusion

In this blog post, we showcased four optimisation methods that are available out of the box with 🧨 Diffusers, taking the generation time of AudioLDM 2 from 14 seconds down to less than 1 second. We also highlighted how to employ memory saving tricks, such as half-precision and CPU offload, to reduce peak memory usage for long audio samples or large checkpoint sizes.

Blog post by Sanchit Gandhi. Many thanks to Vaibhav Srivastav and Sayak Paul for their constructive comments. Spectrogram image source: Getting to Know the Mel Spectrogram. Waveform image source: Aalto Speech Processing.