Deploying large language models (LLMs) and other generative AI models can be challenging due to their computational requirements and latency needs. To provide useful recommendations to companies looking to deploy Llama 2 on Amazon SageMaker with the Hugging Face LLM Inference Container, we created a comprehensive benchmark analyzing over 60 different deployment configurations for Llama 2.

In this benchmark, we evaluated various sizes of Llama 2 on a range of Amazon EC2 instance types with different load levels. Our goal was to measure latency (ms per token) and throughput (tokens per second) to find the optimal deployment strategies for three common use cases:

- Most Cost-Effective Deployment: For users looking for good performance at low cost

- Best Latency Deployment: Minimizing latency for real-time services

- Best Throughput Deployment: Maximizing tokens processed per second

To keep this benchmark fair, transparent, and reproducible, we share all of the assets, code, and data we used and collected.

We hope to enable customers to use LLMs and Llama 2 efficiently and optimally for their use case. Before we get into the benchmark and data, let's look at the technologies and methods we used.

What’s the Hugging Face LLM Inference Container?

Hugging Face LLM DLC is a purpose-built Inference Container to easily deploy LLMs in a secure and managed environment. The DLC is powered by Text Generation Inference (TGI), an open-source, purpose-built solution for deploying and serving LLMs. TGI enables high-performance text generation using Tensor Parallelism and dynamic batching for the most popular open-source LLMs, including StarCoder, BLOOM, GPT-NeoX, Falcon, Llama, and T5. VMware, IBM, Grammarly, Open-Assistant, Uber, Scale AI, and many more already use Text Generation Inference.

What’s Llama 2?

Llama 2 is a family of LLMs from Meta, trained on 2 trillion tokens. Llama 2 comes in three sizes (7B, 13B, and 70B parameters) and introduces key improvements over Llama (1), such as a longer context length, commercial licensing, and optimized chat abilities through reinforcement learning. If you want to learn more about Llama 2, check out this blog post.

What’s GPTQ?

GPTQ is a post-training quantization method to compress LLMs, like GPT. GPTQ compresses GPT (decoder) models by reducing the number of bits needed to store each weight in the model, from 32 bits down to just 3-4 bits. This means the model takes up much less memory and can run on less hardware, e.g. a single GPU for 13B Llama 2 models. GPTQ analyzes each layer of the model separately and approximates the weights to preserve the overall accuracy. If you want to learn more and see how to use it, check out Optimize open LLMs using GPTQ and Hugging Face Optimum.
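
To make the single-GPU claim concrete, here is a rough back-of-the-envelope sketch of weight memory at different bit widths. It is an illustration only: it counts weights alone and ignores the KV cache, activations, and GPTQ metadata such as scales and zero points, so real memory usage is higher. Using 16-bit as the unquantized serving baseline is an assumption made here, and `weight_memory_gb` is a hypothetical helper name.

```python
# Rough weight-only memory estimate. Ignores KV cache, activations, and
# GPTQ metadata (scales, zero points), so real usage is higher.
A10G_MEMORY_GB = 24  # memory of a single NVIDIA A10G GPU


def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


# Assumption: 16-bit weights as the unquantized baseline, 4-bit for GPTQ.
for bits in (16, 4):
    size = weight_memory_gb(13, bits)
    verdict = "fits" if size < A10G_MEMORY_GB else "does not fit"
    print(f"Llama 2 13B at {bits}-bit: {size:.1f} GB of weights, {verdict} on one A10G")
```

Under these assumptions, the 4-bit weights of the 13B model leave most of a 24 GB A10G free for the KV cache, while the 16-bit weights alone already exceed it.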

Benchmark

To benchmark the real-world performance of Llama 2, we tested 3 model sizes (7B, 13B, 70B parameters) on four different instance types with four different load levels, resulting in 60 different configurations:

- Models: We evaluated all currently available model sizes, including 7B, 13B, and 70B.

- Concurrent Requests: We tested configurations with 1, 5, 10, and 20 concurrent requests to determine the performance in different usage scenarios.

- Instance Types: We evaluated different GPU instances, including g5.2xlarge, g5.12xlarge, and g5.48xlarge powered by NVIDIA A10G GPUs, and p4d.24xlarge powered by NVIDIA A100 40GB GPUs.

- Quantization: We compared performance with and without quantization. We used GPTQ 4-bit as a quantization technique.
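
As a sketch of how one such configuration might translate into container settings, the snippet below builds the environment variables for a single model, instance type, and quantization choice. It is not the benchmark's actual code. The variable names `HF_MODEL_ID`, `SM_NUM_GPUS`, and `HF_MODEL_QUANTIZE` are the ones the container is generally configured with, but treat them as assumptions and verify them against the container version you use. The model ID and the helper `tgi_environment` are illustrative.

```python
# GPUs available on each instance type listed above.
GPUS_PER_INSTANCE = {
    "ml.g5.2xlarge": 1,
    "ml.g5.12xlarge": 4,
    "ml.g5.48xlarge": 8,
    "ml.p4d.24xlarge": 8,
}


def tgi_environment(model_id: str, instance_type: str, gptq: bool = False) -> dict:
    """Build container environment variables (all values must be strings)."""
    env = {
        "HF_MODEL_ID": model_id,
        # Shard the model across every GPU of the instance (tensor parallelism).
        "SM_NUM_GPUS": str(GPUS_PER_INSTANCE[instance_type]),
    }
    if gptq:
        # Assumed setting for loading GPTQ-quantized weights.
        env["HF_MODEL_QUANTIZE"] = "gptq"
    return env


# Illustrative model ID; substitute the checkpoint you actually deploy.
print(tgi_environment("TheBloke/Llama-2-13B-chat-GPTQ", "ml.g5.2xlarge", gptq=True))
```

A dictionary like this would typically be passed as the `env` argument when creating the model object in the SageMaker Python SDK.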

As metrics, we used Throughput and Latency defined as:

- Throughput (tokens/sec): Number of tokens generated per second.

- Latency (ms/token): Time it takes to generate a single token.
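
The two metrics can be sketched as follows. This is a minimal illustration under stated assumptions, not the benchmark's measurement code: each request is assumed to be recorded as a pair of generated tokens and elapsed seconds, latency is reported as the median across requests, and throughput is computed over the wall-clock time of the whole run. The function name `summarize` and the sample numbers are made up.

```python
from statistics import median


def summarize(requests, wall_clock_seconds):
    """requests: list of (generated_tokens, request_seconds), one per request."""
    # Latency: time per generated token for each request, reported as the median.
    per_token_ms = [1000 * seconds / tokens for tokens, seconds in requests]
    # Throughput: tokens generated by all requests divided by elapsed wall time.
    total_tokens = sum(tokens for tokens, _ in requests)
    return {
        "latency_ms_per_token": median(per_token_ms),
        "throughput_tokens_per_sec": total_tokens / wall_clock_seconds,
    }


# Made-up sample: five concurrent requests of 100 tokens each,
# all finishing within 4 seconds of wall-clock time.
sample = [(100, 3.6), (100, 3.8), (100, 4.0), (100, 3.9), (100, 3.7)]
print(summarize(sample, wall_clock_seconds=4.0))
```

Because the requests overlap in time, throughput grows with concurrency even though each individual request sees a higher per-token latency.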

We used these metrics to evaluate the performance of Llama 2 across the different setups and to understand the benefits and tradeoffs. If you want to run the benchmark yourself, we created a GitHub repository.

You can find the full data of the benchmark in the Amazon SageMaker Benchmark: TGI 1.0.3 Llama 2 sheet. The raw data is available on GitHub.

If you are interested in all of the details, we recommend diving deep into the provided raw data.

Recommendations & Insights

Based on the benchmark, we provide specific recommendations for optimal LLM deployment depending on your priorities between cost, throughput, and latency for all Llama 2 model sizes.

Note: The recommendations are based on the configurations we tested. In the future, other environments or hardware offerings, such as Inferentia2, may be even more cost-efficient.

Most Cost-Effective Deployment

The most cost-effective configuration focuses on the right balance between performance (latency and throughput) and cost. Maximizing the output per dollar spent is the goal. We looked at the performance at 5 concurrent requests. We can see that GPTQ offers the best cost-effectiveness, allowing customers to deploy Llama 2 13B on a single GPU.

| Model | Quantization | Instance | Concurrent requests | Median latency (ms/token) | Throughput (tokens/sec) | On-demand cost ($/h) in us-west-2 | Time to generate 1M tokens (minutes) | Cost to generate 1M tokens ($) |
|---|---|---|---|---|---|---|---|---|
| Llama 2 7B | GPTQ | ml.g5.2xlarge | 5 | 34.245736 | 120.0941633 | $1.52 | 138.78 | $3.50 |
| Llama 2 13B | GPTQ | ml.g5.2xlarge | 5 | 56.237484 | 71.70560104 | $1.52 | 232.43 | $5.87 |
| Llama 2 70B | GPTQ | ml.g5.12xlarge | 5 | 138.347928 | 33.33372399 | $7.09 | 499.99 | $59.08 |
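
The last two columns follow from throughput and hourly price. The sketch below shows that arithmetic, assuming the instance runs at the measured throughput for the whole time. The function name `cost_per_million_tokens` is made up for illustration. Results for other rows can differ by a cent or two from the table, presumably because the listed hourly prices are rounded.

```python
def cost_per_million_tokens(throughput_tokens_per_sec, hourly_cost_usd):
    """Return (minutes, dollars) needed to generate 1M tokens."""
    minutes = 1_000_000 / throughput_tokens_per_sec / 60
    dollars = minutes / 60 * hourly_cost_usd
    return minutes, dollars


# Llama 2 70B GPTQ row: 33.33372399 tokens/sec on ml.g5.12xlarge at $7.09/h.
minutes, dollars = cost_per_million_tokens(33.33372399, 7.09)
print(f"{minutes:.2f} minutes, ${dollars:.2f}")
```

For the 70B row this reproduces the 499.99 minutes and $59.08 shown in the table.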

Best Throughput Deployment

The Best Throughput configuration maximizes the number of tokens generated per second. This can come at the cost of higher per-token latency, since more requests are processed simultaneously. We looked at the highest tokens-per-second performance at 20 concurrent requests, with some consideration for the cost of the instance. The highest throughput was for Llama 2 13B on the ml.p4d.24xlarge instance with 668 tokens/sec.

| Model | Quantization | Instance | Concurrent requests | Median latency (ms/token) | Throughput (tokens/sec) | On-demand cost ($/h) in us-west-2 | Time to generate 1M tokens (minutes) | Cost to generate 1M tokens ($) |
|---|---|---|---|---|---|---|---|---|
| Llama 2 7B | None | ml.g5.12xlarge | 20 | 43.99524 | 449.9423027 | $7.09 | 33.59 | $3.97 |
| Llama 2 13B | None | ml.p4d.24xlarge | 20 | 67.4027465 | 668.0204881 | $37.69 | 24.95 | $15.67 |
| Llama 2 70B | None | ml.p4d.24xlarge | 20 | 59.798591 | 321.5369158 | $37.69 | 51.83 | $32.56 |

Best Latency Deployment

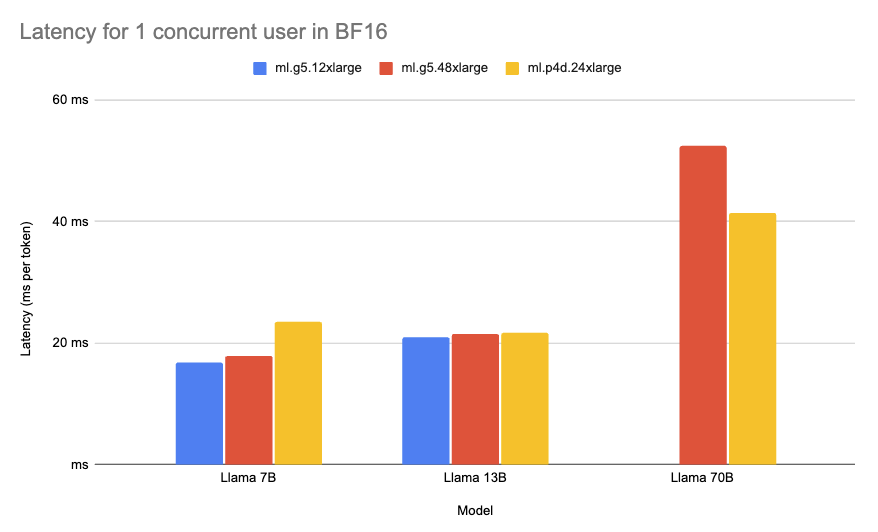

The Best Latency configuration minimizes the time it takes to generate one token. Low latency is important for real-time use cases and for providing a good experience to the customer, e.g. chat applications. We looked at the lowest median milliseconds per token at 1 concurrent request. The lowest overall latency was for Llama 2 7B on the ml.g5.12xlarge instance with 16.8 ms/token.

| Model | Quantization | Instance | Concurrent requests | Median latency (ms/token) | Throughput (tokens/sec) | On-demand cost ($/h) in us-west-2 | Time to generate 1M tokens (minutes) | Cost to generate 1M tokens ($) |
|---|---|---|---|---|---|---|---|---|
| Llama 2 7B | None | ml.g5.12xlarge | 1 | 16.812526 | 61.45733054 | $7.09 | 271.19 | $32.05 |
| Llama 2 13B | None | ml.g5.12xlarge | 1 | 21.002715 | 47.15736567 | $7.09 | 353.43 | $41.76 |
| Llama 2 70B | None | ml.p4d.24xlarge | 1 | 41.348543 | 24.5142928 | $37.69 | 679.88 | $427.05 |

Conclusions

In this benchmark, we tested 60 configurations of Llama 2 on Amazon SageMaker. For cost-effective deployments, we found that 13B Llama 2 with GPTQ on ml.g5.2xlarge delivers 71 tokens/sec at an hourly cost of $1.52. For maximum throughput, 13B Llama 2 reached 668 tokens/sec on ml.p4d.24xlarge at $15.67 per 1M tokens. And for minimum latency, 7B Llama 2 achieved 16.8 ms per token on ml.g5.12xlarge.

We hope the benchmark will help companies deploy Llama 2 optimally based on their needs. If you want to get started deploying Llama 2 on Amazon SageMaker, check out the Introducing the Hugging Face LLM Inference Container for Amazon SageMaker and Deploy Llama 2 7B/13B/70B on Amazon SageMaker blog posts.

Thanks for reading! If you have any questions, feel free to contact me on Twitter or LinkedIn.