In the ever-evolving landscape of programming and software development, the quest for efficiency and productivity has led to remarkable innovations. One such innovation is the emergence of code generation models such as Codex, StarCoder and Code Llama. These models have demonstrated remarkable capabilities in generating human-like code snippets, showing immense potential as coding assistants.

However, while these pre-trained models can perform impressively across a range of tasks, there’s an exciting possibility lying just beyond the horizon: the ability to tailor a code generation model to your specific needs. Think of personalized coding assistants that could be leveraged at an enterprise scale.

In this blog post we show how we created HugCoder 🤗, a code LLM fine-tuned on the code contents from the public repositories of the huggingface GitHub organization. We’ll discuss our data collection workflow, our training experiments, and some interesting results. This will enable you to create your own personal copilot based on your proprietary codebase. We’ll leave you with a couple of further extensions of this project for experimentation.

Let’s begin 🚀

Data Collection Workflow

Our desired dataset is conceptually simple; we structured it like so:

| Repository Name | Filepath within the Repository | File Contents |
|---|---|---|
| — | — | — |

Scraping code contents from GitHub is straightforward with the Python GitHub API. However, depending on the number of repositories and the number of code files within a repository, one might easily run into API rate-limiting issues.

To prevent such problems, we decided to clone all the public repositories locally and extract the contents from them instead of going through the API. We used Python’s multiprocessing module to download all repos in parallel, as shown in this download script.
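The parallel cloning step can be sketched as follows. This is a minimal illustration, not the download script linked above: it assumes `git` is on the PATH, uses shallow clones (`--depth 1`) to save bandwidth, and the helper names and the `hf_public_repos` output directory are hypothetical.

```python
import subprocess
from multiprocessing import Pool
from pathlib import Path

GITHUB_ORG = "huggingface"


def clone_command(repo_name: str, dest_root: str, org: str = GITHUB_ORG) -> list:
    """Build a shallow `git clone` command for one public repository."""
    url = f"https://github.com/{org}/{repo_name}.git"
    return ["git", "clone", "--depth", "1", url, str(Path(dest_root) / repo_name)]


def clone_repo(job: tuple) -> tuple:
    """Clone a single repository; returns (repo_name, git exit code)."""
    repo_name, dest_root = job
    result = subprocess.run(clone_command(repo_name, dest_root), capture_output=True)
    return repo_name, result.returncode


def clone_all(repo_names: list, dest_root: str = "hf_public_repos", processes: int = 8) -> dict:
    """Clone many repositories in parallel worker processes."""
    Path(dest_root).mkdir(parents=True, exist_ok=True)
    with Pool(processes=processes) as pool:
        return dict(pool.map(clone_repo, [(name, dest_root) for name in repo_names]))
```

Call `clone_all(["peft", "accelerate"])` from inside an `if __name__ == "__main__":` guard, which multiprocessing requires on platforms that spawn worker processes. It returns a mapping from repository name to git’s exit code, where 0 means the clone succeeded.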

A repository can often contain non-code files such as images, presentations and other assets. We’re not interested in scraping them, so we created a list of extensions to filter them out. To parse code files other than Jupyter Notebooks, we simply used the “utf-8” encoding. For notebooks, we only considered the code cells.

We also excluded all file paths that were not directly related to code. These include: .git, __pycache__, and xcodeproj.
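A minimal sketch of these filtering rules, assuming a small, hypothetical extension blocklist (a real list would be longer). Directory names are matched against whole path components so that, for example, `.github` is not mistaken for `.git`. Notebooks are reduced to their code cells by reading the notebook JSON directly.

```python
import json
from pathlib import Path

# Hypothetical, abbreviated blocklist of non-code file extensions.
ANTI_EXTENSIONS = {".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".pdf", ".pptx", ".zip"}
# Path components that are not directly related to code.
EXCLUDED_DIRS = {".git", "__pycache__"}


def keep_file(path: Path) -> bool:
    """Return True if the file should be included in the dataset."""
    for part in path.parts:
        if part in EXCLUDED_DIRS or part.endswith(".xcodeproj"):
            return False
    return path.suffix.lower() not in ANTI_EXTENSIONS


def notebook_code(raw_json: str) -> str:
    """Concatenate the source of all code cells in a Jupyter notebook."""
    notebook = json.loads(raw_json)
    sources = []
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") != "code":
            continue
        src = cell.get("source", "")
        # nbformat stores a cell's source either as one string or as a list of lines
        sources.append("".join(src) if isinstance(src, list) else src)
    return "\n".join(sources)


def read_code(path: Path) -> str:
    """Read a file as UTF-8, keeping only the code cells for notebooks."""
    text = path.read_text(encoding="utf-8")
    return notebook_code(text) if path.suffix == ".ipynb" else text
```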

To keep the serialization of this content relatively memory-friendly, we used chunking and the feather format. Refer to this script for the full implementation.
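The chunking idea can be sketched like this. Rows are grouped into fixed-size batches, and each batch is written to its own feather file, so only one batch of file contents is held in a DataFrame at a time. The column names, chunk size and file naming are assumptions for illustration. `DataFrame.to_feather` needs `pyarrow` installed, and pandas is imported inside the writer so the chunking helper also works on its own.

```python
from itertools import islice
from pathlib import Path
from typing import Iterable, Iterator, List

COLUMNS = ["repo_id", "file_path", "content"]  # assumed column names


def chunked(rows: Iterable, chunk_size: int) -> Iterator[List]:
    """Yield successive lists of at most `chunk_size` items from any iterable."""
    iterator = iter(rows)
    while batch := list(islice(iterator, chunk_size)):
        yield batch


def write_feather_chunks(rows: Iterable[dict], out_dir: str = "hf_stack", chunk_size: int = 10_000) -> List[Path]:
    """Write rows (dicts keyed by COLUMNS) to numbered feather files, one per chunk."""
    import pandas as pd  # feather support additionally requires pyarrow

    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, batch in enumerate(chunked(rows, chunk_size)):
        path = out / f"data_{index:04d}.ftr"
        pd.DataFrame(batch, columns=COLUMNS).to_feather(path)
        paths.append(path)
    return paths
```

Passing a generator as `rows` keeps memory bounded, because files are only read as each chunk is filled.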

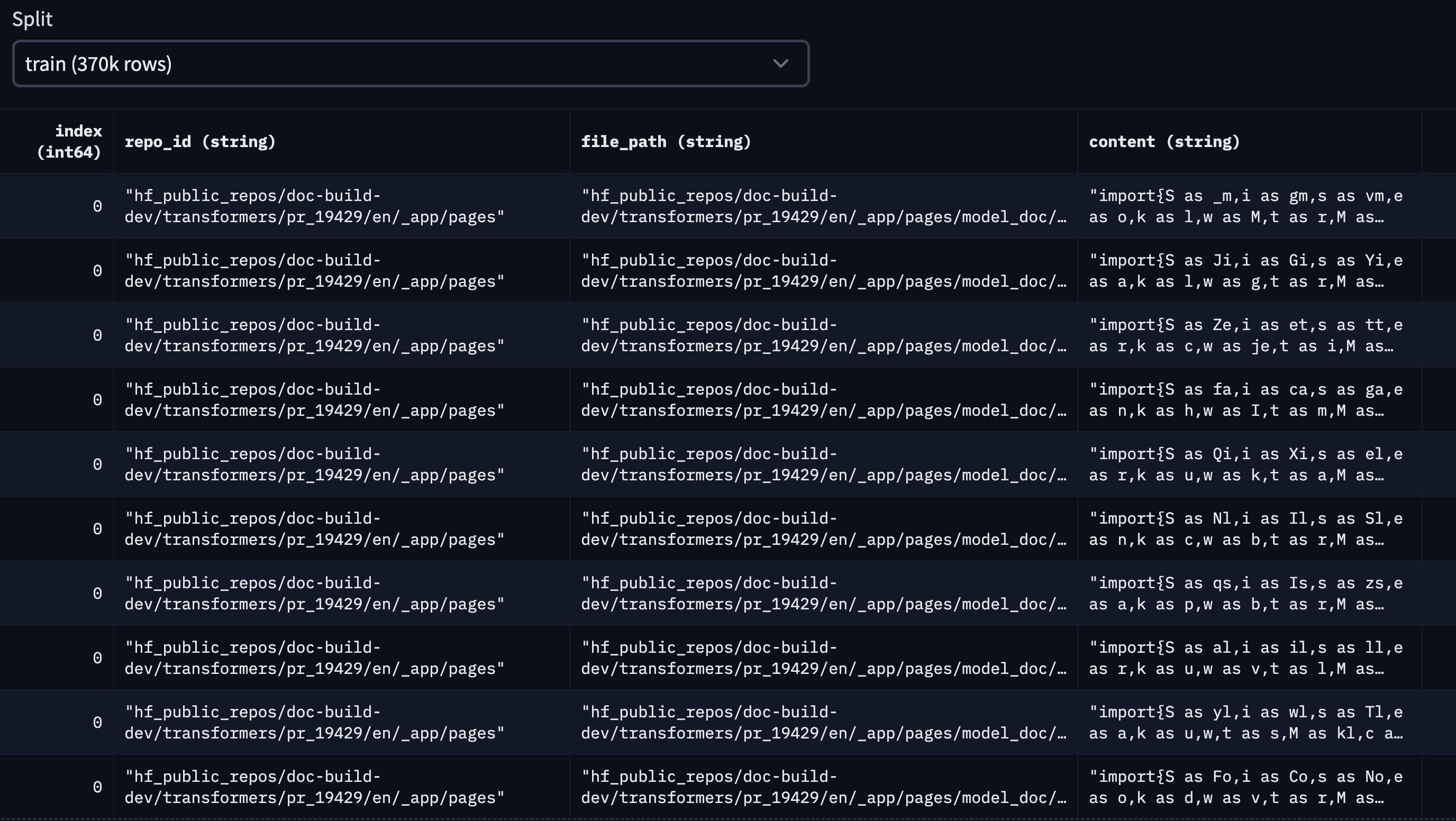

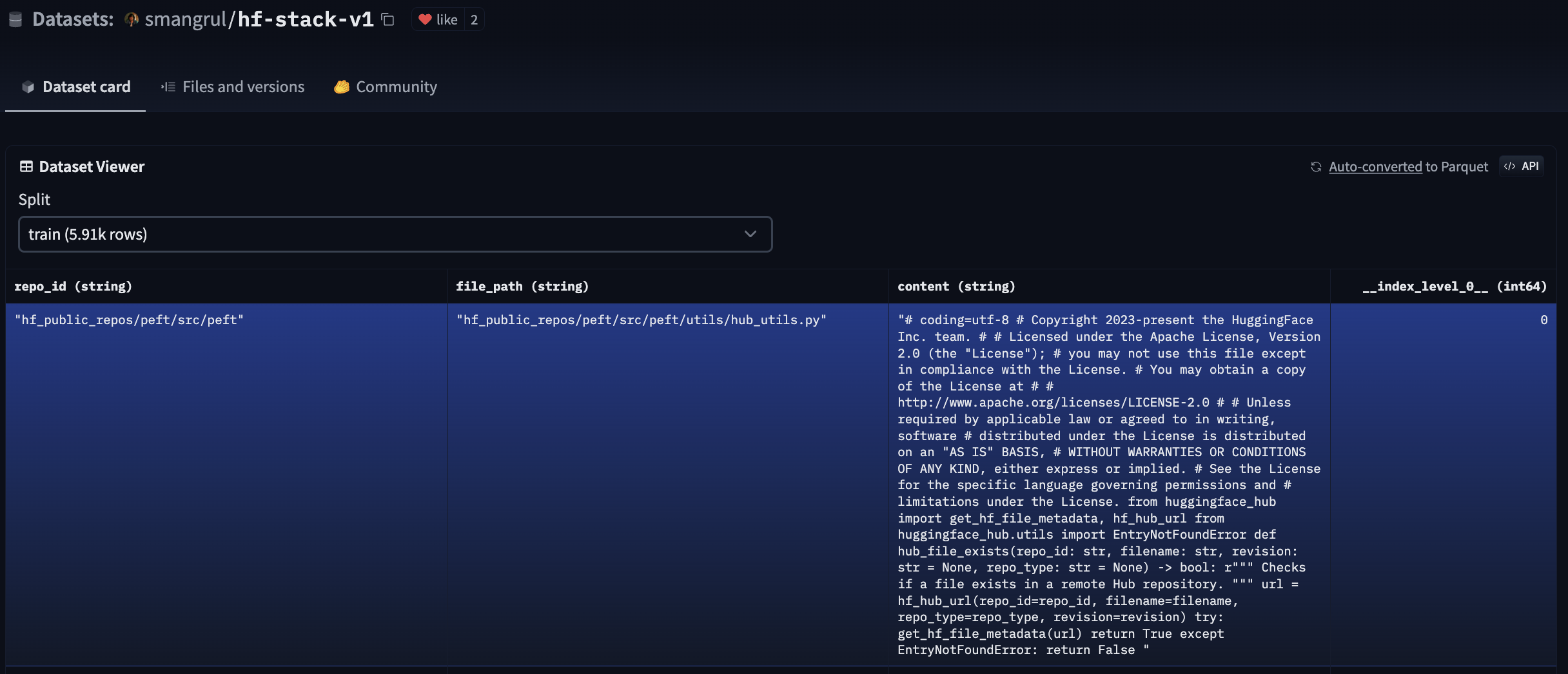

The final dataset is available on the Hub, and it looks like this:

For this blog, we considered the top 10 Hugging Face public repositories, based on stargazers. They are the following:

['transformers', 'pytorch-image-models', 'datasets', 'diffusers', 'peft', 'tokenizers', 'accelerate', 'text-generation-inference', 'chat-ui', 'deep-rl-class']

This is the code we used to generate this dataset, and this is the dataset on the Hub. Here’s a snapshot of what it looks like:

To reduce the project complexity, we didn’t consider deduplication of the dataset. If you are interested in applying deduplication techniques for a production application, this blog post is an excellent resource on the topic in the context of code LLMs.
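For reference, the simplest variant, exact deduplication, can be sketched by hashing each file’s contents and keeping only the first occurrence. This is an illustration only and was not applied to our dataset. Near-duplicate detection (for example with MinHash) catches considerably more redundancy in code corpora. The `content` key matches the dataset’s text column.

```python
import hashlib
from typing import Iterable, List


def exact_dedup(rows: Iterable[dict], key: str = "content") -> List[dict]:
    """Keep the first row for each distinct (whitespace-stripped) file content."""
    seen = set()
    unique = []
    for row in rows:
        # Strip surrounding whitespace so trivially different copies collide
        digest = hashlib.sha256(row[key].strip().encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(row)
    return unique
```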

Finetuning your own Personal Co-Pilot

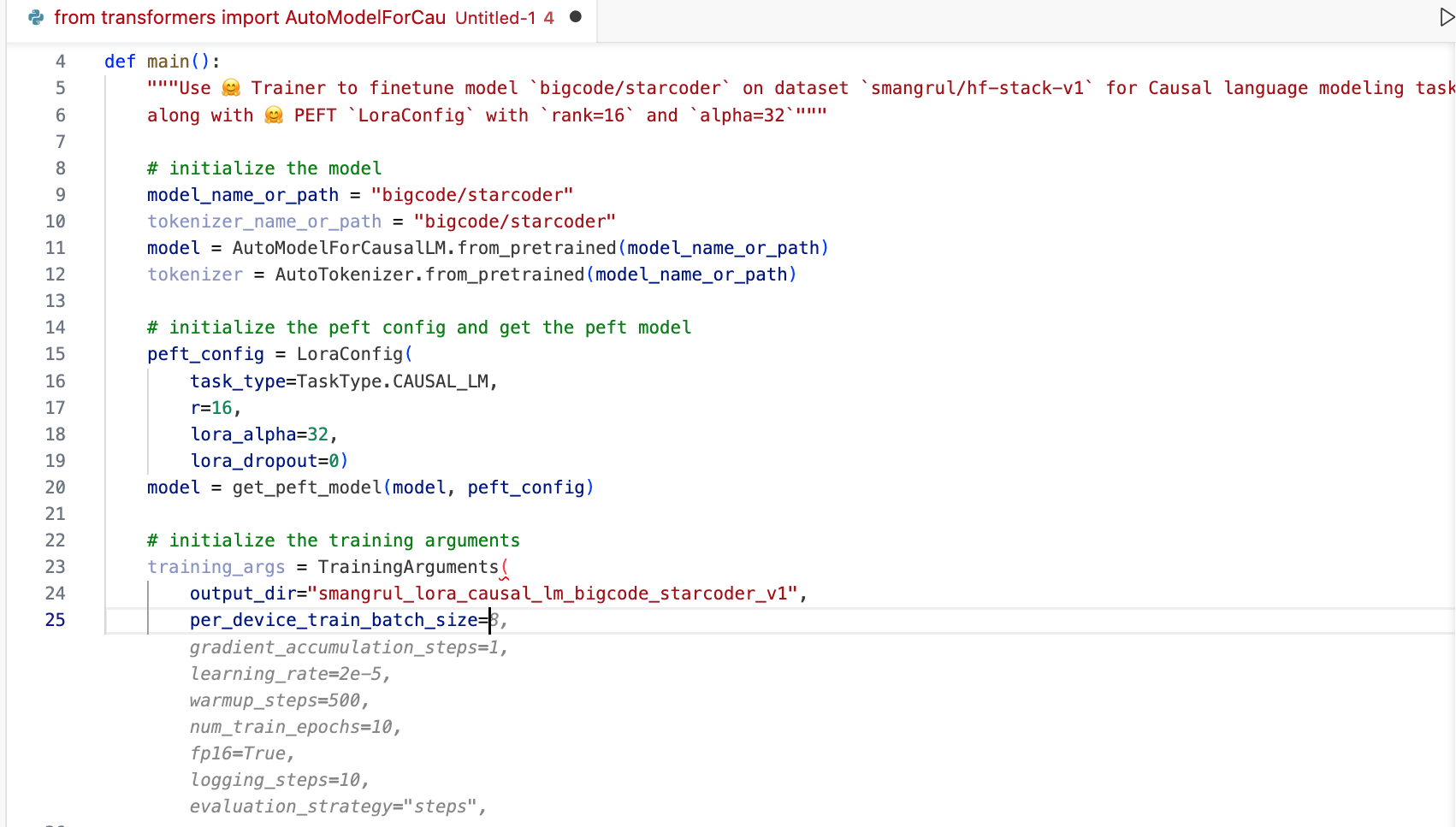

In this section, we show how to fine-tune the following models: bigcode/starcoder (15.5B params), bigcode/starcoderbase-1b (1B params), and Deci/DeciCoder-1b (1B params). We’ll use a single A100 40GB GPU in a Colab notebook with 🤗 PEFT (Parameter-Efficient Fine-Tuning) for all the experiments. Additionally, we’ll show how to fully finetune bigcode/starcoder (15.5B params) on a machine with 8 A100 80GB GPUs using 🤗 Accelerate’s FSDP integration. The training objective is fill in the middle (FIM), wherein parts of a training sequence are moved to the end, and the reordered sequence is predicted auto-regressively.
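To make the FIM objective concrete, here is a character-level sketch of the transformation. It assumes StarCoder’s `<fim_prefix>`, `<fim_suffix>` and `<fim_middle>` sentinel tokens. The parameters are named after the `--fim_rate` flag (how often a sample is transformed at all) and the `--fim_spm_rate` flag (how often the suffix-prefix-middle ordering is used instead of prefix-suffix-middle) in the training command below. Production implementations usually operate on token IDs rather than raw strings, so treat this as an illustration of the idea rather than the training code.

```python
import random

# StarCoder's FIM sentinel tokens
FIM_PREFIX, FIM_SUFFIX, FIM_MIDDLE = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"


def fim_transform(text: str, rng: random.Random, fim_rate: float = 0.5, fim_spm_rate: float = 0.5) -> str:
    """Randomly rewrite a training sample into FIM format (PSM or SPM)."""
    if rng.random() >= fim_rate:
        return text  # leave as an ordinary left-to-right sample
    # Pick two cut points that split the text into prefix / middle / suffix
    lo, hi = sorted(rng.randint(0, len(text)) for _ in range(2))
    prefix, middle, suffix = text[:lo], text[lo:hi], text[hi:]
    if rng.random() < fim_spm_rate:
        # SPM: the suffix comes first, then prefix and middle are generated in order
        return f"{FIM_PREFIX}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{prefix}{middle}"
    # PSM: prefix, then suffix; the middle has been moved to the end
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"
```

Because the middle is moved to the end, ordinary next-token prediction on the rewritten string teaches the model to generate the missing middle while conditioning on both sides.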

Why PEFT? Full fine-tuning is expensive. Let’s look at some numbers to put things in perspective:

Minimum GPU memory required for full fine-tuning:

- Weight: 2 bytes (Mixed-Precision training)

- Weight gradient: 2 bytes

- Optimizer state when using Adam: 4 bytes for original FP32 weight + 8 bytes for first and second moment estimates

- Cost per parameter adding all the above: 16 bytes per parameter

- 15.5B model -> 248GB of GPU memory without even considering the huge memory requirements for storing intermediate activations -> minimum 4X A100 80GB GPUs required
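Here is the same back-of-the-envelope estimate in code. It uses the per-parameter byte counts listed above, counts 1 GB as 10^9 bytes, and leaves activations out, as the list does:

```python
import math

# Bytes per parameter for mixed-precision full fine-tuning with Adam
BYTES_PER_PARAM = {
    "16-bit weight": 2,
    "16-bit weight gradient": 2,
    "fp32 master weight": 4,
    "Adam first + second moments": 8,
}


def full_finetune_memory_gb(num_params: float) -> float:
    """GPU memory (GB) for weights, gradients and optimizer state, excluding activations."""
    return num_params * sum(BYTES_PER_PARAM.values()) / 1e9


starcoder_gb = full_finetune_memory_gb(15.5e9)
min_a100_80gb = math.ceil(starcoder_gb / 80)
```

For 15.5B parameters this gives 248 GB. Rounding 248 / 80 up gives the minimum of 4 A100 80GB GPUs quoted above.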

Since the hardware requirements are huge, we’ll be using parameter-efficient fine-tuning with QLoRA. Here are the minimal GPU memory requirements for fine-tuning StarCoder using QLoRA:

trainable params: 110,428,160 || all params: 15,627,884,544 || trainable%: 0.7066097761926236

- Base model Weight: 0.5 bytes * 15.51B frozen params = 7.755 GB

- Adapter weight: 2 bytes * 0.11B trainable params = 0.22GB

- Weight gradient: 2 bytes * 0.11B trainable params = 0.22GB

- Optimizer state when using Adam: 4 bytes * 0.11B trainable params * 3 = 1.32GB

- Adding all the above -> 9.51 GB ~ 10GB -> 1 A100 40GB GPU required 🤯. The reason for choosing an A100 40GB GPU is that the intermediate activations for long sequence lengths of 2048 and a batch size of 4 during training lead to higher memory requirements. As we’ll see below, the GPU memory required is 26GB, which fits on an A100 40GB GPU. Also, A100 GPUs have better compatibility with Flash Attention 2.
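The QLoRA budget above can also be written as a small function. The byte counts come from the list: 0.5 bytes per frozen 4-bit weight, 2 bytes each for adapter weights and their gradients, and 3 × 4 bytes of fp32 optimizer state per trainable parameter. Plugging in the exact parameter counts from the `trainable params` line instead of the rounded ones gives a very similar total:

```python
def qlora_memory_gb(frozen_params: float, trainable_params: float) -> float:
    """GPU memory (GB) for QLoRA weights, gradients and Adam state, excluding activations."""
    base_weights = 0.5 * frozen_params       # 4-bit quantized, frozen base model
    adapter_weights = 2 * trainable_params   # 16-bit LoRA weights
    adapter_grads = 2 * trainable_params     # 16-bit LoRA gradients
    optimizer = 4 * 3 * trainable_params     # fp32 copy + two Adam moments
    return (base_weights + adapter_weights + adapter_grads + optimizer) / 1e9


rounded = qlora_memory_gb(15.51e9, 0.11e9)

total_params, trainable_params = 15_627_884_544, 110_428_160
exact = qlora_memory_gb(total_params - trainable_params, trainable_params)
```

`rounded` comes out at about 9.515 GB and `exact` at about 9.53 GB. Both are in line with the ~10 GB figure before activations are taken into account.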

In the above calculations, we didn’t consider the memory required for intermediate activations, which is considerably large. We leverage Flash Attention V2 and Gradient Checkpointing to overcome this issue.

- For QLoRA along with Flash Attention V2 and gradient checkpointing, the total memory occupied by the model on a single A100 40GB GPU is 26 GB with a batch size of 4.

- For full fine-tuning using FSDP along with Flash Attention V2 and Gradient Checkpointing, the memory occupied per GPU ranges from 70 GB to 77.6 GB with a per_gpu_batch_size of 1.

Please refer to model-memory-usage to easily calculate how much vRAM is needed to train and perform big model inference on a model hosted on the 🤗 Hugging Face Hub.

Full Finetuning

We’ll look at how to do full fine-tuning of bigcode/starcoder (15B params) on 8 A100 80GB GPUs using the PyTorch Fully Sharded Data Parallel (FSDP) technique. For more information on FSDP, please refer to Fine-tuning Llama 2 70B using PyTorch FSDP and Accelerate Large Model Training using PyTorch Fully Sharded Data Parallel.

Resources

- Codebase: link. It uses the recently added Flash Attention V2 support in Transformers.

- FSDP Config: fsdp_config.yaml

- Model: bigcode/starcoder

- Dataset: smangrul/hf-stack-v1

- Fine-tuned Model: smangrul/peft-lora-starcoder15B-v2-personal-copilot-A100-40GB-colab

The command to launch training is given at run_fsdp.sh.

accelerate launch --config_file "configs/fsdp_config.yaml" train.py \
    --model_path "bigcode/starcoder" \
    --dataset_name "smangrul/hf-stack-v1" \
    --subset "data" \
    --data_column "content" \
    --split "train" \
    --seq_length 2048 \
    --max_steps 2000 \
    --batch_size 1 \
    --gradient_accumulation_steps 2 \
    --learning_rate 5e-5 \
    --lr_scheduler_type "cosine" \
    --weight_decay 0.01 \
    --num_warmup_steps 30 \
    --eval_freq 100 \
    --save_freq 500 \
    --log_freq 25 \
    --num_workers 4 \
    --bf16 \
    --no_fp16 \
    --output_dir "starcoder-personal-copilot-A100-40GB-colab" \
    --fim_rate 0.5 \
    --fim_spm_rate 0.5 \
    --use_flash_attn
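Here is a quick sanity check on the scale of this run. It assumes the FSDP config launches one process on each of the 8 GPUs (the config file isn’t reproduced here) and that every training sample is packed to the full `--seq_length`. Under those assumptions, the flags above imply:

```python
def training_budget(num_gpus: int, per_gpu_batch: int, grad_accum: int,
                    seq_length: int, max_steps: int) -> dict:
    """Global batch size and token counts implied by data-parallel training flags."""
    global_batch = num_gpus * per_gpu_batch * grad_accum  # sequences per optimizer step
    tokens_per_step = global_batch * seq_length
    return {
        "global_batch": global_batch,
        "tokens_per_step": tokens_per_step,
        "total_tokens": tokens_per_step * max_steps,
    }


budget = training_budget(num_gpus=8, per_gpu_batch=1, grad_accum=2, seq_length=2048, max_steps=2000)
```

That is a global batch of 16 sequences (32,768 tokens per optimizer step), or about 65.5M tokens over the 2,000 steps.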

The total training time was 9 hours. Taking the cost of $12.00/hr based on lambdalabs for 8x A100 80GB GPUs, the total cost would be $108.

PEFT

We’ll look at how to use QLoRA for fine-tuning bigcode/starcoder (15B params) on a single A100 40GB GPU using 🤗 PEFT. For more information on QLoRA and PEFT methods, please refer to Making LLMs even more accessible with bitsandbytes, 4-bit quantization and QLoRA and 🤗 PEFT: Parameter-Efficient Fine-Tuning of Billion-Scale Models on Low-Resource Hardware.

Resources

- Codebase: link. It uses the recently added Flash Attention V2 support in Transformers.

- Colab notebook: link. Make sure to choose an A100 GPU with the High RAM setting.

- Model: bigcode/starcoder

- Dataset: smangrul/hf-stack-v1

- QLoRA Fine-tuned Model: smangrul/peft-lora-starcoder15B-v2-personal-copilot-A100-40GB-colab

The command to launch training is given at run_peft.sh. The total training time was 12.5 hours. Taking the cost of $1.10/hr based on lambdalabs, the total cost would be $13.75. That’s pretty good 🚀! In terms of cost, it’s 7.8X lower than the cost of full fine-tuning.
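Here is the cost comparison spelled out with the hourly prices and training times quoted above:

```python
def training_cost(hours: float, usd_per_hour: float) -> float:
    """Total cost of a training run at a fixed hourly price."""
    return hours * usd_per_hour


full_ft_cost = training_cost(9, 12.00)   # 8x A100 80GB, FSDP full fine-tuning
qlora_cost = training_cost(12.5, 1.10)   # 1x A100 40GB, QLoRA
savings = full_ft_cost / qlora_cost
```

`full_ft_cost` is $108.00, `qlora_cost` is $13.75 (up to floating-point rounding), and `savings` is about 7.85.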

Comparison

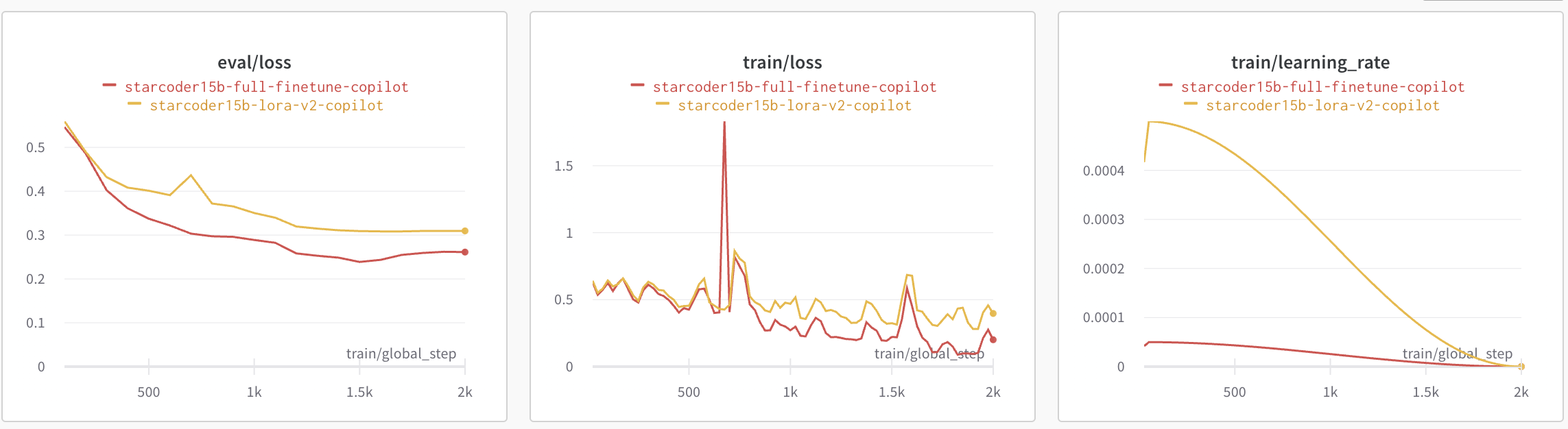

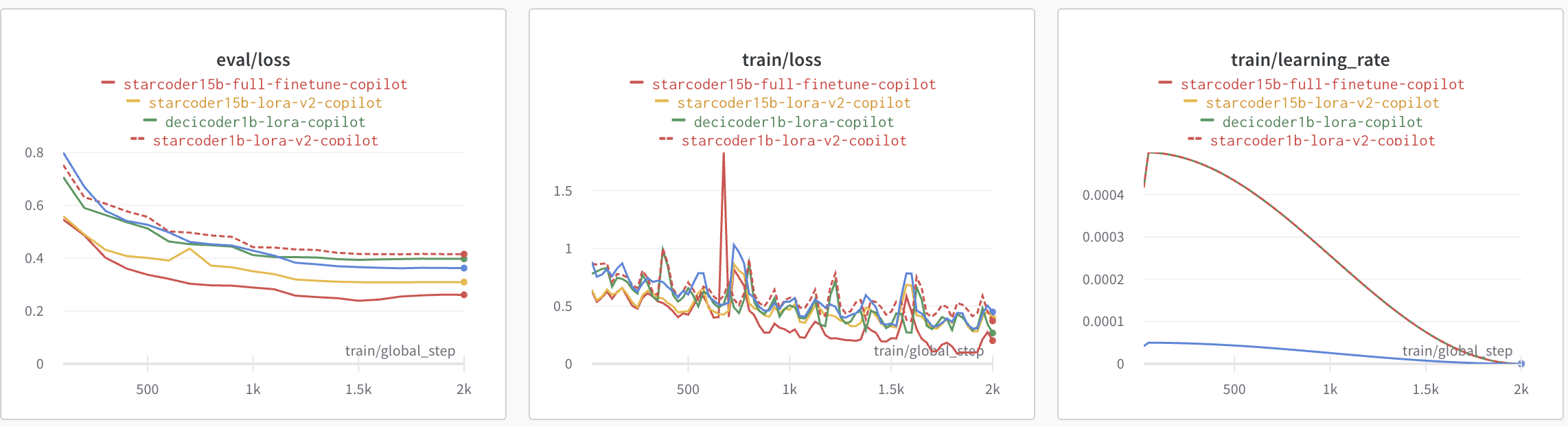

The plots above show the eval loss, train loss and learning rate schedule for QLoRA vs full fine-tuning. We observe that full fine-tuning leads to slightly lower loss and converges a bit faster compared to QLoRA. The learning rate for PEFT fine-tuning is 10X higher than that of full fine-tuning.

To make sure that our QLoRA model doesn’t suffer from catastrophic forgetting, we ran the Python HumanEval benchmark on it. Below are the results we got. Pass@1 measures the pass rate of completions when only a single generated code candidate is considered per problem. We can observe that the performance on humaneval-python is comparable between the base bigcode/starcoder (15B params) and the fine-tuned PEFT model smangrul/peft-lora-starcoder15B-v2-personal-copilot-A100-40GB-colab.

| Model | Pass@1 |
|---|---|
| bigcode/starcoder | 33.57 |
| smangrul/peft-lora-starcoder15B-v2-personal-copilot-A100-40GB-colab | 33.37 |
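For reference, pass@k is usually computed with the unbiased estimator introduced alongside HumanEval. You draw n samples for a problem, count the c that pass the unit tests, and estimate the chance that at least one of k randomly chosen samples passes. The benchmark score is the mean over problems. The number of samples per problem behind the scores above isn’t specified, so this is a generic sketch. With k = 1, the estimator reduces to the fraction of passing samples, c / n.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem with n samples, c of which pass."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems given (n, c) pairs, as a percentage."""
    return 100.0 * sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```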

Let’s now look at some qualitative samples. In our manual evaluation, we noticed that QLoRA led to slight overfitting, so we down-weighted it by creating a new weighted adapter with weight 0.8 via the add_weighted_adapter utility of PEFT.

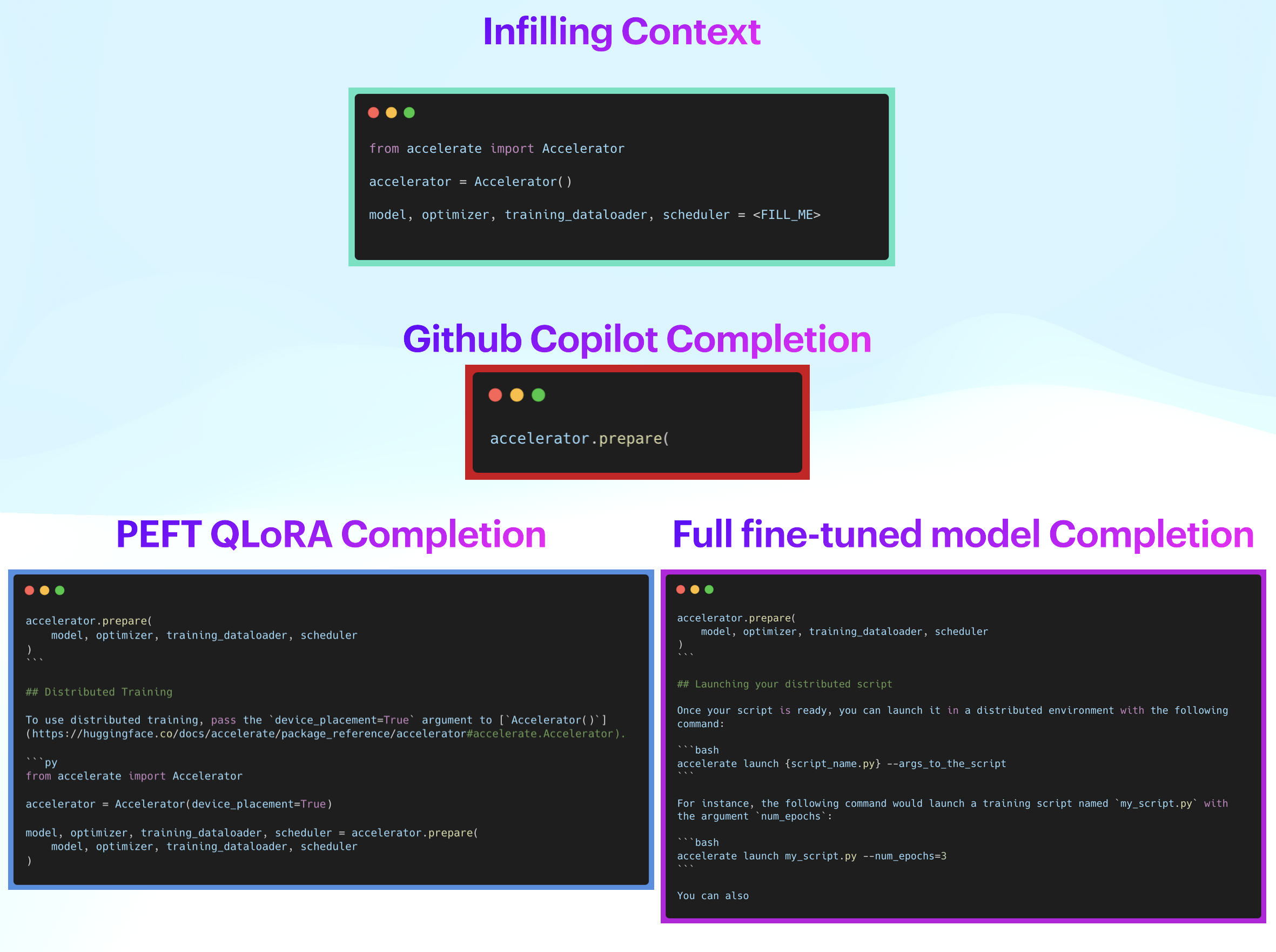

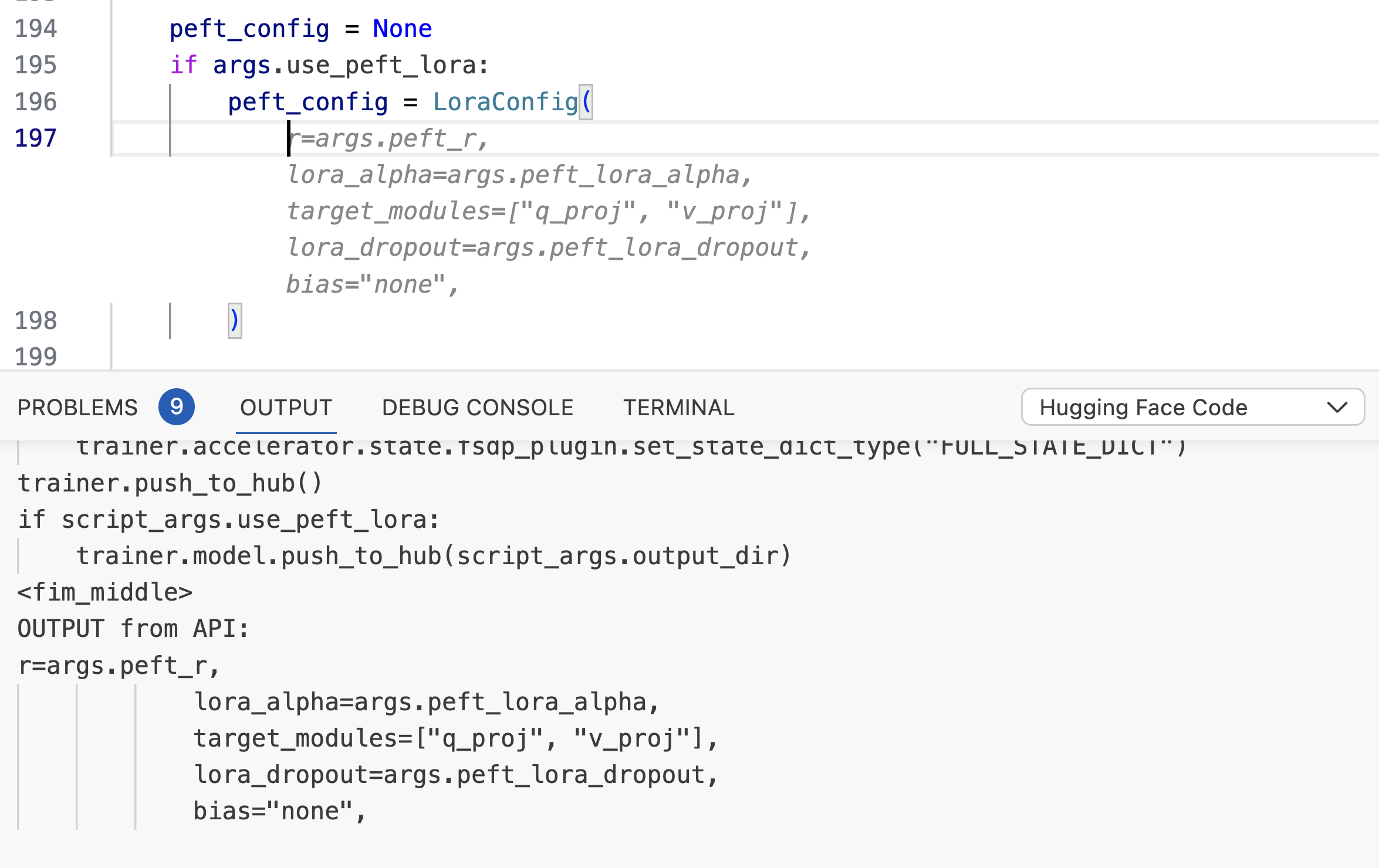

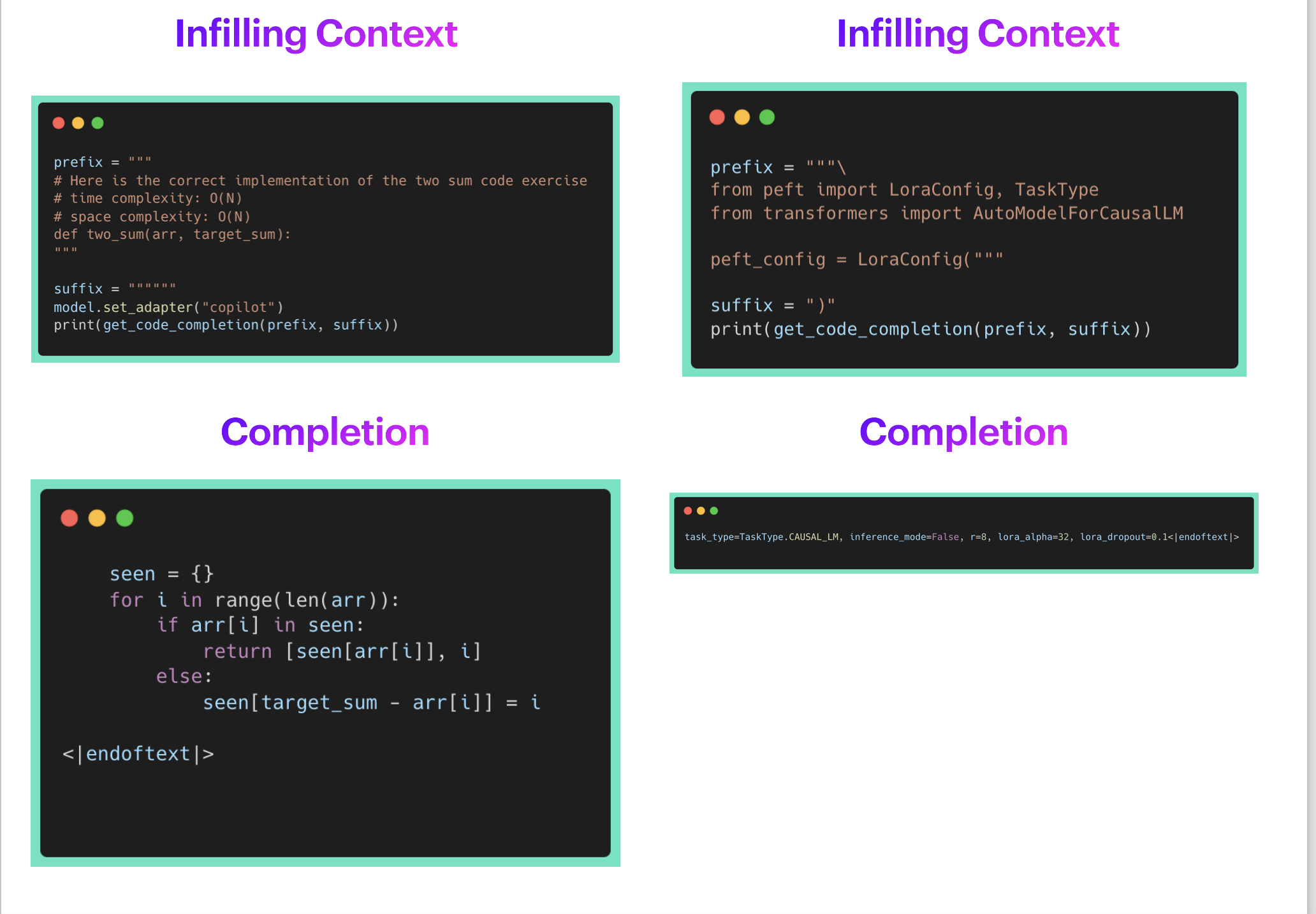

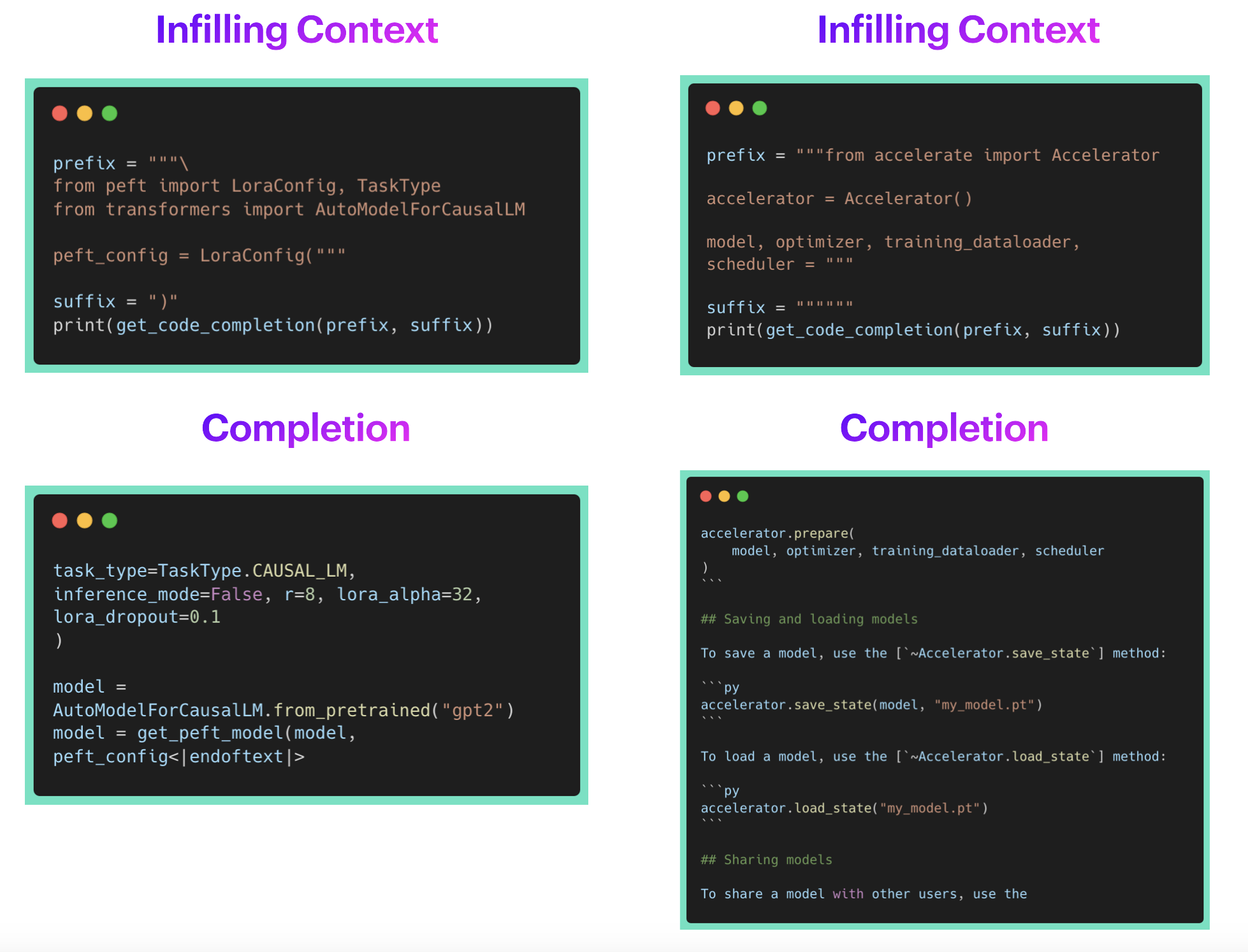

We’ll look at 2 code infilling examples where the task of the model is to fill the part denoted by the

In the example above, the completion from GitHub Copilot is along the right lines but doesn’t help much. On the other hand, completions from the QLoRA and full fine-tuned models correctly infill the entire function call with the necessary parameters. However, they also add a lot more noise afterwards. This could be controlled with a post-processing step that limits completions to closing brackets or new lines. Note that both the QLoRA and full fine-tuned models produce results of similar quality.
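One possible version of that post-processing step is sketched below as a hypothetical helper (it is not part of our inference notebooks). It keeps the infilled text up to the first line break reached once all brackets opened inside the completion are closed. Alternatively, it stops right after a closing bracket that matches one opened before the cursor. It doesn’t understand string literals or comments, so brackets inside them can cut a completion early.

```python
OPENING, CLOSING = "([{", ")]}"


def trim_completion(completion: str) -> str:
    """Drop trailing noise from an infill completion."""
    depth = 0
    for i, ch in enumerate(completion):
        if ch in OPENING:
            depth += 1
        elif ch in CLOSING:
            depth -= 1
            if depth < 0:
                # This bracket closes one opened in the prefix: keep it and stop
                return completion[: i + 1]
        elif ch == "\n" and depth == 0 and completion[:i].strip():
            # A line ended with all brackets balanced: stop before the newline
            return completion[:i]
    return completion
```

For example, `trim_completion('LoraConfig(r=8, lora_alpha=16)\nprint("noise")')` keeps only `LoraConfig(r=8, lora_alpha=16)`.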

In the second example above, GitHub Copilot didn’t give any completion. This may be because 🤗 PEFT is a recent library and not yet part of Copilot’s training data, which is exactly the type of problem we are trying to address. On the other hand, completions from the QLoRA and full fine-tuned models correctly infill the entire function call with the necessary parameters. Again, note that both the QLoRA and the full fine-tuned models give generations of similar quality. Inference code with various examples for the full fine-tuned model and the PEFT model is available at Full_Finetuned_StarCoder_Inference.ipynb and PEFT_StarCoder_Inference.ipynb, respectively.

Therefore, we can observe that the generations from both variants are as expected. Awesome! 🚀

How do I use it in VS Code?

You can easily configure a custom code-completion LLM in VS Code using the 🤗 llm-vscode VS Code Extension, together with hosting the model via 🤗 Inference Endpoints. We’ll go through the required steps below. You can learn more about deploying an endpoint in the inference endpoints documentation.

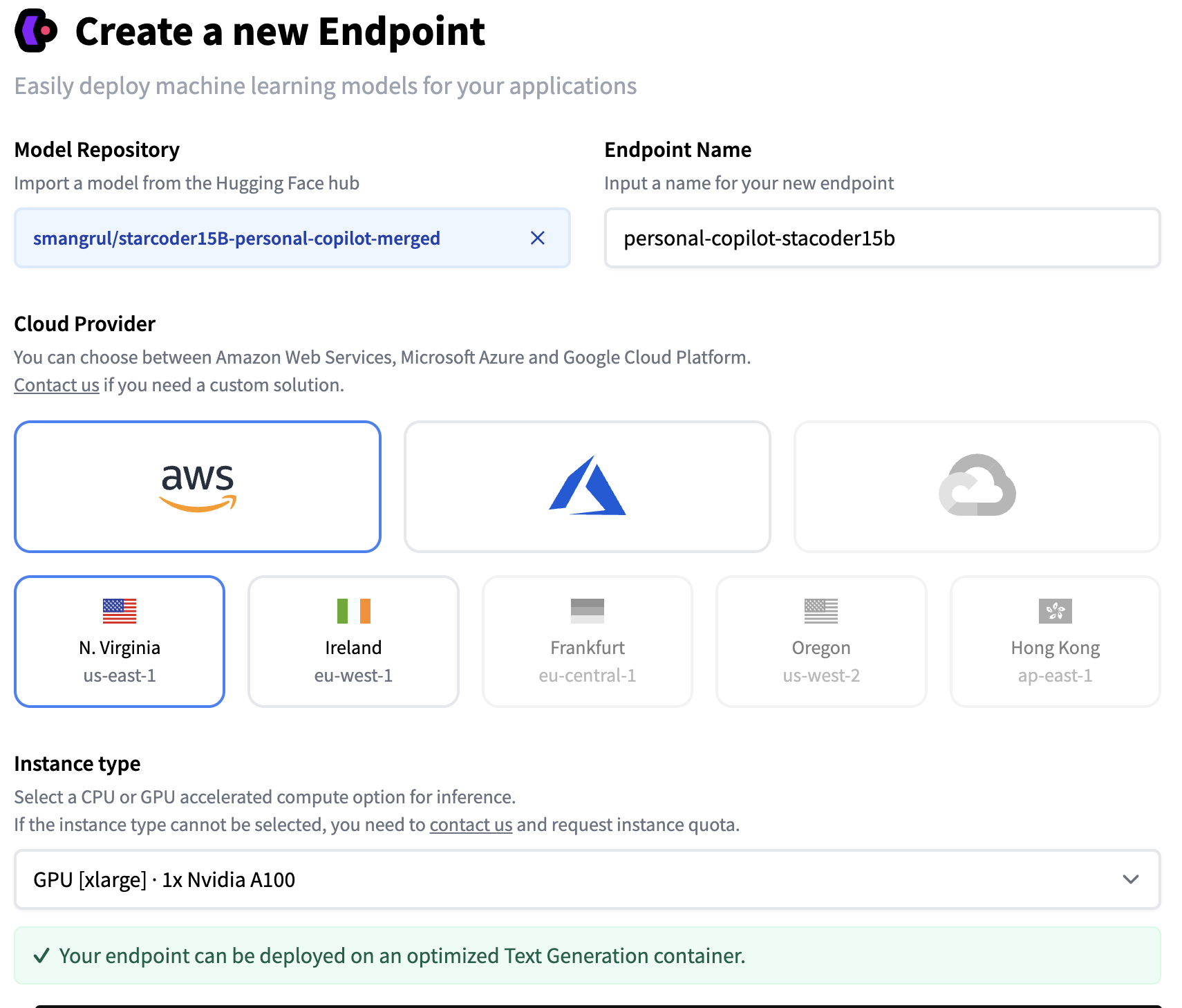

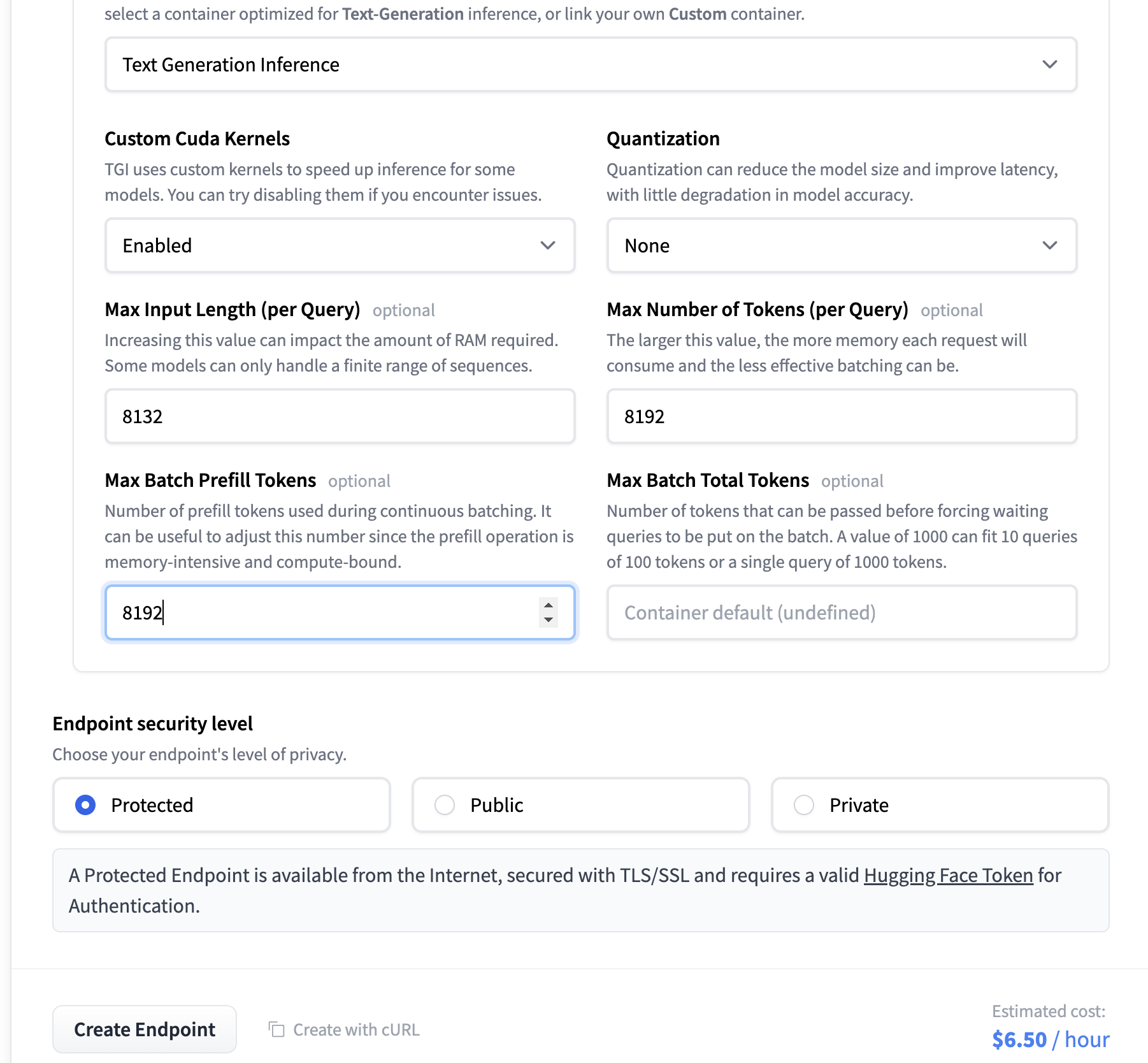

Setting up an Inference Endpoint

Below are the screenshots with the steps we followed to create our custom Inference Endpoint. We used our QLoRA model, exported as a full-sized merged model that can be easily loaded in transformers.

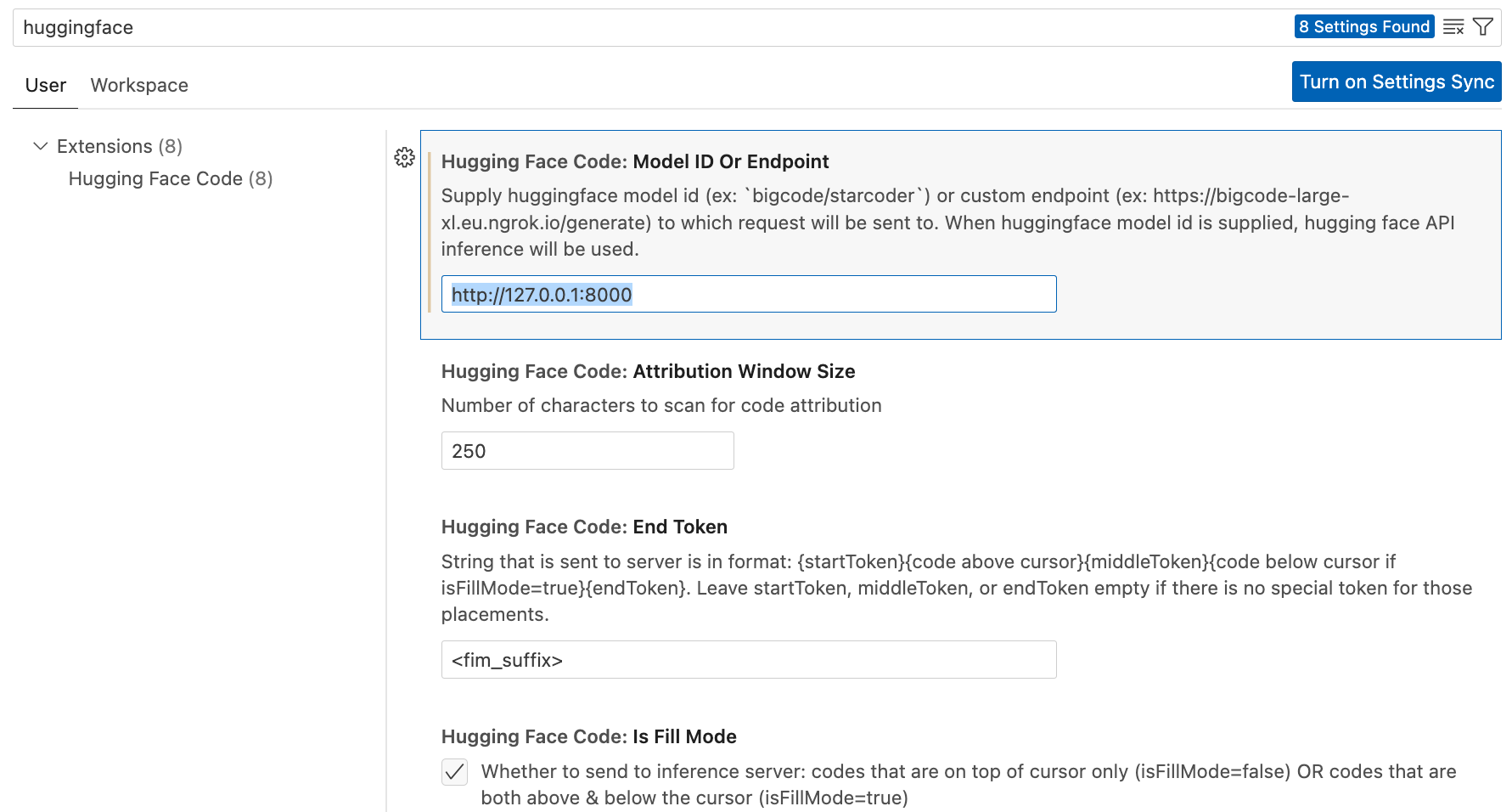

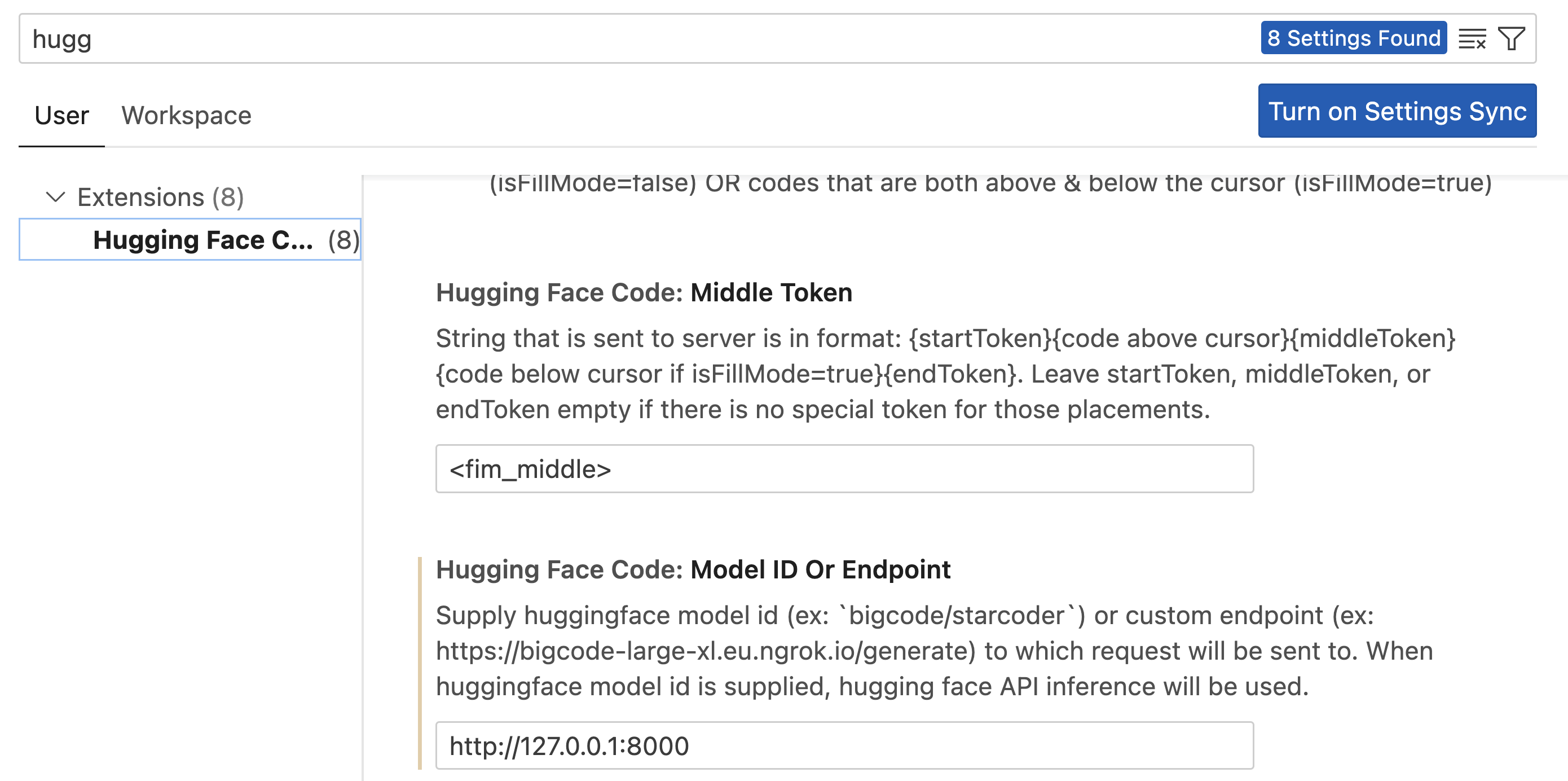
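Exporting the QLoRA adapter as a full-sized merged model can be sketched with PEFT’s `merge_and_unload`. This is a minimal sketch rather than our exact export code. It assumes the base model is reloaded in bf16 instead of 4-bit, so that the LoRA deltas end up in ordinary 16-bit weights. The function name and output directory are hypothetical. The heavy imports sit inside the function so the module can be imported without them.

```python
def export_merged_model(base_model_id: str, adapter_id: str, output_dir: str) -> str:
    """Merge a LoRA adapter into its base model and save a standalone checkpoint."""
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Load the base weights in bf16 (not 4-bit) so the merged result is a plain model
    base = AutoModelForCausalLM.from_pretrained(base_model_id, torch_dtype=torch.bfloat16)
    model = PeftModel.from_pretrained(base, adapter_id)
    # Fold the LoRA deltas into the base weights and drop the PEFT wrappers
    merged = model.merge_and_unload()
    merged.save_pretrained(output_dir)
    AutoTokenizer.from_pretrained(base_model_id).save_pretrained(output_dir)
    return output_dir
```

For example, call `export_merged_model("bigcode/starcoder", "smangrul/peft-lora-starcoder15B-v2-personal-copilot-A100-40GB-colab", "starcoder-personal-copilot-merged")`. For a 15.5B model in bf16, expect to need roughly 31 GB of memory for the weights alone.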

Setting up the VS Code Extension

Just follow the installation steps. In the settings, replace the endpoint in the field below so that it points to the HF Inference Endpoint you deployed.

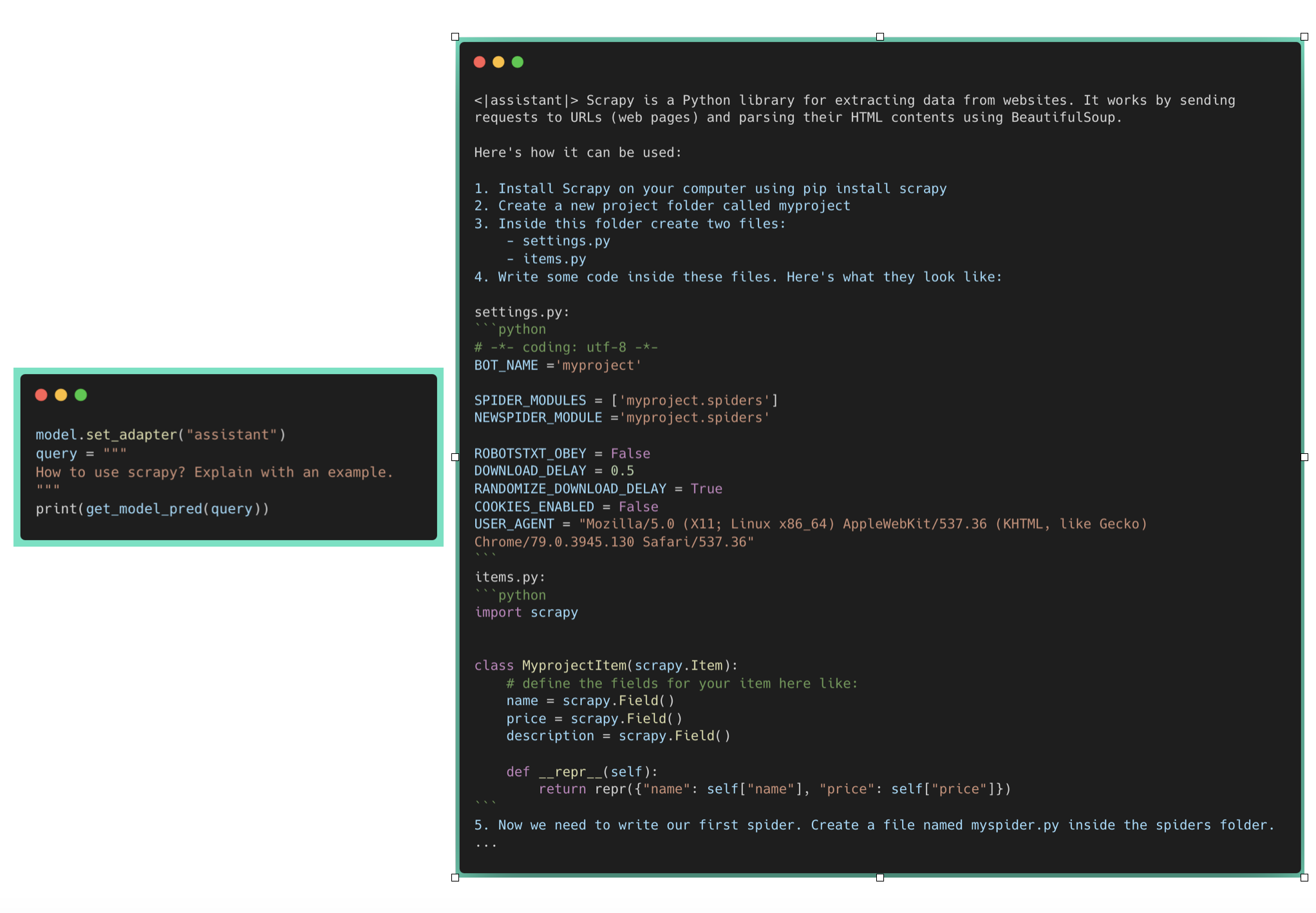

Usage will look like this:

Finetuning your own Code Chat Assistant

So far, the models we trained were specifically trained as personal co-pilots for code completion tasks. They aren’t trained to carry out conversations or to answer questions. Octocoder and StarChat are great examples of models that can do this. This section briefly describes how to achieve that.

Resources

- Codebase: link. It uses the recently added Flash Attention V2 support in Transformers.

- Colab notebook: link. Make sure to choose an A100 GPU with the High RAM setting.

- Model: bigcode/starcoderplus

- Dataset: smangrul/code-chat-assistant-v1. Mixture of LIMA+GUANACO with proper formatting in a ready-to-train format.

- Trained Model: smangrul/peft-lora-starcoderplus-chat-asst-A100-40GB-colab

Dance of LoRAs

If you have dabbled with Stable Diffusion models and LoRAs for making your own Dreambooth models, you might be familiar with the concepts of combining different LoRAs with different weights, and of using a LoRA model with a different base model than the one on which it was trained. In the text/code domain, this remains unexplored territory. We carried out experiments in this regard and observed very promising findings. Are you ready? Let’s go! 🚀

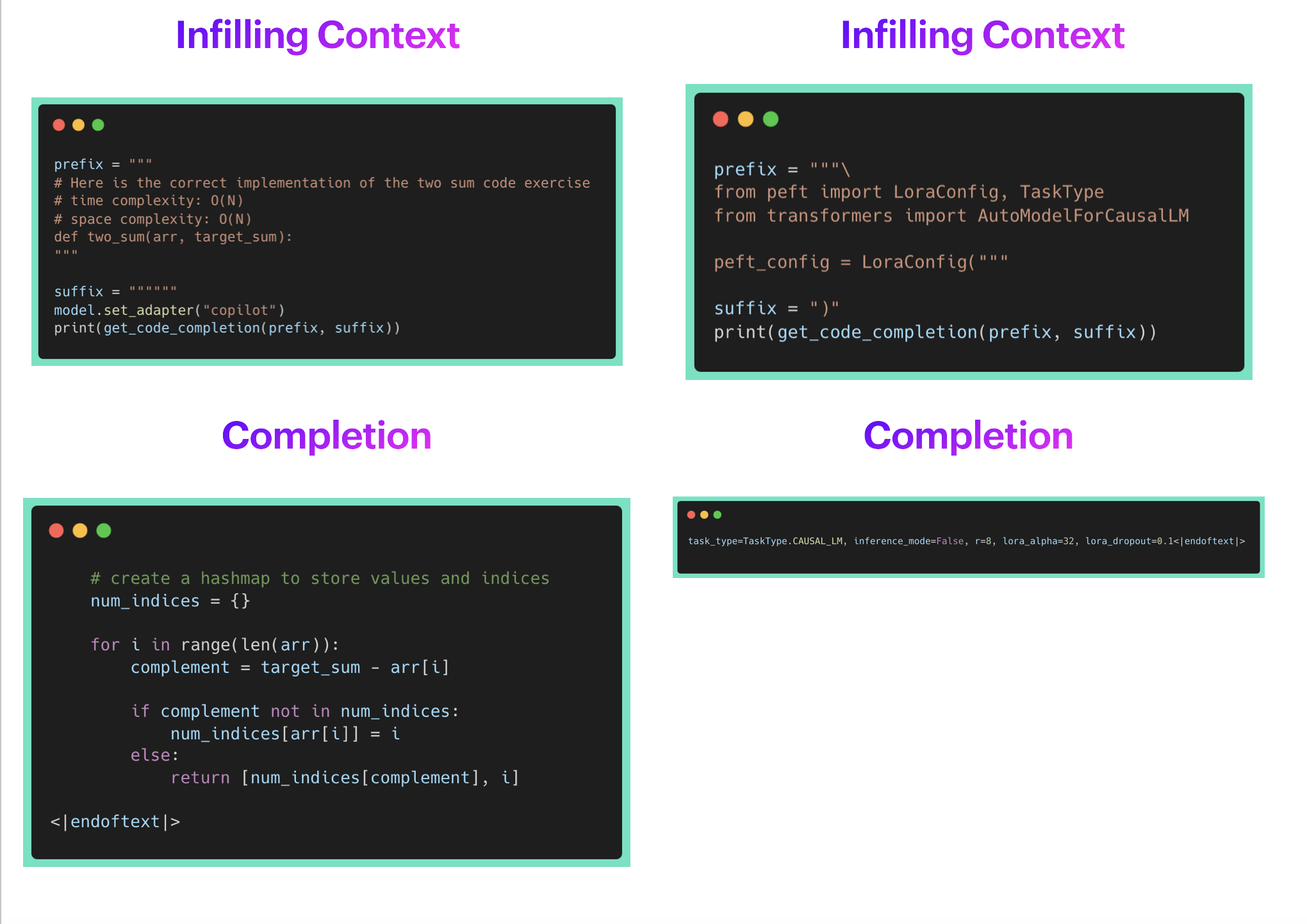

Mix-and-Match LoRAs

PEFT currently supports 3 ways of combining LoRA models: linear, svd and cat. For more details, refer to tuners#peft.LoraModel.add_weighted_adapter.
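To build intuition for the `cat` option, here is a dependency-free sketch of the underlying linear algebra. It is not PEFT’s implementation, which also has to account for LoRA scaling factors. A LoRA update is ΔW = B A. Stacking the weighted B matrices side by side and the A matrices on top of each other yields a single LoRA of rank r1 + r2. Its update equals the weighted sum of the individual updates exactly.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain nested-list matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def cat_combine(adapters: Sequence[Tuple[Matrix, Matrix]], weights: Sequence[float]) -> Tuple[Matrix, Matrix]:
    """Merge (B, A) LoRA pairs into one pair by concatenating along the rank dimension."""
    d_out = len(adapters[0][0])
    b_cat: Matrix = [[] for _ in range(d_out)]
    a_cat: Matrix = []
    for (b, a), w in zip(adapters, weights):
        for out_row, b_row in zip(b_cat, b):
            out_row.extend(w * x for x in b_row)  # weighted columns of B
        a_cat.extend(a)                           # rows of A, unchanged
    return b_cat, a_cat
```

Since the combined rank is the sum of the input ranks, this approach also works for adapters with different ranks, at the cost of a larger merged adapter.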

Our notebook Dance_of_LoRAs.ipynb includes all the inference code and various LoRA loading combinations, like loading the chat assistant on top of starcoder instead of starcoderplus, which is the base model that we fine-tuned.

Here, we’ll consider 2 abilities (chatting/QA and code completion) on 2 data distributions (the top 10 public HF codebases and a generic codebase). That gives us 4 axes on which we’ll perform some qualitative evaluation.

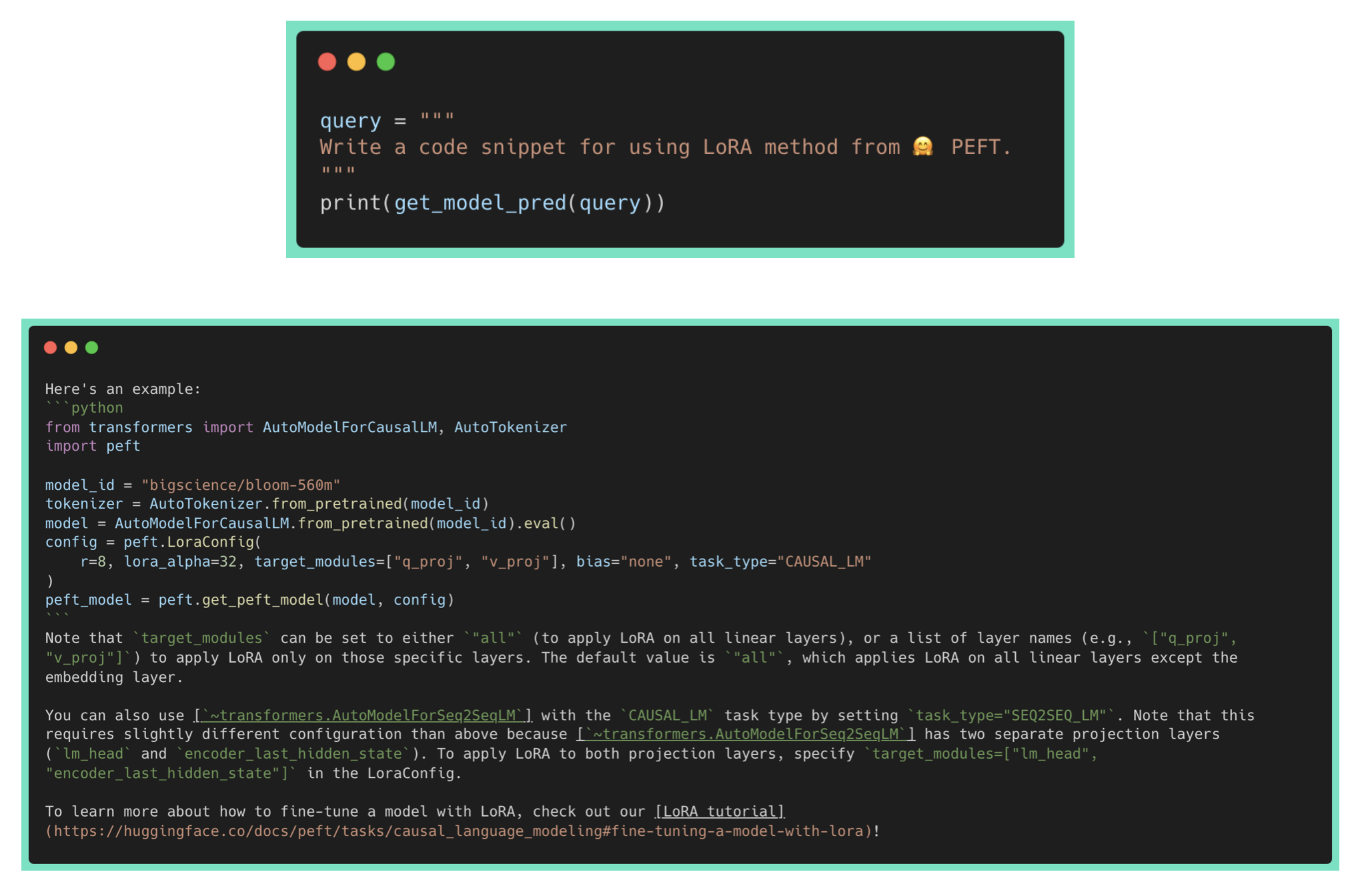

First, let us consider the chatting/QA task.

If we disable adapters, we observe that the task fails for both datasets, as the base model (starcoder) is only meant for code completion and not suitable for chatting/question answering. Enabling the copilot adapter performs similarly to the disabled case because this LoRA was also specifically fine-tuned for code completion.

Now, let’s enable the assistant adapter.

Question Answering based on generic code

Question Answering based on HF code

We can observe that the generic question about scrapy is answered properly. However, it fails for the HF code-related question, which wasn’t part of its pretraining data.

Let us now consider the code-completion task.

On disabling adapters, we observe that the code completion for the generic two-sum works as expected. However, the HF code completion fails with wrong params to LoraConfig, because the base model hasn’t seen it in its pretraining data. Enabling the assistant performs similarly to the disabled case because it was trained on natural language conversations that didn’t contain any Hugging Face code repos.

Now, let’s enable the copilot adapter.

We can observe that the copilot adapter gets it right in both cases. Therefore, it performs as expected for code completion when working with the HF-specific codebase as well as with generic codebases.

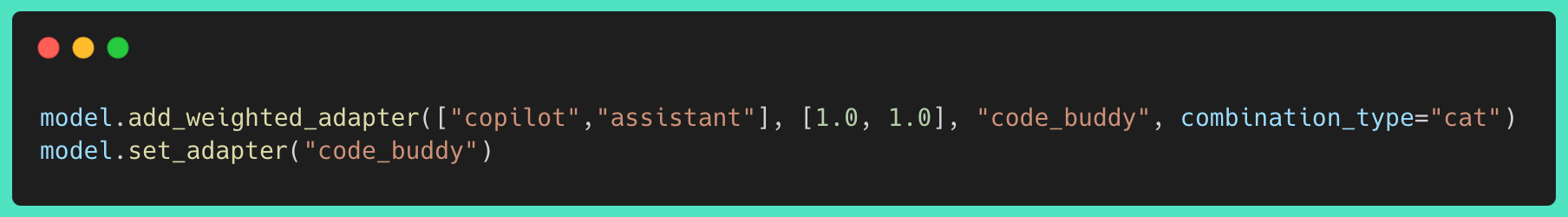

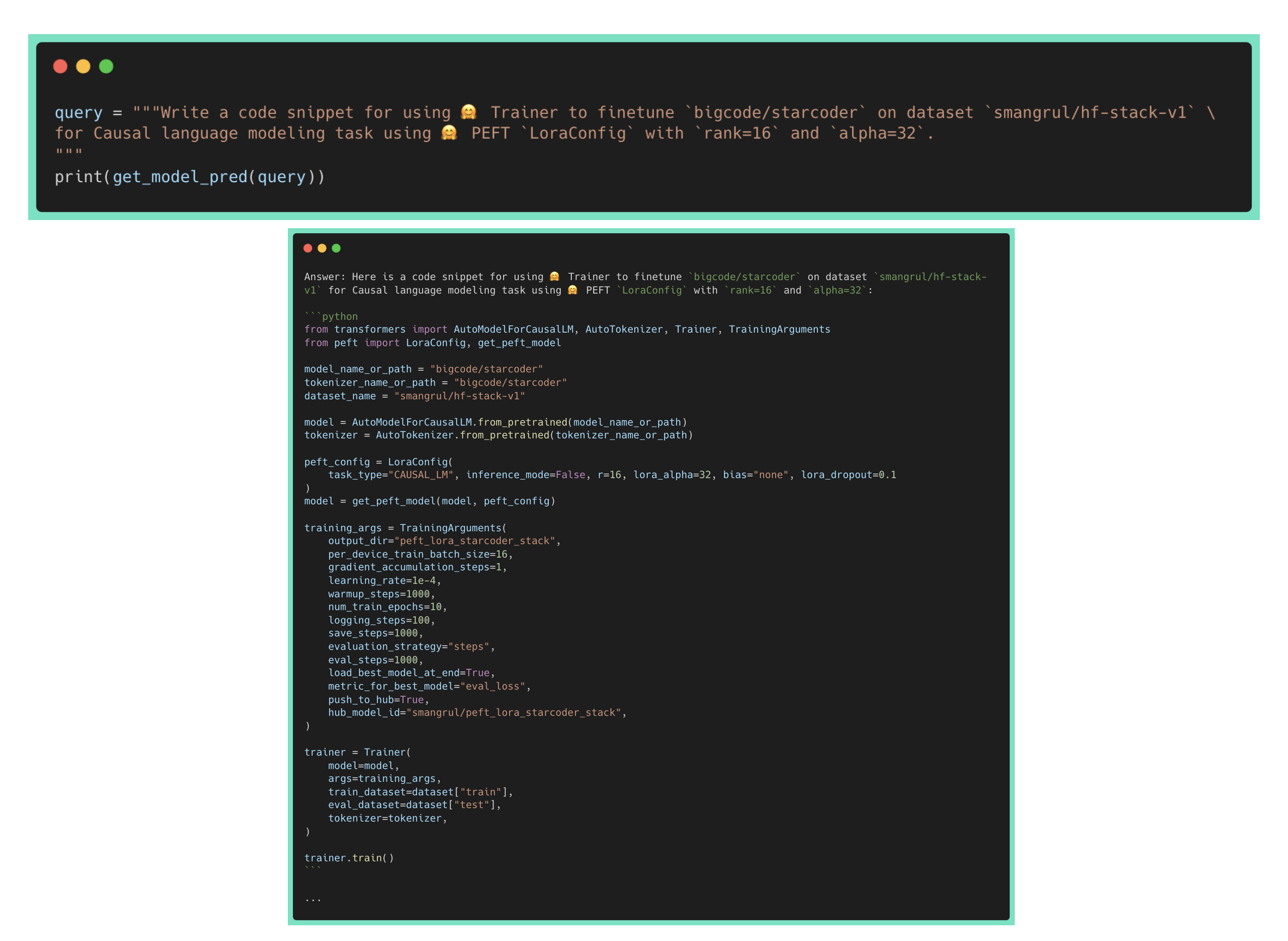

Now, as a user, I want to combine the abilities of the assistant and the copilot. This will enable me to use it for code completion while coding in an IDE, and also as a chatbot to answer my questions about APIs, classes, methods and documentation. It should be able to answer questions like “How do I use X?” and “Please write a code snippet for Y” on my codebase.

PEFT lets you do this via add_weighted_adapter. Let’s create a new adapter, code_buddy, with equal weights for the assistant and copilot adapters.
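Here is a minimal sketch of that step with 🤗 PEFT. The adapter repos are the two trained above. Loading them onto `bigcode/starcoder` in bf16, the `copilot`/`assistant` adapter names and `combination_type="cat"` are assumptions for illustration. PEFT supports several combination types, and the default may differ across versions. The imports sit inside the function so the module can be imported without the heavy dependencies.

```python
BASE_MODEL = "bigcode/starcoder"
COPILOT_ADAPTER = "smangrul/peft-lora-starcoder15B-v2-personal-copilot-A100-40GB-colab"
ASSISTANT_ADAPTER = "smangrul/peft-lora-starcoderplus-chat-asst-A100-40GB-colab"


def build_code_buddy(base_model_id: str = BASE_MODEL):
    """Load both LoRAs on one base model and combine them into a `code_buddy` adapter."""
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM

    base = AutoModelForCausalLM.from_pretrained(
        base_model_id, torch_dtype=torch.bfloat16, device_map="auto"
    )
    # The first adapter creates the PeftModel; the second is loaded alongside it
    model = PeftModel.from_pretrained(base, COPILOT_ADAPTER, adapter_name="copilot")
    model.load_adapter(ASSISTANT_ADAPTER, adapter_name="assistant")
    # Equal weights; "cat" concatenates the LoRA matrices instead of averaging them
    model.add_weighted_adapter(
        adapters=["assistant", "copilot"],
        weights=[1.0, 1.0],
        adapter_name="code_buddy",
        combination_type="cat",
    )
    model.set_adapter("code_buddy")  # make the combined adapter the active one
    return model
```

After building, `model.set_adapter("copilot")` or `model.set_adapter("assistant")` switches back to a single adapter, and `with model.disable_adapter():` runs the plain base model.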

Now, let’s see how code_buddy performs on the chatting/question-answering tasks.

We can observe that code_buddy performs much better than the assistant or copilot adapters alone! It is able to answer the “write a code snippet” request by showing how to use a specific HF repo API. However, it also hallucinates wrong links/explanations, which remains an open challenge for LLMs.

Below is the performance of code_buddy on code completion tasks.

We can observe that code_buddy performs on par with copilot, which was specifically finetuned for this task.

Transfer LoRAs to different base models

We can also transfer the LoRA models to different base models.

We’ll take the hot-off-the-press Octocoder model and apply to it the LoRA we trained above on the starcoder base model. Please go through the notebook PEFT_Personal_Code_CoPilot_Adapter_Transfer_Octocoder.ipynb for the entire code.
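The core of the transfer is small, because Octocoder is a StarCoder fine-tune with the same architecture, so the adapter’s weight shapes line up. Here is a minimal sketch, assuming the `bigcode/octocoder` checkpoint on the Hub and greedy decoding. `compare_with_and_without_adapter` is a hypothetical helper. It generates once with the copilot LoRA active and once inside PEFT’s `disable_adapter()` context, mirroring the with/without comparison discussed below.

```python
COPILOT_ADAPTER = "smangrul/peft-lora-starcoder15B-v2-personal-copilot-A100-40GB-colab"


def compare_with_and_without_adapter(prompt: str, max_new_tokens: int = 128):
    """Generate from Octocoder with the copilot LoRA enabled and disabled."""
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained("bigcode/octocoder")
    base = AutoModelForCausalLM.from_pretrained(
        "bigcode/octocoder", torch_dtype=torch.bfloat16, device_map="auto"
    )
    # Attach the LoRA that was trained on top of bigcode/starcoder
    model = PeftModel.from_pretrained(base, COPILOT_ADAPTER, adapter_name="copilot")
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

    def generate() -> str:
        output = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
        return tokenizer.decode(output[0], skip_special_tokens=True)

    with_adapter = generate()
    with model.disable_adapter():  # temporarily run the plain Octocoder weights
        without_adapter = generate()
    return with_adapter, without_adapter
```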

Performance on the Code Completion task

We can observe that octocoder performs great. It is able to complete HF-specific code snippets. It is also able to complete generic code snippets, as seen in the notebook.

Performance on the Chatting/QA task

As Octocoder is trained to answer questions and carry out conversations about coding, let’s see if it can use our LoRA adapter to answer HF-specific questions.

Yay! It correctly explains in detail how to create a LoraConfig and the related PEFT model, correctly using the model name and dataset name as well as the parameter values of LoraConfig. With the adapter disabled, it fails to correctly use the LoraConfig API or to create a PEFT model, suggesting that these aren’t part of Octocoder’s training data.

How do I run it locally?

I know, after all this, you want to finetune starcoder on your codebase and use it locally on your consumer hardware, such as Mac laptops with M1 GPUs or Windows machines with RTX 4090/3090 GPUs …

Don’t worry, we’ve got you covered.

We will be using the super cool open source library mlc-llm 🔥. Specifically, we will be using the fork pacman100/mlc-llm, which has changes to get it working with the Hugging Face Code Completion extension for VS Code. On my Mac laptop with an M1 Metal GPU, the 15B model was painfully slow. Hence, we’ll go small and train a PEFT LoRA version as well as a fully finetuned version of bigcode/starcoderbase-1b. The training Colab notebooks are linked below:

- Colab notebook for Full fine-tuning and PEFT LoRA finetuning of starcoderbase-1b: link

The training loss, evaluation loss and learning rate schedules are plotted below:

Now, we’ll look at detailed steps for locally hosting the merged model smangrul/starcoder1B-v2-personal-copilot-merged and using it with the 🤗 llm-vscode VS Code Extension.

- Clone the repo

git clone --recursive https://github.com/pacman100/mlc-llm.git && cd mlc-llm/

- Install mlc-ai and mlc-chat (in editable mode):

pip install --pre --force-reinstall mlc-ai-nightly mlc-chat-nightly -f https://mlc.ai/wheels

cd python

pip uninstall mlc-chat-nightly

pip install -e "."

- Compile the model via:

time python3 -m mlc_llm.build --hf-path smangrul/starcoder1B-v2-personal-copilot-merged --target metal --use-cache=0

- Update the config with the following values in dist/starcoder1B-v2-personal-copilot-merged-q4f16_1/params/mlc-chat-config.json:

{
    "model_lib": "starcoder7B-personal-copilot-merged-q4f16_1",
    "local_id": "starcoder7B-personal-copilot-merged-q4f16_1",
    "conv_template": "code_gpt",
-    "temperature": 0.7,
+    "temperature": 0.2,
-    "repetition_penalty": 1.0,
    "top_p": 0.95,
-    "mean_gen_len": 128,
+    "mean_gen_len": 64,
-    "max_gen_len": 512,
+    "max_gen_len": 64,
    "shift_fill_factor": 0.3,
    "tokenizer_files": [
        "tokenizer.json",
        "merges.txt",
        "vocab.json"
    ],
    "model_category": "gpt_bigcode",
    "model_name": "starcoder1B-v2-personal-copilot-merged"
}
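If you’d rather script this edit, here is a small sketch using only the standard library. It applies the changes shown in the diff above: it sets the three new values and removes `repetition_penalty`. The default path is the one from this step; adjust it if your build directory differs.

```python
import json
from pathlib import Path

CONFIG_PATH = Path("dist/starcoder1B-v2-personal-copilot-merged-q4f16_1/params/mlc-chat-config.json")
UPDATES = {"temperature": 0.2, "mean_gen_len": 64, "max_gen_len": 64}
REMOVED_KEYS = ("repetition_penalty",)


def patch_mlc_chat_config(path: Path = CONFIG_PATH) -> dict:
    """Apply the generation settings from the diff to mlc-chat-config.json in place."""
    path = Path(path)
    config = json.loads(path.read_text())
    config.update(UPDATES)
    for key in REMOVED_KEYS:
        config.pop(key, None)  # ignore the key if it is already absent
    path.write_text(json.dumps(config, indent=4))
    return config
```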

- Run the local server:

python -m mlc_chat.rest --model dist/starcoder1B-v2-personal-copilot-merged-q4f16_1/params --lib-path dist/starcoder1B-v2-personal-copilot-merged-q4f16_1/starcoder1B-v2-personal-copilot-merged-q4f16_1-metal.so

- Change the endpoint of the HF Code Completion extension in VS Code to point to the local server:

- Open a new file in VS Code, paste the code below and place the cursor between the doc quotes, so that the model tries to infill the docstring:

Voila! ⭐️

The demo at the start of this post is this 1B model running locally on my Mac laptop.

Conclusion

In this blog post, we saw how to finetune starcoder to create a personal co-pilot that knows about our code. We called it 🤗 HugCoder, as we trained it on Hugging Face code 🙂 After looking at the data collection workflow, we compared training using QLoRA vs full fine-tuning. We also experimented with combining different LoRAs, which is still an unexplored technique in the text/code domain. For deployment, we examined remote inference using 🤗 Inference Endpoints, and also showed on-device execution of a smaller model with VS Code and MLC.

Please let us know if you use these methods for your own codebase!

Acknowledgements

We would like to thank Pedro Cuenca, Leandro von Werra, Benjamin Bossan, Sylvain Gugger and Loubna Ben Allal for their help with writing this blog post.