We’re excited to present an efficient non-diffusion text-to-image model named aMUSEd. It’s called so since it’s a open reproduction of Google’s MUSE. aMUSEd’s generation quality will not be one of the best and we’re releasing a research preview with a permissive license.

In contrast to the commonly used latent diffusion approach (Rombach et al. (2022)), aMUSEd employs a Masked Image Model (MIM) methodology. This not only requires fewer inference steps, as noted by Chang et al. (2023), but additionally enhances the model’s interpretability.

Just as MUSE, aMUSEd demonstrates an exceptional ability for style transfer using a single image, a feature explored in depth by Sohn et al. (2023). This aspect could potentially open latest avenues in personalized and style-specific image generation.

On this blog post, we will provide you with some internals of aMUSEd, show how you should use it for various tasks, including text-to-image, and show fine-tune it. Along the way in which, we are going to provide all of the essential resources related to aMUSEd, including its training code. Let’s start 🚀

Table of contents

We’ve built a demo for readers to play with aMUSEd. You possibly can try it out in this Space or within the playground embedded below:

How does it work?

aMUSEd relies on Masked Image Modeling. It makes for a compelling use case for the community to explore components which are known to work in language modeling within the context of image generation.

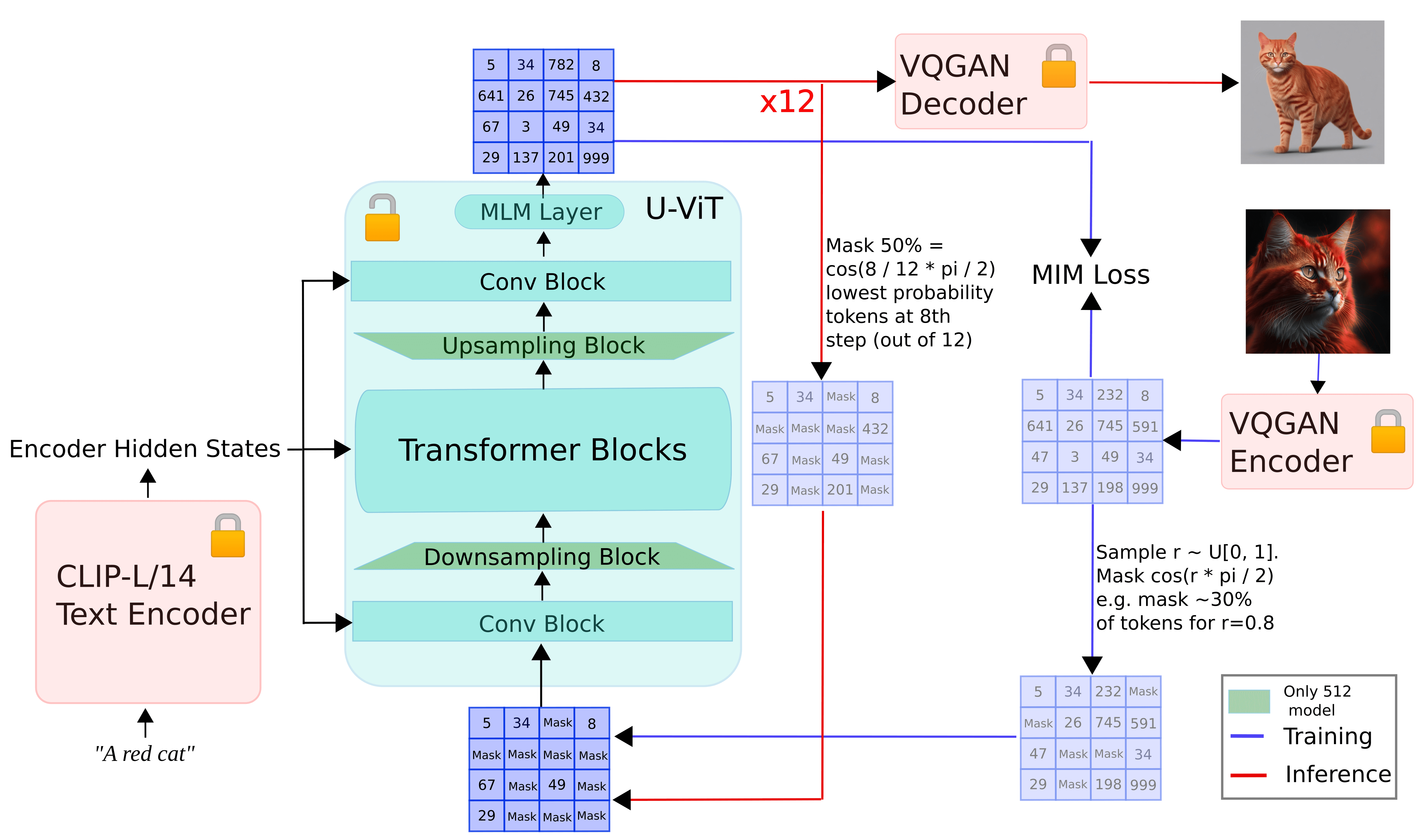

The figure below presents a pictorial overview of how aMUSEd works.

During training:

- input images are tokenized using a VQGAN to acquire image tokens

- the image tokens are then masked in keeping with a cosine masking schedule.

- the masked tokens (conditioned on the prompt embeddings computed using a CLIP-L/14 text encoder are passed to a U-ViT model that predicts the masked patches

During inference:

- input prompt is embedded using the CLIP-L/14 text encoder.

- iterate till

Nsteps are reached:- start with randomly masked tokens and pass them to the U-ViT model together with the prompt embeddings

- predict the masked tokens and only keep a certain percentage of probably the most confident predictions based on the

Nand mask schedule. Mask the remaining ones and pass them off to the U-ViT model

- pass the ultimate output to the VQGAN decoder to acquire the ultimate image

As mentioned at first, aMUSEd borrows a number of similarities from MUSE. Nevertheless, there are some notable differences:

- aMUSEd doesn’t follow a two-stage approach for predicting the ultimate masked patches.

- As an alternative of using T5 for text conditioning, CLIP L/14 is used for computing the text embeddings.

- Following Stable Diffusion XL (SDXL), additional conditioning, reminiscent of image size and cropping, is passed to the U-ViT. That is known as “micro-conditioning”.

To learn more about aMUSEd, we recommend reading the technical report here.

Using aMUSEd in 🧨 diffusers

aMUSEd comes fully integrated into 🧨 diffusers. To make use of it, we first need to put in the libraries:

pip install -U diffusers speed up transformers -q

Let’s start with text-to-image generation:

import torch

from diffusers import AmusedPipeline

pipe = AmusedPipeline.from_pretrained(

"amused/amused-512", variant="fp16", torch_dtype=torch.float16

)

pipe = pipe.to("cuda")

prompt = "A mecha robot in a favela in expressionist style"

negative_prompt = "low quality, ugly"

image = pipe(prompt, negative_prompt=negative_prompt, generator=torch.manual_seed(0)).images[0]

image

We will study how num_inference_steps affects the standard of the pictures under a hard and fast seed:

from diffusers.utils import make_image_grid

images = []

for step in [5, 10, 15]:

image = pipe(prompt, negative_prompt=negative_prompt, num_inference_steps=step, generator=torch.manual_seed(0)).images[0]

images.append(image)

grid = make_image_grid(images, rows=1, cols=3)

grid

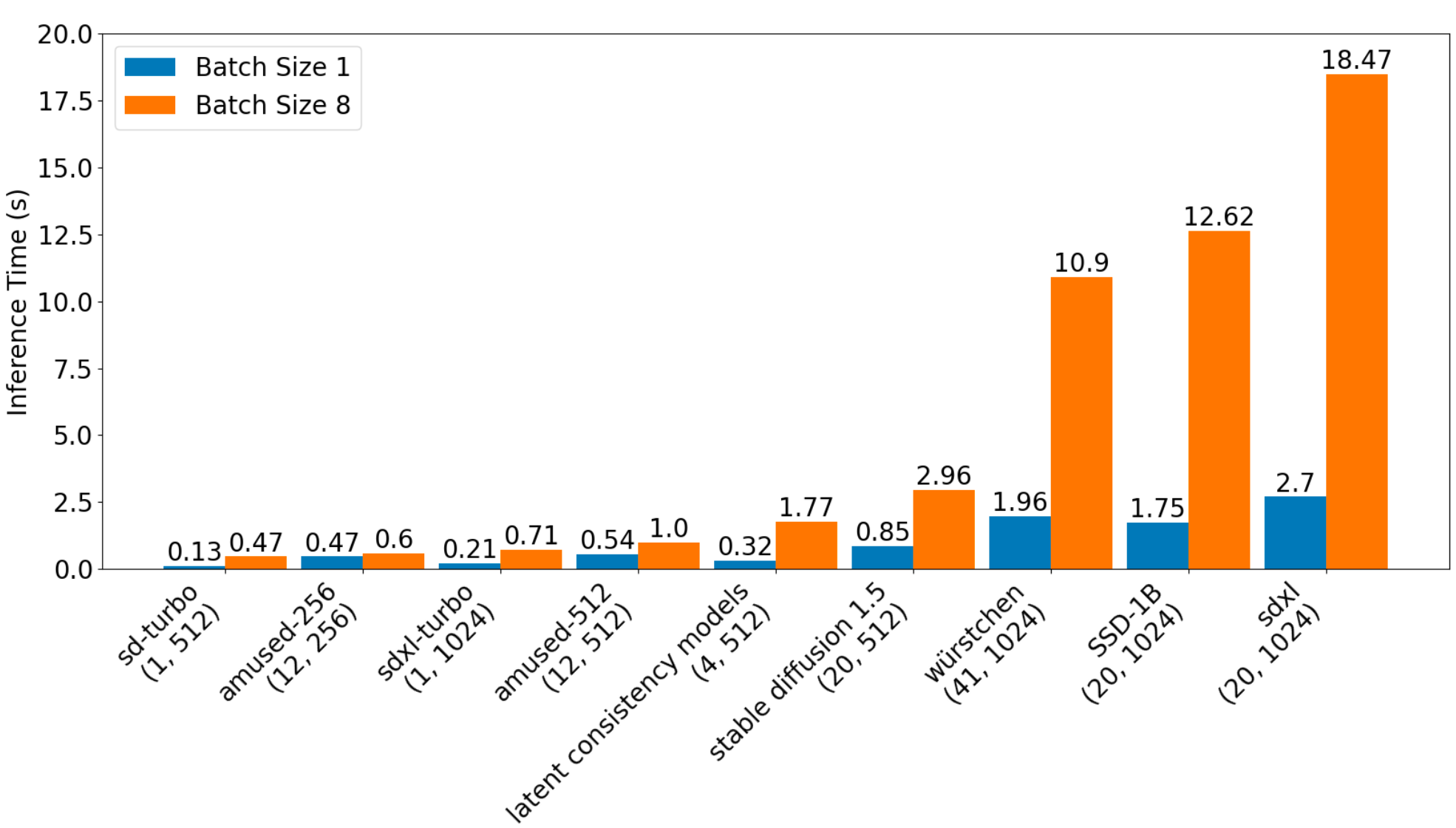

Crucially, due to its small size (only ~800M parameters, including the text encoder and VQ-GAN), aMUSEd may be very fast. The figure below provides a comparative study of the inference latencies of various models, including aMUSEd:

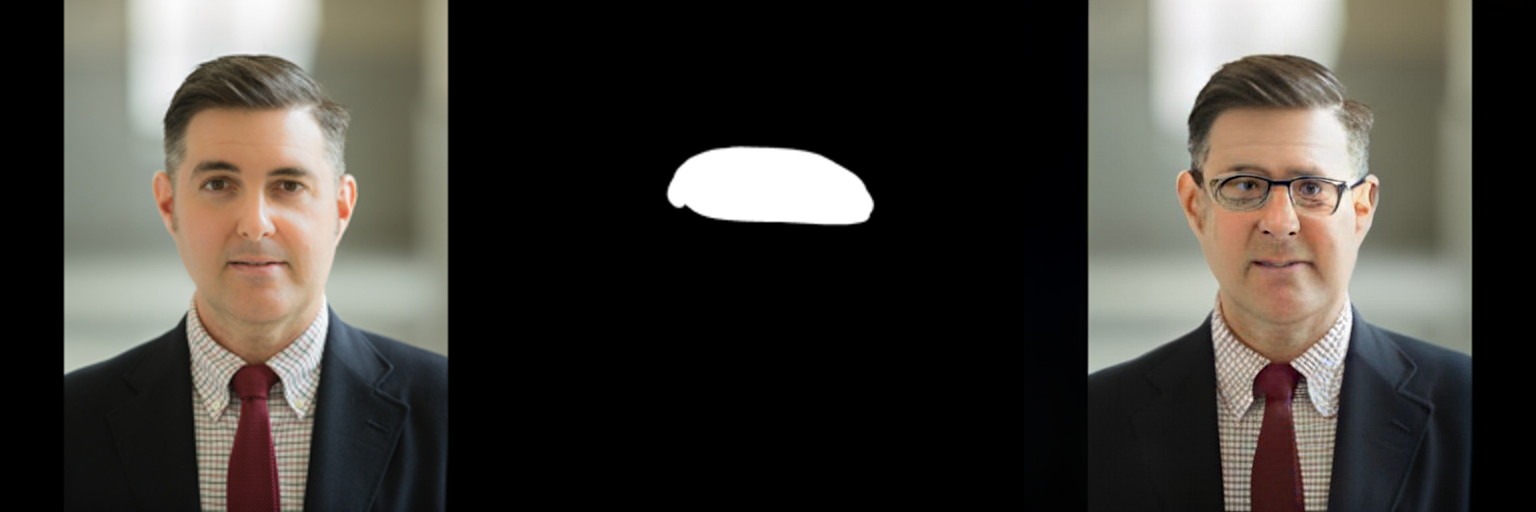

As a direct byproduct of its pre-training objective, aMUSEd can do image inpainting zero-shot, unlike other models reminiscent of SDXL.

import torch

from diffusers import AmusedInpaintPipeline

from diffusers.utils import load_image

from PIL import Image

pipe = AmusedInpaintPipeline.from_pretrained(

"amused/amused-512", variant="fp16", torch_dtype=torch.float16

)

pipe = pipe.to("cuda")

prompt = "a person with glasses"

input_image = (

load_image(

"https://huggingface.co/amused/amused-512/resolve/fundamental/assets/inpainting_256_orig.png"

)

.resize((512, 512))

.convert("RGB")

)

mask = (

load_image(

"https://huggingface.co/amused/amused-512/resolve/fundamental/assets/inpainting_256_mask.png"

)

.resize((512, 512))

.convert("L")

)

image = pipe(prompt, input_image, mask, generator=torch.manual_seed(3)).images[0]

aMUSEd is the primary non-diffusion system inside diffusers. Its iterative scheduling approach for predicting the masked patches made it a very good candidate for diffusers. We’re excited to see how the community leverages it.

We encourage you to examine out the technical report back to study all of the tasks we explored with aMUSEd.

High quality-tuning aMUSEd

We offer an easy training script for fine-tuning aMUSEd on custom datasets. With the 8-bit Adam optimizer and float16 precision, it’s possible to fine-tune aMUSEd with just below 11GBs of GPU VRAM. With LoRA, the memory requirements get further reduced to simply 7GBs.

aMUSEd comes with an OpenRAIL license, and hence, it’s commercially friendly to adapt. Check with this directory for more details on fine-tuning.

Limitations

aMUSEd will not be a state-of-the-art image generation regarding image quality. We released aMUSEd to encourage the community to explore non-diffusion frameworks reminiscent of MIM for image generation. We imagine MIM’s potential is underexplored, given its advantages:

- Inference efficiency

- Smaller size, enabling on-device applications

- Task transfer without requiring expensive fine-tuning

- Benefits of well-established components from the language modeling world

(Note that the unique work on MUSE is close-sourced)

For an in depth description of the quantitative evaluation of aMUSEd, check with the technical report.

We hope that the community will find the resources useful and feel motivated to enhance the state of MIM for image generation.

Resources

Papers:

Code + misc:

Acknowledgements

Suraj led training. William led data and supported training. Patrick von Platen supported each training and data and provided general guidance. Robin Rombach did the VQGAN training and provided general guidance. Isamu Isozaki helped with insightful discussions and made code contributions.

Because of Patrick von Platen and Pedro Cuenca for his or her reviews on the blog post draft.