An update to the Gemma models was released two months after this post; see the latest versions in this collection.

Gemma, a new family of state-of-the-art open LLMs, was released today by Google! It’s great to see Google reinforcing its commitment to open-source AI, and we’re excited to fully support the launch with comprehensive integration in Hugging Face.

Gemma comes in two sizes: 7B parameters, for efficient deployment and development on consumer-size GPUs and TPUs, and 2B parameters, for CPU and on-device applications. Both are available in base and instruction-tuned variants.

We’ve collaborated with Google to ensure the best integration into the Hugging Face ecosystem. You can find the 4 open-access models (2 base models & 2 fine-tuned ones) on the Hub. Among the features and integrations being released, we have:


What is Gemma?

Gemma is a family of 4 new LLMs by Google based on Gemini. It comes in two sizes: 2B and 7B parameters, each with base (pretrained) and instruction-tuned versions. All the variants can be run on various types of consumer hardware, even without quantization, and have a context length of 8K tokens:

A month after the original release, Google released a new version of the instruct models. This version has better coding capabilities, factuality, instruction following, and multi-turn quality. The model is also less prone to begin its responses with “Sure,”.

So, how good are the Gemma models? Here’s an overview of the base models and their performance compared to other open models on the LLM Leaderboard (higher scores are better):

Gemma 7B is a really strong model, with performance comparable to the best models in the 7B weight class, including Mistral 7B. Gemma 2B is an interesting model for its size, but it doesn’t score as high on the leaderboard as the most capable models of a similar size, such as Phi 2. We’re looking forward to receiving feedback from the community about real-world usage!

Recall that the LLM Leaderboard is especially useful for measuring the quality of pretrained models and not so much of the chat ones. We encourage running other benchmarks such as MT Bench, EQ Bench, and the lmsys Arena for the chat ones!

Prompt format

The base models have no prompt format. Like other base models, they can be used to continue an input sequence with a plausible continuation or for zero-shot/few-shot inference. They are also a great foundation for fine-tuning on your own use cases. The Instruct versions have a very simple conversation structure:

<start_of_turn>user
knock knock<end_of_turn>
<start_of_turn>model
who's there<end_of_turn>
<start_of_turn>user
LaMDA<end_of_turn>
<start_of_turn>model
LaMDA who?<end_of_turn>

This format has to be exactly reproduced for effective use. We’ll later show how easy it is to reproduce the instruct prompt with the chat template available in transformers.
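To make the turn structure concrete, here is a minimal hand-rolled sketch that assembles the same string from a list of messages. The helper name `build_gemma_prompt` and the `ROLE_NAMES` mapping are hypothetical and only for illustration; the sketch follows the format shown above and does not add any beginning-of-sequence token, which the tokenizer may handle separately. In practice, the tokenizer’s own chat template should remain the source of truth.

```python
from typing import Dict, List

# Assumption: an "assistant" message corresponds to Gemma's "model" turn.
ROLE_NAMES = {"user": "user", "assistant": "model", "model": "model"}


def build_gemma_prompt(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Format a conversation with <start_of_turn>/<end_of_turn> markers."""
    parts = []
    for message in messages:
        role = ROLE_NAMES[message["role"]]
        # One turn: opening marker, role name, newline, content, closing marker.
        parts.append(f"<start_of_turn>{role}\n{message['content']}<end_of_turn>\n")
    if add_generation_prompt:
        # Leave an open "model" turn so the model writes the next reply.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


conversation = [
    {"role": "user", "content": "knock knock"},
    {"role": "assistant", "content": "who's there"},
    {"role": "user", "content": "LaMDA"},
]
prompt = build_gemma_prompt(conversation)
print(prompt)
```

Ending the string with an open `model` turn is what prompts the instruct model to produce the next reply rather than continue the user’s text.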

Exploring the Unknowns

The Technical report includes information about the training and evaluation processes of the base models, but there are no extensive details on the dataset’s composition and preprocessing. We know they were trained with data from various sources, mostly web documents, code, and mathematical texts. The data was filtered to remove CSAM content and PII, and it underwent licensing checks.

Similarly, for the Gemma instruct models, no details have been shared concerning the fine-tuning datasets or the hyperparameters related to SFT and RLHF.

Demo

You can chat with the Gemma Instruct model on Hugging Chat! Check out the link here: https://huggingface.co/chat/models/google/gemma-1.1-7b-it

Using 🤗 Transformers

With Transformers release 4.38, you can use Gemma and leverage all the tools within the Hugging Face ecosystem, such as:

- training and inference scripts and examples

- safe file format (safetensors)

- integrations with tools such as bitsandbytes (4-bit quantization), PEFT (parameter efficient fine-tuning), and Flash Attention 2

- utilities and helpers to run generation with the model

- mechanisms to export the models to deploy

In addition, Gemma models are compatible with torch.compile() with CUDA graphs, giving them a ~4x speedup at inference time!

To use Gemma models with transformers, make sure to install a recent version of transformers:

pip install --upgrade transformers

The following snippet shows how to use gemma-7b-it with transformers. It requires about 18 GB of RAM, which fits on consumer GPUs such as the 3090 or 4090.

from transformers import pipeline
import torch

pipe = pipeline(
    "text-generation",
    model="google/gemma-7b-it",
    model_kwargs={"torch_dtype": torch.bfloat16},
    device="cuda",
)

messages = [
    {"role": "user", "content": "Who are you? Please, answer in pirate-speak."},
]
outputs = pipe(
    messages,
    max_new_tokens=256,
    do_sample=True,
    temperature=0.7,
    top_k=50,
    top_p=0.95
)
assistant_response = outputs[0]["generated_text"][-1]["content"]
print(assistant_response)

Avast me, me hearty. I'm a pirate of the high seas, able to pillage and plunder. Prepare for a tale of adventure and booty!

We used bfloat16 because that’s the reference precision and how all evaluations were run. Running in float16 may be faster on your hardware.

You can also automatically quantize the model, loading it in 8-bit or even 4-bit mode. 4-bit loading takes about 9 GB of memory to run, making it compatible with a lot of consumer cards and all the GPUs in Google Colab. This is how you’d load the generation pipeline in 4-bit:

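# Assumes `pipeline` and `torch` are imported as in the previous snippet.
# The result is bound to `pipe` so the `pipeline` function is not shadowed.
pipe = pipeline(
    "text-generation",
    model="google/gemma-7b-it",
    model_kwargs={
        "torch_dtype": torch.float16,
        "quantization_config": {"load_in_4bit": True}
    },
)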

For more details on using the models with transformers, please check the model cards.
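The memory figures quoted in this section can be sanity-checked with back-of-the-envelope arithmetic: weight memory is roughly the parameter count times the bytes per parameter. The sketch below is only an estimate under stated assumptions: the helper `weight_memory_gb` is hypothetical, and the parameter count of about 8.5 billion for the "7B" checkpoint (embeddings included) is an assumption not stated in this post.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory needed for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: roughly 8.5 billion parameters for the "7B" checkpoint.
GEMMA_7B_PARAMS = 8.5e9

for label, bits in [("bfloat16", 16), ("8-bit", 8), ("4-bit", 4)]:
    estimate = weight_memory_gb(GEMMA_7B_PARAMS, bits)
    print(f"{label:>8}: about {estimate:.1f} GB for the weights alone")
```

Under that assumption, the bfloat16 estimate lands close to the 18 GB quoted earlier. Real usage is higher than a weights-only estimate because activations, the KV cache, and any layers kept in higher precision also take memory, which is presumably why the 4-bit figure quoted above is larger than a quarter of the bfloat16 one.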

JAX Weights

All the Gemma model variants are available for use with PyTorch, as explained above, or JAX / Flax. To load Flax weights, you need to use the flax revision from the repo, as shown below:

import jax.numpy as jnp
from transformers import AutoTokenizer, FlaxGemmaForCausalLM

model_id = "google/gemma-2b"

tokenizer = AutoTokenizer.from_pretrained(model_id)
tokenizer.padding_side = "left"

model, params = FlaxGemmaForCausalLM.from_pretrained(
    model_id,
    dtype=jnp.bfloat16,
    revision="flax",
    _do_init=False,
)

inputs = tokenizer("Valencia and Málaga are", return_tensors="np", padding=True)
output = model.generate(**inputs, params=params, max_new_tokens=20, do_sample=False)
output_text = tokenizer.batch_decode(output.sequences, skip_special_tokens=True)

['Valencia and Málaga are two of the most popular tourist destinations in Spain. Both cities boast a rich history, vibrant culture,']

Please check out this notebook for a comprehensive hands-on walkthrough on how to parallelize JAX inference on Colab TPUs!

Integration with Google Cloud

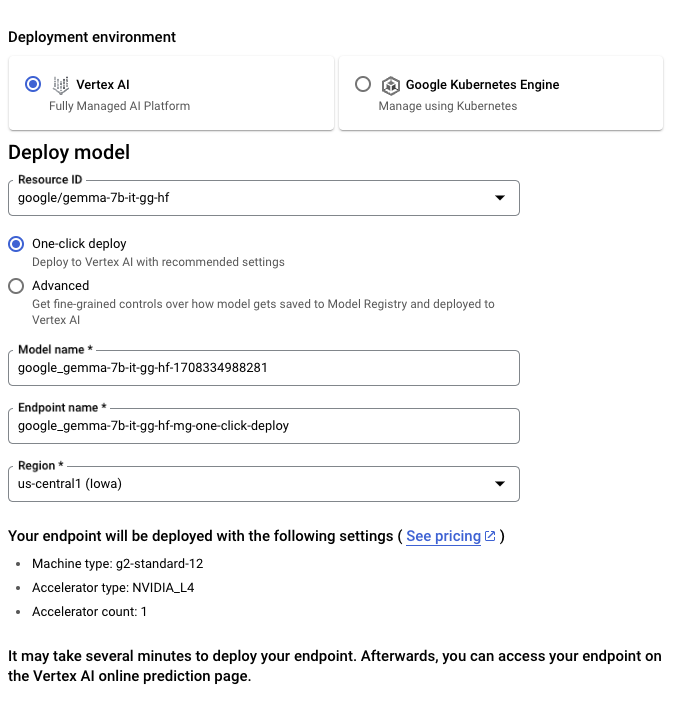

You can deploy and train Gemma on Google Cloud through Vertex AI or Google Kubernetes Engine (GKE), using Text Generation Inference and Transformers.

To deploy the Gemma model from Hugging Face, go to the model page and click on Deploy -> Google Cloud. This will bring you to the Google Cloud Console, where you can 1-click deploy Gemma on Vertex AI or GKE. Text Generation Inference powers Gemma on Google Cloud and is the first integration as part of our partnership with Google Cloud.

You can also access Gemma directly through the Vertex AI Model Garden.

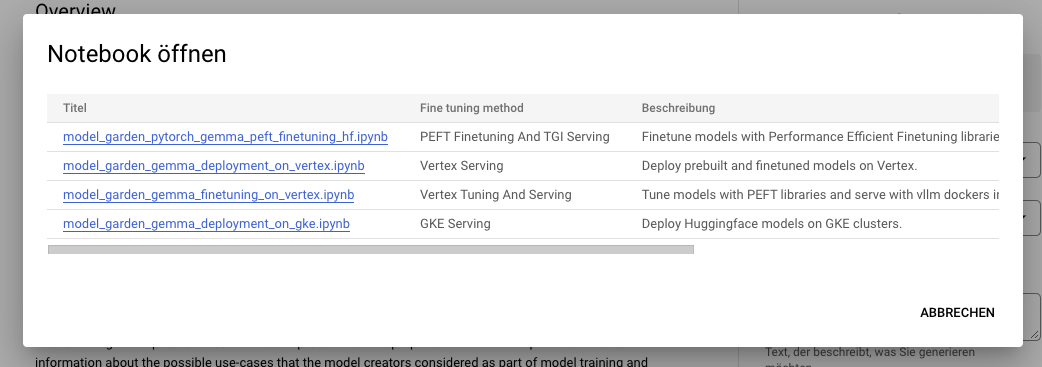

To tune the Gemma model from Hugging Face, go to the model page and click on Train -> Google Cloud. This will bring you to the Google Cloud Console, where you can access notebooks to tune Gemma on Vertex AI or GKE.

These integrations mark the first offerings we are launching together as a result of our collaborative partnership with Google. Stay tuned for more!

Integration with Inference Endpoints

You can deploy Gemma on Hugging Face’s Inference Endpoints, which uses Text Generation Inference as the backend. Text Generation Inference is a production-ready inference container developed by Hugging Face to enable easy deployment of large language models. It has features such as continuous batching, token streaming, tensor parallelism for fast inference on multiple GPUs, and production-ready logging and tracing.

To deploy a Gemma model, go to the model page and click on the Deploy -> Inference Endpoints widget. You can learn more about Deploying LLMs with Hugging Face Inference Endpoints in a previous blog post. Inference Endpoints supports the Messages API through Text Generation Inference, which allows you to switch from a closed model to an open one by simply changing the URL.

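from openai import OpenAI

# Initialize the client, pointing it to your Inference Endpoint.
client = OpenAI(
    base_url="<ENDPOINT_URL>" + "/v1/",  # placeholder: replace with your endpoint URL
    api_key="<HF_API_TOKEN>",  # placeholder: replace with your Hugging Face token
)
chat_completion = client.chat.completions.create(
    model="tgi",
    messages=[
        {"role": "user", "content": "Why is open-source software important?"},
    ],
    stream=True,
    max_tokens=500
)

# Iterate over the streamed chunks and print each piece of content.
for message in chat_completion:
    print(message.choices[0].delta.content, end="")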
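To illustrate why switching providers comes down to changing the URL, here is a rough sketch of the kind of HTTP request an OpenAI-compatible client sends, built with the standard library only. The helper `build_chat_request`, the example host, and the token value are hypothetical; the request is constructed but not sent, and streaming is left off for simplicity.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(endpoint_url: str, token: str, messages: List[Dict[str, str]],
                       max_tokens: int = 500) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for an endpoint."""
    payload = {
        "model": "tgi",
        "messages": messages,
        "max_tokens": max_tokens,
        "stream": False,
    }
    return urllib.request.Request(
        url=endpoint_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical endpoint URL and token, for illustration only.
request = build_chat_request(
    "https://example.invalid",
    "hf_xxx",
    [{"role": "user", "content": "Why is open-source software important?"}],
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

Only the base URL and the token identify the provider; the message payload keeps the same shape, which is what makes the swap straightforward.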

Fine-tuning with 🤗 TRL

Training LLMs can be technically and computationally challenging. In this section, we’ll look at the tools available in the Hugging Face ecosystem to efficiently train Gemma on consumer-size GPUs.

An example command to fine-tune Gemma on OpenAssistant’s chat dataset can be found below. We use 4-bit quantization and QLoRA to conserve memory, targeting all the attention blocks’ linear layers.

First, install the nightly version of 🤗 TRL and clone the repo to access the training script:

pip install -U transformers trl peft bitsandbytes

git clone https:

cd trl

Then you can run the script:

accelerate launch --config_file examples/accelerate_configs/multi_gpu.yaml --num_processes=1 \
    examples/scripts/sft.py \
    --model_name google/gemma-7b \
    --dataset_name OpenAssistant/oasst_top1_2023-08-25 \
    --per_device_train_batch_size 2 \
    --gradient_accumulation_steps 1 \
    --learning_rate 2e-4 \
    --save_steps 20_000 \
    --use_peft \
    --lora_r 16 --lora_alpha 32 \
    --lora_target_modules q_proj k_proj v_proj o_proj \
    --load_in_4bit \
    --output_dir gemma-finetuned-openassistant

This takes about 9 hours to train on a single A10G, but can be easily parallelized by tweaking --num_processes to the number of GPUs you have available.
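To get a feel for how small the trained adapter is, the sketch below counts the LoRA parameters implied by rank 16 on the four attention projections. Each adapted linear layer of shape (d_in, d_out) adds two low-rank matrices with rank × (d_in + d_out) parameters in total. The layer shapes used here (hidden size 3072, 16 heads of dimension 256, 28 layers) are assumptions about Gemma 7B that do not come from this post, and the helper `lora_params` is hypothetical.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters added by one LoRA adapter: A is (d_in x rank), B is (rank x d_out)."""
    return rank * (d_in + d_out)


# Assumed Gemma 7B shapes (not stated in this post).
HIDDEN_SIZE = 3072
ATTENTION_DIM = 16 * 256  # number of heads x head dimension
NUM_LAYERS = 28
RANK = 16  # matches --lora_r 16

# Input and output sizes of the targeted projections.
target_shapes = {
    "q_proj": (HIDDEN_SIZE, ATTENTION_DIM),
    "k_proj": (HIDDEN_SIZE, ATTENTION_DIM),
    "v_proj": (HIDDEN_SIZE, ATTENTION_DIM),
    "o_proj": (ATTENTION_DIM, HIDDEN_SIZE),
}

per_layer = sum(lora_params(d_in, d_out, RANK) for d_in, d_out in target_shapes.values())
total = per_layer * NUM_LAYERS
print(f"Trainable LoRA parameters: {total:,}")
```

Under these assumed shapes, the adapter is a tiny fraction of the full model, which is why the 4-bit base weights dominate memory during QLoRA training.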

Additional Resources

Acknowledgments

Releasing such models with support and evaluations in the ecosystem would not be possible without the contributions of many community members, including Clémentine and Eleuther Evaluation Harness for LLM evaluations; Olivier and David for Text Generation Inference Support; Simon for developing the new access control features on Hugging Face; Arthur, Younes, and Sanchit for integrating Gemma into transformers; Morgan for integrating Gemma into optimum-nvidia (coming); Nathan, Victor, and Mishig for making Gemma available in Hugging Chat.

And thank you to the Google Team for releasing Gemma and making it available to the open-source AI community!