In recent months, we have seen multiple news stories involving ‘deepfakes’, or AI-generated content: from images of Taylor Swift to videos of Tom Hanks and recordings of US President Joe Biden. Whether or not they are selling products, manipulating images of individuals without their consent, supporting phishing for personal information, or creating misinformation materials intended to mislead voters, deepfakes are increasingly being shared on social media platforms. This allows them to be quickly propagated and have a wider reach and subsequently, the potential to cause long-lasting damage.

On this blog post, we are going to describe approaches to perform watermarking of AI-generated content, discuss their pros and cons, and present a number of the tools available on the Hugging Face Hub for adding/detecting watermarks.

What’s watermarking and the way does it work?

Watermarking is a technique designed to mark content so as to convey additional information, similar to authenticity. Watermarks in AI-generated content can range from fully visible (Figure 1) to invisible (Figure 2). In AI specifically, watermarking involves adding patterns to digital content (similar to images), and conveying information regarding the provenance of the content; these patterns can then be recognized either by humans or algorithmically.

There are two primary methods for watermarking AI-generated content: the primary occurs during content creation, which requires access to the model itself but may also be more robust provided that it’s mechanically embedded as a part of the generation process. The second method, which is implemented after the content is produced, may also be applied even to content from closed-source and proprietary models, with the caveat that it might not be applicable to every type of content (e.g., text).

Data Poisoning and Signing Techniques

Along with watermarking, several related techniques have a task to play in limiting non-consensual image manipulation. Some imperceptibly alter images you share online in order that AI algorithms don’t process them well. Although people can see the photographs normally, AI algorithms can’t access comparable content, and because of this, cannot create latest images. Some tools that imperceptibly alter images include Glaze and Photoguard. Other tools work to “poison” images in order that they break the assumptions inherent in AI algorithm training, making it inconceivable for AI systems to learn what people appear to be based on the photographs shared online – this makes it harder for these systems to generate fake images of individuals. These tools include Nightshade and Fawkes.

Maintaining content authenticity and reliability can be possible by utilizing “signing” techniques that link content to metadata about their provenance, similar to the work of Truepic, which embeds metadata following the C2PA standard. Image signing will help understand where images come from. While metadata might be edited, systems similar to Truepic help get around this limitation by 1) Providing certification to make sure that the validity of the metadata might be verified and a couple of) Integrating with watermarking techniques to make it harder to remove the knowledge.

Open vs Closed Watermarks

There are pros and cons of providing different levels of access to each watermarkers and detectors for most people. Openness helps stimulate innovation, as developers can iterate on key ideas and create higher and higher systems. Nonetheless, this should be balanced against malicious use. With open code in an AI pipeline calling a watermarker, it’s trivial to remove the watermarking step. Even when that aspect of the pipeline is closed, then if the watermark is thought and the watermarking code is open, malicious actors may read the code to determine how one can edit generated content in a way where the watermarking doesn’t work. If access to a detector can be available, it’s possible to proceed editing something synthetic until the detector returns low-confidence, undoing what the watermark provides. There are hybrid open-closed approaches that directly address these issues. For example, the Truepic watermarking code is closed, but they supply a public JavaScript library that may confirm Content Credentials. The IMATAG code to call a watermarker during generation is open, but the actual watermarker and the detector are private.

Watermarking Different Sorts of Data

While watermarking is a very important tool across modalities (audio, images, text, etc.), each modality brings with it unique challenges and considerations. So, too, does the intent of the watermark: whether to forestall the usage of training data for training models, to guard content from being manipulated, to mark the output of models, or to detect AI-generated data. In the present section, we explore different modalities of knowledge, the challenges they present for watermarking, and the open-source tools that exist on the Hugging Face Hub to perform several types of watermarking.

Watermarking Images

Probably the perfect known variety of watermarking (each for content created by humans or produced by AI) is carried out on images. There have been different approaches proposed to tag training data to affect the outputs of models trained on it: the best-known method for this sort of ‘image cloaking’ approach is “Nightshade”, which carries out tiny changes to photographs which might be imperceptible to the human eye but that impact the standard of models trained on poisoned data. There are similar image cloaking tools available on the Hub – as an illustration, Fawkes, developed by the identical lab that developed Nightshade, specifically targets images of individuals with the goal of thwarting facial recognition systems. Similarly, there’s also Photoguard, which goals to protect images against manipulation using generative AI tools, e.g., for the creation of deepfakes based on them.

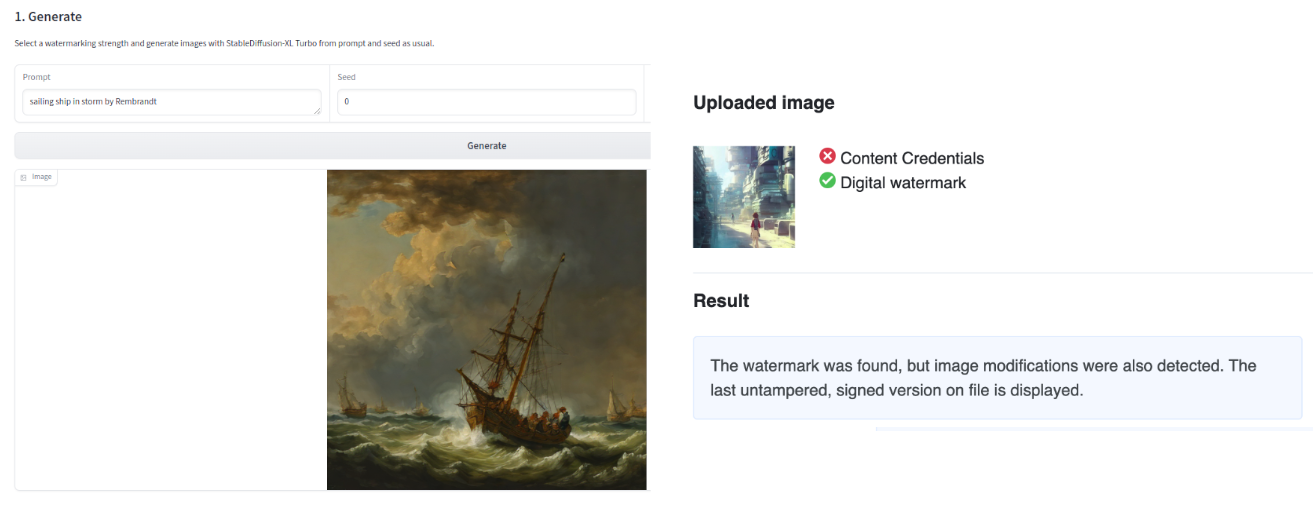

By way of watermarking output images, there are two complementary approaches available on the Hub: IMATAG (see Fig 2), which carries out watermarking in the course of the generation of content by leveraging modified versions of popular models similar to Stable Diffusion XL Turbo, and Truepic, which adds invisible content credentials after a picture has been generated.

TruePic also embeds C2PA content credentials into the photographs, which enables the storage of metadata regarding image provenance and generation within the image itself. Each the IMATAG and TruePic Spaces also allow for the detection of images watermarked by their systems. Each of those detection tools work with their respective approaches (i.e., they’re approach-specific). There’s an existing general deepfake detection Space on the Hub, but in our experience, we found that these solutions have variable performance depending on the standard of the image and the model used.

Watermarking Text

While watermarking AI-generated images can seem more intuitive – given the strongly visual nature of this content – text is an entire different story… How do you add watermarks to written words and numbers (tokens)? Well, the present approaches for watermarking depend on promoting sub-vocabularies based on the previous text. Let’s dive into what this may appear to be for LLM-generated text.

In the course of the generation process, an LLM outputs an inventory of logits for the following token before it carries out sampling or greedy decoding. Based on the previous generated text, most approaches split all candidate tokens into 2 groups – call them “red” and “green”. The “red” tokens can be restricted, and the “green” group can be promoted. This could occur by disallowing the red group tokens altogether (Hard Watermark), or by increasing the probability of the green group (Soft Watermark). The more we modify the unique probabilities, the upper our watermarking strength. WaterBench has created a benchmark dataset to facilitate comparison of performance across watermarking algorithms while controlling the watermarking strength for apples-to-apples comparisons.

Detection works by determining what “color” each token is, after which calculating the probability that the input text comes from the model in query. It’s value noting that shorter texts have a much lower confidence, since there are less tokens to look at.

There are a pair of the way you’ll be able to easily implement watermarking for LLMs on the Hugging Face Hub. The Watermark for LLMs Space (see Fig. 3) demonstrates this, using an LLM watermarking approach on models similar to OPT and Flan-T5. For production level workloads, you should utilize our Text Generation Inference toolkit, which implements the identical watermarking algorithm and sets the corresponding parameters and might be used with any of the newest models!

Just like universal watermarking of AI-generated images, it’s yet to be proven whether universally watermarking text is feasible. Approaches similar to GLTR are supposed to be robust for any accessible language model (provided that they rely on comparing the logits of generated text to those of various models). Detecting whether a given text was generated using a language model without accessing that model (either since it’s closed-source or since you don’t know which model was used to generate the text) is currently inconceivable.

As we discussed above, detection methods for generated text require a considerable amount of text to be reliable. Even then, detectors can have high false positive rates, incorrectly labeling text written by people as synthetic. Indeed, OpenAI removed their in-house detection tool in 2023 given low accuracy rate, which got here with unintended consequences when it was utilized by teachers to gauge whether the assignments submitted by their students were generated using ChatGPT or not.

Watermarking Audio

The info extracted from an individual’s voice (voiceprint), is commonly used as a biometric security authentication mechanism to discover a person. While generally paired with other security aspects similar to PIN or password, a breach of this biometric data still presents a risk and might be used to realize access to, e.g., bank accounts, provided that many banks use voice recognition technologies to confirm clients over the phone. As voice becomes easier to duplicate with AI, we must also improve the techniques to validate the authenticity of voice audio. Watermarking audio content is analogous to watermarking images within the sense that there’s a multidimensional output space that might be used to inject metadata regarding provenance. Within the case of audio, the watermarking is frequently carried out on frequencies which might be imperceptible to human ears (below ~20 or above ~20,000 Hz), which may then be detected using AI-driven approaches.

Given the high-stakes nature of audio output, watermarking audio content is an energetic area of research, and multiple approaches (e.g., WaveFuzz, Venomave) have been proposed over the previous few years.

AudioSeal is a technique for speech localized watermarking, with state-of-the-art detector speed without compromising the watermarking robustness. It jointly trains a generator that embeds a watermark within the audio, and a detector that detects the watermarked fragments in longer audios, even within the presence of editing. Audioseal achieves state-of-the-art detection performance of each natural and artificial speech on the sample level (1/16k second resolution), it generates limited alteration of signal quality and is powerful to many sorts of audio editing.

AudioSeal was also used to release SeamlessExpressive and SeamlessStreaming demos with mechanisms for safety.

Conclusion

Disinformation, being accused of manufacturing synthetic content when it’s real, and instances of inappropriate representations of individuals without their consent might be difficult and time-consuming; much of the damage is completed before corrections and clarifications might be made. As such, as a part of our mission to democratize good machine learning, we at Hugging Face imagine that having mechanisms to discover AI-Generated content quickly and systematically are vital. AI watermarking is just not foolproof, but could be a powerful tool within the fight against malicious and misleading uses of AI.