We introduce the concept of embedding quantization and showcase its impact on retrieval speed, memory usage, disk space, and price. We'll discuss how embeddings can be quantized in theory and in practice, after which we introduce a demo showing a real-life retrieval scenario over 41 million Wikipedia texts.

Why Embeddings?

Embeddings are one of the most versatile tools in natural language processing, supporting a wide variety of settings and use cases. In essence, embeddings are numerical representations of more complex objects, like text, images, audio, etc. Specifically, the objects are represented as n-dimensional vectors.

After transforming the complex objects, you can determine their similarity by calculating the similarity of the respective embeddings! This is crucial for many use cases: it serves as the backbone for recommendation systems, retrieval, one-shot or few-shot learning, outlier detection, similarity search, paraphrase detection, clustering, classification, and much more.
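As a minimal illustration of comparing embeddings, the sketch below computes cosine similarity with NumPy. The three 4-dimensional vectors are made-up toy values standing in for real model outputs, which have hundreds or thousands of dimensions.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D embedding vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Toy "embeddings" (hypothetical values, not produced by any model)
lake = np.array([0.9, 0.1, 0.3, 0.0])
pond = np.array([0.8, 0.2, 0.4, 0.1])
finance = np.array([-0.1, 0.9, -0.5, 0.7])

print(cosine_similarity(lake, pond))     # high: the vectors point in a similar direction
print(cosine_similarity(lake, finance))  # low: the vectors point in different directions
```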

Embeddings may struggle to scale

However, embeddings can be challenging to scale for production use cases, which leads to expensive solutions and high latencies. Currently, many state-of-the-art models produce embeddings with 1024 dimensions, each of which is encoded in float32, i.e., they require 4 bytes per dimension. To perform retrieval over 250 million vectors, you would therefore need around 1TB of memory (250M × 1024 × 4 bytes)!

The table below gives an overview of different models, their dimension size, memory requirement, and costs. Costs are computed at an estimated $3.8 per GB/mo with x2gd instances on AWS.

| Embedding Dimension | Example Models | 100M Embeddings | 250M Embeddings | 1B Embeddings |
|---|---|---|---|---|
| 384 | all-MiniLM-L6-v2, bge-small-en-v1.5 | 143.05GB, $543 / mo | 357.62GB, $1,358 / mo | 1430.51GB, $5,435 / mo |
| 768 | all-mpnet-base-v2, bge-base-en-v1.5, jina-embeddings-v2-base-en, nomic-embed-text-v1 | 286.10GB, $1,087 / mo | 715.26GB, $2,717 / mo | 2861.02GB, $10,871 / mo |
| 1024 | bge-large-en-v1.5, mxbai-embed-large-v1, Cohere-embed-english-v3.0 | 381.46GB, $1,449 / mo | 953.67GB, $3,623 / mo | 3814.69GB, $14,495 / mo |
| 1536 | OpenAI text-embedding-3-small | 572.20GB, $2,174 / mo | 1430.51GB, $5,435 / mo | 5722.04GB, $21,743 / mo |
| 3072 | OpenAI text-embedding-3-large | 1144.40GB, $4,348 / mo | 2861.02GB, $10,871 / mo | 11444.09GB, $43,487 / mo |
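The memory and cost columns can be approximately reproduced with a few lines of arithmetic. This is a sketch under stated assumptions: "GB" is taken to mean 2**30 bytes, only raw vector storage is counted (no index overhead), and costs are truncated to whole dollars, which matches the table; the last digit of a few sizes may differ by 0.01GB due to rounding. The hypothetical bytes_per_value parameter also shows how int8 (1 byte) or binary (1/8 byte) would shrink each cell.

```python
GIB = 2**30                # assumption: the table's "GB" means 2**30 bytes
BYTES_PER_FLOAT32 = 4
PRICE_PER_GB_MONTH = 3.8   # estimated $/GB/month for x2gd instances on AWS (from the text)

def index_size_gb(num_embeddings: int, dim: int, bytes_per_value: float = BYTES_PER_FLOAT32) -> float:
    """Raw vector storage in GB, ignoring any index overhead."""
    return num_embeddings * dim * bytes_per_value / GIB

def monthly_cost_usd(size_gb: float) -> int:
    """Monthly cost, truncated to whole dollars."""
    return int(size_gb * PRICE_PER_GB_MONTH)

for dim in (384, 768, 1024, 1536, 3072):
    cells = []
    for n in (100_000_000, 250_000_000, 1_000_000_000):
        size = index_size_gb(n, dim)
        cells.append(f"{size:.2f}GB ${monthly_cost_usd(size):,} / mo")
    print(f"{dim:>5} | " + " | ".join(cells))

# Binary quantization stores 1 bit (1/8 byte) per dimension instead of 4 bytes
print(f"{index_size_gb(250_000_000, 1024, bytes_per_value=1 / 8):.2f}GB binary")
```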

Improving scalability

There are several ways to approach the challenges of scaling embeddings. The most common approach is dimensionality reduction, such as PCA. However, classic dimensionality reduction methods like PCA tend to perform poorly when used with embeddings.

In recent news, Matryoshka Representation Learning (MRL), as used by OpenAI, also allows for cheaper embeddings. With MRL, only the first n embedding dimensions are used. This approach has already been adopted by some open models like nomic-ai/nomic-embed-text-v1.5 and mixedbread-ai/mxbai-embed-2d-large-v1. For OpenAI's text-embedding-3-large, we see a performance retention of 93.1% at 12x compression. For nomic's model, we retain 95.8% of performance at 3x compression and 90% at 6x compression.
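Using only the first n dimensions of an MRL embedding can be sketched as slicing followed by re-normalization. This is a minimal sketch: the random vectors merely stand in for real MRL embeddings, re-normalizing to unit length is an assumption suited to cosine or dot-product search, and the function name truncate_mrl is hypothetical. Truncation only preserves quality for models trained with MRL.

```python
import numpy as np

def truncate_mrl(embeddings: np.ndarray, dim: int) -> np.ndarray:
    """Keep the first `dim` dimensions of each embedding and re-normalize to unit length."""
    truncated = embeddings[:, :dim]
    return truncated / np.linalg.norm(truncated, axis=1, keepdims=True)

rng = np.random.default_rng(0)
full = rng.normal(size=(2, 1024)).astype(np.float32)  # stand-in for real MRL embeddings
small = truncate_mrl(full, 128)                        # 8x fewer dimensions
print(small.shape)
```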

However, there is another new approach to making progress on this challenge; it does not entail dimensionality reduction, but rather a reduction in the size of each of the individual values in the embedding: quantization. Our experiments on quantization will show that we can maintain a large amount of performance while significantly speeding up computation and saving on memory, storage, and costs. Let's dive into it!

Binary Quantization

Unlike quantization in models, where you reduce the precision of weights, quantization for embeddings refers to a post-processing step for the embeddings themselves. In particular, binary quantization refers to the conversion of the float32 values in an embedding to 1-bit values, resulting in a 32x reduction in memory and storage usage.

To quantize float32 embeddings to binary, we simply threshold normalized embeddings at 0:

$$
f(x) = \begin{cases} 0 & \text{if } x \leq 0 \\ 1 & \text{if } x > 0 \end{cases}
$$

We can use the Hamming distance to retrieve these binary embeddings efficiently. This is the number of positions at which the bits of two binary embeddings differ. The lower the Hamming distance, the closer the embeddings, and thus the more relevant the document. A huge advantage of the Hamming distance is that it can be easily computed with 2 CPU cycles (an XOR followed by a population count), allowing for blazingly fast performance.
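The Hamming distance on packed binary embeddings can be sketched in NumPy as an XOR followed by counting the set bits. This is an illustration for clarity, not speed: dedicated libraries use hardware popcount instructions instead of unpacking bits, and the helper names below are hypothetical.

```python
import numpy as np

def hamming_distance(a: np.ndarray, b: np.ndarray) -> int:
    """Number of differing bits between two packed (uint8) binary embeddings."""
    return int(np.unpackbits(np.bitwise_xor(a, b)).sum())

def hamming_search(query: np.ndarray, corpus: np.ndarray, top_k: int) -> np.ndarray:
    """Indices of the top_k corpus rows with the smallest Hamming distance to the query."""
    distances = np.unpackbits(np.bitwise_xor(corpus, query), axis=1).sum(axis=1)
    return np.argsort(distances, kind="stable")[:top_k]

# 8-bit toy example, packed into one byte per embedding
query_bits = np.array([1, 0, 1, 1, 0, 0, 1, 0], dtype=np.uint8)
corpus_bits = np.array([
    [1, 0, 1, 1, 0, 0, 1, 0],  # identical to the query: distance 0
    [0, 1, 0, 0, 1, 1, 0, 1],  # every bit flipped: distance 8
    [1, 0, 1, 1, 0, 0, 0, 0],  # one bit differs: distance 1
], dtype=np.uint8)
query = np.packbits(query_bits)             # shape (1,)
corpus = np.packbits(corpus_bits, axis=1)   # shape (3, 1)

print(hamming_search(query, corpus, top_k=2))
```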

Yamada et al. (2021) introduced a rescore step, which they called rerank, to boost the performance. They proposed that the float32 query embedding could be compared with the binary document embeddings using the dot product. In practice, we first retrieve rescore_multiplier * top_k results with the binary query embedding and the binary document embeddings (i.e., the list of the first k results of the double-binary retrieval), and then rescore that list of binary document embeddings with the float32 query embedding.
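The two-stage procedure can be sketched as follows: rank everything by Hamming distance, keep rescore_multiplier * top_k candidates, then score those candidates with the float32 query. This is a minimal sketch, not the Sentence Transformers implementation; the function name is hypothetical, and treating the unpacked bits as 0/1 values in the dot product is an assumption of this sketch. Random vectors stand in for a real corpus.

```python
import numpy as np

def search_binary_with_rescore(query_float, corpus_packed, top_k, rescore_multiplier=4):
    """Binary (Hamming) retrieval of rescore_multiplier * top_k candidates, then float32 rescoring."""
    dim = query_float.shape[0]
    query_packed = np.packbits(query_float > 0)
    # Step 1: rank the whole corpus by Hamming distance to the binary query
    distances = np.unpackbits(np.bitwise_xor(corpus_packed, query_packed), axis=1).sum(axis=1)
    num_candidates = min(top_k * rescore_multiplier, corpus_packed.shape[0])
    candidates = np.argsort(distances, kind="stable")[:num_candidates]
    # Step 2: dot product of the float32 query with the candidates' unpacked 0/1 bits
    candidate_bits = np.unpackbits(corpus_packed[candidates], axis=1)[:, :dim].astype(np.float32)
    scores = candidate_bits @ query_float
    order = np.argsort(-scores, kind="stable")[:top_k]
    return candidates[order], scores[order]

rng = np.random.default_rng(0)
corpus = rng.normal(size=(1000, 64)).astype(np.float32)
corpus_packed = np.packbits(corpus > 0, axis=1)  # (1000, 8) uint8: 64 bits per document
ids, scores = search_binary_with_rescore(corpus[42], corpus_packed, top_k=5)
print(ids, scores)
```

Because the float32 query keeps its magnitudes, the rescoring step can reorder candidates that look equally close under the Hamming distance.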

By applying this novel rescoring step, we are able to preserve up to ~96% of the total retrieval performance, while reducing memory and disk space usage by 32x and improving retrieval speed by up to 32x as well. Without the rescoring, we are able to preserve roughly ~92.5% of the total retrieval performance.

Binary Quantization in Sentence Transformers

Quantizing an embedding with a dimensionality of 1024 to binary would result in 1024 bits. In practice, it is much more common to store bits as bytes instead, so when we quantize to binary embeddings, we pack the bits into bytes using np.packbits.

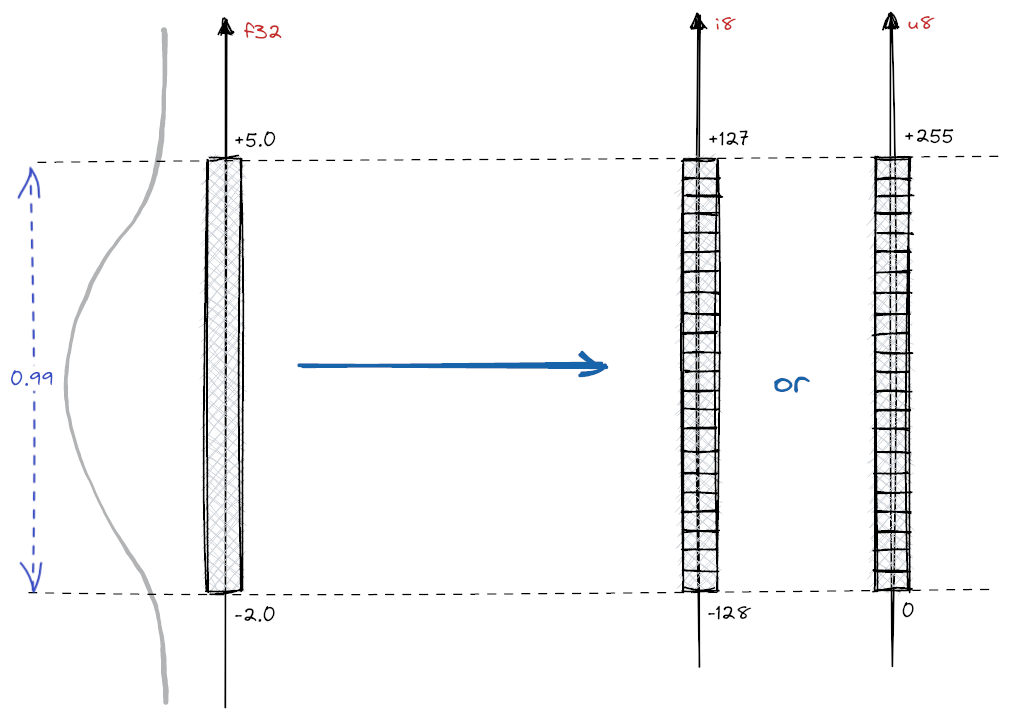
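Thresholding and packing can be sketched directly with NumPy. The random matrix is a stand-in for real model embeddings; this produces the unsigned (uint8) packed layout, and how the signed int8 variant is derived is not shown here.

```python
import numpy as np

rng = np.random.default_rng(0)
embeddings = rng.normal(size=(2, 1024)).astype(np.float32)  # stand-in for real embeddings

bits = embeddings > 0                    # (2, 1024) booleans: threshold at 0
packed = np.packbits(bits, axis=1)       # (2, 128) uint8: 8 dimensions per byte
print(packed.shape, packed.dtype, packed.nbytes)  # (2, 128) uint8 256

# Unpacking recovers the original bits exactly
assert np.array_equal(np.unpackbits(packed, axis=1).astype(bool), bits)
```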

Therefore, quantizing a float32 embedding with a dimensionality of 1024 yields an int8 or uint8 embedding with a dimensionality of 128. See two approaches to producing quantized embeddings using Sentence Transformers below:

```python
from sentence_transformers import SentenceTransformer

# Load an embedding model and quantize directly while encoding
model = SentenceTransformer("mixedbread-ai/mxbai-embed-large-v1")
binary_embeddings = model.encode(
    ["I am driving to the lake.", "It is a beautiful day."],
    precision="binary",
)
```

or

```python
from sentence_transformers import SentenceTransformer
from sentence_transformers.quantization import quantize_embeddings

# Encode to float32 first, then quantize the embeddings afterwards
model = SentenceTransformer("mixedbread-ai/mxbai-embed-large-v1")
embeddings = model.encode(["I am driving to the lake.", "It is a beautiful day."])
binary_embeddings = quantize_embeddings(embeddings, precision="binary")
```

Here, you can see the differences between default float32 embeddings and binary embeddings in terms of shape, size, and numpy dtype:

```python
>>> embeddings.shape
(2, 1024)
>>> embeddings.nbytes
8192
>>> embeddings.dtype
float32
>>> binary_embeddings.shape
(2, 128)
>>> binary_embeddings.nbytes
256
>>> binary_embeddings.dtype
int8
```

Note that you can also choose "ubinary" to quantize to binary using the unsigned uint8 data format. This may be a requirement depending on your vector library/database.

Binary Quantization in Vector Databases

Scalar (int8) Quantization

We use a scalar quantization process to convert the float32 embeddings into int8. This involves mapping the continuous range of float32 values to the discrete set of int8 values, which can represent 256 distinct levels (from -128 to 127), as shown in the image below. This is done using a large calibration dataset of embeddings. We compute the range of these embeddings, i.e., the min and max of each embedding dimension. From there, we calculate the steps (buckets) used to categorize each value.

To further boost retrieval performance, you can optionally apply the same rescoring step as for the binary embeddings. It is important to note that the calibration dataset greatly influences performance because it defines the quantization buckets.

Source: https://qdrant.tech/articles/scalar-quantization/

With scalar quantization to int8, we reduce the original float32 embeddings' precision so that each value is represented with an 8-bit integer (4x smaller). Note that this differs from the binary quantization case, where each value is represented by a single bit (32x smaller).
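The bucketing described above can be sketched in NumPy: compute per-dimension min and max over a calibration set, split each range into 255 equal steps, and shift the resulting 0..255 bucket index into the int8 range. This is a minimal sketch; the function names are hypothetical, and rounding to the nearest bucket and clipping out-of-range values are assumptions that may not match what Sentence Transformers does internally. Random data stands in for real calibration embeddings.

```python
import numpy as np

def compute_ranges(calibration_embeddings: np.ndarray) -> np.ndarray:
    """Per-dimension [min, max] over the calibration set, returned with shape (2, dim)."""
    return np.vstack([calibration_embeddings.min(axis=0), calibration_embeddings.max(axis=0)])

def scalar_quantize_int8(embeddings: np.ndarray, ranges: np.ndarray) -> np.ndarray:
    """Map each float32 value to one of 256 buckets between its dimension's min and max."""
    starts = ranges[0]
    steps = (ranges[1] - ranges[0]) / 255        # 256 levels -> 255 equal-width steps
    steps = np.where(steps == 0, 1.0, steps)     # guard against constant dimensions
    buckets = np.round((embeddings - starts) / steps)
    buckets = np.clip(buckets, 0, 255)           # values outside the calibrated range saturate
    return (buckets - 128).astype(np.int8)       # shift 0..255 into the int8 range -128..127

rng = np.random.default_rng(0)
calibration = rng.normal(size=(1000, 1024)).astype(np.float32)  # stand-in calibration set
ranges = compute_ranges(calibration)
int8_embeddings = scalar_quantize_int8(rng.normal(size=(2, 1024)).astype(np.float32), ranges)
print(int8_embeddings.shape, int8_embeddings.dtype, int8_embeddings.nbytes)  # (2, 1024) int8 2048
```

The clipping step matters because new embeddings (for example, queries) can fall outside the range seen during calibration, which is one reason a large, representative calibration set helps.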

Scalar Quantization in Sentence Transformers

Quantizing an embedding with a dimensionality of 1024 to int8 results in 1024 bytes. In practice, we can choose either uint8 or int8. This choice is usually made depending on what your vector library/database supports.

In practice, it is recommended to provide the scalar quantization with either:

- a large set of embeddings to quantize all at once, or
- min and max ranges for each of the embedding dimensions, or
- a large calibration dataset of embeddings from which the min and max ranges can be computed.

If none of these are the case, you will be given a warning like this:

Computing int8 quantization buckets based on 2 embeddings. int8 quantization is more stable with 'ranges' calculated from more embeddings or a 'calibration_embeddings' that could be used to calculate the buckets.

See how you can produce scalar quantized embeddings using Sentence Transformers below:

```python
from datasets import load_dataset
from sentence_transformers import SentenceTransformer
from sentence_transformers.quantization import quantize_embeddings

# 1. Load an embedding model
model = SentenceTransformer("mixedbread-ai/mxbai-embed-large-v1")

# 2. Prepare an example calibration dataset
corpus = load_dataset("nq_open", split="train[:1000]")["question"]
calibration_embeddings = model.encode(corpus)

# 3. Encode some text without quantization, then apply int8 quantization afterwards
embeddings = model.encode(["I am driving to the lake.", "It is a beautiful day."])
int8_embeddings = quantize_embeddings(
    embeddings,
    precision="int8",
    calibration_embeddings=calibration_embeddings,
)
```

Here you can see the differences between default float32 embeddings and int8 scalar embeddings in terms of shape, size, and numpy dtype:

```python
>>> embeddings.shape
(2, 1024)
>>> embeddings.nbytes
8192
>>> embeddings.dtype
float32
>>> int8_embeddings.shape
(2, 1024)
>>> int8_embeddings.nbytes
2048
>>> int8_embeddings.dtype
int8
```

Scalar Quantization in Vector Databases

Combining Binary and Scalar Quantization

Combining binary and scalar quantization makes it possible to get the best of both worlds: the extreme speed of binary embeddings and the great performance preservation of scalar embeddings with rescoring. See the demo below for a real-life implementation of this approach involving 41 million texts from Wikipedia. The pipeline for that setup is as follows:

- The query is embedded using the mixedbread-ai/mxbai-embed-large-v1 SentenceTransformer model.
- The query is quantized to binary using the quantize_embeddings function from the sentence-transformers library.
- A binary index (41M binary embeddings; 5.2GB of memory/disk space) is searched using the quantized query for the top 40 documents.
- The top 40 documents are loaded on the fly from an int8 index on disk (41M int8 embeddings; 0 bytes of memory, 47.5GB of disk space).
- The top 40 documents are rescored using the float32 query and the int8 embeddings to get the top 10 documents.
- The top 10 documents are sorted by score and displayed.
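The pipeline above can be sketched end to end on toy data with NumPy. This is a sketch under several assumptions: the corpus is 10,000 random 256-dimensional vectors instead of 41M real 1024-dimensional embeddings, the int8 index lives in memory (a real deployment could keep it on disk, e.g. via np.memmap), and the rescoring step dequantizes the int8 values before the dot product, which may differ from how the demo scores them. The function name search is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
num_docs, dim = 10_000, 256  # toy stand-in for the 41M x 1024 Wikipedia index

# Offline indexing: normalized float32 embeddings -> packed binary index + int8 index
docs = rng.normal(size=(num_docs, dim)).astype(np.float32)
docs /= np.linalg.norm(docs, axis=1, keepdims=True)
binary_index = np.packbits(docs > 0, axis=1)  # kept in memory
starts = docs.min(axis=0)
steps = (docs.max(axis=0) - starts) / 255
int8_index = (np.clip(np.round((docs - starts) / steps), 0, 255) - 128).astype(np.int8)

def search(query: np.ndarray, top_k: int = 10, num_candidates: int = 40):
    # 1) Quantize the float32 query to binary and search the binary index (Hamming distance)
    query_bits = np.packbits(query > 0)
    distances = np.unpackbits(np.bitwise_xor(binary_index, query_bits), axis=1).sum(axis=1)
    candidate_ids = np.argsort(distances, kind="stable")[:num_candidates]
    # 2) Load only the candidates' int8 embeddings
    candidates_int8 = int8_index[candidate_ids].astype(np.float32)
    # 3) Rescore with the float32 query against approximately dequantized int8 values
    approx = starts + (candidates_int8 + 128) * steps
    scores = approx @ query
    # 4) Sort by score and keep the top_k
    order = np.argsort(-scores, kind="stable")[:top_k]
    return candidate_ids[order], scores[order]

ids, scores = search(docs[123])
print(ids[:3], scores[:3])
```

Only the binary index is touched for every document; the more precise int8 values are read for just 40 candidates, which is what keeps memory usage low.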

With this approach, we use 5.2GB of memory and 52GB of disk space for the indices. This is considerably less than normal retrieval, which requires 200GB of memory and 200GB of disk space. Especially as you scale up further, this results in notable reductions in latency and costs.

Quantization Experiments

We conducted our experiments on the retrieval subset of MTEB, which contains 15 benchmarks. First, we retrieved the top k (k=100) search results with a rescore_multiplier of 4. Therefore, we retrieved 400 results in total and performed the rescoring on these top 400. For the int8 performance, we directly used the dot product without any rescoring.

Several key trends and benefits can be identified from the results of our quantization experiments. As expected, embedding models with a higher dimension size typically generate higher storage costs per computation but achieve the best performance. Surprisingly, however, quantization to int8 already helps mxbai-embed-large-v1 and Cohere-embed-english-v3.0 achieve higher performance with lower storage usage than the smaller-dimension base models.

The benefits of quantization are, if anything, even more clearly visible when looking at the results obtained with binary models. In that scenario, the 1024-dimension models still outperform a now 10x more storage-intensive base model, and mxbai-embed-large-v1 even manages to retain more than 96% of performance after a 32x reduction in resource requirements. The further quantization from int8 to binary barely results in any additional loss of performance for this model.

Interestingly, we can also see that all-MiniLM-L6-v2 exhibits stronger performance with binary than with int8 quantization. A possible explanation for this could be the selection of calibration data. On e5-base-v2, we observe the effect of dimension collapse, which causes the model to use only a subspace of the latent space; when performing the quantization, the whole space collapses further, leading to high performance losses.

This shows that quantization does not universally work with all embedding models. It remains crucial to consider existing benchmark results and to conduct experiments to determine a given model's compatibility with quantization.

Influence of Rescoring

In this section, we look at the influence of rescoring on retrieval performance. We evaluate the results based on mxbai-embed-large-v1.

Binary Rescoring

With binary embeddings, mxbai-embed-large-v1 retains 92.53% of performance on MTEB Retrieval. Just doing the rescoring without retrieving more samples pushes the performance to 96.45%. We experimented with setting the rescore_multiplier from 1 to 10, but observed no further boost in performance. This indicates that the top_k search already retrieved the top candidates and the rescoring reordered these good candidates appropriately.

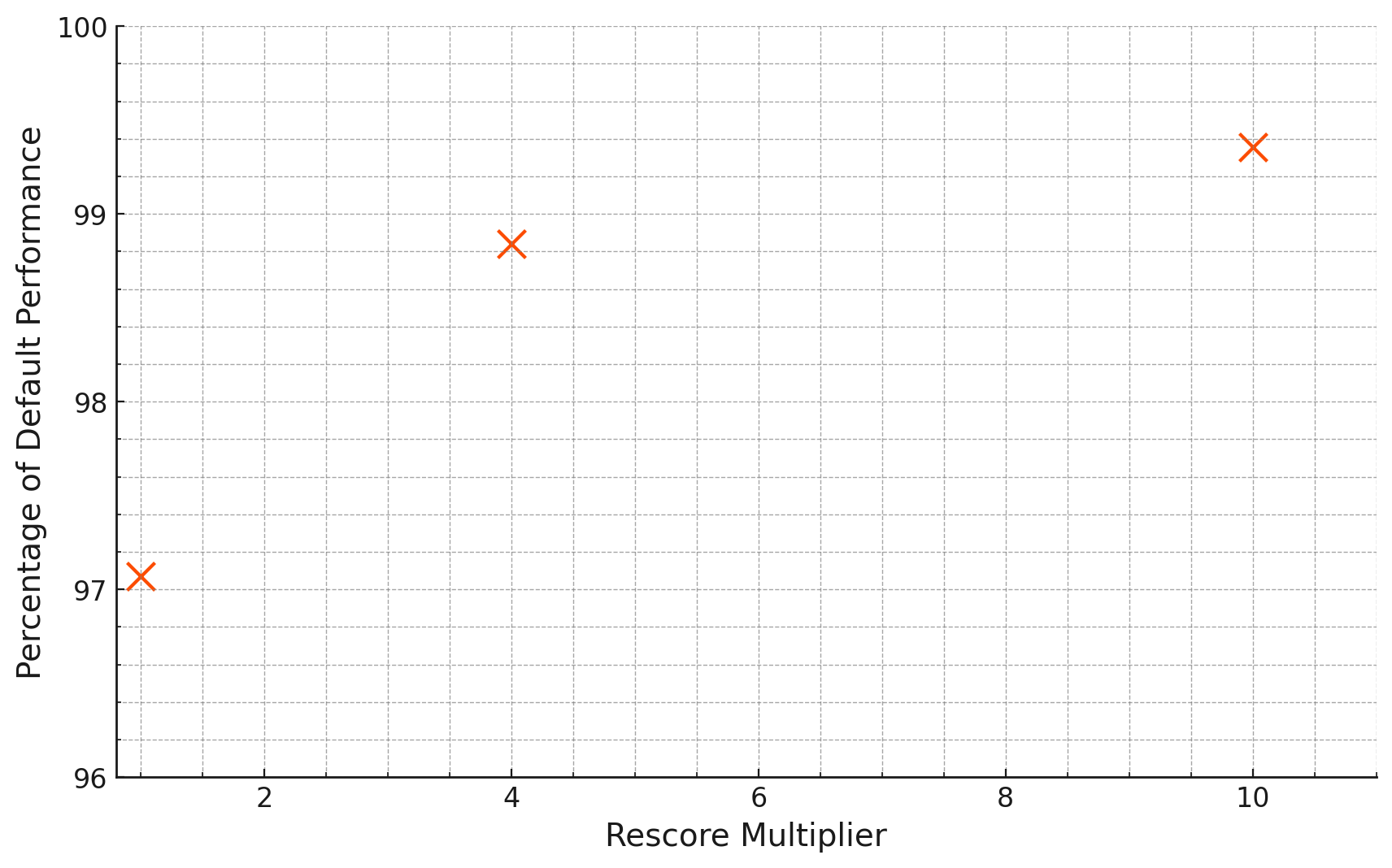

Scalar (Int8) Rescoring

We also evaluated mxbai-embed-large-v1 with int8 rescoring, as Cohere showed that Cohere-embed-english-v3.0 reached up to 100% of the performance of the float32 model with int8 quantization. For this experiment, we set the rescore_multiplier to [1, 4, 10] and obtained the following results:

As we can see from the diagram, a higher rescore multiplier implies better retention of performance after quantization. Extrapolating from our results, we assume the relation is likely hyperbolic, with performance approaching 100% as the rescore multiplier continues to rise. A rescore multiplier of 4-5 already leads to a remarkable performance retention of 99% using int8.

Retrieval Speed

We measured retrieval speed on a Google Cloud Platform a2-highgpu-4g instance using the mxbai-embed-large-v1 embeddings with 1024 dimensions on the whole of MTEB Retrieval. For int8 we used USearch (version 2.9.2), and for binary quantization Faiss (version 1.8.0). Everything was computed on CPU using exact search.

| Quantization | Min | Mean | Max |
|---|---|---|---|
| float32 | 1x (baseline) | 1x (baseline) | 1x (baseline) |
| int8 | 2.99x speedup | 3.66x speedup | 4.8x speedup |
| binary | 15.05x speedup | 24.76x speedup | 45.8x speedup |

As shown in the table, applying int8 scalar quantization results in an average speedup of 3.66x compared to full-size float32 embeddings. Moreover, binary quantization achieves a speedup of 24.76x on average. For both scalar and binary quantization, even the worst-case scenario resulted in very notable speedups.

Performance Summarization

The experimental results, i.e., the effects of quantization on resource use, retrieval speed, and retrieval performance, can be summarized as follows:

| | float32 | int8/uint8 | binary/ubinary |
|---|---|---|---|
| Memory & Index size savings | 1x | exactly 4x | exactly 32x |
| Retrieval Speed | 1x | up to 4x | up to 45x |
| Percentage of default performance | 100% | ~99.3% | ~96% |

Demo

The following demo showcases retrieval efficiency using exact or approximate search by combining binary search with scalar (int8) rescoring. The solution requires 5GB of memory for the binary index and 50GB of disk space for the binary and scalar indices, considerably less than the 200GB of memory and disk space that would be required for regular float32 retrieval. Additionally, retrieval is much faster.

Try it yourself

The following scripts can be used to experiment with embedding quantization for retrieval & beyond. There are three categories:

- Recommended Retrieval:
  - semantic_search_recommended.py: This script combines binary search with scalar rescoring, much like the above demo, for cheap, efficient, and performant retrieval.
- Usage:
- Benchmarks:
  - semantic_search_faiss_benchmark.py: This script includes a retrieval speed benchmark of float32 retrieval, binary retrieval + rescoring, and scalar retrieval + rescoring, using FAISS. It uses the semantic_search_faiss utility function. Our benchmarks especially show speedups for ubinary.
  - semantic_search_usearch_benchmark.py: This script includes a retrieval speed benchmark of float32 retrieval, binary retrieval + rescoring, and scalar retrieval + rescoring, using USearch. It uses the semantic_search_usearch utility function. Our experiments show large speedups on newer hardware, particularly for int8.

Future work

We are looking forward to further advancements of binary quantization. To name a few potential improvements, we suspect that there may be room for scalar quantization smaller than int8, i.e., with 128 or 64 buckets instead of 256.

Additionally, we are excited that embedding quantization is fully orthogonal to Matryoshka Representation Learning (MRL). In other words, it is possible to shrink MRL embeddings from e.g. 1024 to 128 dimensions (which usually corresponds with a 2% reduction in performance) and then apply binary or scalar quantization. We suspect this could speed up retrieval by up to 32x for a ~3% reduction in quality, or by up to 256x for a ~10% reduction in quality.
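The 32x and 256x figures line up with the storage reduction obtained by combining the two techniques, which the arithmetic below makes explicit. This sketch only computes size ratios relative to 1024-dimensional float32 embeddings; treating speedup as proportional to bytes scanned is an assumption, and the function name is hypothetical.

```python
def compression_factor(full_dim: int, kept_dim: int, bits_per_value: int, full_bits: int = 32) -> float:
    """Size reduction vs. full_dim float32 embeddings when keeping kept_dim dims at bits_per_value."""
    return (full_dim * full_bits) / (kept_dim * bits_per_value)

print(compression_factor(1024, 1024, 8))  # int8 only
print(compression_factor(1024, 1024, 1))  # binary only
print(compression_factor(1024, 128, 8))   # MRL truncation to 128 dims + int8
print(compression_factor(1024, 128, 1))   # MRL truncation to 128 dims + binary
```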

Lastly, we recognize that retrieval using embedding quantization can also be combined with a separate reranker model. We imagine that a 3-step pipeline of binary search, scalar (int8) rescoring, and cross-encoder reranking allows for state-of-the-art retrieval performance at low latencies, memory usage, disk space, and costs.

Acknowledgments

This project is possible thanks to our collaboration with mixedbread.ai and the SentenceTransformers library, which allows you to easily create sentence embeddings and quantize them. If you want to use quantized embeddings in your project, now you know how!

Citation

```bibtex
@article{shakir2024quantization,
  author = { Aamir Shakir and
             Tom Aarsen and
             Sean Lee
           },
  title = { Binary and Scalar Embedding Quantization for Significantly Faster & Cheaper Retrieval },
  journal = {Hugging Face Blog},
  year = {2024},
  note = {https://huggingface.co/blog/embedding-quantization},
}
```