This blog post was written in April 2024 and provides an introduction to the internals of vision language models, an overview of the existing suite of vision language models, and a guide to fine-tuning them. We have since written an April 2025 update covering more capabilities and more models. Be sure to check it out after reading this one!

Vision language models are models that can learn simultaneously from images and text to tackle many tasks, from visual question answering to image captioning. In this post, we go over the main building blocks of vision language models: we give an overview, explain how they work, show how to find the right model, how to use them for inference, and how to easily fine-tune them with the new version of trl released today!

What is a Vision Language Model?

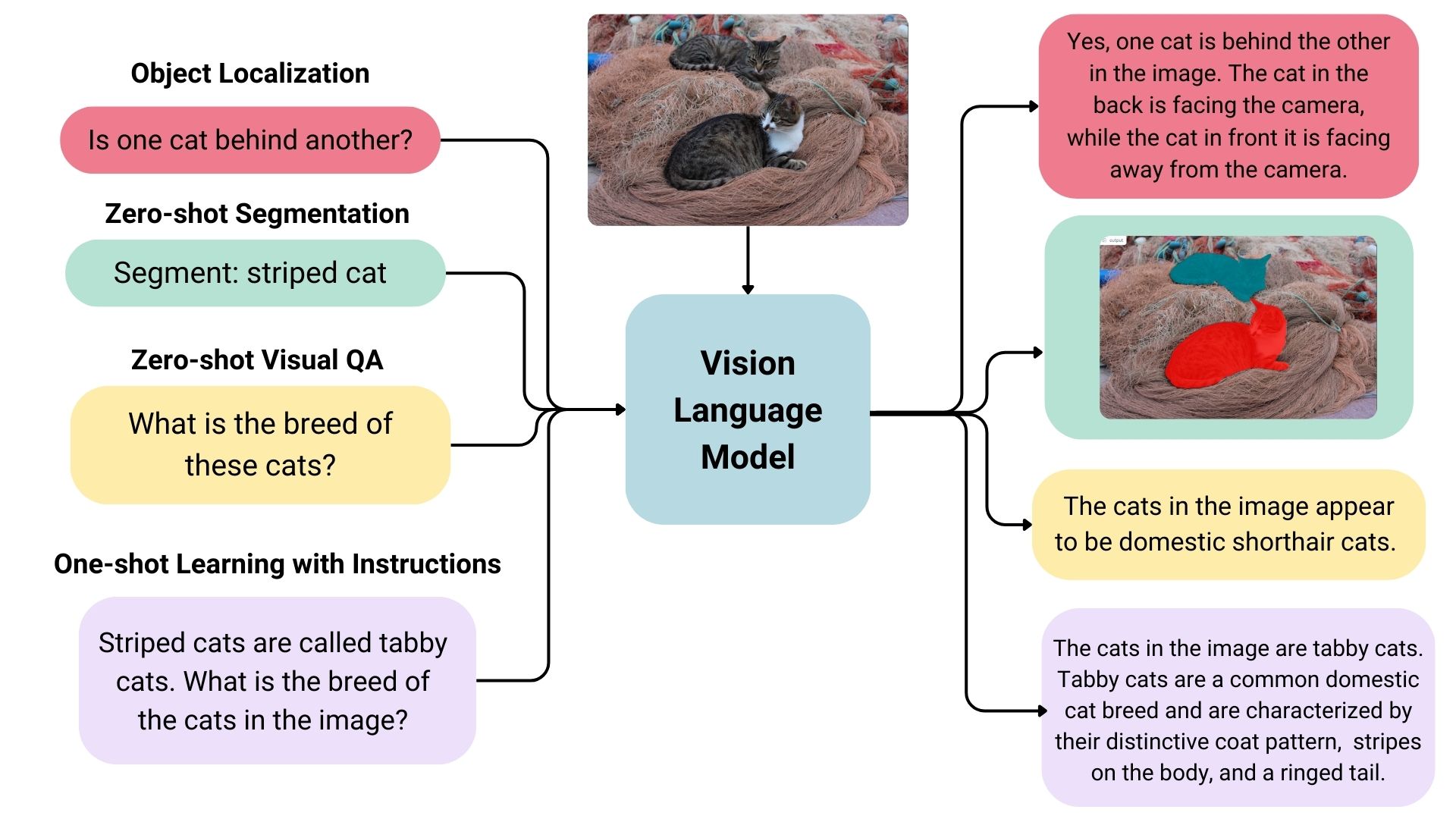

Vision language models are broadly defined as multimodal models that can learn from images and text. They are a type of generative model that takes image and text inputs and generates text outputs. Large vision language models have good zero-shot capabilities, generalize well, and can work with many types of images, including documents, web pages, and more. Use cases include chatting about images, image recognition via instructions, visual question answering, document understanding, image captioning, and others. Some vision language models can also capture spatial properties in an image. These models can output bounding boxes or segmentation masks when prompted to detect or segment a particular subject, or they can localize different entities or answer questions about their relative or absolute positions. There is a lot of diversity within the existing set of large vision language models: the data they were trained on, how they encode images, and, thus, their capabilities.

Overview of Open-source Vision Language Models

There are many open vision language models on the Hugging Face Hub. Some of the most prominent ones are shown in the table below.

- There are base models, and models fine-tuned for chat that can be used in conversational mode.

- Some of these models have a feature called “grounding” which reduces model hallucinations.

- All models are trained on English unless stated otherwise.

Finding the right Vision Language Model

There are many ways to select the most appropriate model for your use case.

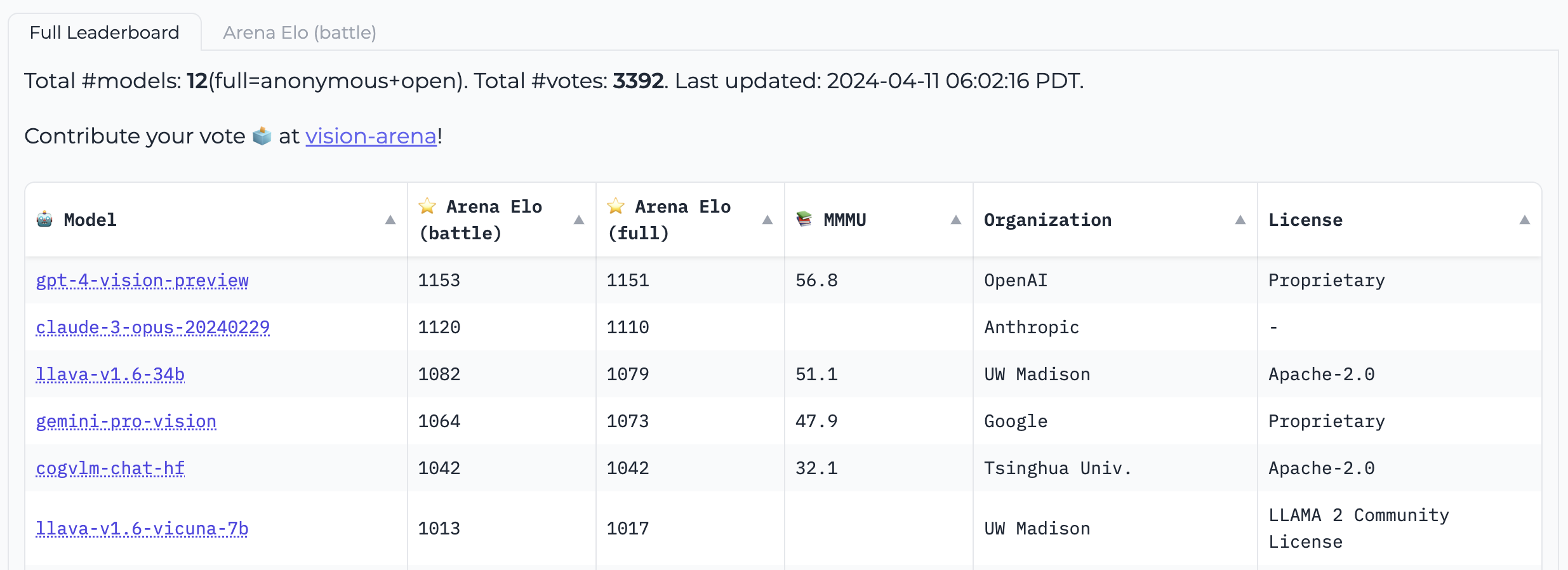

Vision Arena is a leaderboard based solely on anonymous voting on model outputs and is updated continuously. In this arena, users enter an image and a prompt, outputs from two different models are sampled anonymously, and the user picks their preferred output. This way, the leaderboard is constructed solely from human preferences.
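To make the idea concrete, here is a minimal sketch of turning pairwise votes into a ranking. It uses a plain win rate per model, which is an assumption made for illustration: the description above does not say which aggregation method Vision Arena uses, and the model names and votes below are made up.

```python
from collections import defaultdict

def win_rate_leaderboard(votes):
    """Rank models by the fraction of pairwise comparisons they won.

    `votes` is a list of (model_a, model_b, winner) tuples, where `winner`
    is the name of the model whose output the user preferred.
    """
    wins = defaultdict(int)
    games = defaultdict(int)
    for model_a, model_b, winner in votes:
        games[model_a] += 1
        games[model_b] += 1
        wins[winner] += 1
    rates = {model: wins[model] / games[model] for model in games}
    # Highest win rate first; ties are broken alphabetically for determinism.
    return sorted(rates.items(), key=lambda item: (-item[1], item[0]))

# Hypothetical votes, for illustration only.
votes = [
    ("model-x", "model-y", "model-x"),
    ("model-x", "model-z", "model-z"),
    ("model-y", "model-z", "model-z"),
    ("model-x", "model-y", "model-x"),
]
leaderboard = win_rate_leaderboard(votes)
for name, rate in leaderboard:
    print(f"{name}: {rate:.2f}")
```

A raw win rate ignores how strong each opponent was, so a production leaderboard would likely use a rating scheme that accounts for that.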

Vision Arena

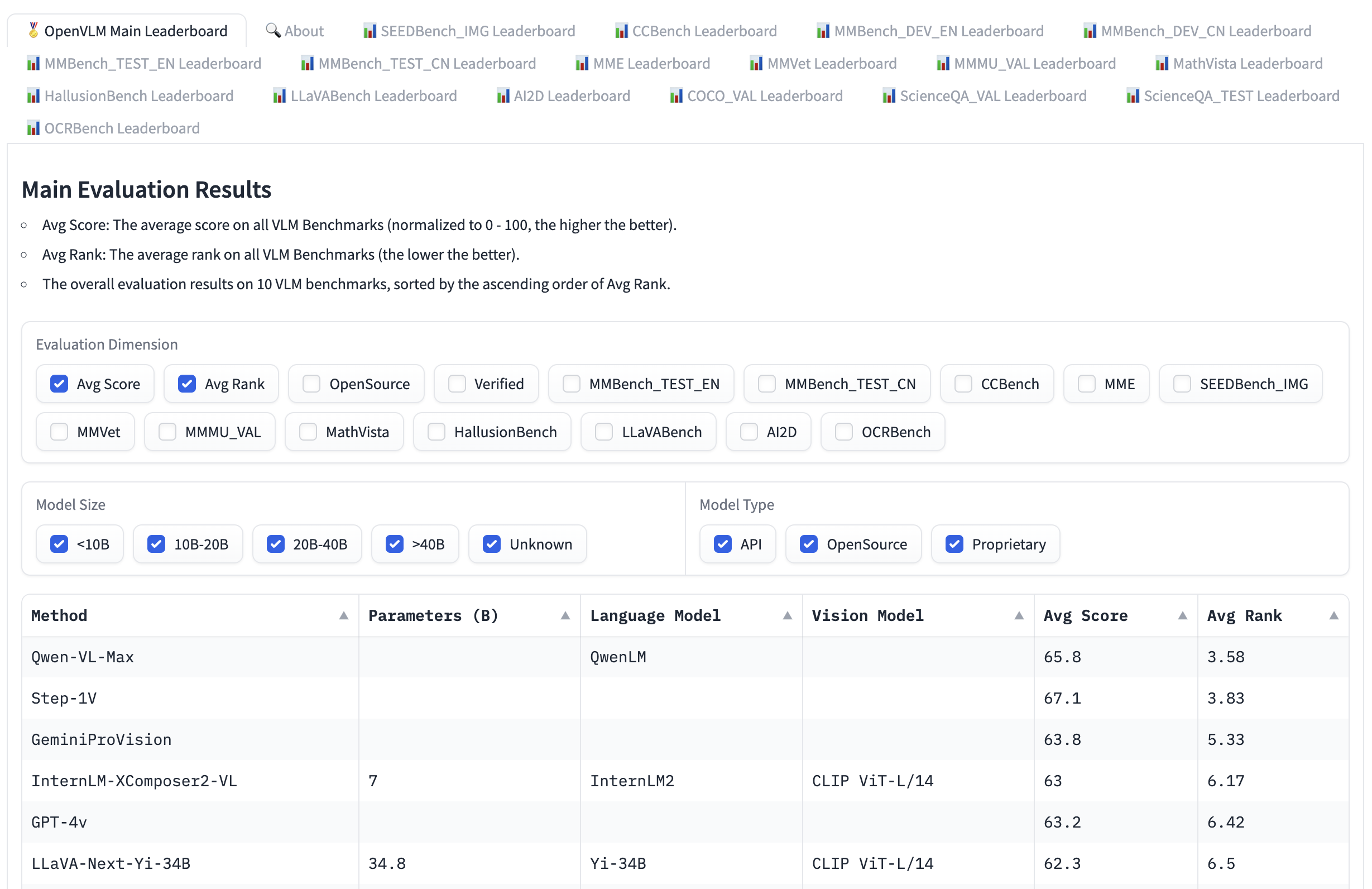

Open VLM Leaderboard is another leaderboard where various vision language models are ranked according to these metrics and average scores. You can also filter models by model size and by proprietary or open-source license, and rank them by different metrics.

Open VLM Leaderboard

VLMEvalKit is a toolkit for running benchmarks on vision language models, and it powers the Open VLM Leaderboard.

Another evaluation suite is LMMS-Eval, which provides a standard command line interface to evaluate Hugging Face models of your choice with datasets hosted on the Hugging Face Hub, like below:

accelerate launch --num_processes=8 -m lmms_eval --model llava --model_args pretrained="liuhaotian/llava-v1.5-7b" --tasks mme,mmbench_en --batch_size 1 --log_samples --log_samples_suffix llava_v1.5_mme_mmbenchen --output_path ./logs/

Both the Vision Arena and the Open VLM Leaderboard are limited to the models that are submitted to them, and require updates to add new models. If you want to find additional models, you can browse the Hub for models under the task image-text-to-text.

There are different benchmarks to evaluate vision language models that you may come across in the leaderboards. We will go through a few of them.

MMMU

A Massive Multi-discipline Multimodal Understanding and Reasoning Benchmark for Expert AGI (MMMU) is the most comprehensive benchmark to evaluate vision language models. It contains 11.5K multimodal challenges that require college-level subject knowledge and reasoning across different disciplines such as arts and engineering.

MMBench

MMBench is an evaluation benchmark that consists of 3000 single-choice questions over 20 different skills, including OCR, object localization and more. The paper also introduces an evaluation strategy called CircularEval, where the answer choices of a question are shuffled in different combinations, and the model is expected to give the right answer at every turn.
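The CircularEval idea can be sketched in a few lines. This version assumes the reordering is a circular shift of the choices, as the name suggests. `ask_model` is a hypothetical callable standing in for a real model, and the two toy "models" below exist only to show the difference between a robust answer and a position-biased one.

```python
def circular_eval(question, choices, correct_index, ask_model):
    """Return True only if the model is right for every circular shift.

    `ask_model(question, choices)` is a hypothetical callable that returns
    the index of the choice the model picks.
    """
    n = len(choices)
    for shift in range(n):
        shifted = choices[shift:] + choices[:shift]
        # After shifting left by `shift`, the correct answer sits at this index.
        shifted_correct = (correct_index - shift) % n
        if ask_model(question, shifted) != shifted_correct:
            return False
    return True

# Toy stand-ins for a real model, for illustration only.
def knows_answer(question, choices):
    return choices.index("Paris")

def always_first(question, choices):
    return 0  # position-biased: always picks the first option

choices = ["Paris", "Rome", "Oslo", "Bern"]
robust = circular_eval("Capital of France?", choices, 0, knows_answer)
biased = circular_eval("Capital of France?", choices, 0, always_first)
print(robust, biased)
```

A model that only guesses by position passes a single-pass evaluation whenever the answer happens to sit in its favorite slot, but it fails here as soon as the choices rotate.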

There are other more specific benchmarks across different domains, including MathVista (visual mathematical reasoning), AI2D (diagram understanding), ScienceQA (Science Question Answering) and OCRBench (document understanding).

Technical Details

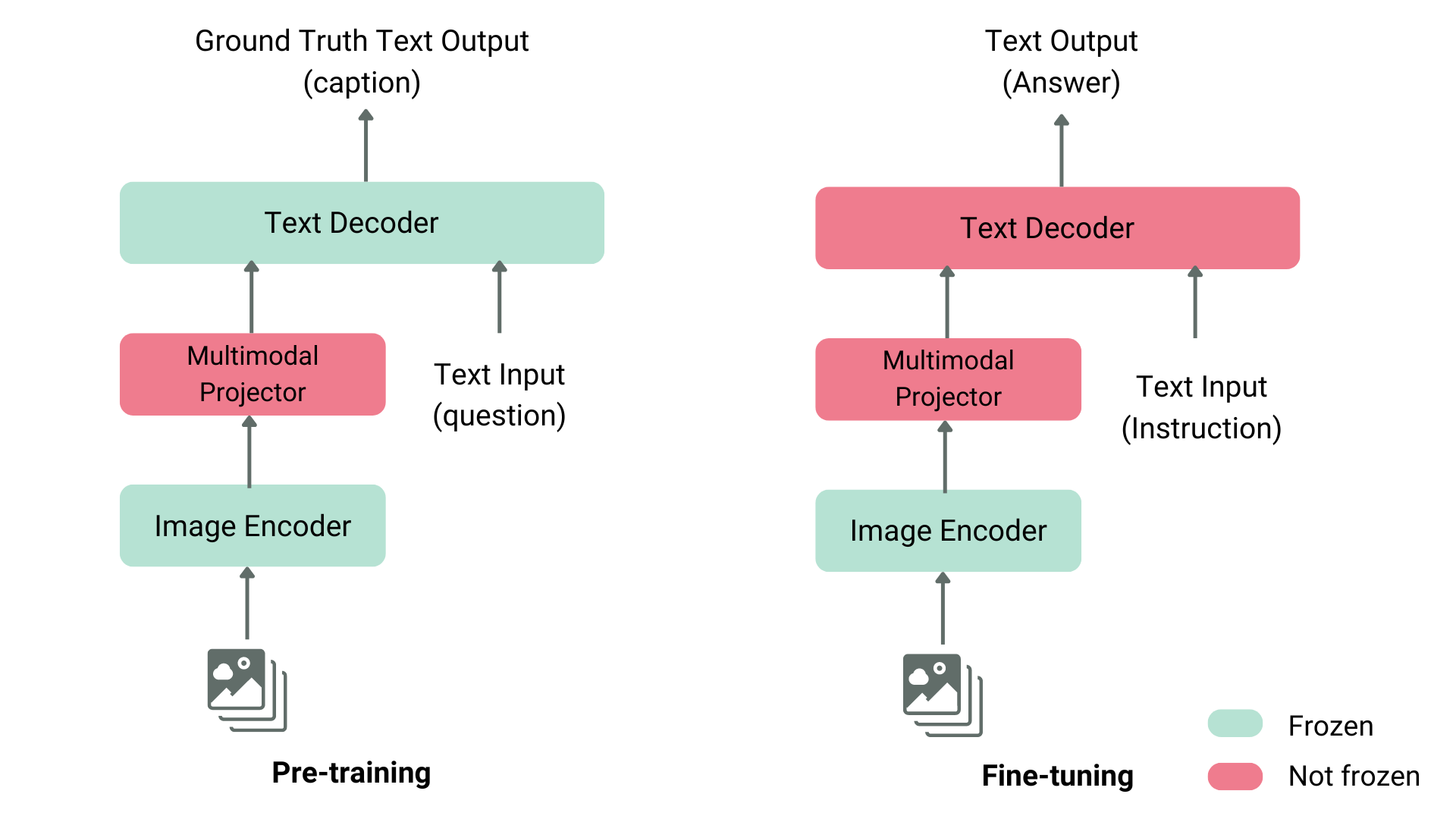

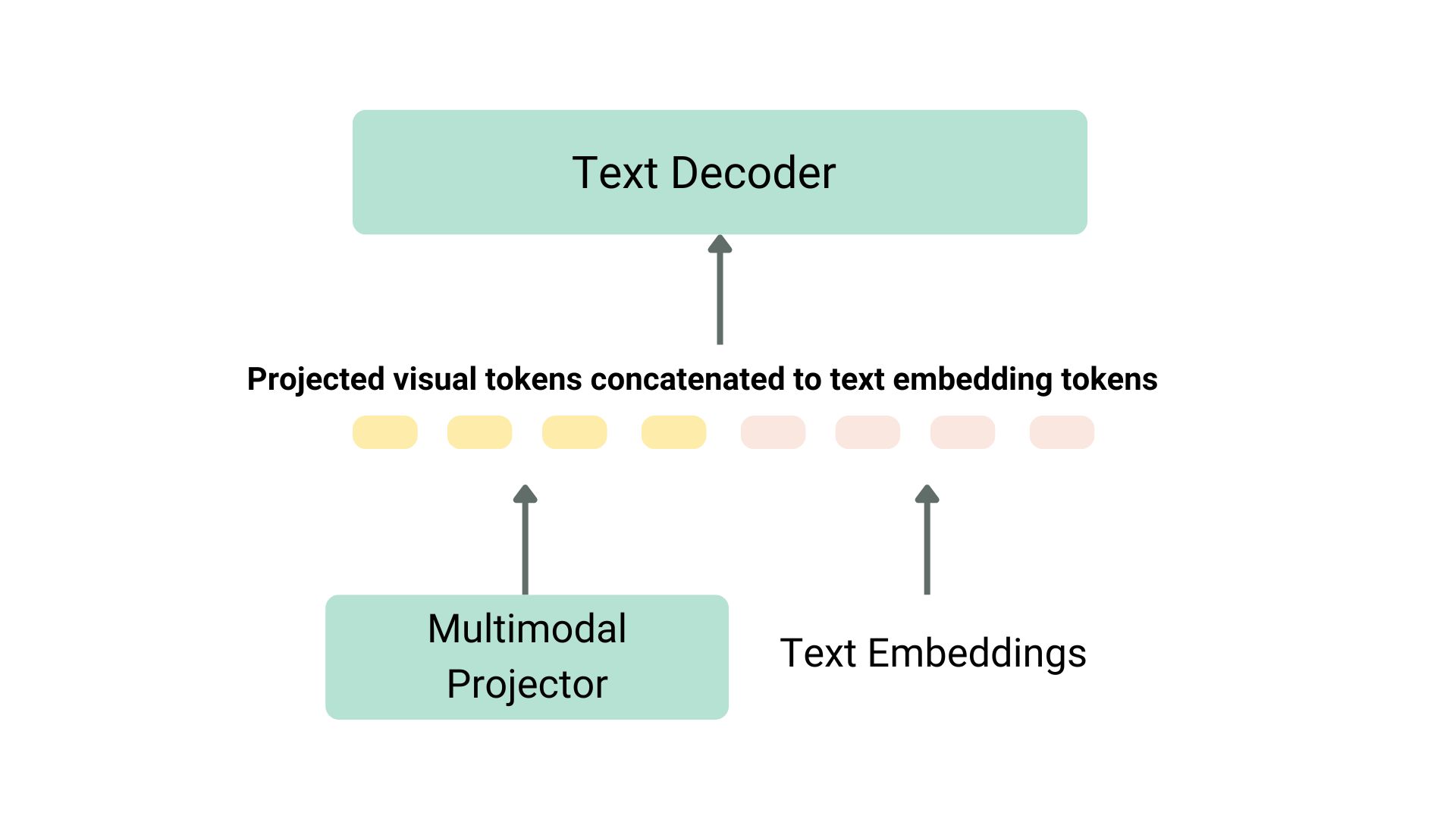

There are various ways to pretrain a vision language model. The main trick is to unify the image and text representation and feed it to a text decoder for generation. The most common and prominent models often consist of an image encoder, an embedding projector to align image and text representations (often a dense neural network) and a text decoder, stacked in this order. As for the training parts, different models have been following different approaches.
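The data flow of this stack can be illustrated with a toy sketch in plain Python. Everything here is an assumption made for illustration: the image encoder output is hard-coded, the projector is a single bias-free dense layer, and the projected image features are simply prepended to the text embeddings. Real models learn these weights and work with much larger tensors.

```python
def linear(vectors, weight):
    """Apply a bias-free dense layer to each vector: out = v @ weight."""
    in_dim, out_dim = len(weight), len(weight[0])
    return [
        [sum(v[i] * weight[i][j] for i in range(in_dim)) for j in range(out_dim)]
        for v in vectors
    ]

def build_decoder_input(image_features, text_embeddings, projector_weight):
    """Project image features into the text embedding space and prepend them."""
    projected = linear(image_features, projector_weight)
    return projected + text_embeddings

# Toy sizes: 3 image patches with 4-dim features, 2 text tokens with 2-dim embeddings.
image_features = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
text_embeddings = [[0.5, 0.5], [0.1, 0.9]]
projector_weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # maps 4 dims to 2

sequence = build_decoder_input(image_features, text_embeddings, projector_weight)
print(len(sequence), len(sequence[0]))
```

The point of the projector is visible in the shapes: after projection, image and text vectors share one dimensionality, so the decoder can treat them as a single sequence.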

For instance, LLaVA consists of a CLIP image encoder, a multimodal projector and a Vicuna text decoder. The authors fed a dataset of images and captions to GPT-4 and generated questions related to the caption and the image. The authors froze the image encoder and text decoder and trained only the multimodal projector to align the image and text features, by feeding the model images and generated questions and comparing the model output to the ground truth captions. After the projector pretraining, they keep the image encoder frozen, unfreeze the text decoder, and train the projector with the decoder. This way of pre-training and fine-tuning is the most common way of training vision language models.
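The two stages described above differ only in which components receive gradient updates. A small sketch of that schedule follows. The stage and component names are labels chosen here for illustration, not identifiers from the LLaVA codebase. In PyTorch, such flags would typically be applied by toggling `requires_grad` on each component's parameters.

```python
# Which components are trained in each stage, following the description above.
TRAINING_STAGES = {
    "projector_pretraining": {
        "image_encoder": False,
        "projector": True,
        "text_decoder": False,
    },
    "fine_tuning": {
        "image_encoder": False,
        "projector": True,
        "text_decoder": True,
    },
}

def trainable_components(stage):
    """Return the sorted names of the components updated in `stage`."""
    flags = TRAINING_STAGES[stage]
    return sorted(name for name, trainable in flags.items() if trainable)

for stage in TRAINING_STAGES:
    print(stage, "->", trainable_components(stage))
```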

Structure of a Typical Vision Language Model

Projection and text embeddings are concatenated

Another example is KOSMOS-2, where the authors chose to fully train the model end-to-end, which is computationally expensive compared to LLaVA-like pre-training. The authors later did language-only instruction fine-tuning to align the model. Fuyu-8B, as another example, doesn’t even have an image encoder. Instead, image patches are directly fed to a projection layer and then the sequence goes through an auto-regressive decoder.
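To give a feel for the encoder-free approach, here is a toy sketch of the first step only: cutting an image into flattened patches that a projection layer could consume. It is not Fuyu's actual preprocessing. The single-channel image, the patch size and the raster ordering are assumptions chosen to keep the example small.

```python
def patchify(image, patch_size):
    """Split a 2D image (list of rows) into flattened, non-overlapping patches.

    Patches are returned in raster order (left to right, top to bottom).
    Assumes both image dimensions are multiples of `patch_size`.
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches

# A toy 4x4 single-channel "image" whose pixel values are 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = patchify(image, patch_size=2)
print(patches)
```

Each flattened patch plays the role of one input token: a linear projection maps it to the decoder's embedding size, and the resulting sequence is processed like text.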

Most of the time, you don’t need to pre-train a vision language model, as you can either use one of the existing ones or fine-tune them on your own use case. We will go over how to use these models with transformers and fine-tune them using SFTTrainer.

Using Vision Language Models with transformers

You can run inference with Llava using the LlavaNext model as shown below.

Let’s initialize the model and the processor first.

from transformers import LlavaNextProcessor, LlavaNextForConditionalGeneration
import torch

# Use a GPU when one is available.
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

processor = LlavaNextProcessor.from_pretrained("llava-hf/llava-v1.6-mistral-7b-hf")
model = LlavaNextForConditionalGeneration.from_pretrained(
    "llava-hf/llava-v1.6-mistral-7b-hf",
    torch_dtype=torch.float16,  # half precision to reduce memory use
    low_cpu_mem_usage=True,
)
model.to(device)

We now pass the image and the text prompt to the processor, and then pass the processed inputs to generate. Note that each model uses its own prompt template, so be careful to use the right one to avoid performance degradation.

from PIL import Image
import requests

url = "https://github.com/haotian-liu/LLaVA/blob/1a91fc274d7c35a9b50b3cb29c4247ae5837ce39/images/llava_v1_5_radar.jpg?raw=true"
image = Image.open(requests.get(url, stream=True).raw)

# The <image> placeholder marks where the image features go in the prompt.
prompt = "[INST] <image>\nWhat is shown in this image? [/INST]"

# Keyword arguments avoid depending on the processor's positional order.
inputs = processor(text=prompt, images=image, return_tensors="pt").to(device)
output = model.generate(**inputs, max_new_tokens=100)

Call decode to decode the output tokens.

print(processor.decode(output[0], skip_special_tokens=True))
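With decoder-only models such as this one, the sequence returned by generate typically contains the prompt tokens followed by the newly generated ones, so the decoded string starts with the prompt. A minimal sketch of keeping only the answer, assuming the prompt ends with the `[/INST]` marker used above (the helper name and the sample string are hypothetical):

```python
def extract_answer(decoded, end_of_prompt="[/INST]"):
    """Return the text after the last end-of-prompt marker, stripped.

    If the marker is absent, the decoded text is returned unchanged
    apart from surrounding whitespace.
    """
    _, found, answer = decoded.rpartition(end_of_prompt)
    return answer.strip() if found else decoded.strip()

# Hypothetical decoded string, for illustration only.
decoded = "[INST]  \nWhat is shown in this image? [/INST] The image shows a radar chart."
print(extract_answer(decoded))
```

An alternative is to slice the output tensor at the prompt length before decoding, which avoids relying on a marker string.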

Fine-tuning Vision Language Models with TRL

We are excited to announce that TRL’s SFTTrainer now includes experimental support for Vision Language Models! We provide an example here of how to perform SFT on a Llava 1.5 VLM using the llava-instruct dataset, which contains 260k image-conversation pairs.

The dataset contains user-assistant interactions formatted as a sequence of messages. For example, each conversation is paired with an image that the user asks questions about.

To use the experimental VLM training support, you must install the latest version of TRL, with pip install -U trl.

The full example script can be found here.

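from transformers import TrainingArguments
from trl.commands.cli_utils import SftScriptArguments, TrlParser

# Parse the script and training arguments from the command line or a config file.
parser = TrlParser((SftScriptArguments, TrainingArguments))
args, training_args = parser.parse_args_and_config()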

Initialize the chat template for instruction fine-tuning.

LLAVA_CHAT_TEMPLATE = """A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions. {% for message in messages %}{% if message['role'] == 'user' %}USER: {% else %}ASSISTANT: {% endif %}{% for item in message['content'] %}{% if item['type'] == 'text' %}{{ item['text'] }}{% elif item['type'] == 'image' %}<image>{% endif %}{% endfor %}{% if message['role'] == 'user' %} {% else %}{{eos_token}}{% endif %}{% endfor %}"""
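Jinja templates written on one line are hard to read, so the following plain-Python function mirrors what the template is meant to produce. It is a sketch for inspection, not part of the training script. It assumes that image items render as the `<image>` placeholder used in LLaVA prompts and that the end-of-sequence token is `</s>`. The sample conversation is hypothetical.

```python
SYSTEM_PROMPT = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)

def render_llava_chat(messages, eos_token="</s>"):
    """Mirror the chat template in plain Python for easier inspection."""
    parts = [SYSTEM_PROMPT + " "]
    for message in messages:
        is_user = message["role"] == "user"
        parts.append("USER: " if is_user else "ASSISTANT: ")
        for item in message["content"]:
            if item["type"] == "text":
                parts.append(item["text"])
            elif item["type"] == "image":
                parts.append("<image>")  # assumed image placeholder
        # User turns end with a space, assistant turns with the EOS token.
        parts.append(" " if is_user else eos_token)
    return "".join(parts)

# Hypothetical conversation in the messages format described above.
messages = [
    {"role": "user", "content": [{"type": "image"}, {"type": "text", "text": "\nWhat is this?"}]},
    {"role": "assistant", "content": [{"type": "text", "text": "A radar chart."}]},
]
rendered = render_llava_chat(messages)
print(rendered)
```

Ending each assistant turn with the end-of-sequence token is what teaches the fine-tuned model to stop generating once its answer is complete.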

We’ll now initialize our model and tokenizer.

from transformers import AutoTokenizer, AutoProcessor, TrainingArguments, LlavaForConditionalGeneration

import torch

model_id = "llava-hf/llava-1.5-7b-hf"

tokenizer = AutoTokenizer.from_pretrained(model_id)

tokenizer.chat_template = LLAVA_CHAT_TEMPLATE

processor = AutoProcessor.from_pretrained(model_id)

processor.tokenizer = tokenizer

model = LlavaForConditionalGeneration.from_pretrained(model_id, torch_dtype=torch.float16)

Let’s create a data collator to combine text and image pairs.

class LLavaDataCollator:
    def __init__(self, processor):
        self.processor = processor

    def __call__(self, examples):
        texts = []
        images = []
        for example in examples:
            messages = example["messages"]
            text = self.processor.tokenizer.apply_chat_template(
                messages, tokenize=False, add_generation_prompt=False
            )
            texts.append(text)
            images.append(example["images"][0])

        batch = self.processor(texts, images, return_tensors="pt", padding=True)

        labels = batch["input_ids"].clone()
        if self.processor.tokenizer.pad_token_id is not None:
            labels[labels == self.processor.tokenizer.pad_token_id] = -100
        batch["labels"] = labels

        return batch

data_collator = LLavaDataCollator(processor)
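The label-masking step in the collator is easy to miss. The sketch below reproduces it on plain Python lists: labels are a copy of the input ids, with padding positions replaced by -100, the default `ignore_index` of PyTorch's cross-entropy loss. The token ids and the pad id are made up for illustration.

```python
IGNORE_INDEX = -100  # skipped by PyTorch's cross-entropy loss by default

def mask_padding(input_ids, pad_token_id):
    """Copy token ids into labels, replacing padding with IGNORE_INDEX."""
    return [
        [IGNORE_INDEX if token == pad_token_id else token for token in row]
        for row in input_ids
    ]

# Hypothetical padded batch with pad_token_id = 0.
input_ids = [[5, 8, 13, 0, 0], [7, 2, 9, 4, 1]]
labels = mask_padding(input_ids, pad_token_id=0)
print(labels)
```

Without this masking, the model would also be trained to predict padding tokens, which adds noise to the loss for shorter sequences in a batch.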

Load our dataset.

from datasets import load_dataset

raw_datasets = load_dataset("HuggingFaceH4/llava-instruct-mix-vsft")

train_dataset = raw_datasets["train"]

eval_dataset = raw_datasets["test"]

Initialize the SFTTrainer, passing in the model, the dataset splits, PEFT configuration and data collator, and call train(). To push our final checkpoint to the Hub, call push_to_hub().

from trl import SFTTrainer

trainer = SFTTrainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    dataset_text_field="text",
    tokenizer=tokenizer,
    data_collator=data_collator,
    dataset_kwargs={"skip_prepare_dataset": True},
)

trainer.train()

Save the model and push to the Hugging Face Hub.

trainer.save_model(training_args.output_dir)

trainer.push_to_hub()

You can find the trained model here.

You can try the model we just trained directly in our VLM playground below ⬇️

Acknowledgements

We would like to thank Pedro Cuenca, Lewis Tunstall, Kashif Rasul and Omar Sanseviero for their reviews and suggestions on this blog post.