We’re excited to introduce the LiveCodeBench leaderboard, based on LiveCodeBench, a brand new benchmark developed by researchers from UC Berkeley, MIT, and Cornell for measuring LLMs’ code generation capabilities.

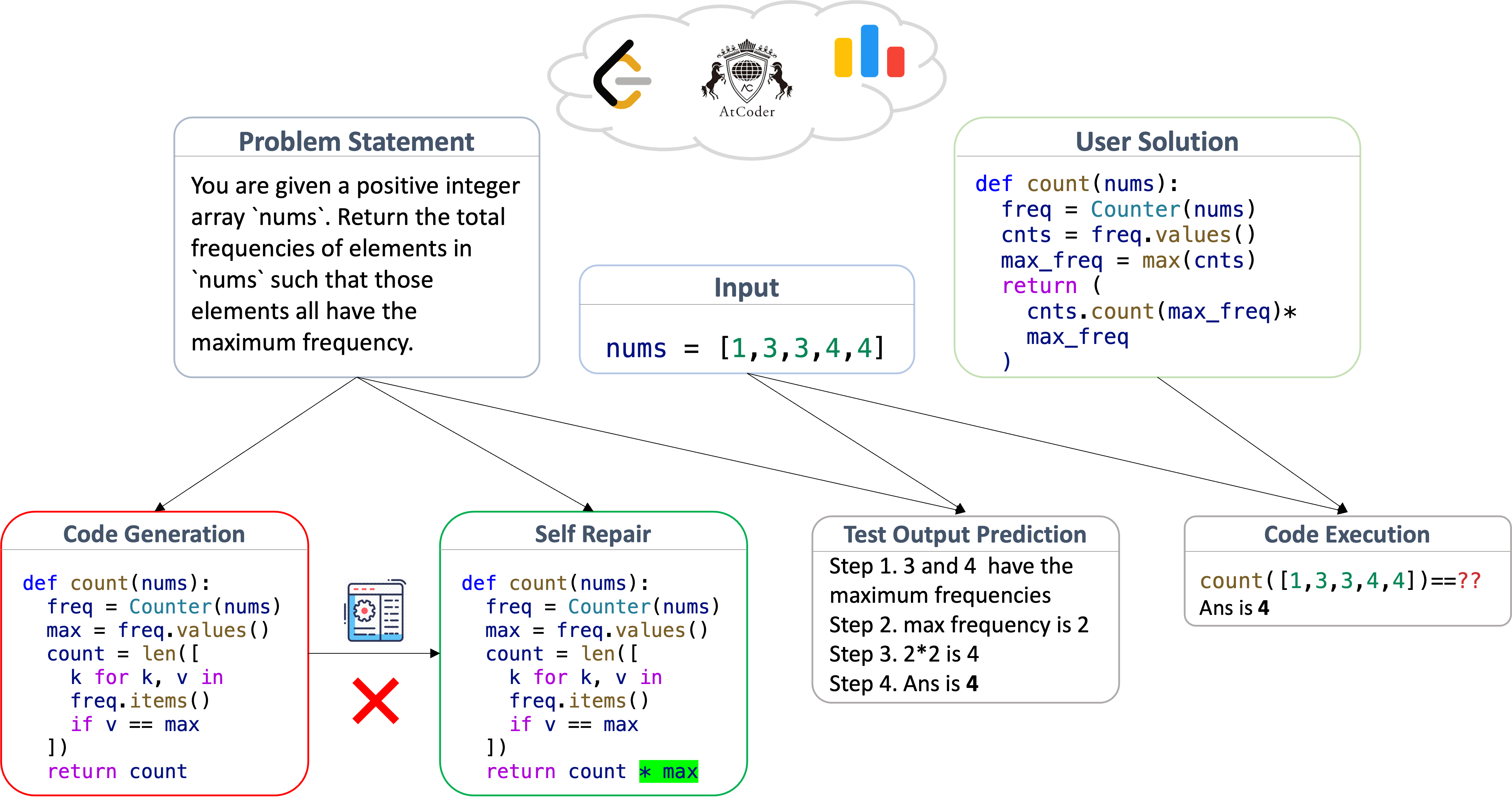

LiveCodeBench collects coding problems over time from various coding contest platforms, annotating each problem with its release date. These annotations are used to evaluate models on problem sets released in different time windows, enabling an "evaluation over time" strategy that helps detect and prevent contamination. In addition to the usual code generation task, LiveCodeBench also assesses self-repair, test output prediction, and code execution, providing a more holistic view of the coding capabilities required for the next generation of AI programming agents.

LiveCodeBench Scenarios and Evaluation

LiveCodeBench problems are curated from coding competition platforms: LeetCode, AtCoder, and CodeForces. These websites periodically host contests containing problems that assess the coding and problem-solving skills of participants. Problems consist of a natural language problem statement together with input-output examples, and the goal is to write a program that passes a set of hidden tests. Thousands of participants take part in the competitions, which ensures that the problems are vetted for clarity and correctness.
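To make "passes a set of hidden tests" concrete, here is a minimal Python sketch of a stdin/stdout checker of the kind AtCoder- and CodeForces-style problems use (LeetCode problems are function-based instead). This is not the LiveCodeBench harness: the `TestCase` type, the `passes_all_tests` helper, the 5-second timeout, and the trailing-whitespace comparison are illustrative assumptions, and the sketch runs untrusted code without any sandbox.

```python
import subprocess
import sys
from dataclasses import dataclass


@dataclass
class TestCase:
    # Hypothetical test record: what is fed on stdin and what stdout must contain.
    stdin: str
    expected_stdout: str


def passes_all_tests(source: str, tests: list[TestCase], timeout: float = 5.0) -> bool:
    """Return True only if the candidate program is correct on every hidden test."""
    for test in tests:
        try:
            result = subprocess.run(
                [sys.executable, "-c", source],  # run the candidate as a fresh Python process
                input=test.stdin,
                capture_output=True,
                text=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return False  # exceeding the time limit counts as a failure
        if result.returncode != 0:
            return False  # runtime error
        # Assumption: trailing whitespace is ignored when comparing outputs.
        if result.stdout.rstrip() != test.expected_stdout.rstrip():
            return False
    return True


candidate = "a, b = map(int, input().split())\nprint(a + b)"
tests = [TestCase("1 2\n", "3\n"), TestCase("10 -4\n", "6")]
print(passes_all_tests(candidate, tests))  # True
```

Stopping at the first failing test is a design choice: a single failure already makes the solution incorrect, so the remaining tests need not run.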

LiveCodeBench uses the collected problems to construct its four coding scenarios:

- Code Generation. The model is given a problem statement, which includes a natural language description and example tests (input-output pairs), and is tasked with generating a correct solution. Evaluation is based on the functional correctness of the generated code, which is determined using a set of test cases.

- Self Repair. The model is given a problem statement and generates a candidate program, as in the code generation scenario above. If the program is incorrect, the model is provided with error feedback (either an exception message or a failing test case) and is tasked with generating a fix. Evaluation uses the same functional correctness metric as above.

- Code Execution. The model is given a program snippet consisting of a function (f) together with a test input, and is tasked with predicting the output of the program on that input. Evaluation is based on an execution-based correctness metric: the model's output is considered correct if the assertion `assert f(input) == generated_output` passes.

- Test Output Prediction. The model is given the problem statement together with a test case input and is tasked with generating the expected output for that input. Tests are generated solely from problem statements, without needing the function's implementation, and outputs are evaluated using an exact match checker.

For every scenario, evaluation uses the Pass@1 metric, which captures the probability of generating a correct answer. It is computed as the ratio of correct answers to total attempts: Pass@1 = total_correct / total_attempts.
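As a quick illustration of the formula, here is a minimal Python sketch that pools graded attempts (one boolean per attempt) exactly as Pass@1 = total_correct / total_attempts describes; the `pass_at_1` name and the sample grades are hypothetical.

```python
def pass_at_1(results: list[bool]) -> float:
    """Pass@1 = total_correct / total_attempts, one boolean per graded attempt."""
    if not results:
        raise ValueError("Pass@1 is undefined with zero attempts")
    total_correct = sum(results)  # True counts as 1, False as 0
    total_attempts = len(results)
    return total_correct / total_attempts


# Hypothetical grades for four attempts in one scenario.
print(pass_at_1([True, False, True, True]))  # 0.75
```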

Preventing Benchmark Contamination

Contamination is one of the major bottlenecks in current LLM evaluations. Even within LLM coding evaluations, there is evidence of contamination and overfitting on standard benchmarks like HumanEval ([1] and [2]).

For this reason, we annotate problems with release dates in LiveCodeBench: for new models with a training-cutoff date D, we can compute scores on problems released after D to measure their generalization to unseen problems.

LiveCodeBench formalizes this with a "scrolling over time" feature, which lets you select problems within a specific time window. You can try it out in the leaderboard above!
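The date-based filtering described in this section can be sketched in a few lines of Python. This is an illustration, not the leaderboard's implementation: the `Problem` record, its field names, the problem titles, and the cutoff date are all hypothetical. `released_after` keeps problems released strictly after a training cutoff D, and `problems_in_window` selects an inclusive time window.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Problem:
    # Hypothetical record layout; only the release-date annotation matters here.
    title: str
    platform: str
    release_date: date


def problems_in_window(problems: list[Problem], start: date,
                       end: Optional[date] = None) -> list[Problem]:
    """Keep problems released in [start, end]; end=None means no upper bound."""
    return [
        p for p in problems
        if p.release_date >= start and (end is None or p.release_date <= end)
    ]


def released_after(problems: list[Problem], cutoff: date) -> list[Problem]:
    """Problems released strictly after a model's training-cutoff date D."""
    return [p for p in problems if p.release_date > cutoff]


problems = [
    Problem("problem-a", "LeetCode", date(2023, 5, 14)),
    Problem("problem-b", "AtCoder", date(2023, 9, 2)),
    Problem("problem-c", "CodeForces", date(2024, 1, 20)),
]
cutoff = date(2023, 8, 31)  # hypothetical training-cutoff date D
print([p.title for p in released_after(problems, cutoff)])  # ['problem-b', 'problem-c']
print([p.title for p in problems_in_window(problems, date(2023, 9, 1), date(2023, 12, 31))])  # ['problem-b']
```

Scoring a model separately on problems before and after its cutoff, then comparing the two Pass@1 values, is one way such a filter can surface a suspicious drop that hints at contamination.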

Findings

We find that:

- While model performance is correlated across scenarios, relative performance and rankings can vary across the four scenarios we use.

- GPT-4-Turbo is the best-performing model across most scenarios. Moreover, its margin grows on self-repair tasks, highlighting its ability to use compiler feedback.

- Claude-3-Opus overtakes GPT-4-Turbo in the test output prediction scenario, highlighting stronger natural language reasoning capabilities.

- Mistral-Large performs considerably better on natural language reasoning tasks like test output prediction and code execution.

How to Submit?

To evaluate your code models on LiveCodeBench, follow these steps:

- Environment Setup: You can use conda to create a new environment and install LiveCodeBench:

git clone https://github.com/LiveCodeBench/LiveCodeBench.git

cd LiveCodeBench

pip install poetry

poetry install

- To evaluate new Hugging Face models, run

python -m lcb_runner.runner.main --model {model_name} --scenario {scenario_name}

for the different scenarios. For new model families, we have implemented an extensible framework: you can add support for new models by modifying lcb_runner/lm_styles.py and lcb_runner/prompts, as described in the GitHub README.

- Once your results are generated, you can submit them by filling out this form.

How to contribute

Finally, we are looking for collaborators and suggestions for LiveCodeBench. The dataset and code are available online, so please reach out by opening an issue or sending an email.