Instruction tuning is a fine-tuning approach that gives large language models (LLMs) the ability to follow natural, human-written instructions. However, for programming tasks, most models are tuned on either human-written instructions (which are very expensive) or instructions generated by huge proprietary LLMs (which may not be permitted). We introduce StarCoder2-15B-Instruct-v0.1, the very first entirely self-aligned code LLM trained with a fully permissive and transparent pipeline. Our open-source pipeline uses StarCoder2-15B to generate thousands of instruction-response pairs, which are then used to fine-tune StarCoder2-15B itself without any human annotations or distilled data from huge proprietary LLMs.

StarCoder2-15B-Instruct achieves a 72.6 HumanEval score, even surpassing the 72.0 score of CodeLlama-70B-Instruct! Further evaluation on LiveCodeBench shows that the self-aligned model is even better than the same model trained on data distilled from GPT-4, implying that an LLM could learn more effectively from data within its own distribution than from a shifted distribution from a teacher LLM.

Method

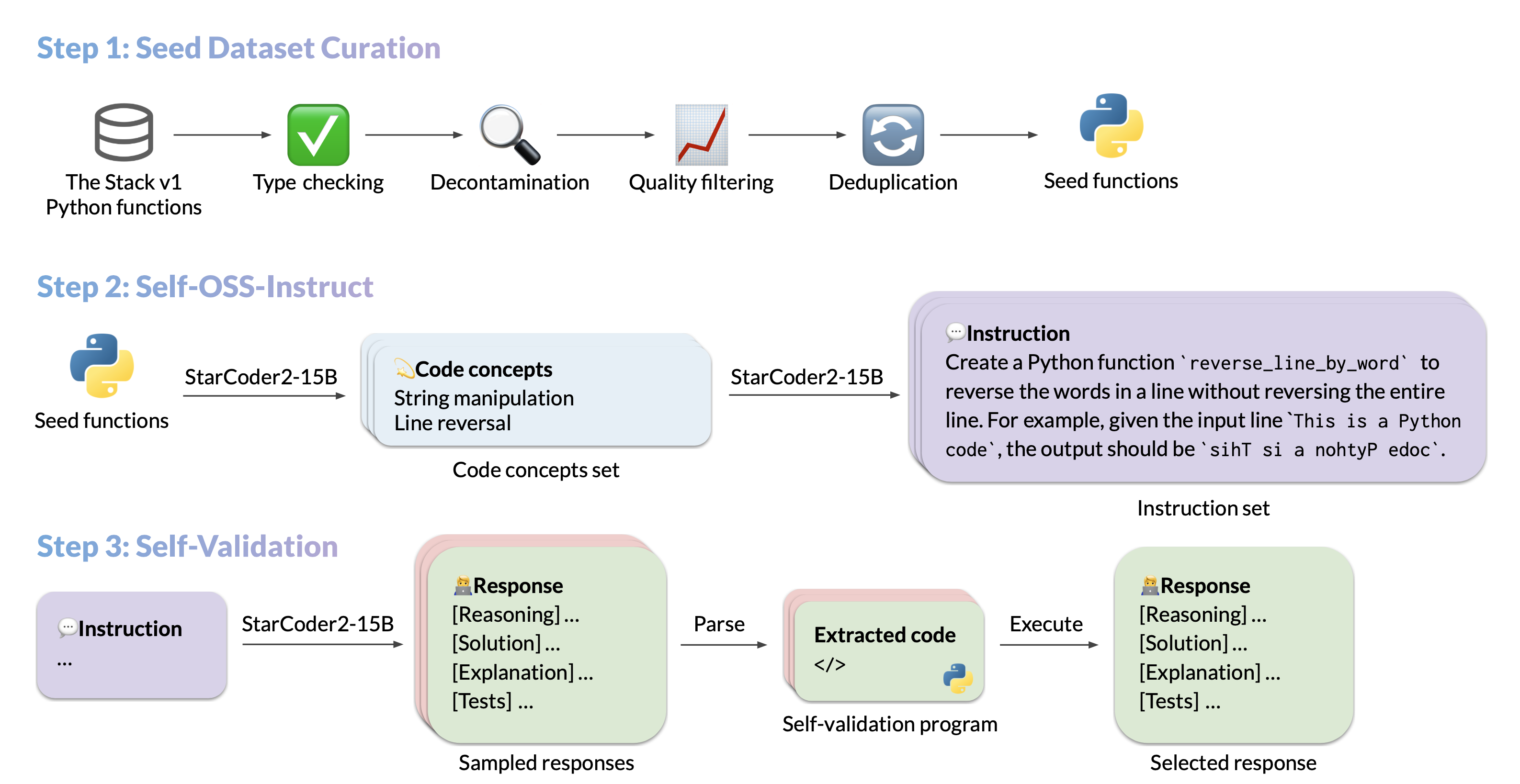

Our data generation pipeline mainly consists of three steps:

- Extract high-quality and diverse seed functions from The Stack v1, an enormous corpus of permissively licensed source code.

- Create diverse and realistic code instructions that incorporate different code concepts present within the seed functions (e.g., data deserialization, list concatenation, and recursion).

- For every instruction, generate a high-quality response through execution-guided self-validation.

In the following sections, we explore each of these steps in detail.

Collecting seed code snippets

To fully unlock the instruction-following capabilities of a code model, it should be exposed to a diverse set of instructions covering a wide range of programming principles and practices. Motivated by OSS-Instruct, we further promote such diversity by mining code concepts from open-source code snippets, specifically well-formed seed Python functions from The Stack v1.

For our seed dataset, we carefully extract all Python functions with docstrings in The Stack v1, infer the required dependencies using autoimport, and apply the following filtering rules to all functions:

- Type checking: We apply the Pyright heuristic type-checker to remove all functions that produce static errors, signaling a possibly incorrect item.

- Decontamination: We detect and remove all benchmark items on which we evaluate. We use exact string match on both the solutions and prompts.

- Docstring Quality Filtering: We use StarCoder2-15B as a judge to remove functions with poor documentation. We prompt the base model with 7 few-shot examples, requiring it to respond with either “Yes” or “No” to decide whether to retain the item.

- Near-Deduplication: We use MinHash and locality-sensitive hashing with a Jaccard similarity threshold of 0.5 to filter duplicate seed functions in our dataset. This is the same process applied to StarCoder’s training data.
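To make the near-deduplication rule concrete, here is a minimal sketch of MinHash-based filtering at the 0.5 Jaccard threshold. It is an illustration under stated assumptions, not the pipeline's actual code: the 3-token shingling, the 64 hash functions, and all function names are hypothetical choices, and the locality-sensitive hashing step is replaced by a plain pairwise comparison against the kept signatures, which only scales to small inputs.

```python
import hashlib
import re

NUM_PERM = 64    # assumed number of hash functions (not stated in the post)
THRESHOLD = 0.5  # Jaccard similarity threshold from the text


def shingles(code: str, k: int = 3) -> set[str]:
    """Return the set of k-token shingles of a code string (assumed tokenization)."""
    tokens = re.findall(r"\w+|[^\w\s]", code)
    if len(tokens) < k:
        return {" ".join(tokens)}
    return {" ".join(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}


def minhash(items: set[str], num_perm: int = NUM_PERM) -> list[int]:
    """Build a signature: the minimum hash value under each salted hash function."""
    signature = []
    for seed in range(num_perm):
        salt = seed.to_bytes(4, "little")
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(s.encode(), digest_size=8, salt=salt).digest(),
                "little",
            )
            for s in items
        ))
    return signature


def estimated_jaccard(a: list[int], b: list[int]) -> float:
    """Fraction of matching signature positions estimates Jaccard similarity."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def near_dedup(functions: list[str], threshold: float = THRESHOLD) -> list[str]:
    """Keep a function only if it is not too similar to any function kept so far."""
    kept, kept_signatures = [], []
    for fn in functions:
        sig = minhash(shingles(fn))
        # Pairwise scan stands in for LSH banding, which would avoid this O(n^2) cost.
        if all(estimated_jaccard(sig, other) < threshold for other in kept_signatures):
            kept.append(fn)
            kept_signatures.append(sig)
    return kept
```

In a real run over millions of functions, an LSH index would bucket signatures so that only likely duplicates are compared.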

This filtering pipeline results in a dataset of 250k Python functions filtered from 5M functions with docstrings. This process is highly inspired by the data collection pipeline used in MultiPL-T.
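As a rough illustration of the first and simplest parts of this pipeline, the sketch below extracts functions that carry a docstring and applies an exact-match decontamination check. It assumes Python's standard `ast` module is an acceptable way to find functions; the helper names and the choice to flag a function when a benchmark prompt or solution appears verbatim inside it are assumptions, since the post does not specify the matching direction or granularity.

```python
import ast


def functions_with_docstrings(source: str) -> list[str]:
    """Return the source text of every function in `source` that has a docstring."""
    try:
        tree = ast.parse(source)
    except SyntaxError:
        return []  # skip files that do not parse
    found = []
    for node in ast.walk(tree):
        is_function = isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
        if is_function and ast.get_docstring(node):
            segment = ast.get_source_segment(source, node)
            if segment is not None:
                found.append(segment)
    return found


def is_contaminated(function_source: str, benchmark_texts: list[str]) -> bool:
    """Assumed rule: flag the function if any benchmark text occurs verbatim in it."""
    return any(text and text in function_source for text in benchmark_texts)
```

Type checking, docstring quality judging, and dependency inference are left out here because they depend on external tools and model calls.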

Self-OSS-Instruct

After collecting the seed functions, we use Self-OSS-Instruct to generate diverse instructions. In detail, we employ in-context learning to let the base StarCoder2-15B self-generate instructions from the given seed code snippets. This process uses 16 carefully designed few-shot examples, each formatted as (snippet, concepts, instruction). The instruction generation procedure is divided into two steps:

- Concepts extraction: For each seed function, StarCoder2-15B is prompted to produce a list of code concepts present within the function. Code concepts refer to the foundational principles and techniques used in programming, such as pattern matching and data type conversion, which are crucial for developers to master.

- Instruction generation: StarCoder2-15B is then prompted to self-generate a coding task that includes the identified code concepts.

In the end, 238k instructions are generated from this process.
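The two-step prompting above can be sketched as plain string construction for a base model that continues text. This is only an illustration: the `### Snippet` / `### Concepts` / `### Instruction` layout, the `FewShot` class, and the function names are hypothetical, and the post does not show the actual prompt template or the 16 few-shot examples.

```python
from dataclasses import dataclass


@dataclass
class FewShot:
    """One in-context example in the (snippet, concepts, instruction) format."""
    snippet: str
    concepts: list[str]
    instruction: str


def render(example: FewShot) -> str:
    """Render one few-shot example using an assumed section layout."""
    return (
        f"### Snippet\n{example.snippet}\n\n"
        f"### Concepts\n{', '.join(example.concepts)}\n\n"
        f"### Instruction\n{example.instruction}\n"
    )


def concepts_prompt(shots: list[FewShot], seed: str) -> str:
    """Step 1: the prompt stops where the model should list the seed's concepts."""
    demos = "\n".join(render(shot) for shot in shots)
    return f"{demos}\n### Snippet\n{seed}\n\n### Concepts\n"


def instruction_prompt(shots: list[FewShot], seed: str, concepts: list[str]) -> str:
    """Step 2: append the extracted concepts and stop where the task should begin."""
    return concepts_prompt(shots, seed) + ", ".join(concepts) + "\n\n### Instruction\n"
```

The model's completion of the first prompt would be parsed into a concept list and fed to the second prompt, whose completion becomes the generated instruction.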

Response self-validation

Given the instructions generated from Self-OSS-Instruct, our next step is to match each instruction with a high-quality response. Prior practices commonly rely on distilling responses from stronger teacher models, such as GPT-4, which hopefully exhibit higher quality. However, distilling proprietary models leads to non-permissive licensing, and a stronger teacher model might not always be available. More importantly, teacher models can be wrong as well, and the distribution gap between teacher and student can be detrimental.

We propose to self-align StarCoder2-15B by explicitly instructing the model to generate tests for self-validation after it produces a response interleaved with natural language. This process is similar to how developers test their code implementations. Specifically, for each instruction, StarCoder2-15B generates 10 samples of the format (NL Response, Test), and we filter out those falsified by test execution in a sandbox environment. We then randomly select one passing response per instruction for the final SFT dataset. In total, we generated 2.4M (10 × 238k) responses for the 238k instructions with temperature 0.7, of which 500k passed the execution test. After deduplication, we are left with 50k instructions, each paired with a random passing response, which we finally use as our SFT dataset.
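The filter-then-select step can be sketched as follows. This is a simplified illustration, not the pipeline's implementation: it assumes the code has already been extracted from each natural-language response, treats a zero exit code as a passing test, and runs candidates in a plain subprocess with a timeout, which is not a real sandbox. The function names and the 10-second timeout are hypothetical.

```python
import random
import subprocess
import sys
import tempfile
from pathlib import Path


def passes(code: str, test: str, timeout: float = 10.0) -> bool:
    """Run the code followed by its test in a fresh interpreter; exit code 0 passes."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(code + "\n\n" + test + "\n")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                cwd=tmp,
                capture_output=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return False  # treat hangs as failures
    return result.returncode == 0


def select_response(samples: list[tuple[str, str]], rng: random.Random):
    """Given (code, test) samples for one instruction, return a random passing one."""
    passing = [sample for sample in samples if passes(*sample)]
    return rng.choice(passing) if passing else None
```

An instruction with no passing sample yields `None` and would be dropped. For scale, the figures in the text imply that roughly 500k of 2.4M samples, about 21%, survived execution.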

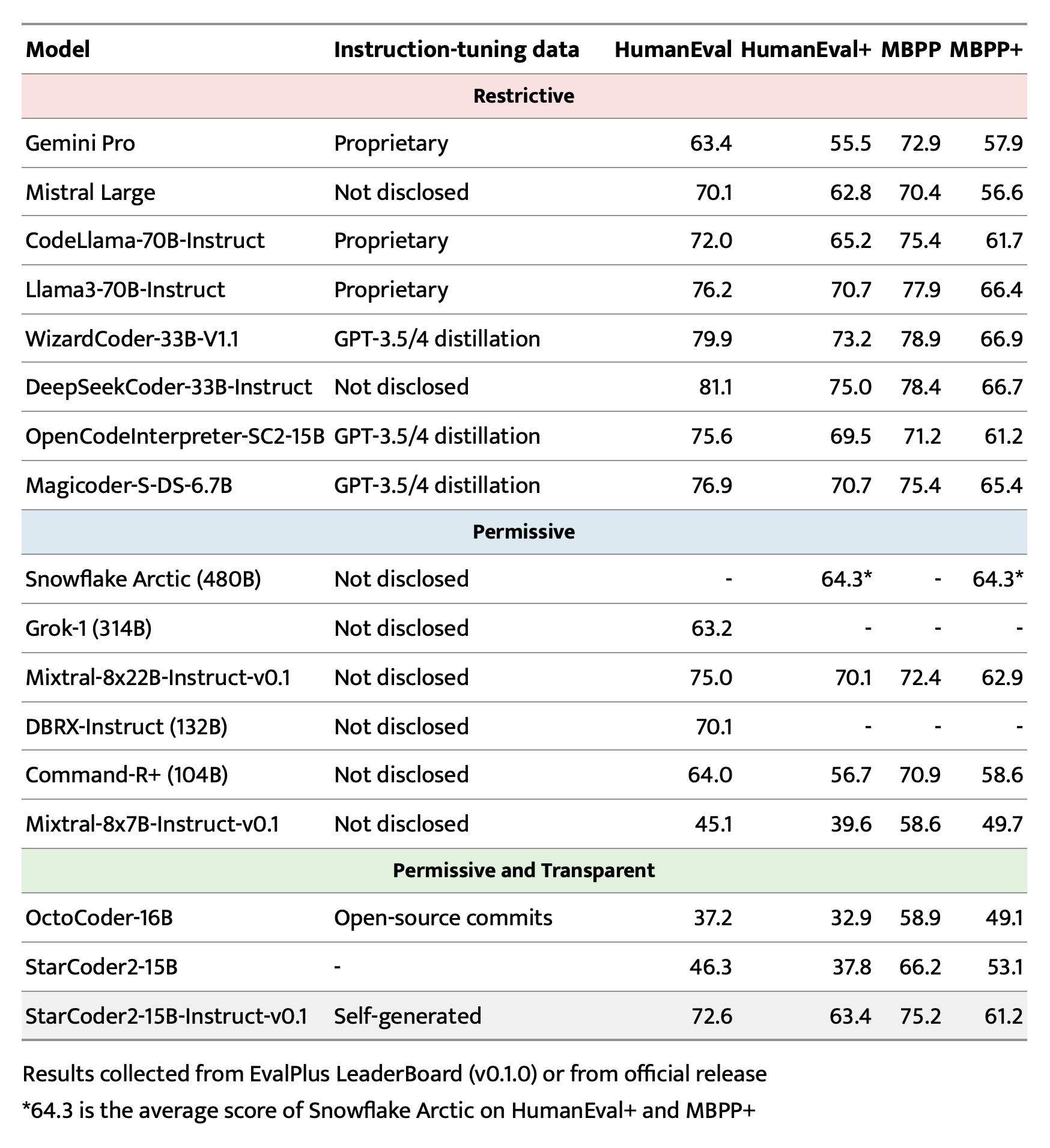

Evaluation

On the popular and rigorous EvalPlus benchmark, StarCoder2-15B-Instruct stands out as the top-performing permissive LLM at its scale, outperforming the much larger Grok-1, Command-R+, and DBRX, while closely matching Snowflake Arctic 480B and Mixtral-8x22B-Instruct. To our knowledge, StarCoder2-15B-Instruct is the first code LLM with a fully transparent and permissive pipeline to reach a 70+ HumanEval score. It drastically outperforms OctoCoder, the previous state-of-the-art permissive code LLM with a transparent pipeline.

Even compared with powerful LLMs with restrictive licenses, StarCoder2-15B-Instruct remains competitive, surpassing Gemini Pro and Mistral Large and performing comparably to CodeLlama-70B-Instruct. Moreover, StarCoder2-15B-Instruct, trained purely on self-generated data, closely rivals OpenCodeInterpreter-SC2-15B, which fine-tunes StarCoder2-15B on distilled data from GPT-3.5/4.

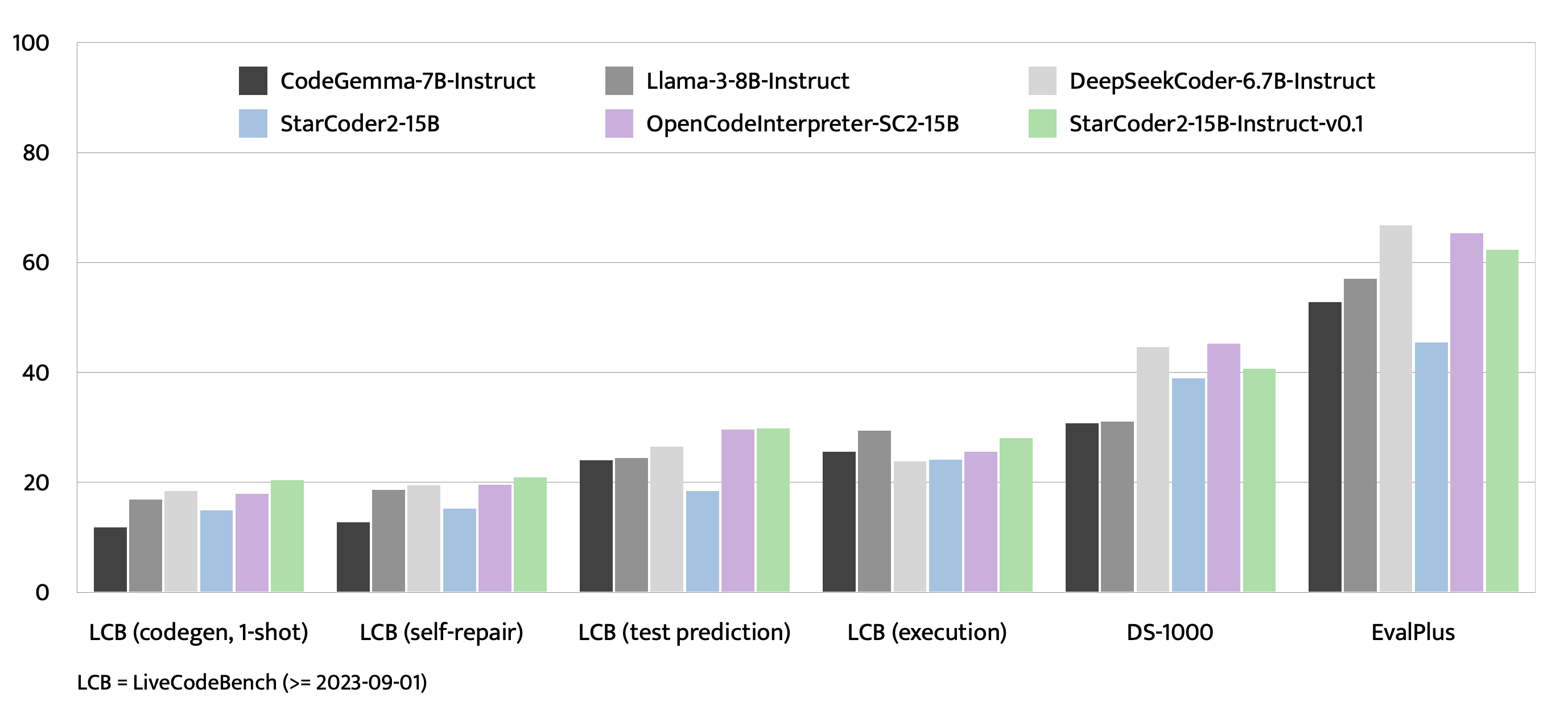

Besides EvalPlus, we also evaluated state-of-the-art open-source models of similar or smaller sizes on LiveCodeBench, which includes fresh coding problems created after 2023-09-01, as well as DS-1000, which targets data science programs. On LiveCodeBench, StarCoder2-15B-Instruct achieves the best results among the models evaluated and consistently outperforms OpenCodeInterpreter-SC2-15B, which distills GPT-4 data. On DS-1000, StarCoder2-15B-Instruct is still competitive despite being trained on very limited data science problems.

Conclusion

StarCoder2-15B-Instruct-v0.1 shows for the first time that we can create powerful instruction-tuned code models without relying on stronger teacher models like GPT-4. This model demonstrates that self-alignment, where a model uses its own generated content to learn, is also effective for code. It’s fully transparent and allows for distillation, setting it apart from other larger permissive but non-transparent models such as Snowflake-Arctic, Grok-1, Mixtral-8x22B, DBRX, and CommandR+. We have made our datasets and the entire pipeline, including data curation and training, fully open-source. We hope this seminal work can inspire more future research and development in this field.

Resources

Citation

@article{wei2024selfcodealign,
  title={SelfCodeAlign: Self-Alignment for Code Generation},
  author={Yuxiang Wei and Federico Cassano and Jiawei Liu and Yifeng Ding and Naman Jain and Zachary Mueller and Harm de Vries and Leandro von Werra and Arjun Guha and Lingming Zhang},
  year={2024},
  journal={arXiv preprint arXiv:2410.24198}
}