In this blog post, we provide a tutorial on how to use a new data augmentation technique for document images, developed in collaboration with Albumentations AI.

Motivation

Vision Language Models (VLMs) have an immense range of applications, but they often need to be fine-tuned to specific use cases, particularly for datasets containing document images, i.e., images with high textual content. In these cases, it is crucial for text and image to interact with each other at all stages of model training, and applying augmentation to both modalities ensures this interaction. Essentially, we want a model to learn to read properly, which is challenging in the most common cases, where data is scarce.

Hence, the need for effective data augmentation techniques for document images became evident when addressing challenges in fine-tuning models with limited datasets. A common concern is that typical image transformations, such as resizing, blurring, or changing background colors, can negatively impact text extraction accuracy.

We recognized the need for data augmentation techniques that preserve the integrity of the text while augmenting the dataset. Such data augmentation can facilitate the generation of new documents or the modification of existing ones, while preserving their text quality.

Introduction

To address this need, we introduce a new data augmentation pipeline developed in collaboration with Albumentations AI. This pipeline handles both images and the text within them, providing a comprehensive solution for document images. This kind of data augmentation is multimodal because it modifies both the image content and the text annotations simultaneously.

As discussed in a previous blog post, our goal is to test the hypothesis that integrating augmentations on both text and images during pretraining of VLMs is effective. Detailed parameters and use case illustrations can be found in the Albumentations AI Documentation. Albumentations AI enables the dynamic design of these augmentations and their integration with other types of augmentations.

Method

To augment document images, we begin by randomly selecting lines within the document. A hyperparameter, fraction_range, controls the fraction of bounding boxes to be modified.
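The selection step can be pictured with a few lines of plain Python. This is only a sketch of the idea, not the library's implementation: the helper name `select_line_indices`, the uniform draw from the range, and the rounding rule are all assumptions made for illustration.

```python
import random

def select_line_indices(num_lines, fraction_range=(0.5, 0.8), rng=None):
    """Pick a random subset of line indices to modify.

    Assumption: the fraction is drawn uniformly from fraction_range and
    rounded down to a whole number of lines, keeping at least one line
    whenever the document has any.
    """
    rng = rng or random.Random()
    low, high = fraction_range
    fraction = rng.uniform(low, high)
    count = min(num_lines, max(1, int(num_lines * fraction)))
    # sample() picks distinct indices, so no line is selected twice
    return sorted(rng.sample(range(num_lines), count))

# Hypothetical document with 20 text lines
indices = select_line_indices(20, fraction_range=(0.5, 0.8), rng=random.Random(0))
print(len(indices), indices)
```

With 20 lines and a range of (0.5, 0.8), between 10 and 16 lines end up selected on each call.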

Next, we apply one of several text augmentation methods to the corresponding lines of text. These methods are commonly used in text generation tasks and include Random Insertion, Deletion, and Swap, and Stopword Replacement.
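To make these text operations concrete, here is a minimal word-level sketch in plain Python. It is written for illustration only and is not the code used inside Albumentations: the function names, the deletion probability `p`, the number of inserted words `n`, and the tiny stop word list are all assumptions.

```python
import random

def random_swap(words, rng):
    """Swap two randomly chosen word positions."""
    out = list(words)
    if len(out) < 2:
        return out
    i, j = rng.sample(range(len(out)), 2)
    out[i], out[j] = out[j], out[i]
    return out

def random_deletion(words, rng, p=0.2):
    """Drop each word with probability p, always keeping at least one."""
    if not words:
        return []
    kept = [w for w in words if rng.random() >= p]
    return kept or [rng.choice(words)]

def random_insertion(words, rng, stopwords, n=2):
    """Insert n randomly chosen stop words at random positions."""
    out = list(words)
    for _ in range(n):
        out.insert(rng.randint(0, len(out)), rng.choice(stopwords))
    return out

rng = random.Random(42)
line = "Brands recognize the importance of a generics ADF guidance to ensure".split()
stops = ["the", "of", "and", "is", "in"]  # small hypothetical stop word list

print(" ".join(random_swap(line, rng)))
print(" ".join(random_deletion(line, rng)))
print(" ".join(random_insertion(line, rng, stops)))
```

Each function returns a new list and leaves its input untouched, so the original text stays available next to the augmented one.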

After modifying the text, we black out the parts of the image where the text is inserted and inpaint them, using the original bounding box size as a proxy for the new text's font size. The font size can be specified with the parameter font_size_fraction_range, which determines the range for selecting the font size as a fraction of the bounding box height. Note that the modified text and corresponding bounding box can be retrieved and used for training. This process results in a dataset with semantically similar textual content and visually distorted images.
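As a rough illustration of how a font size could follow from the bounding box height, consider the sketch below. The helper `pick_font_size` is hypothetical, and the uniform draw and truncation to whole pixels are assumptions rather than the library's documented behavior.

```python
import random

def pick_font_size(bbox, image_height, font_size_fraction_range=(0.8, 0.9), rng=None):
    """Return a font size in pixels for a normalized (x_min, y_min, x_max, y_max) box.

    Assumption: the fraction is drawn uniformly from the range and the
    result is truncated to an integer number of pixels (minimum 1).
    """
    rng = rng or random.Random()
    _, y_min, _, y_max = bbox
    box_height_px = (y_max - y_min) * image_height
    fraction = rng.uniform(*font_size_fraction_range)
    return max(1, int(box_height_px * fraction))

# A box covering 8% of a 1000-pixel-tall page is about 80 pixels high
size = pick_font_size((0.1, 0.4, 0.5, 0.48), image_height=1000, rng=random.Random(0))
print(size)
```

Tying the size to the box height keeps the re-rendered text roughly as large as the text it replaces, whatever the page resolution.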

Main Features of the TextImage Augmentation

The library can be used for two main purposes:

- Inserting any text on the image: This feature lets you overlay text on document images, effectively generating synthetic data. By using any random image as a background and rendering completely new text, you can create diverse training samples. A similar technique, called SynthDOG, was introduced in the OCR-free document understanding transformer.

- Inserting augmented text on the image: This includes the following text augmentations:

  - Random deletion: Randomly removes words from the text.

  - Random swapping: Swaps words within the text.

  - Stop words insertion: Inserts common stop words into the text.

Combining these augmentations with other image transformations from Albumentations allows for simultaneous modification of images and text. You can retrieve the augmented text as well.

Note: The initial version of the data augmentation pipeline, presented in this repo, included synonym replacement. It was removed in this version because it caused significant time overhead.

Installation

!pip install -U pillow

!pip install albumentations

!pip install nltk

import albumentations as A

import cv2

from matplotlib import pyplot as plt

import json

import nltk

nltk.download('stopwords')

from nltk.corpus import stopwords

Visualization

def visualize(image):
    # Show the image at a large size with the axes hidden
    plt.figure(figsize=(20, 15))
    plt.axis('off')
    plt.imshow(image)

Load data

Note that for this type of augmentation you can use the IDL and PDFA datasets. They provide the bounding boxes of the lines that you want to modify.

For this tutorial, we will focus on a sample from the IDL dataset.

bgr_image = cv2.imread("examples/original/fkhy0236.tif")

image = cv2.cvtColor(bgr_image, cv2.COLOR_BGR2RGB)

with open("examples/original/fkhy0236.json") as f:
    labels = json.load(f)

font_path = "/usr/share/fonts/truetype/liberation/LiberationSerif-Regular.ttf"

visualize(image)

We need to preprocess the data correctly, because the input format for the bounding boxes is normalized Pascal VOC. Hence, we build the metadata as follows:

page = labels['pages'][0]

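def prepare_metadata(page: dict, image_height: int, image_width: int) -> list:
    # The boxes are already normalized, so the image size is not used here.
    metadata = []
    for text, box in zip(page['text'], page['bbox']):
        # Source boxes are (left, top, width, height); convert them to
        # (x_min, y_min, x_max, y_max)
        left, top, width_norm, height_norm = box
        metadata.append({
            "bbox": [left, top, left + width_norm, top + height_norm],
            "text": text
        })
    return metadata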

image_height, image_width = image.shape[:2]

metadata = prepare_metadata(page, image_height, image_width)
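Before passing the metadata on, it can help to check that every box really is in normalized (x_min, y_min, x_max, y_max) form. The helper below is a hypothetical sanity check, not part of Albumentations. It assumes all coordinates lie in [0, 1] and converts a box to pixel coordinates so that it can be compared against the page by eye.

```python
def to_pixel_box(bbox, image_width, image_height):
    """Validate a normalized Pascal VOC box and convert it to pixel coordinates."""
    x_min, y_min, x_max, y_max = bbox
    if not (0.0 <= x_min < x_max <= 1.0 and 0.0 <= y_min < y_max <= 1.0):
        raise ValueError(f"not a normalized (x_min, y_min, x_max, y_max) box: {bbox}")
    return (
        round(x_min * image_width),
        round(y_min * image_height),
        round(x_max * image_width),
        round(y_max * image_height),
    )

# Hypothetical record in the same shape as the metadata entries above
sample = {"bbox": [0.1, 0.2, 0.5, 0.25], "text": "example line"}
print(to_pixel_box(sample["bbox"], image_width=2000, image_height=1000))
```

A box that was accidentally left in (left, top, width, height) form will often fail this check, because its third or fourth value ends up smaller than the first or second.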

Random Swap

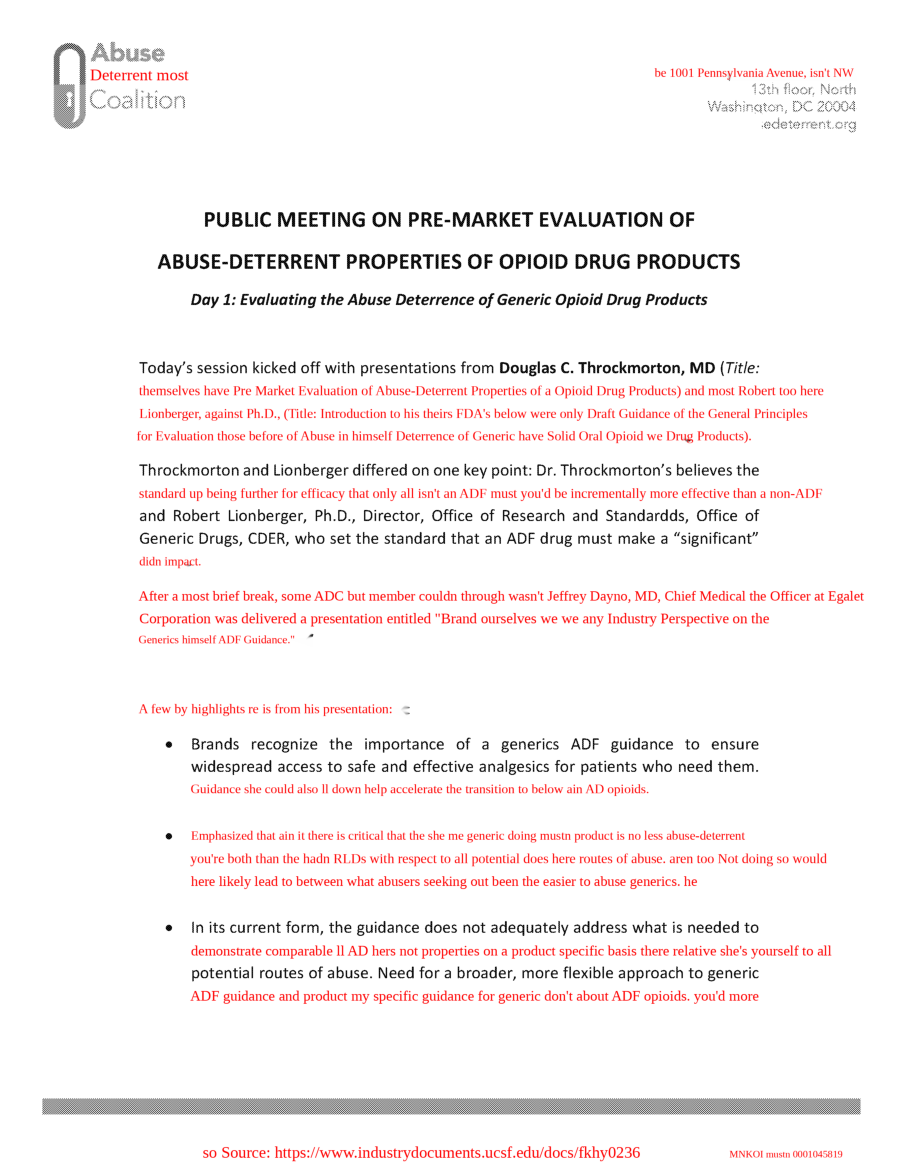

transform = A.Compose([A.TextImage(font_path=font_path, p=1, augmentations=["swap"], clear_bg=True, font_color = 'red', fraction_range = (0.5,0.8), font_size_fraction_range=(0.8, 0.9))])

transformed = transform(image=image, textimage_metadata=metadata)

visualize(transformed["image"])

Random Deletion

transform = A.Compose([A.TextImage(font_path=font_path, p=1, augmentations=["deletion"], clear_bg=True, font_color = 'red', fraction_range = (0.5,0.8), font_size_fraction_range=(0.8, 0.9))])

transformed = transform(image=image, textimage_metadata=metadata)

visualize(transformed['image'])

Random Insertion

In random insertion, we insert random words or phrases into the text. In this case, we use stop words: common words in a language that are often ignored or filtered out during natural language processing (NLP) tasks because they carry less meaningful information than other words. Examples of stop words include “is,” “the,” “in,” “and,” “of,” etc.

stops = stopwords.words('english')

transform = A.Compose([A.TextImage(font_path=font_path, p=1, augmentations=["insertion"], stopwords = stops, clear_bg=True, font_color = 'red', fraction_range = (0.5,0.8), font_size_fraction_range=(0.8, 0.9))])

transformed = transform(image=image, textimage_metadata=metadata)

visualize(transformed['image'])

Can we combine with other transformations?

Let's define a complex transformation pipeline using A.Compose, which includes text insertion with specified font properties and stopwords, Planckian jitter, and affine transformations. First, with A.TextImage we insert text into the image using the specified font properties, with a cleared background (clear_bg=True) and red font color. The fraction and size of the text to be inserted are also specified.

Then, with A.PlanckianJitter we alter the color balance of the image. Finally, using A.Affine we apply affine transformations, which can include scaling, rotating, and translating the image.

transform_complex = A.Compose([
    A.TextImage(font_path=font_path, p=1, augmentations=["insertion"], stopwords=stops, clear_bg=True, font_color='red', fraction_range=(0.5, 0.8), font_size_fraction_range=(0.8, 0.9)),
    A.PlanckianJitter(p=1),
    A.Affine(p=1)
])

transformed = transform_complex(image=image, textimage_metadata=metadata)

visualize(transformed["image"])

To extract information about the bounding box indices where text was altered, together with the corresponding transformed text, run the following cell. This data can be used for training models to recognize and process text changes in images.

transformed['overlay_data']

[{'bbox_coords': (375, 1149, 2174, 1196),

'text': "Lionberger, Ph.D., (Title: if Introduction to won i FDA's yourselves Draft Guidance once of the wasn't General Principles",

'original_text': "Lionberger, Ph.D., (Title: Introduction to FDA's Draft Guidance of the General Principles",

'bbox_index': 12,

'font_color': 'red'},

{'bbox_coords': (373, 1677, 2174, 1724),

'text': "After off needn't were a brief break, ADC member mustn Jeffrey that Dayno, MD, Chief Medical Officer for at their Egalet",

'original_text': 'After a brief break, ADC member Jeffrey Dayno, MD, Chief Medical Officer at Egalet',

'bbox_index': 19,

'font_color': 'red'},

{'bbox_coords': (525, 2109, 2172, 2156),

'text': 'll Brands recognize the has importance and of a generics ADF guidance to ensure which after',

'original_text': 'Brands recognize the importance of a generics ADF guidance to ensure',

'bbox_index': 23,

'font_color': 'red'}]
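One way to turn this output into training material is to pair each rendered line with its source text. The sketch below assumes only the keys visible in the output above (`bbox_coords`, `text`, `original_text`, `bbox_index`). The record layout and the helper name `to_training_records` are hypothetical choices for illustration.

```python
# One entry copied from the output above, so the example runs on its own
overlay_data = [
    {
        "bbox_coords": (525, 2109, 2172, 2156),
        "text": "ll Brands recognize the has importance and of a generics ADF guidance to ensure which after",
        "original_text": "Brands recognize the importance of a generics ADF guidance to ensure",
        "bbox_index": 23,
        "font_color": "red",
    },
]

def to_training_records(overlay_data):
    """Pair the text rendered on the image with the text it replaced."""
    records = []
    for item in overlay_data:
        x_min, y_min, x_max, y_max = item["bbox_coords"]
        records.append({
            "line_index": item["bbox_index"],
            "bbox_pixels": [x_min, y_min, x_max, y_max],
            "rendered_text": item["text"],         # what the image now shows
            "source_text": item["original_text"],  # what the line said before
        })
    return records

for record in to_training_records(overlay_data):
    print(record["line_index"], record["rendered_text"])
```

Keeping both strings makes it possible to supervise a model either on what the augmented image actually shows or on the difference from the original line.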

Synthetic Data Generation

This augmentation method can be extended to the generation of synthetic data, as it enables the rendering of text on any background or template.

template = cv2.imread('template.png')

image_template = cv2.cvtColor(template, cv2.COLOR_BGR2RGB)

transform = A.Compose([A.TextImage(font_path=font_path, p=1, clear_bg=True, font_color = 'red', font_size_fraction_range=(0.5, 0.7))])

metadata = [{
    "bbox": [0.1, 0.4, 0.5, 0.48],
    "text": "Some smart text goes here.",
}, {
    "bbox": [0.1, 0.5, 0.5, 0.58],
    "text": "Hope you discover it helpful.",
}]

transformed = transform(image=image_template, textimage_metadata=metadata)

visualize(transformed['image'])
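When generating many synthetic pages, writing the boxes by hand quickly becomes tedious. The sketch below stacks a list of strings into evenly spaced normalized boxes. The helper `layout_lines` and its default spacing values are assumptions chosen to match the two hand-written boxes above, and nothing here comes from the library itself.

```python
def layout_lines(lines, left=0.1, right=0.5, top=0.4, line_height=0.08, gap=0.02):
    """Stack text lines into normalized (x_min, y_min, x_max, y_max) boxes."""
    metadata = []
    for i, text in enumerate(lines):
        y_min = round(top + i * (line_height + gap), 4)
        y_max = round(y_min + line_height, 4)
        if y_max > 1.0:
            break  # stop once the next line would fall off the page
        metadata.append({"bbox": [left, y_min, right, y_max], "text": text})
    return metadata

synthetic_metadata = layout_lines([
    "Some smart text goes here.",
    "A second line of synthetic text.",
])
for entry in synthetic_metadata:
    print(entry)
```

The resulting list has the same shape as the metadata used throughout this tutorial, so it can be passed as textimage_metadata in the same way.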

Conclusion

In collaboration with Albumentations AI, we introduced TextImage Augmentation, a multimodal technique that modifies document images along with their text. By combining text augmentations such as Random Insertion, Deletion, Swap, and Stopword Replacement with image modifications, this pipeline allows for the generation of diverse training samples.

For detailed parameters and use case illustrations, refer to the Albumentations AI Documentation. We hope you find these augmentations useful for enhancing your document image processing workflows.

References

@inproceedings{kim2022ocr,
  title={Ocr-free document understanding transformer},
  author={Kim, Geewook and Hong, Teakgyu and Yim, Moonbin and Nam, JeongYeon and Park, Jinyoung and Yim, Jinyeong and Hwang, Wonseok and Yun, Sangdoo and Han, Dongyoon and Park, Seunghyun},
  booktitle={European Conference on Computer Vision},
  pages={498--517},
  year={2022},
  organization={Springer}
}