It's been two weeks since the release of DeepSeek R1 and just a week since we started the open-r1 project to replicate the missing pieces, namely the training pipeline and the synthetic data. This post summarizes:

- the progress of Open-R1 in replicating the DeepSeek-R1 pipeline and dataset

- what we learned about DeepSeek-R1 and discussions around it

- cool projects the community has built since the release of DeepSeek-R1

It should serve both as an update on the project and as a collection of interesting resources around DeepSeek-R1.

Progress after 1 Week

Let's start by taking a look at the progress we made on Open-R1. We started Open-R1 only one week ago, and people across the teams as well as the community came together to work on it, so we have some progress to report.

Evaluation

The first step in reproduction is to verify that we can match the evaluation scores. We are able to reproduce DeepSeek's reported results on the MATH-500 benchmark:

| Model | MATH-500 (HF lighteval) | MATH-500 (DeepSeek Reported) |
|---|---|---|
| DeepSeek-R1-Distill-Qwen-1.5B | 81.6 | 83.9 |
| DeepSeek-R1-Distill-Qwen-7B | 91.8 | 92.8 |
| DeepSeek-R1-Distill-Qwen-14B | 94.2 | 93.9 |
| DeepSeek-R1-Distill-Qwen-32B | 95.0 | 94.3 |
| DeepSeek-R1-Distill-Llama-8B | 85.8 | 89.1 |
| DeepSeek-R1-Distill-Llama-70B | 93.4 | 94.5 |

You can find the instructions to run these evaluations in the open-r1 repository.

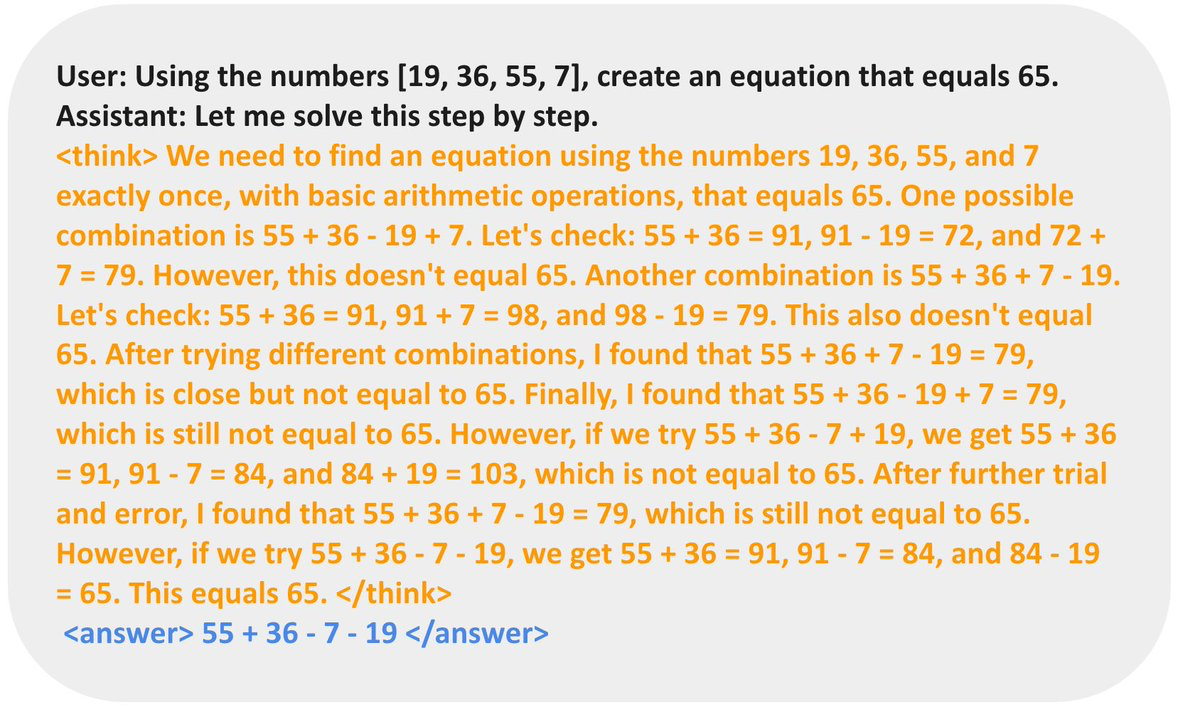

One observation we have made is the sheer length of the generations from the DeepSeek models, which makes even evaluating them challenging. Here we show DeepSeek-R1 response lengths in the OpenThoughts dataset:

The distribution of R1's responses shows that they are very long on average, with the average response being 6,000 tokens long and some responses containing more than 20,000 tokens. It's worth noting that the average page contains ~500 words and one token is on average slightly less than a word, which means many responses are over 10 pages long. (src: https://x.com/gui_penedo/status/1884953463051649052)
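The page estimate can be made explicit with a small Python helper. The conversion factors are assumptions: ~0.75 words per token (a common rule of thumb for English, consistent with a token being slightly less than a word) and ~500 words per page.

```python
def tokens_to_pages(num_tokens: int, words_per_token: float = 0.75,
                    words_per_page: int = 500) -> float:
    """Rough page count for a response of num_tokens tokens.

    Both conversion factors are assumptions: ~0.75 words per token is a
    common rule of thumb for English text, and ~500 words fill one page.
    """
    words = num_tokens * words_per_token
    return words / words_per_page


for tokens in (6_000, 20_000):
    print(f"{tokens:>6} tokens ~ {tokens_to_pages(tokens):.0f} pages")
```

Under these assumptions, the average 6,000-token response comes to about 9 pages and the 20,000-token tail to about 30 pages.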

The length of the responses will make GRPO training challenging, as we will have to generate long completions, which will require a significant proportion of GPU memory to store the activations/gradients for the optimization step.
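A back-of-the-envelope sketch of why long completions are costly: the policy's output logits alone scale with completion length times vocabulary size times the number of completions sampled per prompt. The ~150k-token vocabulary, fp32 logits and a group of 8 completions are illustrative assumptions, not the Open-R1 configuration. The estimate also leaves out activations, KV cache, gradients and optimizer state, which add considerably more.

```python
def logits_memory_gib(completion_len: int, vocab_size: int = 150_000,
                      group_size: int = 8, bytes_per_value: int = 4) -> float:
    """Memory (GiB) needed just to hold the logits of one group of completions.

    Assumptions (illustrative only): ~150k-token vocabulary, fp32 logits,
    8 sampled completions per prompt. Activations, KV cache, gradients and
    optimizer state are NOT included.
    """
    num_bytes = completion_len * vocab_size * group_size * bytes_per_value
    return num_bytes / 2**30


for length in (1_000, 6_000, 20_000):
    print(f"{length:>6} tokens -> {logits_memory_gib(length):7.1f} GiB of logits")
```

The cost grows linearly with completion length, so the long tail of 20,000-token responses dominates the memory budget.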

To share our progress publicly, we have created an open-r1 evaluation leaderboard so the community can follow our reproduction efforts (the Space is here):

Training Pipeline

Following the release of Open R1, GRPO (Group Relative Policy Optimization) was integrated into the latest TRL release (version 0.14). This integration enables training any model with one or multiple reward functions or models. The GRPO implementation integrates with DeepSpeed ZeRO 1/2/3 for parallelized training that scales to many GPUs, and uses vLLM for fast generation, which is the main bottleneck in online training methods.
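GRPO gets its name from how it avoids a learned value model. For each prompt it samples a group of completions, scores them with the reward function(s), and uses each completion's reward relative to the rest of its group as the advantage. The following is a minimal, dependency-free sketch of that normalization step, following the formulation in the DeepSeekMath paper that introduced GRPO. It is not TRL's code, and TRL may differ in details such as the epsilon term, the type of standard deviation, or how multiple rewards are combined. Summing the rewards here is an assumption of the sketch.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-4) -> list[float]:
    """Normalize the rewards of one group of completions for the same prompt.

    Each advantage is how far a completion's reward is above or below the
    group mean, in units of the group's (population) standard deviation.
    eps avoids division by zero when all completions get the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def combine_rewards(per_func_rewards: list[list[float]]) -> list[float]:
    """Sum the scores of several reward functions per completion (assumption)."""
    return [sum(scores) for scores in zip(*per_func_rewards)]


# Four sampled completions for one prompt, scored by two reward functions.
length_rewards = [-3.0, 0.0, -10.0, -1.0]
format_rewards = [1.0, 1.0, 0.0, 0.0]
total_rewards = combine_rewards([length_rewards, format_rewards])
advantages = group_relative_advantages(total_rewards)
print([round(a, 2) for a in advantages])
```

Completions that beat their siblings get positive advantages and the rest get negative ones, so no separate critic network is needed to estimate a baseline.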

```python
from datasets import load_dataset
from trl import GRPOConfig, GRPOTrainer

dataset = load_dataset("trl-lib/tldr", split="train")

# Toy reward: completions whose length is close to 20 characters score highest
def reward_len(completions, **kwargs):
    return [-abs(20 - len(completion)) for completion in completions]

training_args = GRPOConfig(output_dir="Qwen2-0.5B-GRPO", logging_steps=10)
trainer = GRPOTrainer(
    model="Qwen/Qwen2-0.5B-Instruct",
    reward_funcs=reward_len,
    args=training_args,
    train_dataset=dataset,
)
trainer.train()
```
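The `reward_len` function optimizes a toy objective. For reasoning, the R1 report describes rule-based rewards: an accuracy reward that checks the final answer (e.g. given in a box) and a format reward that checks the reasoning is enclosed in `<think>` tags. Below is a minimal sketch of both as plain Python functions with the same `(completions, **kwargs)` signature as `reward_len`. The regexes, the `\boxed{}` answer convention and the `solution` column name are assumptions for illustration, not the Open-R1 implementation. The accuracy reward also assumes the trainer forwards extra dataset columns to reward functions as keyword arguments, so check the TRL documentation for your version.

```python
import re

THINK_FORMAT = re.compile(r"^<think>.*?</think>\s*\S", re.DOTALL)
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def format_reward(completions, **kwargs):
    """1.0 if the completion starts with <think>...</think> followed by an answer."""
    return [1.0 if THINK_FORMAT.match(c) else 0.0 for c in completions]


def extract_boxed(text):
    """Return the content of the last \\boxed{...} (no nested braces), or None."""
    matches = BOXED.findall(text)
    return matches[-1].strip() if matches else None


def accuracy_reward(completions, solution, **kwargs):
    """1.0 if the boxed answer exactly matches the reference answer, else 0.0.

    `solution` is a hypothetical dataset column holding reference answers.
    """
    rewards = []
    for completion, reference in zip(completions, solution):
        predicted = extract_boxed(completion)
        rewards.append(1.0 if predicted is not None and predicted == reference.strip() else 0.0)
    return rewards
```

Both could then be passed together, e.g. `reward_funcs=[format_reward, accuracy_reward]`. A real math verifier would also treat equivalent answers such as `0.5` and `\frac{1}{2}` as equal, which this exact string match does not.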

There are still some limitations regarding high memory usage, and work is under way to profile and reduce them.

Synthetic Data Generation

One of the most exciting findings of the R1 report was that the main model can be used to generate synthetic reasoning traces, and smaller models fine-tuned on this dataset see similar performance gains to the main model. So naturally we want to re-create the synthetic reasoning dataset as well, so that the community can fine-tune other models on it.
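Distillation data of this kind is commonly stored as chat-style examples (a `messages` list of role/content pairs) that a supervised fine-tuning trainer can consume. The sketch below turns one generated record into that shape. The field names (`problem`, `reasoning`, `answer`) and the choice to keep the teacher's trace inside `<think>` tags in the assistant turn are assumptions for illustration, not the schema of the Open-R1 dataset.

```python
def to_chat_example(record: dict) -> dict:
    """Convert one synthetic R1 record into a chat-format SFT example.

    Field names are hypothetical. The teacher's reasoning trace is kept in
    the assistant turn so the student learns to reason before answering.
    """
    assistant_text = f"<think>\n{record['reasoning']}\n</think>\n\n{record['answer']}"
    return {
        "messages": [
            {"role": "user", "content": record["problem"]},
            {"role": "assistant", "content": assistant_text},
        ]
    }


example = to_chat_example({
    "problem": "What is 2 + 3?",
    "reasoning": "Adding 2 and 3 gives 5.",
    "answer": "The answer is 5.",
})
print(example["messages"][1]["content"])
```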

With a model as big as R1, the main challenge is scaling up generation efficiently and quickly. We spent a week tinkering with various setups and configurations.

The model fits on two 8xH100 nodes, so naturally we started experimenting with that setup and used vLLM as the inference server. However, we quickly noticed that this configuration is not ideal: the throughput is sub-optimal and only allows for 8 parallel requests, since the GPU KV cache fills up too quickly. When the cache fills up, the requests that use a lot of cache are preempted, and if the config uses PreemptionMode.RECOMPUTE, those requests are scheduled again later when more VRAM is available.

We then switched to a setup with 4x 8xH100 nodes, so 32 GPUs in total. This leaves enough spare VRAM for 32 requests running in parallel, with barely any of them getting rescheduled due to 100% cache utilization.
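A rough calculation shows why doubling the number of nodes can more than double the number of concurrent requests. The weights take a fixed chunk of memory, so only what is left over is available for the KV cache. The sketch assumes 80 GB per H100 and ~671B parameters stored at roughly 1 byte each (FP8). It ignores activation buffers, CUDA graphs and inference-server headroom, so the absolute numbers are optimistic.

```python
def spare_vram_gb(num_gpus: int, gb_per_gpu: float = 80.0,
                  params_billion: float = 671.0, bytes_per_param: float = 1.0) -> float:
    """Memory left for the KV cache after loading the weights (very rough).

    Assumptions: 80 GB H100s, ~671B parameters at ~1 byte each (FP8).
    Activations, CUDA graphs and framework overhead are ignored.
    """
    total_gb = num_gpus * gb_per_gpu
    weights_gb = params_billion * bytes_per_param  # 1e9 params * bytes / 1e9 = GB
    return total_gb - weights_gb


two_nodes = spare_vram_gb(16)   # 2 x 8xH100
four_nodes = spare_vram_gb(32)  # 4 x 8xH100
print(f"2 nodes: {two_nodes:.0f} GB spare, 4 nodes: {four_nodes:.0f} GB spare "
      f"({four_nodes / two_nodes:.1f}x)")
```

Under these assumptions, doubling the GPUs roughly triples the memory left for the KV cache (about 609 GB vs 1,889 GB). That points in the same direction as the jump from 8 to 32 parallel requests, though the real ratio depends on the overheads this sketch leaves out.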

Originally, we queried the vLLM servers with batches of requests, but quickly noticed that stragglers in the batches caused GPU utilization to fluctuate, since a new batch would only start processing once the last sample of the previous batch had finished. Switching from batched inference to streaming helped stabilize GPU utilization significantly:

It only required changing the code that sends requests to the vLLM servers. The code for batched inference:

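```python
import asyncio

async def run_batched(dataset):
    # Send requests in fixed batches of 500; a batch must fully finish before the
    # next one starts, so a single slow request leaves the GPUs partly idle.
    # batch_generator and send_requests come from the inference code (not shown).
    for batch in batch_generator(dataset, bs=500):
        active_tasks = set()
        for row in batch:
            task = asyncio.create_task(send_requests(row))
            active_tasks.add(task)
        if active_tasks:
            await asyncio.gather(*active_tasks)
```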

The new code for streaming requests:

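```python
import asyncio

async def run_streaming(dataset):
    # Keep up to 500 requests in flight and start a new one as soon as any finishes.
    # send_requests is the request coroutine from the inference code (not shown).
    active_tasks = set()
    for row in dataset:
        while len(active_tasks) >= 500:
            done, active_tasks = await asyncio.wait(
                active_tasks,
                return_when=asyncio.FIRST_COMPLETED
            )
        task = asyncio.create_task(send_requests(row))
        active_tasks.add(task)
    if active_tasks:
        await asyncio.gather(*active_tasks)
```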
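To see the streaming pattern without a vLLM server, here is a self-contained simulation. `fake_send_request` is a hypothetical stand-in that sleeps for a random "generation time" instead of calling the server. A new request starts as soon as any in-flight one finishes, so the number of requests in flight stays at the limit instead of draining toward zero at the end of every batch. Unlike the code above, this version also collects the results of finished tasks.

```python
import asyncio
import random


async def fake_send_request(row: int) -> int:
    # Hypothetical stand-in for a request to a vLLM server: wait for a random
    # "generation time", then echo the row back.
    await asyncio.sleep(random.uniform(0.001, 0.01))
    return row


async def stream_requests(rows, max_in_flight: int = 500):
    """Keep at most max_in_flight requests running; refill a slot as soon as one frees up."""
    results = []
    in_flight = set()
    peak = 0
    for row in rows:
        while len(in_flight) >= max_in_flight:
            # Wake up when *any* request finishes, not when a whole batch does.
            done, in_flight = await asyncio.wait(
                in_flight, return_when=asyncio.FIRST_COMPLETED
            )
            results.extend(task.result() for task in done)
        in_flight.add(asyncio.create_task(fake_send_request(row)))
        peak = max(peak, len(in_flight))
    if in_flight:
        done, _ = await asyncio.wait(in_flight)
        results.extend(task.result() for task in done)
    return results, peak


results, peak = asyncio.run(stream_requests(range(100), max_in_flight=8))
print(f"completed {len(results)} requests, at most {peak} in flight")
```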

We are now generating at a fairly constant rate, but may still explore further, for instance whether swapping to the CPU cache is a better strategy than recomputation when long queries get preempted.

The current inference code can be found here.

Outreach

There has been wide interest in open-r1, including from the media, so various team members have been in the news over the past week:

Other mentions: Washington Post, Financial Times, Financial Times, Fortune, Fortune, The Verge, Financial Review, TechCrunch, Die Zeit, Financial Times, New York Times, The Wall Street Journal, EuroNews, Barrons, New York Times, Vox, Nature, SwissInfo, Handelsblatt, Business Insider, IEEE Spectrum, MIT Tech Review, Le Monde.

What have we learned about DeepSeek-R1?

While the community is still digesting DeepSeek-R1's results and report, DeepSeek has captured broader public attention just two weeks after its release.

Responses to R1

After a comparatively calm first week post-release, the second week saw significant market reactions, prompting responses from multiple AI research labs:

In parallel, several companies worked on making the DeepSeek models available through various platforms (non-exhaustive list):

DeepSeek V3 Training Compute

There has been a lot of interest in the claimed cost of training V3/R1. While the exact number probably doesn't matter much, people worked on back-of-the-envelope calculations to verify the order of magnitude. TL;DR: the numbers seem to be generally in the right order of magnitude, as seen in these discussions:

As many groups are working on reproducing the training pipeline, we’ll get more evidence on the possible training efficiency for the model.
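One common way to sanity-check such claims is the ~6·N·D rule of thumb for training FLOPs (N parameters, D tokens), applied to the *activated* parameters of a mixture-of-experts model. The sketch below uses two figures from the DeepSeek-V3 technical report: 37B activated parameters and 14.8T pre-training tokens. The peak throughput (~989 dense BF16 TFLOPS, the H100 SXM figure, treated here as the H800's) and the 35% model FLOPs utilization are assumptions. The rule ignores attention FLOPs and other overheads, and FP8 training would change the effective peak, so this is an order-of-magnitude check only.

```python
def training_gpu_hours(active_params: float, tokens: float,
                       peak_flops_per_gpu: float, mfu: float) -> float:
    """Estimate GPU-hours with the ~6*N*D training-FLOPs rule of thumb."""
    total_flops = 6 * active_params * tokens
    effective_flops_per_sec = peak_flops_per_gpu * mfu
    return total_flops / effective_flops_per_sec / 3600


hours = training_gpu_hours(
    active_params=37e9,         # activated params per token (DeepSeek-V3 report)
    tokens=14.8e12,             # pre-training tokens (DeepSeek-V3 report)
    peak_flops_per_gpu=989e12,  # assumed dense BF16 peak of an H100-class GPU
    mfu=0.35,                   # assumed model FLOPs utilization
)
print(f"~{hours / 1e6:.1f}M GPU-hours")
```

With these assumptions, the estimate comes out around 2.6M GPU-hours. That is the same order of magnitude as the roughly 2.8M H800 GPU-hours the V3 report gives for the full training run. The result scales inversely with the assumed utilization.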

Training Dataset

Last week, speculation surfaced that DeepSeek may have been using OpenAI outputs to train its models. See for example the Financial Times. However, it's unclear at this point what the consequences of these allegations will be.

Community

The open-source community has been extremely active around DeepSeek-R1, and many people have started building interesting projects around the model.

Projects

There have been a number of projects that try to reproduce the basic learning mechanics at a smaller scale, so you can test the basic learning principles at home.

Datasets

The community has been busy with a number of dataset efforts related to R1. Some highlights include:

- bespokelabs/Bespoke-Stratos-17k: a replication of the Berkeley Sky-T1 data pipeline that uses DeepSeek-R1 to create a dataset of questions, reasoning traces, and answers. This data was subsequently used to fine-tune 7B and 32B Qwen models using a distillation approach similar to the R1 paper.

- open-thoughts/OpenThoughts-114k: an "Open synthetic reasoning dataset with 114k high-quality examples covering math, science, code, and puzzles". Part of the Open Thoughts effort.

- cognitivecomputations/dolphin-r1: an 800k-sample dataset with completions from DeepSeek-R1 and Gemini Flash, and 200k samples from Dolphin chat, with the goal of helping train R1-style models.

- ServiceNow-AI/R1-Distill-SFT: Currently at 17,000 samples, an effort by the ServiceNow Language Models lab to create data to support Open-R1 efforts.

- NovaSky-AI/Sky-T1_data_17k: a dataset used to train Sky-T1-32B-Preview. This dataset was part of a fairly early effort to replicate o1-style reasoning. The model trained on this dataset cost less than $450 to train. This blog post goes into more detail.

- Magpie-Align/Magpie-Reasoning-V2-250K-CoT-Deepseek-R1-Llama-70B: This dataset extends Magpie, an approach to generating instruction data without starting prompts, to include reasoning in the responses. The instructions are generated by Llama 3.1 70B Instruct and Llama 3.3 70B Instruct, and the responses are generated by DeepSeek-R1-Distill-Llama-70B.

This list only covers a small number of reasoning- and problem-solving-related datasets on the Hub. We're excited to see what other datasets the community builds in the coming weeks.

What’s next?

We are just getting started and want to finish the training pipeline, try it on smaller models, and use the scaled-up inference pipeline to generate high-quality datasets. If you want to contribute, check out the open-r1 repository on GitHub or follow the Hugging Face open-r1 org.
