In trying to keep up with (or ahead of) the competition, model releases proceed at a rapid clip: GPT-5.2 represents OpenAI's third major model release since August. GPT-5 launched that month with a new routing system that toggles between instant-response and simulated reasoning modes, though users complained about responses that felt cold and clinical. November's GPT-5.1 update added eight preset "personality" options and focused on making the system more conversational.

Numbers go up

Oddly, although the GPT-5.2 model release is ostensibly a response to Gemini 3's performance, OpenAI chose not to list any benchmarks on its promotional website comparing the two models. Instead, the official blog post focuses on GPT-5.2's improvements over its predecessors and its performance on OpenAI's latest GDPval benchmark, which attempts to measure professional knowledge work tasks across 44 occupations.

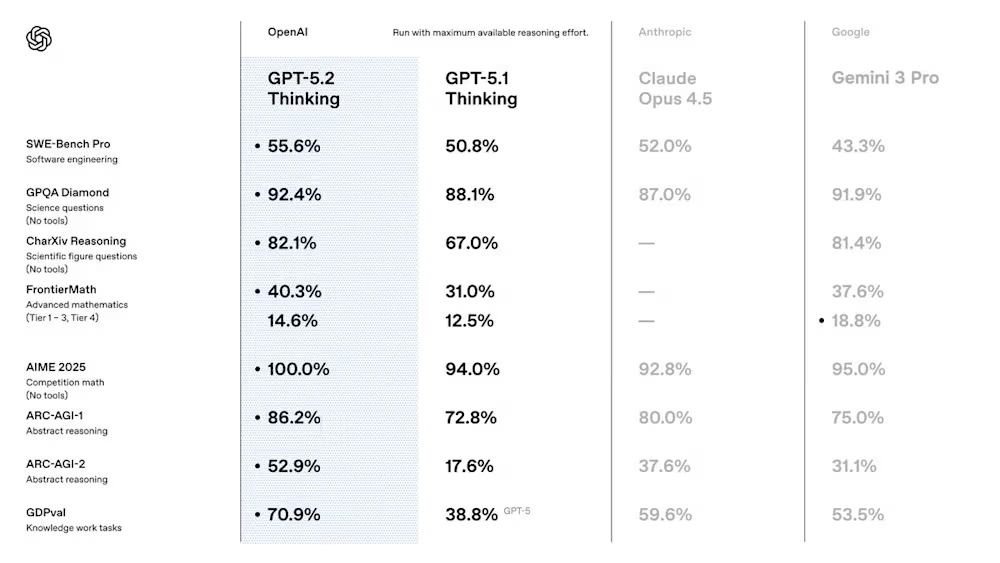

During the press briefing, OpenAI did share some competitive benchmark comparisons that included Gemini 3 Pro and Claude Opus 4.5, but it pushed back on the narrative that GPT-5.2 was rushed to market in response to Google. "It is important to note this has been in the works for many, many months," Simo told reporters, although choosing when to release it, we'll note, is a strategic decision.

According to the shared numbers, GPT-5.2 Thinking scored 55.6 percent on SWE-Bench Pro, a software engineering benchmark, compared to 43.3 percent for Gemini 3 Pro and 52.0 percent for Claude Opus 4.5. On GPQA Diamond, a graduate-level science benchmark, GPT-5.2 scored 92.4 percent versus Gemini 3 Pro's 91.9 percent.

OpenAI says GPT-5.2 Thinking beats or ties "human professionals" on 70.9 percent of tasks in the GDPval benchmark (compared to 53.3 percent for Gemini 3 Pro). The company also claims the model completes these tasks at more than 11 times the speed and at less than 1 percent of the cost of human experts.

GPT-5.2 Thinking also reportedly generates responses with 38 percent fewer confabulations than GPT-5.1, according to Max Schwarzer, OpenAI's post-training lead, who told VentureBeat that the model "hallucinates substantially less" than its predecessor.

However, we always take benchmarks with a grain of salt because it's easy to present them in a way that's favorable to a company, especially when the science of measuring AI performance objectively hasn't quite caught up with corporate sales pitches for humanlike AI capabilities.

Independent benchmark results from researchers outside OpenAI will take time to arrive. In the meantime, if you use ChatGPT for work tasks, expect competent models with incremental improvements and somewhat better coding performance thrown in for good measure.