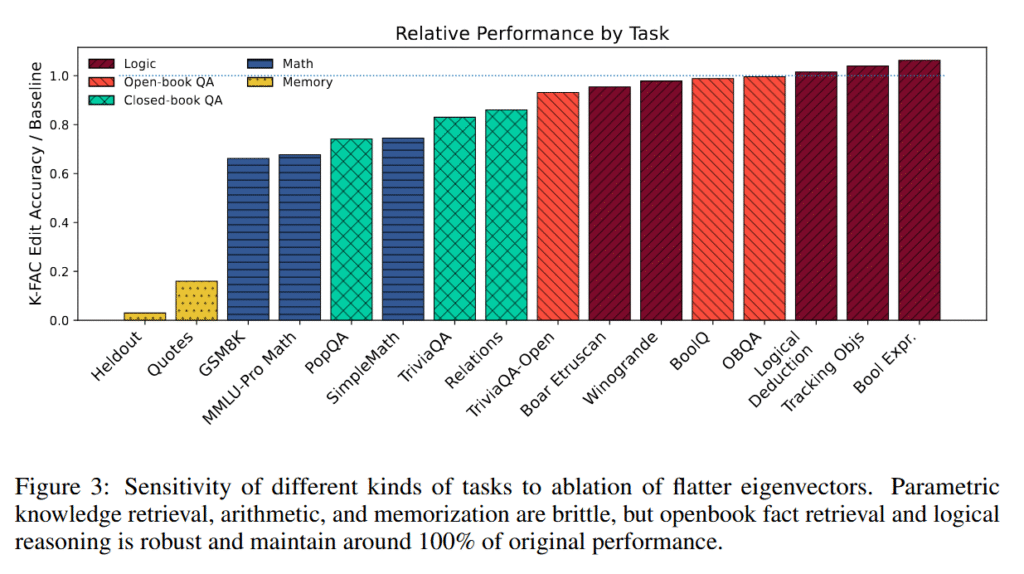

Mathematical operations and closed-book fact retrieval shared pathways with memorization, dropping to 66 to 86 percent of their original performance after editing. The researchers found arithmetic particularly brittle: even when models generated similar reasoning chains, they failed at the calculation step once low-curvature components were removed.

The team suggests this may be “because arithmetic problems themselves are memorized at the 7B scale, or because they require narrowly used directions to do precise calculations.” Open-book question answering, which relies on provided context rather than internal knowledge, proved most robust to the editing procedure, maintaining nearly full performance.

Curiously, the separation between mechanisms varied by type of information. Common facts such as country capitals barely changed after editing, while rare facts such as company CEOs dropped 78 percent. This suggests that models allocate distinct neural resources depending on how frequently information appears in training.

The K-FAC technique outperformed existing memorization removal methods without needing any training examples of memorized content. On unseen historical quotes, K-FAC achieved 16.1 percent memorization versus 60 percent for the previous best method, BalancedSubnet.
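As a rough illustration of what "removing low-curvature components" means, here is a minimal NumPy sketch for a single linear layer. It assumes the standard K-FAC approximation, in which a layer's curvature is factored into an input-activation covariance `A` and an output-gradient covariance `G`, so each weight direction's curvature is the product of one eigenvalue from each factor. The function name `kfac_low_curvature_edit` and the `keep_fraction` cutoff are hypothetical. The researchers' actual choice of layers, data, and retention threshold is not described here, so treat this as a sketch rather than their implementation.

```python
import numpy as np

def kfac_low_curvature_edit(W, acts, grads, keep_fraction=0.5):
    """Hypothetical sketch: keep only the highest-curvature weight directions.

    W:      (d_out, d_in) weight matrix of a linear layer y = W @ x
    acts:   (n, d_in) layer inputs x collected over some dataset
    grads:  (n, d_out) loss gradients with respect to the layer outputs y
    keep_fraction: assumed fraction of components to keep (illustrative only)
    """
    # K-FAC approximates the layer's curvature as the Kronecker product A (x) G
    A = acts.T @ acts / acts.shape[0]       # input covariance, (d_in, d_in)
    G = grads.T @ grads / grads.shape[0]    # gradient covariance, (d_out, d_out)

    # Both factors are symmetric positive semidefinite, so eigh applies
    lam_A, U_A = np.linalg.eigh(A)
    lam_G, U_G = np.linalg.eigh(G)

    # Coordinates of W in the Kronecker eigenbasis:
    # C[i, j] is the weight along (G-eigenvector i, A-eigenvector j)
    C = U_G.T @ W @ U_A
    # Under K-FAC, the curvature of component (i, j) is lam_G[i] * lam_A[j]
    curvature = np.outer(lam_G, lam_A)

    # Sort components by curvature and keep only the top k
    k = int(round(keep_fraction * curvature.size))
    order = np.argsort(curvature, axis=None)[::-1]
    mask = np.zeros(curvature.size, dtype=bool)
    mask[order[:k]] = True
    mask = mask.reshape(curvature.shape)

    # Zero the low-curvature components and rotate back to the original basis
    return U_G @ (C * mask) @ U_A.T
```

With `keep_fraction=1.0` the layer is returned unchanged, and lower values progressively zero out the flattest directions. In the researchers' framing, those flat directions are the narrowly used ones associated with memorization, while directions shared across many examples have higher curvature and survive the edit.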

Vision transformers showed similar patterns. When trained with intentionally mislabeled images, the models developed distinct pathways for memorizing flawed labels versus learning correct patterns. Removing memorization pathways restored 66.5 percent accuracy on previously mislabeled images.

Limits of memory removal

However, the researchers acknowledged that their technique isn’t perfect. Removed memories might return if the model receives more training, since other research has shown that current unlearning methods only suppress information rather than completely erasing it from the neural network’s weights. That means the “forgotten” content can be reactivated with just a few training steps targeting those suppressed areas.

The researchers also can’t fully explain why some abilities, like math, break so easily when memorization is removed. It’s unclear whether the model actually memorized all its arithmetic or whether math simply happens to use neural circuits similar to those used for memorization. Additionally, some sophisticated capabilities might look like memorization to their detection method even when they’re actually complex reasoning patterns. Finally, the mathematical tools they use to measure the model’s “landscape” can become unreliable at the extremes, though this doesn’t affect the actual editing process.

This article was updated on November 11, 2025 at 9:16 am to clarify a point about sorting weights by curvature.