Good morning, AI enthusiasts. While the AI world awaits the upcoming launch of OpenAI’s open-source model and GPT-5, Chinese labs continue to churn out the headlines.

New launches from Z.ai and Alibaba just raised the open-source bar again in both language and video models, continuing a relentless pace of development out East that’s reshaping the AI landscape faster than ever.

Note: We just opened multi-seat plans for our AI University. If you’re looking to build your team’s AI upskilling learning path, reach out here.

In today’s AI rundown:

- Z.ai’s new open-source powerhouse
- Microsoft’s ‘Copilot Mode’ for agentic browsing
- Replace any character voice in your videos
- Alibaba’s Wan2.2 pushes open-source video forward
- 4 new AI tools & 4 job opportunities

LATEST DEVELOPMENTS

Z.AI

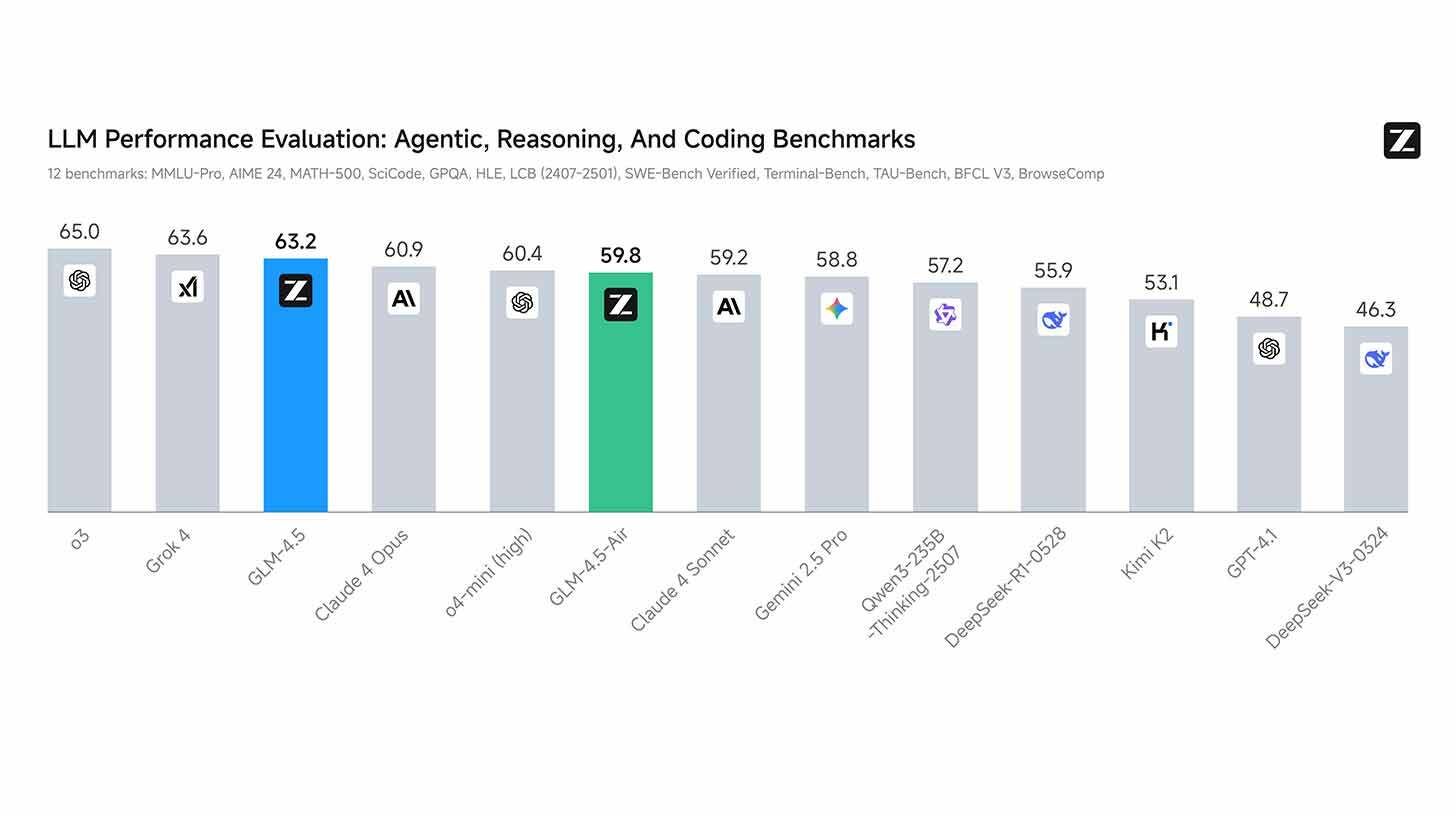

🤖 Z.ai’s new open-source powerhouse

Image source: Z.ai

The Rundown: Chinese startup Z.ai (formerly Zhipu) just released GLM-4.5, an open-source agentic AI model family that undercuts DeepSeek’s pricing while nearing the performance of leading models across reasoning, coding, and autonomous tasks.

The main points:

- GLM-4.5 combines reasoning, coding, and agentic abilities in a single 355B-parameter model, with hybrid thinking to balance speed against task difficulty.
- Z.ai claims GLM-4.5 is now the top open-source model worldwide, ranking just behind industry leaders o3 and Grok 4 in overall performance.
- The model excels in agentic tasks, beating top models like o3, Gemini 2.5 Pro, and Grok 4 on benchmarks while hitting a 90% success rate in tool use.
- Along with launching GLM-4.5 and GLM-4.5-Air with open weights, Z.ai also open-sourced its ‘slime’ training framework for others to build on.

Why it matters: Qwen, Kimi, DeepSeek, MiniMax, Z.ai… The list goes on and on. Chinese labs are putting out better and better open models at an insane pace, both closing the gap with frontier systems and pressuring OpenAI’s upcoming releases to stay a step ahead of the field.

TOGETHER WITH GUIDDE

The Rundown: Stop wasting time on repetitive explanations. Guidde’s AI helps you create stunning video guides in seconds, 11x faster.

Use Guidde to:

- Auto-generate step-by-step video guides with visuals, voiceovers, and a CTA
- Turn boring docs into visual masterpieces
- Save hours with AI-powered automation
- Share or embed your guide anywhere

MICROSOFT

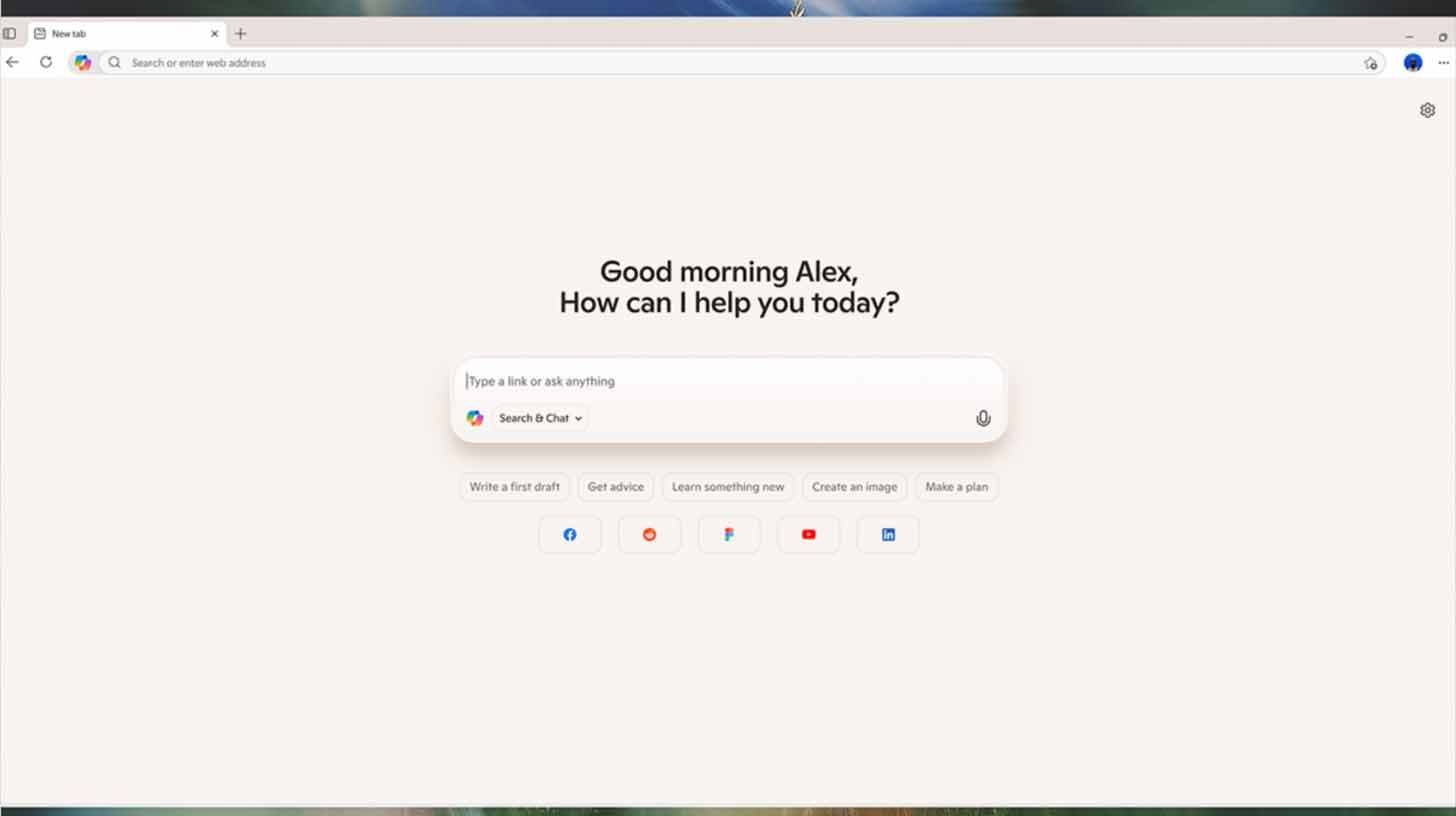

🦄 Microsoft’s ‘Copilot Mode’ for agentic browsing

Image source: Microsoft

The Rundown: Microsoft just released ‘Copilot Mode’ in Edge, bringing the AI assistant directly into the browser to look across open tabs, handle tasks, and proactively suggest and take actions.

The main points:

- Copilot Mode integrates AI into Edge’s new tab page, bringing features like voice and multi-tab analysis directly into the browsing experience.
- The feature launches free for a limited time on Windows and Mac with opt-in activation, though Microsoft hinted at eventual subscription pricing.
- Copilot will eventually be able to access users’ browser history and credentials (with permission), allowing for actions like completing bookings or errands.

Why it matters: Microsoft Edge now enters the agentic browser wars, with competitors like Perplexity’s Comet and The Browser Company’s Dia also launching in the past couple of months. While agentic tasks are still rough around the edges across the industry, active AI involvement in the browsing experience is clearly here to stay.

AI TRAINING

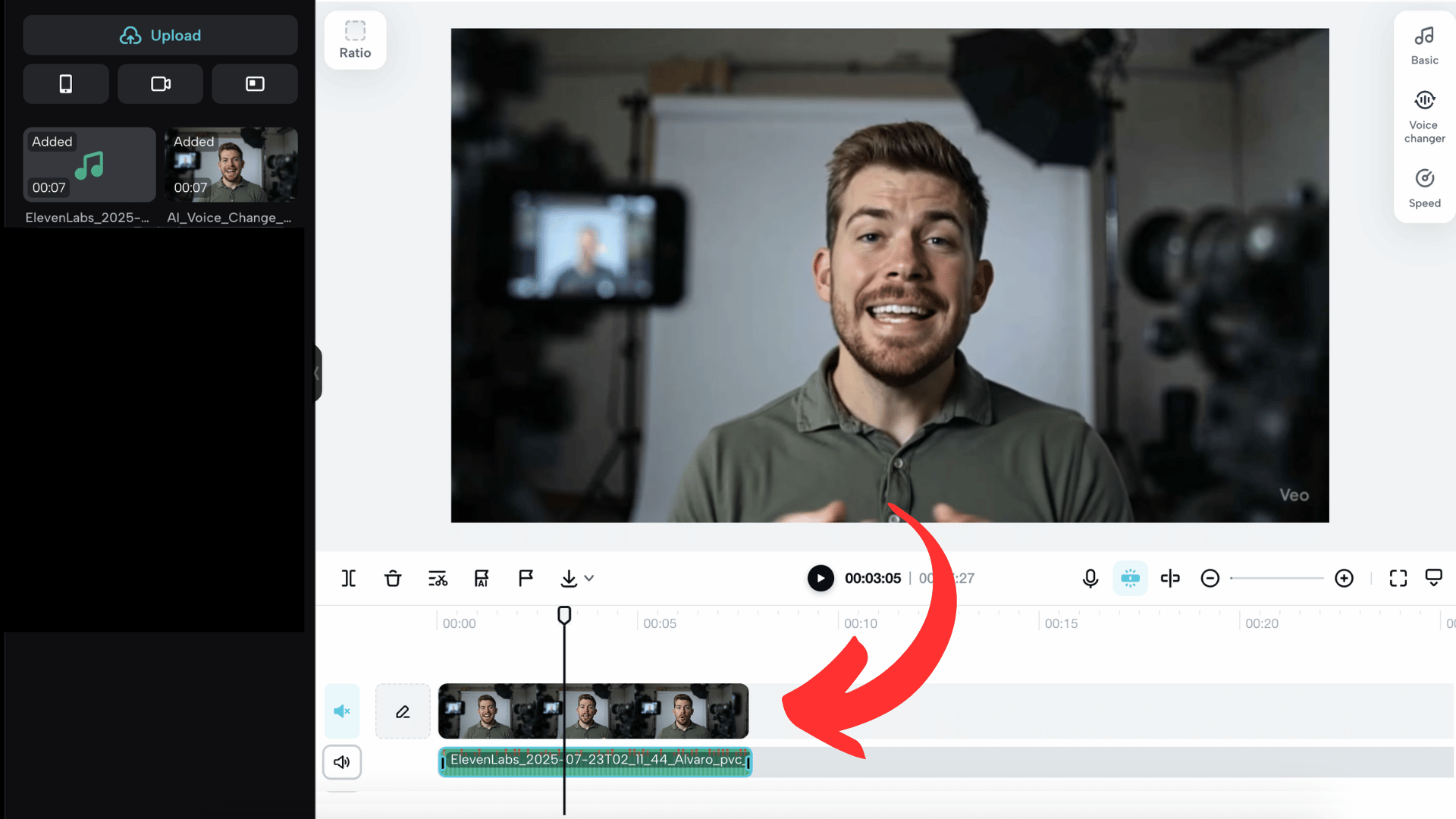

🎤 Replace any character voice in your videos

The Rundown: In this tutorial, you’ll learn how to transform AI-generated videos by replacing their default voices with custom ones using Google Veo, audio conversion tools, and ElevenLabs’ voice cloning.

Step-by-step:

- Create your AI video using Google Veo and download the MP4 file
- Convert the video to MP3 using any video-to-audio extraction tool
- Go to ElevenLabs’ Voice Changer, upload your MP3, and generate speech with your chosen voice
- Import both the original video and the new audio into CapCut, mute the original audio, and export your video with the custom voice

Pro tip: Create voice clones in ElevenLabs to maintain consistent character voices across all your video projects.
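If you’d rather script the audio steps than use a web-based extractor and CapCut, the ffmpeg command-line tool can handle both the MP4-to-MP3 extraction (step 2) and the final audio swap (step 4). This is an alternative we’re suggesting, not part of the workflow above. The sketch assumes ffmpeg is installed and on your PATH, and the file names (`veo_clip.mp4`, `elevenlabs_voice.mp3`, `final_clip.mp4`) are hypothetical placeholders. The helpers only build the commands; `run()` executes them.

```python
import shlex
import subprocess
from typing import List


def extract_audio_cmd(video: str, audio_out: str) -> List[str]:
    """Build an ffmpeg command that pulls the audio track out of a video as MP3."""
    # -vn drops the video stream; libmp3lame encodes the remaining audio as MP3.
    # -q:a 2 selects a high-quality variable bitrate.
    return ["ffmpeg", "-y", "-i", video, "-vn",
            "-acodec", "libmp3lame", "-q:a", "2", audio_out]


def replace_audio_cmd(video: str, new_audio: str, out: str) -> List[str]:
    """Build an ffmpeg command that keeps the original video but swaps in new audio."""
    # Take the video stream from input 0 and the audio stream from input 1.
    # The original audio track is never mapped, so it is effectively muted.
    # -c:v copy avoids re-encoding the video; -shortest stops at the shorter input.
    return ["ffmpeg", "-y", "-i", video, "-i", new_audio,
            "-map", "0:v:0", "-map", "1:a:0",
            "-c:v", "copy", "-c:a", "aac", "-shortest", out]


def run(cmd: List[str]) -> None:
    """Execute a command, raising CalledProcessError if ffmpeg fails."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical file names; substitute your own Veo and ElevenLabs outputs.
    step2 = extract_audio_cmd("veo_clip.mp4", "veo_audio.mp3")
    step4 = replace_audio_cmd("veo_clip.mp4", "elevenlabs_voice.mp3", "final_clip.mp4")
    for cmd in (step2, step4):
        print(shlex.join(cmd))  # inspect first; call run(cmd) once ffmpeg is installed
```

Step 3 (ElevenLabs’ Voice Changer) still happens in the browser between the two commands. Copying the video stream instead of re-encoding it keeps the Veo output’s original quality and makes the swap nearly instant.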

PRESENTED BY PREZI

The Rundown: Prezi AI doesn’t just make slides. It builds persuasive narratives with a dynamic format designed to hold attention and help your message land. Whether you’re pitching or presenting to your team, Prezi transforms your ideas into presentations that actually perform.

With Prezi, you’ll be able to:

- Go from rough ideas or PDFs to standout presentations in seconds
- Engage your audience with a format proven to be more effective than slides
- Get AI-powered suggestions for content, structure, and design

Try Prezi AI for free and beat boring slides.

ALIBABA

🎥 Alibaba’s Wan2.2 pushes open-source video forward

Image source: Alibaba

The Rundown: Alibaba’s Tongyi Lab just launched Wan2.2, a new open-source video model that brings advanced cinematic capabilities and high-quality motion to both text-to-video and image-to-video generation.

The main points:

- Wan2.2 uses two specialized “experts”: one creates the overall scene while the other adds fine details, keeping the system efficient.
- The model surpassed top rivals, including Seedance, Hailuo, Kling, and Sora, in aesthetics, text rendering, camera control, and more.
- It was trained on 66% more images and 83% more videos than Wan2.1, enabling it to better handle complex motion, scenes, and aesthetics.
- Users can also fine-tune video features like lighting, color, and camera angles, unlocking more cinematic control over the final output.
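Wan2.2’s two experts divide the work along the diffusion denoising process: Alibaba describes a high-noise expert that handles the early, noisiest steps and sets the overall layout, and a low-noise expert that takes over for later steps to refine detail. Only one expert runs at each step, which is where the efficiency comes from. The toy loop below illustrates that routing idea only. The expert functions, the fixed step `boundary`, and the arithmetic inside them are hypothetical stand-ins; Alibaba says the real switch point is set by signal-to-noise ratio rather than a hand-picked step.

```python
from typing import Callable, Dict, List, Tuple

Latent = List[float]
Expert = Callable[[Latent, int], Latent]


def high_noise_expert(latent: Latent, t: int) -> Latent:
    # Hypothetical stand-in for the expert that shapes overall layout early on.
    return [x * 0.5 for x in latent]


def low_noise_expert(latent: Latent, t: int) -> Latent:
    # Hypothetical stand-in for the expert that refines fine detail late.
    return [x * 0.9 for x in latent]


EXPERTS: Dict[str, Expert] = {
    "high_noise": high_noise_expert,
    "low_noise": low_noise_expert,
}


def route_expert(t: int, boundary: int) -> str:
    """Pick one expert per step: noisy steps (t >= boundary) go to the layout expert."""
    return "high_noise" if t >= boundary else "low_noise"


def denoise(latent: Latent, num_steps: int = 10,
            boundary: int = 5) -> Tuple[Latent, List[str]]:
    """Run a toy reverse-diffusion loop that calls exactly one expert per step."""
    trace: List[str] = []
    for t in range(num_steps - 1, -1, -1):  # from the noisiest step down to 0
        name = route_expert(t, boundary)
        latent = EXPERTS[name](latent, t)
        trace.append(name)
    return latent, trace


if __name__ == "__main__":
    _, trace = denoise([1.0, -2.0, 0.5])
    print(trace)  # five "high_noise" steps followed by five "low_noise" steps
```

Because each step activates only one expert, per-step compute stays close to that of a single expert even though the total parameter count is roughly doubled. That is the trade-off behind “keeping the system efficient.”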

Why it matters: China’s open-source flurry doesn’t just apply to language models like GLM-4.5 above; it spans the whole AI toolbox. While Western labs debate closed versus open models, Chinese labs are building a parallel open AI ecosystem, with network effects that could determine which path developers worldwide adopt.

QUICK HITS

🛠️ Trending AI Tools

- 🎬 Runway Aleph – Edit, transform, and generate video content
- 🧠 Qwen3-Thinking – Alibaba’s AI with enhanced reasoning and knowledge
- 🌎 Hunyuan3D World Model 1.0 – Tencent’s open world generation model
- 📜 Aeneas – Google’s open-source AI for restoring ancient texts

💼 AI Job Opportunities

- 📱 Databricks – Senior Digital Media Manager
- 🗂️ Parloa – Executive Assistant to the CRO
- 🤝 UiPath – Partner Sales Executive
- 🧑‍💻 xAI – Software Engineer, Developer Experience

📰 Everything else in AI today

Alibaba debuted Quark AI glasses, a new line of smart glasses launching by the end of the year, powered by the company’s Qwen model.

Anthropic announced weekly rate limits for Pro and Max users due to “unprecedented demand” from Claude Code, saying the move will affect fewer than 5% of current users.

Tesla and Samsung signed a $16.5B deal for the manufacturing of Tesla’s next-gen AI6 chips, with Elon Musk saying the “strategic importance of this is hard to overstate.”

Runway signed a new partnership with IMAX, bringing AI-generated shorts from the company’s 2025 AI Film Festival to big screens at ten U.S. locations in August.

Google DeepMind CEO Demis Hassabis revealed that Google processed 980 trillion (!) tokens across its AI products in June, more than double May’s total.

Anthropic published research on automated agents that audit models for alignment issues, using them to identify subtle risks and misbehaviors that humans might miss.

COMMUNITY

🎥 Join our next live workshop

Join our next workshop this Friday, August 1st, at 4 PM EST with Dr. Alvaro Cintas, The Rundown’s AI professor. By the end of this workshop, you’ll have practical strategies to get AI to do exactly what you want.

RSVP here. Not a member? Join The Rundown University on a 14-day free trial.

That’s it for today! Before you go, we’d love to know what you thought of today’s newsletter to help us improve The Rundown experience for you.


See you soon,

Rowan, Joey, Zach, Alvaro, and Jason—The Rundown’s editorial team