Hugging Face has released an open-source vision-language-action (VLA) model for developing general-purpose robots, aiming to make robotics technology more widely accessible. The model is efficient enough to run on a MacBook.

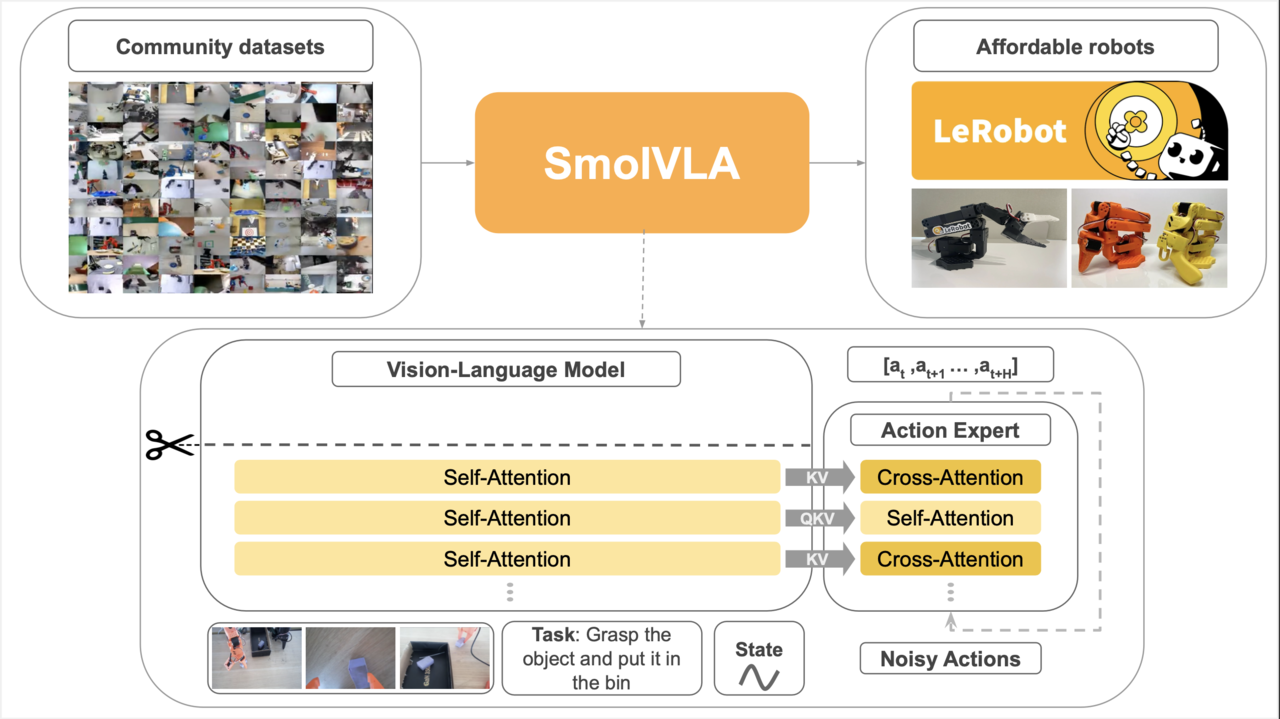

Hugging Face unveiled ‘SmolVLA’, a compact open-source VLA model dedicated to robotics, on the 4th (local time).

SmolVLA is an AI model built around light weight, low-cost deployment, and high practicality. Its distinguishing feature is that it can run on a MacBook or a consumer GPU, without expensive robot hardware.

In particular, it is designed for use with the low-cost robot systems that Hugging Face recently introduced together with Pollen Robotics, the French robotics startup it recently acquired.

The model, which has about 450 million parameters, was trained on 481 community datasets comprising about 23,000 episodes compiled by Hugging Face.

The model is split into two parts.

First, the ‘SmolVLM-2’ module processes the robot’s camera images (RGB), sensor information, and natural-language instructions from people at the same time. To increase speed and efficiency, the images are reduced in size, and only the first part of the transformer model is used.

Next, the ‘Action Expert’ module predicts what actions the robot should take, using a lightweight and fast structure. It combines two ways of understanding and connecting information, which helps the robot match its actions to what it sees.

In particular, an ‘asynchronous inference stack’ was introduced to separate the robot’s decision-making from the actual execution of its movements.

This decouples the process by which the robot handles visual and auditory information from the process of carrying out physical actions, helping it respond quickly in complex, fast-changing environments. As a result, latency can be minimized and real-time reactions are possible even in resource-constrained environments.
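The idea of separating prediction from execution can be illustrated with a minimal Python sketch. It is not Hugging Face's implementation: `fake_policy`, `run_async`, the chunk size, and the sleep times are all hypothetical stand-ins chosen for illustration. One thread plays the role of the model and keeps predicting chunks of actions, while the control loop executes queued actions without waiting for each prediction to finish.

```python
import queue
import threading
import time


def fake_policy(observation, chunk_size=4):
    """Hypothetical stand-in for a VLA model: returns a chunk of future actions."""
    time.sleep(0.01)  # pretend that inference is slow
    return [f"obs{observation}-act{i}" for i in range(chunk_size)]


def run_async(num_chunks=3, chunk_size=4):
    # Bounded buffer that sits between the predictor and the control loop.
    actions = queue.Queue(maxsize=2 * chunk_size)
    executed = []

    def predictor():
        # Inference thread: keeps producing action chunks without waiting
        # for the robot to finish executing the previous ones.
        # Here an "observation" is just an index; a real system would
        # read the latest camera frame and sensor state instead.
        for observation in range(num_chunks):
            for action in fake_policy(observation, chunk_size):
                actions.put(action)
        actions.put(None)  # sentinel: no more actions

    worker = threading.Thread(target=predictor)
    worker.start()

    # Control loop: executes whatever is queued while the predictor keeps working.
    while True:
        action = actions.get()
        if action is None:
            break
        executed.append(action)  # a real robot would send motor commands here
        time.sleep(0.002)  # pretend that each action takes time to execute

    worker.join()
    return executed


if __name__ == "__main__":
    for step in run_async():
        print(step)
```

Because the queue is filled in the background, the control loop only stalls when the buffer runs empty, which is the intuition behind keeping reaction times low on modest hardware.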

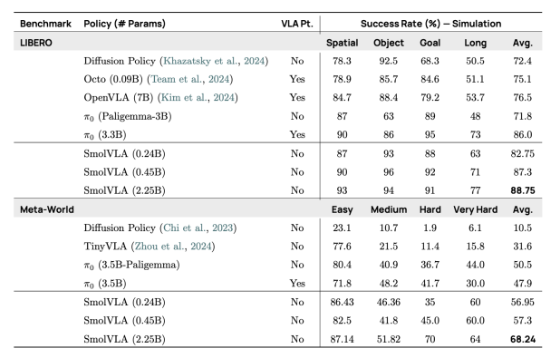

It also performed well on benchmarks.

On the simulation benchmark ‘LIBERO’, it achieved an average success rate of 87.3%, and on ‘Meta-World’ it outperformed larger models and existing methods.

In real-world robot environments, it achieved an average success rate of 78.3% on pick-and-place, stacking, and sorting tasks, outperforming the existing large model π₀ as well as ACT.

SmolVLA’s source code, training data, and deployment tools are available on the Hugging Face Hub.

In this way, Hugging Face is focusing on a strategy of building a low-cost open-source robotics ecosystem. Following the ‘LeRobot’ project, the company recently launched humanoid robot hardware for developers priced at just $3,000.

By Park Chan, reporter cpark@aitimes.com