As artificial intelligence (AI) agents gain ground, a framework has emerged that focuses on the ability of large language models (LLMs) to use external tools. Unlike existing methods, its distinguishing feature is that the model can make appropriate use of tools it encounters for the first time.

Researchers at Soochow University in China recently published a paper on ‘Chain-of-Tools (CoTools)’ through an online archive.

With the advent of agents, LLMs are expected to do more than generate text, and the ability to interact with external databases and applications has become essential.

Currently, fine-tuning is commonly used to strengthen this ability. However, a fine-tuned LLM can use only the tools it saw during training, and the fine-tuning itself can degrade the model's reasoning performance.

The researchers therefore built a framework that lets an LLM use a wide variety of tools directly within its reasoning process, including tools it was never trained on.

The key is in-context learning (ICL). This approach supplies examples of the tools available to the LLM, and of how to use them, directly in the prompt, which gives the model the flexibility to use tools it has never seen before.
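As a rough illustration of what placing tools and usage examples directly in the prompt can look like, here is a minimal Python sketch. The `ToolSpec` class, the `build_icl_prompt` function, and the prompt wording are all hypothetical, chosen for illustration; they are not taken from the paper or its code.

```python
from dataclasses import dataclass


@dataclass
class ToolSpec:
    """Hypothetical description of one tool shown to the model in the prompt."""
    name: str
    description: str
    example_call: str  # one worked usage example


def build_icl_prompt(tools: list[ToolSpec], question: str) -> str:
    """Assemble a prompt that lists each tool with a usage example, then the question."""
    lines = ["You may call the following tools:"]
    for tool in tools:
        lines.append(f"- {tool.name}: {tool.description}")
        lines.append(f"  Example: {tool.example_call}")
    lines.append("")
    lines.append(f"Question: {question}")
    lines.append("Answer:")
    return "\n".join(lines)


# Toy tools, invented for this example.
tools = [
    ToolSpec("add", "Add two numbers.", "add(2, 3) -> 5"),
    ToolSpec("sqrt", "Square root of a non-negative number.", "sqrt(16) -> 4"),
]
prompt = build_icl_prompt(tools, "What is 12 plus 30?")
print(prompt)
```

Because the tool list lives in the prompt rather than in the model weights, swapping in a different tool only means changing the `tools` list.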

Instead of fine-tuning the entire model, Chain-of-Tools trains lightweight ‘specialized modules’ that work alongside the LLM during generation. The LLM itself stays frozen, so its original capabilities are not harmed.

To this end, it uses the LLM's intermediate representations, known as ‘hidden states’. These are computed inside the model before it produces its output, and reading them does not affect that output. This is why the paper is titled ‘Utilizing Massive Unseen Tools in the CoT Reasoning of Frozen Language Models’.

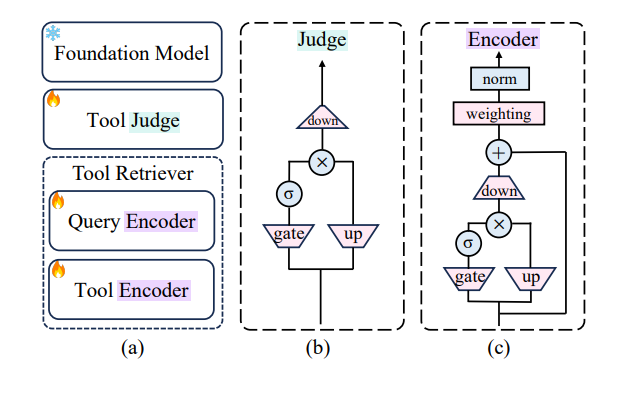

Chain-of-Tools consists of three modules.

First, the ‘Tool Judge’ analyzes the hidden state behind each token the LLM generates during reasoning and determines whether calling a tool is appropriate at that point.
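To make the judging step concrete, the sketch below scores a single hidden-state vector with a small linear probe and a threshold. The linear-plus-sigmoid form, the function names, and the toy numbers are assumptions made for illustration; the article does not describe the judge's actual architecture.

```python
import math


def judge_score(hidden_state: list[float], weights: list[float], bias: float) -> float:
    """Map one token's hidden state to a score in (0, 1) using a linear probe."""
    logit = sum(h * w for h, w in zip(hidden_state, weights)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


def should_call_tool(hidden_state: list[float], weights: list[float],
                     bias: float, threshold: float = 0.5) -> bool:
    """Decide whether a tool call is warranted at this position."""
    return judge_score(hidden_state, weights, bias) > threshold


# Toy probe parameters; in practice these would be learned
# while the language model itself stays frozen.
weights = [1.0, -1.0, 0.5]
bias = 0.0

needs_tool = should_call_tool([2.0, 0.0, 2.0], weights, bias)  # logit = 3.0
no_tool = should_call_tool([0.0, 2.0, 0.0], weights, bias)     # logit = -2.0
print(needs_tool, no_tool)
```

Only the probe's few parameters would be trained in such a setup, which is consistent with the article's point that the underlying LLM is left untouched.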

Once the judge decides a tool is needed, the ‘Tool Retriever’ takes over. This module is trained to find the most suitable tool in the available pool based on the query.
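One common way to pick the best-matching item from a pool is nearest-neighbor search over vectors, sketched below with cosine similarity. This is an assumed stand-in for the retriever, not the authors' implementation; the tool names and three-dimensional vectors are made up.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_tool(query_vec: list[float], tool_vecs: dict[str, list[float]]) -> str:
    """Return the name of the tool whose vector is most similar to the query."""
    return max(tool_vecs, key=lambda name: cosine(query_vec, tool_vecs[name]))


# Toy tool pool with invented vectors.
tool_vecs = {
    "add": [1.0, 0.0, 0.0],
    "sqrt": [0.0, 1.0, 0.0],
    "search_kb": [0.0, 0.0, 1.0],
}
best = retrieve_tool([0.1, 0.9, 0.2], tool_vecs)
print(best)
```

In a design like this, supporting a tool that was never seen in training only requires adding its vector to `tool_vecs`; the retrieval function itself does not change.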

Finally, ‘Tool Calling’ is carried out through an ICL prompt. In other words, the researchers explain, the most suitable tool can be called even without training examples for that tool, and the model can make appropriate use of a tool it is seeing for the first time.

The researchers explained, “The core of Chain-of-Tools is to decide where and how to call tools by using the semantic representation capabilities of a frozen foundation model.”

By using the LLM's hidden states, the framework separates decision-making (the judge) and selection (the retriever) from parameter filling (calling via ICL), which preserves the LLM's core capabilities while allowing new tools to be used flexibly.
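The separation of deciding, selecting, and parameter filling can be pictured as three independent callables wired together in one step function. The sketch below is a hypothetical illustration of that decoupling; `tool_step` and the toy stand-ins are assumptions and do not reproduce the paper's code.

```python
from typing import Callable, Optional

Vector = list[float]


def tool_step(
    hidden_state: Vector,
    judge: Callable[[Vector], bool],
    retrieve: Callable[[Vector], str],
    fill_args: Callable[[str], tuple],
    tools: dict[str, Callable],
) -> Optional[object]:
    """One step: decide, select, then fill parameters and call the tool."""
    if not judge(hidden_state):    # decision: is a tool needed here?
        return None                # no: the frozen LLM simply keeps generating
    name = retrieve(hidden_state)  # selection: which tool from the pool?
    args = fill_args(name)         # parameter filling (done via ICL in the article)
    return tools[name](*args)


# Toy stand-ins for the three modules and the tool pool.
tools = {"add": lambda a, b: a + b, "mul": lambda a, b: a * b}
judge = lambda h: h[0] > 0.5
retrieve = lambda h: "add" if h[1] >= h[2] else "mul"
fill_args = lambda name: (12, 30)

result = tool_step([0.9, 0.1, 0.8], judge, retrieve, fill_args, tools)
skipped = tool_step([0.1, 0.9, 0.0], judge, retrieve, fill_args, tools)
print(result, skipped)
```

Since each stage is passed in separately, any one of them can be retrained or replaced without touching the others or the language model.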

However, because it requires access to the model's hidden states, it cannot be used with closed models such as ‘GPT-4o’ or ‘Claude’, and can be applied only to open-source models such as ‘Llama’ or ‘Mistral’.

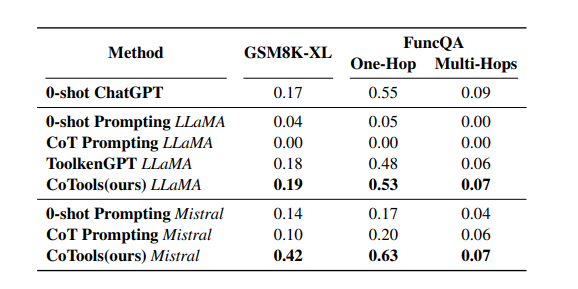

Benchmarks were run in two scenarios: numerical reasoning using arithmetic tools, and knowledge-based question answering (KBQA), which requires retrieval.

Applied to ‘Llama 2-7B’, Chain-of-Tools achieved performance comparable to ChatGPT on ‘GSM8K-XL’, which measures the ability to use basic arithmetic operations. On ‘FuncQA’, which tests the ability to use more complex functions, it matched or outperformed another learning-based method, ‘ToolkenGPT’. The researchers attribute this to the framework effectively enhancing the capabilities of the base model.

It also showed excellent tool selection accuracy on the KBQA tasks, which involved 1,836 tools, 837 of which it had not encountered before.

The method is described as useful because, through the ‘Model Context Protocol (MCP)’ that is popular among developers, external tools and resources can be easily integrated into applications.

As a result, the researchers emphasized, companies can develop and deploy agents while keeping model training to a minimum. In particular, they added, because the approach relies on the semantic understanding captured in hidden states, it enables nuanced and accurate tool selection and can therefore be reliable in complex tasks.

“Chain-of-Tools shows how to equip an LLM with massive new tools in a simple way,” the researchers said. “With it, you could build an AI agent with MCP that performs complex reasoning with scientific tools.”

However, they noted that the work is still at an experimental stage: “To apply it in a real-world environment, we still need to find a balance between the cost of fine-tuning and generalized tool calling.”

The code for training the Tool Judge and Tool Retriever modules has been released on GitHub.

By Dae-jun Lim, reporter ydj@aitimes.com