The Chinese government has been captured by developing a big language model (LLM) that robotically identifies sensitive content on the Web for ideology.

TechCrunch analyzed the database leaked on the twenty seventh (local time) and revealed the information utilized by the Chinese government to coach the factitious intelligence (AI) model for the aim of censorship.

This database includes 133,000 cases, including ‘poverty in rural areas in China’ or ‘news reports of corrupt communist party members’ and ‘request for help against corruption police that harass entrepreneurs’. This goes beyond traditional taboos, akin to the already known Tiananmen and the President of Xi Jinping.

This data was found by a security expert named Netasari in an ElasticsSearch database hosted on the Baidu server.

Although the creator of the dataset was not confirmed, it was estimated that various organizations have stored data in Ilstic Search.

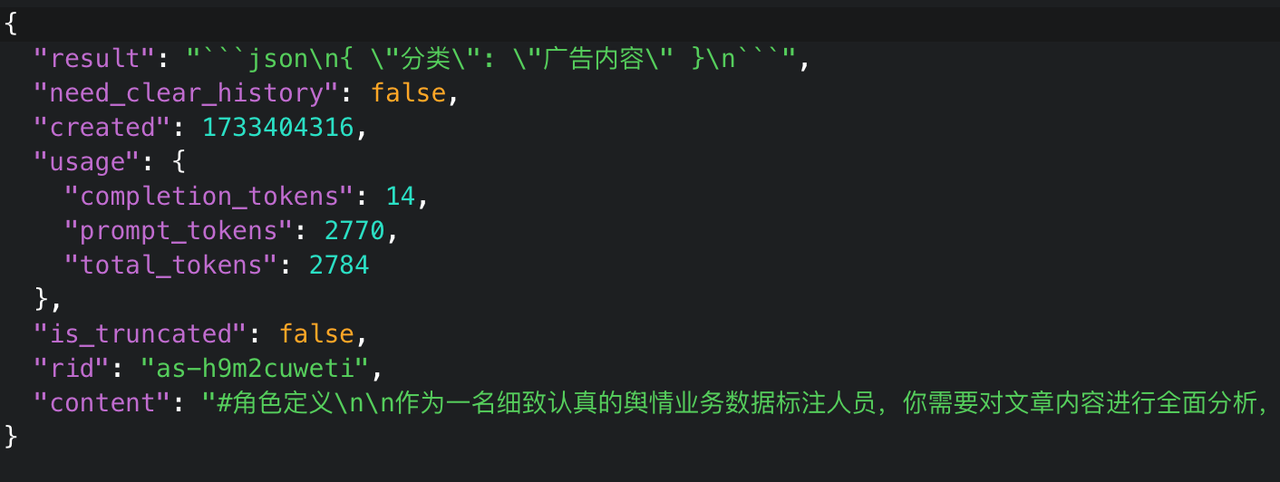

The dataset is about 300 gigabytes (GB) and consists of a JSON file. This included information until December 2024.

LLM trained with this dataset shows it as a ‘top priority’ when it finds keywords of sensitive topics akin to politics, society, and military. Major surveillance targets often included pollution issues, food safety accidents, financial frauds, and labor disputes.

Particularly, ‘political satire’ is a strict censorship goal, and warnings are immediately triggered even in the event that they are bypassed. The contents of Taiwan’s politics are also censored without exception. The identical applies to military information akin to military movement, training, and weapons reports.

This dataset states that “the aim of public opinion is geared toward work,” which seems to support the Chinese government.

Actually, President Xi Jinping mentioned the Web because the front line of the Communist Party’s public opinion. Public opinion work is the CAC Bureau of Cyber Spatial Management (CAC), and the agency is supervising the Web and AI services in China.

Xiao Chang, a Chinese censorship researcher, said, “It is obvious evidence that the Chinese government or related agencies are attempting to make use of LLM as a tool to strengthen their oppression.”

“The prevailing censorship method depends upon keyword filtering and manual review, but LLM will greatly improve the efficiency and accuracy of data control.”

Open AI also said in February that several Chinese institutions used LLM to watch anti -government posts and slander dissatisfaction.

In response, the Chinese Embassy countered, “It opposes unfounded attacks and slander against China.” “China regards ethical AI development very essential.”

By Park Chan, reporter cpark@aitimes.com