The attention mechanism is often associated with the transformer architecture, but it was already used in RNNs. In Machine Translation (MT) tasks (e.g., English-Italian), when you want to predict the next Italian word, you need your model to focus on the English words that are most useful for producing a good translation.

I will not go into the details of RNNs, but attention helped these models mitigate the vanishing gradient problem and capture longer-range dependencies among words.

At a certain point, we understood that the only necessary thing was the attention mechanism, and the entire RNN architecture was overkill. Hence, Attention is All You Need!

Self-Attention in Transformers

Classical attention indicates where the words in the output sequence should focus in relation to the words in the input sequence. This is important in sequence-to-sequence tasks like MT.

Self-attention is a specific type of attention. It operates between any two elements of the same sequence, and it tells us how “correlated” the words in the same sentence are.

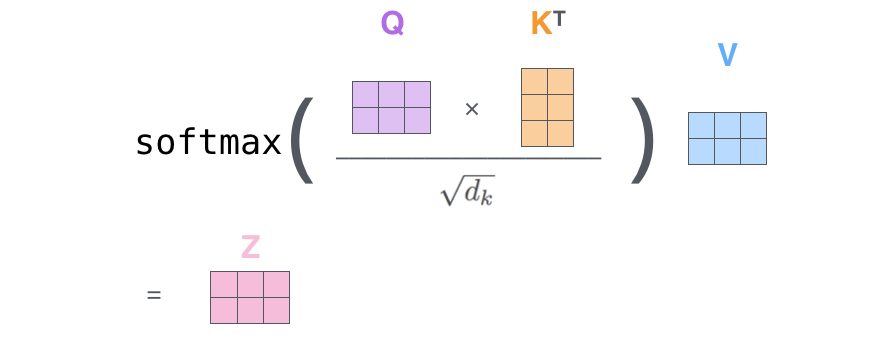

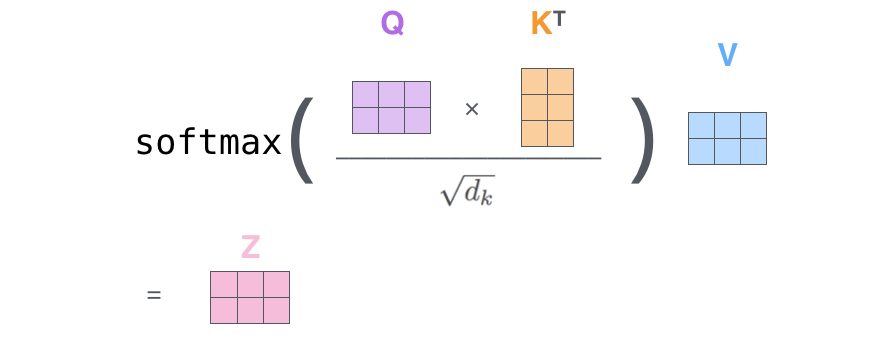

For a given token (or word) in a sequence, self-attention generates a list of attention weights, one for each token in the sequence (the token itself included). This process is applied to every token in the sentence, producing a matrix of attention weights (as in the image).
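A minimal sketch of this general idea in PyTorch, assuming the tokens are already represented as vectors (random values here) and using plain dot products with no learnable parameters. This is a deliberate simplification, not the mechanism used in transformers:

```python
import torch

torch.manual_seed(0)

# Toy sentence: 5 tokens, each already a 4-dimensional vector (random values).
x = torch.randn(5, 4)

# Dot product of every token with every token, itself included.
scores = x @ x.T                              # shape [5, 5]

# Row-wise softmax: row i holds the attention weights of token i.
attn_weights = torch.softmax(scores, dim=-1)  # shape [5, 5]

print(attn_weights.shape)         # torch.Size([5, 5])
print(attn_weights.sum(dim=-1))   # every row sums to 1
```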

This is the general idea. In practice, things are a bit more complicated because we want to add many learnable parameters to our neural network. Let’s see how.

K, V, Q representations

Our model input is a sentence. Through tokenization, the sentence is converted into a list of numbers like [2, 6, 8, 3, 1].

Before feeding the sentence into the transformer, we need to create a dense representation for each token.

How do we create this representation? We multiply each token (as a one-hot vector) by a matrix, which is the same as picking the row of the matrix at the token's index. The matrix is learned during training.
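A small PyTorch sketch showing that multiplying one-hot token vectors by a matrix is the same as looking up rows of that matrix (which is what `torch.nn.Embedding` does). The vocabulary size of 10 is an assumption for illustration, and the matrix is random instead of learned:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
vocab_size, d = 10, 16                          # assumed vocabulary size; embedding size 16
embedding_matrix = torch.randn(vocab_size, d)   # learned during training in a real model

token_ids = torch.tensor([2, 6, 8, 3, 1])       # the tokenized sentence from above

# Multiplying one-hot vectors by the matrix...
one_hot = F.one_hot(token_ids, num_classes=vocab_size).float()   # [5, 10]
dense_via_matmul = one_hot @ embedding_matrix                     # [5, 16]

# ...is the same as selecting the rows at those indices.
dense_via_lookup = embedding_matrix[token_ids]                    # [5, 16]

print(torch.allclose(dense_via_matmul, dense_via_lookup))  # True
```

The lookup form is what frameworks use in practice, since it avoids building the large one-hot matrix.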

Let’s add some complexity now.

For each token, we create 3 vectors instead of 1. We call these vectors key, value, and query. (We will see later how we create these 3 vectors.)

Conceptually, these 3 vectors have a specific meaning:

- The key vector represents the core information captured by the token.

- The value vector captures the full information of a token.

- The query vector is a question about the token’s relevance for the current task.

So the idea is that we focus on a specific token i and ask how important the other tokens in the sentence are with respect to token i.

This means we take the vector q_i of token i (we ask a question about i), and we do some mathematical operations with the keys k_j of all the tokens in the sentence (token i included). It is like asking, at first glance, which tokens in the sequence look really necessary to understand the meaning of token i.

What is this magical mathematical operation?

We take the dot product of the query vector with a key vector and divide it by a scaling factor (the square root of the key dimension, d_k). We do this for every key k_j.

This way, we obtain a score for each pair (q_i, k_j). We turn this list of scores into a probability distribution by applying a softmax to it. Great, now we have the attention weights!
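Written as a formula, the attention weight of token j for token i is the softmax of the scaled dot products, where n is the number of tokens in the sentence and d_k the dimension of the key vectors:

$$
a_{ij} = \frac{\exp\left(q_i \cdot k_j / \sqrt{d_k}\right)}{\sum_{m=1}^{n} \exp\left(q_i \cdot k_m / \sqrt{d_k}\right)}, \qquad \sum_{j=1}^{n} a_{ij} = 1
$$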

With the attention weights, we know how important each token j is for understanding token i. So now we multiply the value vector v_j associated with each token by its weight and sum the vectors. This way, we obtain the final context-aware vector of token_i.

If we are computing the contextual dense vector of token_1, we calculate:

z1 = a11*v1 + a12*v2 + … + a15*v5

where a1j are the computed attention weights and v_j are the value vectors.
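The computation of z1 can be sketched in PyTorch as follows. The sizes (5 tokens, vectors of dimension 4) and the random q, k, v values are assumptions; in a real model these vectors come from learned projections:

```python
import torch

torch.manual_seed(0)
n_tokens, d_k = 5, 4            # assumed sizes for illustration

q1 = torch.randn(d_k)           # query vector of token_1
k = torch.randn(n_tokens, d_k)  # key vectors k_1 ... k_5, one per row
v = torch.randn(n_tokens, d_k)  # value vectors v_1 ... v_5, one per row

# Scaled dot products of q_1 with every key (k_1 included).
scores = k @ q1 / d_k ** 0.5    # shape [5]

# Softmax gives the attention weights a11 ... a15.
a1 = torch.softmax(scores, dim=0)

# z1 = a11*v1 + a12*v2 + ... + a15*v5
z1 = sum(a1[j] * v[j] for j in range(n_tokens))

# The same weighted sum as a single vector-matrix product.
print(torch.allclose(z1, a1 @ v, atol=1e-6))   # True
```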

Done! Almost…

I haven’t covered how we obtain the vectors k, v, and q of each token. We need to define matrices w_k, w_v, and w_q so that when we multiply:

- token * w_k -> k

- token * w_q -> q

- token * w_v -> v

These 3 matrices are initialized randomly and learned during training. This is one reason why modern models such as LLMs have so many parameters.
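To get a feel for the numbers, here is a quick parameter count for w_q, w_k, and w_v, assuming each has shape [d, d_k] and ignoring biases. The toy case uses d = 16 (the embedding size in the hands-on part) with d_k = 16 assumed; d = 4096 and 32 layers are assumed values chosen only to illustrate LLM scale:

```python
def qkv_params(d: int, d_k: int) -> int:
    """Weights in w_q, w_k and w_v, each of shape [d, d_k] (biases ignored)."""
    return 3 * d * d_k


print(qkv_params(16, 16))           # 768 (toy size, d_k assumed equal to d)
print(qkv_params(4096, 4096))       # 50331648 for one layer (assumed d = 4096)
print(32 * qkv_params(4096, 4096))  # 1610612736 over 32 assumed layers
```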

Multi-head Self-Attention in Transformers (MHSA)

Are we sure that the previous self-attention mechanism is able to capture all the important relationships among tokens (words) and create dense vectors of those tokens that really make sense?

It might not always work perfectly. What if, to mitigate this, we ran the whole thing twice with new w_q, w_k, and w_v matrices and somehow merged the two dense vectors obtained? This way, maybe one self-attention captures some relationships and the other captures different ones.

Well, this is exactly what happens in MHSA. The case we just discussed contains two heads because it has two sets of w_q, w_k, and w_v matrices. We can have even more heads: 4, 8, 16, etc.

The only complicated part is that all these heads are processed in parallel: we handle them all in the same computation using tensors.

The way we merge the dense vectors of each head is simple: we concatenate them (hence each head produces a smaller vector, so that concatenating them gives back the original dimension we wanted), and we pass the resulting vector through another learnable matrix, w_o.
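A sequential PyTorch sketch of this idea, assuming 5 tokens, d = 16, and 2 heads with random weights: each head has its own w_q, w_k, w_v triplet, the heads' outputs are concatenated, and the result goes through w_o. The loop is for readability only; real implementations process all heads in one tensor computation:

```python
import torch

torch.manual_seed(0)
n_tokens, d, h = 5, 16, 2     # assumed: 5 tokens, embedding size 16, 2 heads
d_k = d // h                  # each head outputs smaller vectors (8 values)

x = torch.randn(n_tokens, d)  # dense token representations


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over all tokens."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    weights = torch.softmax(q @ k.T / k.shape[-1] ** 0.5, dim=-1)
    return weights @ v        # [n_tokens, d_k]


def rand_proj():
    # Scaled random init (an assumption); in training these are learned.
    return torch.randn(d, d_k) / d ** 0.5


# Each head gets its own (w_q, w_k, w_v) triplet.
heads = [self_attention(x, rand_proj(), rand_proj(), rand_proj()) for _ in range(h)]

# Concatenate along the feature axis: h * d_k = d values per token again.
concat = torch.cat(heads, dim=-1)   # [5, 16]

w_o = torch.randn(d, d) / d ** 0.5  # final learnable projection
out = concat @ w_o                  # [5, 16]
print(out.shape)                    # torch.Size([5, 16])
```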

Hands-on

Suppose you have a sentence. After tokenization, each token (a word, for simplicity) corresponds to an index (a number):
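For example, with a hypothetical word-level vocabulary and a hypothetical 5-word sentence (real tokenizers usually work on subwords):

```python
import torch

# Hypothetical word-level vocabulary.
vocab = {"<pad>": 0, "cats": 1, "chase": 2, "small": 3, "grey": 4, "mice": 5}

sentence = "cats chase small grey mice"   # hypothetical 5-word sentence
token_ids = torch.tensor([vocab[word] for word in sentence.split()])
print(token_ids)   # tensor([1, 2, 3, 4, 5])
```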

Before feeding the sentence into the transformer, we need to create a dense representation for each token.

How do we create this representation? We multiply each token by a matrix. This matrix is learned during training.

Let’s construct this embedding matrix.

If we multiply our tokenized sentence by the embedding matrix, we obtain a dense representation of dimension 16 for each token.
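A minimal sketch of this step, assuming a 6-entry vocabulary and the hypothetical token ids [1, 2, 3, 4, 5]. Here `torch.nn.Embedding` plays the role of the embedding matrix (randomly initialized, learned during training in a real model):

```python
import torch

torch.manual_seed(0)
vocab_size, d = 6, 16                      # assumed vocabulary size; d = 16 as in the text
token_ids = torch.tensor([1, 2, 3, 4, 5])  # hypothetical tokenized sentence

# Embedding matrix: one row of 16 values per vocabulary entry.
embedding = torch.nn.Embedding(vocab_size, d)

# Looking up the rows equals multiplying one-hot tokens by the matrix.
sentence_embed = embedding(token_ids).detach()   # [5, 16]
print(sentence_embed.shape)                       # torch.Size([5, 16])
```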

To use the attention mechanism, we need to create 3 new vectors for each token. We define 3 matrices, w_q, w_k, and w_v. When we multiply an input token by w_q, we obtain the vector q. The same goes for w_k and w_v.
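A sketch of these three projections for the first token, assuming d_k = 16 and random stand-in embeddings. The matrices use a scaled random initialization (an assumption that keeps later attention scores in a moderate range):

```python
import torch

torch.manual_seed(0)
d, d_k = 16, 16                     # d from the text; d_k = 16 is assumed
sentence_embed = torch.randn(5, d)  # stand-in for the [5, 16] embeddings

# Three learnable matrices (scaled random init, an assumption).
w_q = torch.randn(d, d_k) / d ** 0.5
w_k = torch.randn(d, d_k) / d ** 0.5
w_v = torch.randn(d, d_k) / d ** 0.5

token_1 = sentence_embed[0]   # dense vector of the first token
query_1 = token_1 @ w_q       # q = token * w_q
key_1 = token_1 @ w_k         # k = token * w_k
value_1 = token_1 @ w_v       # v = token * w_v
print(query_1.shape, key_1.shape, value_1.shape)  # torch.Size([16]) each
```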

Compute attention weights

Let’s now compute the attention weights for only the first input token of the sentence.

We need to multiply the query vector associated with token_1 (query_1) by the keys of all the tokens.

So now we need to compute all the keys (key_1, key_2, key_3, key_4, key_5). But wait, we can compute all of them at once by multiplying sentence_embed by the w_k matrix.

Let’s do the same thing with the values.
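Under the same assumptions (random stand-in embeddings, d_k = 16, scaled random weights), computing all keys and values at once is a single matrix product each; row j of the result is key_j or value_j:

```python
import torch

torch.manual_seed(0)
d, d_k = 16, 16
sentence_embed = torch.randn(5, d)    # stand-in embeddings
w_k = torch.randn(d, d_k) / d ** 0.5
w_v = torch.randn(d, d_k) / d ** 0.5

# One matrix product each: row j is key_j (or value_j).
keys = sentence_embed @ w_k           # [5, 16]
values = sentence_embed @ w_v         # [5, 16]

# Row 0 matches the key of token_1 computed on its own.
print(torch.allclose(keys[0], sentence_embed[0] @ w_k, atol=1e-5))  # True
```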

Let’s compute the first part of the attention formula.
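A sketch of the scaled scores for query_1, followed by the softmax that turns them into attention weights, under the same assumed sizes and random weights:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
d, d_k = 16, 16
sentence_embed = torch.randn(5, d)
w_q = torch.randn(d, d_k) / d ** 0.5
w_k = torch.randn(d, d_k) / d ** 0.5

query_1 = sentence_embed[0] @ w_q   # [16]
keys = sentence_embed @ w_k         # [5, 16]

# Scaled dot products of query_1 with every key.
scores_1 = keys @ query_1 / d_k ** 0.5        # [5]

# Softmax: five attention weights that sum to 1.
attn_weights_1 = F.softmax(scores_1, dim=-1)  # [5]
print(attn_weights_1)
```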

With the attention weights, we know the importance of each token. So now we multiply the value vector associated with each token by its weight and sum the results.

This gives the final context-aware vector of token_1.
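Putting the pieces together for token_1 (same assumptions as above): each value vector is scaled by its attention weight and the results are summed:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
d, d_k = 16, 16
sentence_embed = torch.randn(5, d)
w_q, w_k, w_v = (torch.randn(d, d_k) / d ** 0.5 for _ in range(3))

query_1 = sentence_embed[0] @ w_q
keys = sentence_embed @ w_k
values = sentence_embed @ w_v
attn_weights_1 = F.softmax(keys @ query_1 / d_k ** 0.5, dim=-1)   # [5]

# Scale each value vector by its weight, then sum over the tokens.
context_1 = (attn_weights_1[:, None] * values).sum(dim=0)        # [16]
print(context_1.shape)                                            # torch.Size([16])
```

The explicit broadcast-and-sum mirrors z1 = a11*v1 + ... + a15*v5; `attn_weights_1 @ values` computes the same vector in one call.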

In the same way, we could compute the context-aware dense vectors of all the other tokens. Here we always use the same matrices w_k, w_q, and w_v, so we say that we use one head.
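With a single head, the same computation for all tokens at once becomes matrix products, softmax(Q K^T / sqrt(d_k)) V. The sketch below (same assumed sizes and scaled random weights) also checks that row 0 matches the computation done for token_1 alone:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
d, d_k = 16, 16
sentence_embed = torch.randn(5, d)
w_q, w_k, w_v = (torch.randn(d, d_k) / d ** 0.5 for _ in range(3))

queries = sentence_embed @ w_q   # [5, 16], row i is query_i
keys = sentence_embed @ w_k
values = sentence_embed @ w_v

# One head, all tokens: [5, 5] attention matrix, then weighted sums of values.
attn_weights = F.softmax(queries @ keys.T / d_k ** 0.5, dim=-1)   # [5, 5]
context = attn_weights @ values                                   # [5, 16]

# Row 0 equals the context-aware vector of token_1 computed on its own.
context_1 = F.softmax(keys @ queries[0] / d_k ** 0.5, dim=-1) @ values
print(torch.allclose(context[0], context_1, atol=1e-5))          # True
```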

But we can have multiple triplets of matrices, hence multiple heads. That’s why it is called multi-head attention.

The dense vectors of an input token produced by each head are concatenated at the end and linearly transformed to get the final dense vector.

Implementing Multi-head Self-Attention

Same steps as before…

We’ll define a multi-head attention mechanism with h heads (let’s say 4 heads for this example). Each head will have its own w_q, w_k, and w_v matrices, and the outputs of the heads will be concatenated and passed through a final linear layer.
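For reference, the standard multi-head formulation from the original Transformer paper, written with this post's symbols: X is the [s, d] matrix of token embeddings (sentence_embed), W_q^{(i)}, W_k^{(i)}, W_v^{(i)} are head i's w_q, w_k, w_v of shape [d, d_k] with d_k = d/h, the softmax is applied row by row, and W_o is w_output of shape [d, d]:

$$
\mathrm{head}_i = \operatorname{softmax}\left(\frac{(X W_q^{(i)})(X W_k^{(i)})^\top}{\sqrt{d_k}}\right) X W_v^{(i)}, \qquad i = 1, \dots, h
$$

$$
\mathrm{MHSA}(X) = \operatorname{Concat}\left(\mathrm{head}_1, \dots, \mathrm{head}_h\right) W_o
$$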

Because the outputs of the heads will be concatenated and we want a final dimension of d, the dimension of each head must be d/h. Moreover, each concatenated vector goes through a linear transformation, so we need another matrix, w_output, as you can see in the formula.

Since we have 4 heads, we need 4 versions of each matrix (each with its own values). Instead of keeping separate matrices, we add a dimension: it is the same thing, but we only perform one operation. (Imagine stacking the matrices on top of one another.)
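A small sketch of the stacking trick, assuming d = 16, h = 4, and random matrices: four per-head query matrices become one tensor of shape [4, 16, 4]:

```python
import torch

torch.manual_seed(0)
h, d = 4, 16
d_k = d // h   # 4 values per head

# Four separate query matrices, one per head, each with its own values...
per_head = [torch.randn(d, d_k) / d ** 0.5 for _ in range(h)]

# ...stacked along a new leading "head" dimension.
w_query = torch.stack(per_head)   # [4, 16, 4]
print(w_query.shape)              # torch.Size([4, 16, 4])
```

Slice `w_query[i]` is exactly head i's matrix, so nothing is lost by stacking.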

For simplicity, I’m using torch’s einsum. If you’re not familiar with it, try my blog post.

The einsum operation `torch.einsum('sd,hde->hse', sentence_embed, w_query)` in PyTorch uses letters to define how to multiply and rearrange the dimensions of the tensors. Here’s what each part means:

- Input tensors:

  - `sentence_embed` with the notation `'sd'`:
    - `s` represents the number of words (sequence length), which is 5.
    - `d` represents the number of values per word (embedding size), which is 16.
    - The shape of this tensor is `[5, 16]`.

  - `w_query` with the notation `'hde'`:
    - `h` represents the number of heads, which is 4.
    - `d` represents the embedding size, which again is 16.
    - `e` represents the new size per head (d_k), which is 4.
    - The shape of this tensor is `[4, 16, 4]`.

- Output tensor:

  - The output has the notation `'hse'`:
    - `h` represents 4 heads.
    - `s` represents 5 words.
    - `e` represents 4 values per head.
    - The shape of the output tensor is `[4, 5, 4]`.
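A runnable sketch of this einsum with the shapes listed above (random values, a sentence of 5 tokens), checked against one ordinary matrix product per head:

```python
import torch

torch.manual_seed(0)
s, d, h = 5, 16, 4
d_k = d // h
sentence_embed = torch.randn(s, d)             # [5, 16]
w_query = torch.randn(h, d, d_k) / d ** 0.5    # [4, 16, 4]

# For every head h: [s, d] embeddings times that head's [d, e] matrix.
queries = torch.einsum('sd,hde->hse', sentence_embed, w_query)
print(queries.shape)   # torch.Size([4, 5, 4])

# Same result as an ordinary matrix product per head.
per_head = torch.stack([sentence_embed @ w_query[i] for i in range(h)])
print(torch.allclose(queries, per_head, atol=1e-5))   # True
```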

The einsum equation for the attention scores performs a dot product between the queries (hse) and the transposed keys (hek) to obtain scores of shape [h, seq_len, seq_len], where:

- h -> Number of heads.

- s and k -> Sequence length (number of tokens).

- e -> Dimension of each head (d_k).

The division by (d_k ** 0.5) scales the scores to stabilize gradients. Softmax is then applied to obtain the attention weights:
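A sketch of the scores and softmax for all heads at once. The einsum string 'hse,hek->hsk' is an assumption reconstructed from the index letters described above; sizes and random weights are illustrative:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
s, d, h = 5, 16, 4
d_k = d // h
sentence_embed = torch.randn(s, d)
w_query = torch.randn(h, d, d_k) / d ** 0.5
w_key = torch.randn(h, d, d_k) / d ** 0.5

queries = torch.einsum('sd,hde->hse', sentence_embed, w_query)   # [4, 5, 4]
keys = torch.einsum('sd,hde->hse', sentence_embed, w_key)        # [4, 5, 4]

# Queries (hse) times transposed keys (hek): one [s, s] score matrix per head.
scores = torch.einsum('hse,hek->hsk', queries, keys.transpose(-2, -1)) / d_k ** 0.5

# Softmax over the last axis: per head, each token's weights sum to 1.
attn_weights = F.softmax(scores, dim=-1)   # [4, 5, 5]
print(attn_weights.shape)                  # torch.Size([4, 5, 5])
```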

Now we concatenate the outputs of all the heads for token 1.
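A sketch of this step under the same assumed sizes and random weights: first each head's weighted sum of values, then the 4 per-head vectors of token 1 flattened into one vector of size h * d_k = 16:

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)
s, d, h = 5, 16, 4
d_k = d // h
sentence_embed = torch.randn(s, d)
w_query, w_key, w_value = (torch.randn(h, d, d_k) / d ** 0.5 for _ in range(3))

queries = torch.einsum('sd,hde->hse', sentence_embed, w_query)
keys = torch.einsum('sd,hde->hse', sentence_embed, w_key)
values = torch.einsum('sd,hde->hse', sentence_embed, w_value)
attn_weights = F.softmax(queries @ keys.transpose(-2, -1) / d_k ** 0.5, dim=-1)  # [4, 5, 5]

# Weighted sum of values, separately in each head.
context = attn_weights @ values            # [4, 5, 4]

# Token 1's vectors from the 4 heads, concatenated into h * d_k = 16 values.
context_1 = context[:, 0, :].reshape(-1)   # [16]
print(context_1.shape)                     # torch.Size([16])
```

Flattening the [h, e] slice in row-major order puts head 1's values first, then head 2's, and so on, which is exactly a concatenation.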

Finally, let’s multiply by the last matrix, w_output, as in the formula above.
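A sketch of the final projection, assuming random per-head context vectors of shape [h, s, d_k] = [4, 5, 4]: the heads are concatenated for every token and multiplied by w_output:

```python
import torch

torch.manual_seed(0)
s, d, h = 5, 16, 4
d_k = d // h
context = torch.randn(h, s, d_k)         # stand-in per-head context vectors [4, 5, 4]
w_output = torch.randn(d, d) / d ** 0.5  # final learnable matrix (scaled random init)

# Concatenate the heads for every token: [h, s, e] -> [s, h, e] -> [s, h * e].
concat = context.permute(1, 0, 2).reshape(s, h * d_k)   # [5, 16]

# Final dense vector of every token; row 0 belongs to token 1.
output = concat @ w_output               # [5, 16]
output_1 = output[0]
print(output.shape)                      # torch.Size([5, 16])
```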

Final Thoughts

In this blog post, I implemented a simple version of the attention mechanism. This is not how it is actually implemented in modern frameworks, but my goal is to give some insights so that anyone can understand how it works. In future articles, I’ll go through the complete implementation of a transformer architecture.

Follow me on TDS if you like this article! 😁

💼 LinkedIn | 🐦 X (Twitter) | 💻 Website

Unless otherwise noted, images are by the author