Artificial intelligence (AI) startup Contextual AI has launched a new large language model (LLM) that minimizes hallucinations using ‘RAG 2.0’, a reworked form of retrieval-augmented generation (RAG). Built by a co-inventor of RAG, the model outscored models from Google, Anthropic, and OpenAI on a factuality benchmark.

Contextual AI unveiled a ‘groundedness-based’ language model on the 4th (local time), which it says offers the highest accuracy in the industry. The model is called ‘GLM (Grounded Language Model)’.

GLM is built around the concept of groundedness: the AI answers using only information that has been explicitly provided to it. To this end, Contextual AI introduced RAG 2.0, a new approach intended to go beyond the limits of existing RAG systems.

The company was founded by RAG experts. “I’m a co-inventor of RAG,” co-founder and CEO Douwe Kiela said in an interview. “The company’s goal is to get RAG right and take it to the next level.”

In fact, he is one of the co-authors of the first RAG paper, published in May 2020.

Kiela pointed out that most RAG systems are assembled from many separate components.

For example, a model for storing information, a search database, and an AI model that generates answers each run separately and are then connected to build a system. With this approach, he said, “the individual components work, but like Frankenstein’s monster, the overall system is not optimized.”
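The stitched-together design described above can be illustrated with a minimal sketch. Everything here is a toy stand-in chosen for illustration (the names `embed`, `VectorStore`, `generate`, and `answer` are hypothetical and are not taken from Contextual AI or any real library); the point is only that each piece is built on its own and none is tuned with the others in mind.

```python
import math
from collections import Counter


def embed(text):
    """Toy stand-in for a fixed embedding model: a bag-of-words vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Toy stand-in for the search database."""

    def __init__(self, docs):
        self.items = [(doc, embed(doc)) for doc in docs]

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def generate(query, passages):
    """Toy stand-in for the answer model: it only assembles a prompt."""
    context = "\n".join(passages)
    return f"Context:\n{context}\n\nQuestion: {query}"


def answer(query, store):
    """Glue code: the components are connected, not jointly optimized."""
    return generate(query, store.search(query))
```

In a sketch like this, a poor embedding or a weak prompt in one stage silently degrades the stages after it, which is the kind of fragility the article attributes to conventional RAG systems.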

As a result, these systems lack stability and are often not trained for specific domains. In addition, complex prompting is required to obtain the desired results, and a single error can cause a range of problems. For these reasons, existing RAG systems have seen limited use in real enterprise environments.

By contrast, the company said RAG 2.0 integrates all components of the system into one and optimizes them together. Pre-training and fine-tuning are carried out so that information retrieval and processing happen within a single integrated system. The company explained that this trains the language model and the search model (retriever) at the same time, maximizing performance.

RAG 2.0 also uses a ‘Mixture-of-Retrievers’ feature that enables smarter search. When asked a question, it first plans the most appropriate search method and then finds information according to that strategy. This is similar to the way the latest AI models think.
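The plan-then-search idea can be sketched roughly as follows. This is an illustrative toy under stated assumptions, not Contextual AI's implementation: the two retrievers, the `plan` rule (use exact matching when the query contains a quoted phrase), and all names are hypothetical.

```python
def exact_retriever(query, docs):
    """Return documents that contain the quoted phrase verbatim."""
    phrase = query.split('"')[1].lower()
    return [d for d in docs if phrase in d.lower()]


def overlap_retriever(query, docs):
    """Return documents sharing words with the query, best overlap first."""
    q_terms = set(query.lower().split())
    scored = [(len(q_terms & set(d.lower().split())), d) for d in docs]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [d for score, d in ranked if score > 0]


def plan(query):
    """Hypothetical planner: choose a strategy before any search runs."""
    return "exact" if query.count('"') >= 2 else "overlap"


RETRIEVERS = {"exact": exact_retriever, "overlap": overlap_retriever}


def retrieve(query, docs):
    """Plan first, then run only the retriever the plan selected."""
    strategy = plan(query)
    return strategy, RETRIEVERS[strategy](query, docs)
```

A real planner would presumably be a learned model rather than a hand-written rule; the sketch only shows the control flow of choosing a retrieval strategy per question.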

He added that, combined with what the company calls the world’s best ‘re-ranker’, the system picks out the most important content from the search results, prioritizes the retrieved information, and raises accuracy before passing it to GLM.
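As a rough illustration of what a re-ranking step does, the sketch below re-scores candidate passages against the query and keeps only the top few. The scoring rule (share of query words found in the passage) and the function name `rerank` are assumptions made for this example; production re-rankers are typically learned models.

```python
def rerank(query, candidates, top_n=2):
    """Re-score retrieved passages and keep the top_n most relevant."""
    q_terms = set(query.lower().split())

    def score(passage):
        # Toy relevance score: fraction of query words present in the passage.
        p_terms = set(passage.lower().split())
        return len(q_terms & p_terms) / len(q_terms) if q_terms else 0.0

    ranked = sorted(candidates, key=score, reverse=True)
    return ranked[:top_n]
```

Only the passages that survive this step would be handed to the language model, so the quality of the ordering directly limits the quality of the final answer.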

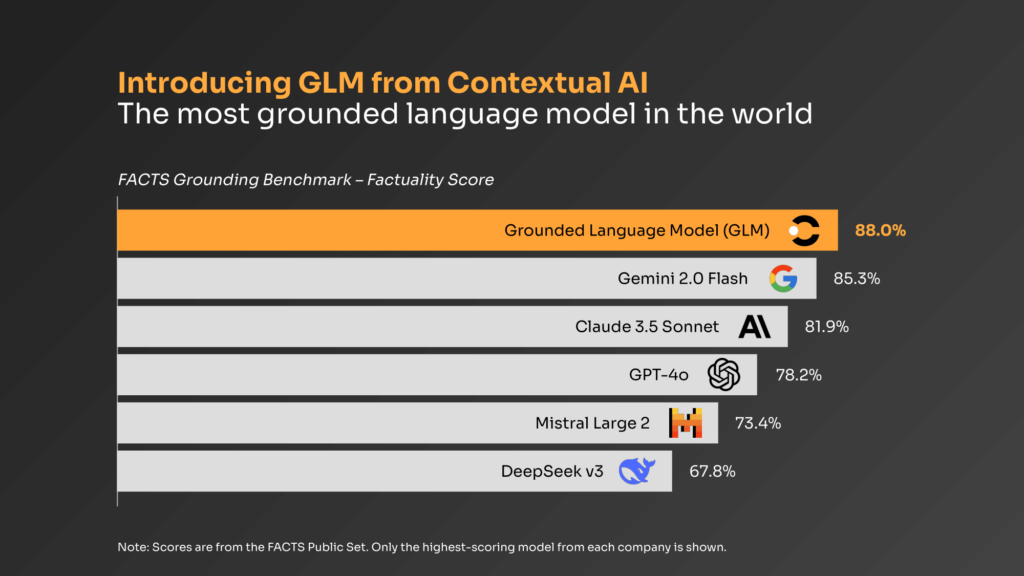

GLM outperformed all foundation models, recording state-of-the-art performance on ‘FACTS’, a leading groundedness benchmark.

It achieved a factuality score of 88%, ahead of Google’s ‘Gemini 2.0 Flash (84.6%)’, Anthropic’s ‘Claude 3.5 Sonnet (79.4%)’, and OpenAI’s ‘GPT-4o (78.8%)’.

As of today, users can create an account with Contextual AI and try GLM using the free API credits provided.

By Park Chan, reporter cpark@aitimes.com