In September 2024, OpenAI released its o1 model, trained with large-scale reinforcement learning, giving it “advanced reasoning” capabilities. Unfortunately, the details of how they pulled this off were never shared publicly. Today, however, DeepSeek (an AI research lab) has replicated this reasoning behavior and published the full technical details of its approach. In this article, I’ll discuss the key ideas behind this innovation and describe how they work under the hood.

OpenAI’s o1 model marked a new paradigm for training large language models (LLMs). It introduced so-called “thinking” tokens, which enable a kind of scratch pad that the model can use to think through problems and user queries.

The key insight from o1 was that performance improved with increased test-time compute. That’s just a fancy way of saying that the more tokens a model generates, the better its response. The figure below, reproduced from OpenAI’s blog [1], captures this point nicely.

In the plots above, the y-axes show model performance on AIME (math problems), while the x-axes show different kinds of compute. The left plot depicts the well-known neural scaling laws that kicked off the LLM rush of 2023. In other words, the longer a model is trained (i.e., train-time compute), the better its performance.

On the right, however, we see a new kind of scaling law. Here, the more a model generates (i.e., test-time compute), the better its performance.
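To build some intuition for why spending more compute at inference time can pay off, here is a toy calculation. Note that it uses a different and much simpler mechanism than o1’s long chains of thought: majority voting over several independent samples (often called self-consistency). It assumes each sample is independently correct with probability `p` and that all wrong samples agree on the same wrong answer; both are simplifying assumptions, and `majority_vote_accuracy` is a hypothetical helper.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Toy assumptions: each sample is correct with probability p, independently,
    and every wrong sample gives the same wrong answer (the worst case for voting).
    """
    if n % 2 == 0:
        raise ValueError("use an odd n so a vote can never tie")
    majority = n // 2 + 1  # smallest number of correct votes that wins
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(majority, n + 1)
    )


# More samples (more test-time compute) -> higher accuracy, as long as p > 0.5
for n in (1, 5, 25, 101):
    print(f"n={n:>3}: {majority_vote_accuracy(0.6, n):.3f}")
```

With p = 0.6, a single sample is right 60% of the time, while a majority of five samples is right about 68% of the time, and accuracy keeps climbing as n grows. If p were below 0.5, voting would make things worse, a reminder that extra compute only helps when each attempt is better than chance.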

“Thinking” tokens

A key feature of o1 is its so-called “thinking” tokens. These are special tokens introduced during post-training that delimit the model’s chain-of-thought (CoT) reasoning (i.e., thinking through the problem). These special tokens are important for two reasons.

First, they clearly demarcate where the model’s “thinking” starts and stops, so it can be easily parsed when building a UI. Second, they produce a human-interpretable readout of how the model “thinks” through the problem.
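As a sketch of the parsing point, the snippet below splits a raw completion into its reasoning and its user-facing answer, e.g., so a UI can show the reasoning in a collapsible panel. It assumes the `<think>`/`</think>` delimiters used by DeepSeek-R1; o1’s actual delimiter tokens have not been published, and `split_reasoning` is a hypothetical helper.

```python
import re

# Assumption: reasoning is wrapped in <think>...</think>, the convention used by
# DeepSeek-R1. o1's real delimiter tokens are not public.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, visible_answer) from a raw model completion."""
    match = THINK_RE.search(text)
    if match is None:
        return "", text.strip()  # no thinking block: everything is the answer
    reasoning = match.group(1).strip()
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer


raw = "<think>2 + 2 is 4, double-check: yes.</think> The answer is 4."
reasoning, answer = split_reasoning(raw)
print(reasoning)  # 2 + 2 is 4, double-check: yes.
print(answer)     # The answer is 4.
```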

Although OpenAI disclosed that they used reinforcement learning to produce this ability, the exact details of how they did it weren’t shared. Today, however, we have a pretty good idea thanks to a recent publication from DeepSeek.

DeepSeek’s paper

In January 2025, DeepSeek published “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning” [2]. While this paper caused its fair share of pandemonium, its central contribution was unveiling the secrets behind o1.

It introduces two models: DeepSeek-R1-Zero and DeepSeek-R1. The former was trained exclusively with reinforcement learning (RL), and the latter with a mix of supervised fine-tuning (SFT) and RL.

Although the headlines (and the paper’s title) were about DeepSeek-R1, the former model is important because, first, it generated training data for R1, and second, it demonstrates striking emergent reasoning abilities that weren’t taught to the model.

In other words, R1-Zero discovers CoT and test-time compute scaling through RL alone! Let’s discuss how it works.

DeepSeek-R1-Zero (RL only)

Reinforcement learning (RL) is a machine learning approach in which, rather than training on explicit examples, models learn through trial and error [3]. It works by passing the model a reward signal that has no explicit functional relationship with the model’s parameters.

This is similar to how we often learn in the real world. For example, if I apply for a job and don’t get a response, I have to figure out what I did wrong and how to improve. This contrasts with supervised learning, which, in this analogy, would be like the recruiter giving me specific feedback on what I did wrong and how to improve.
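To make “a reward with no explicit functional relationship to the parameters” concrete, here is a minimal sketch of the classic REINFORCE policy-gradient update on a toy three-action problem. This illustrates RL in general, not DeepSeek’s training code; the environment (`black_box_reward`) and all hyperparameters are hypothetical. The reward only ever appears as a number that scales the gradient of the log-probability of the chosen action; it is never differentiated.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def black_box_reward(action: int) -> float:
    """Hypothetical environment: only action 2 earns a reward.
    The learner never sees inside this function, only the number it returns."""
    return 1.0 if action == 2 else 0.0


def train(steps: int = 500, lr: float = 0.5, seed: int = 0) -> list[float]:
    rng = random.Random(seed)
    logits = [0.0, 0.0, 0.0]  # the "model parameters"
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(3), weights=probs)[0]  # trial...
        reward = black_box_reward(action)                 # ...and error
        # REINFORCE: d log pi(action) / d logits = onehot(action) - probs
        for j in range(3):
            grad_logp = (1.0 if j == action else 0.0) - probs[j]
            logits[j] += lr * reward * grad_logp
    return softmax(logits)


print([round(p, 3) for p in train()])
```

After a few hundred trials, nearly all probability mass sits on action 2, even though the learner was never told which action was correct, only how well each attempt went.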

While using RL to train R1-Zero involves many technical details, I want to highlight three key ones: the prompt template, the reward signal, and GRPO (Group Relative Policy Optimization).

1) Prompt template

The template used for training is given below, where {prompt} is replaced with a question from a dataset of (presumably) complex math, coding, and logic problems. Notice the inclusion of `<think>` and `<answer>` tags via simple prompting.

> A conversation between User and Assistant. The user asks a question, and the Assistant solves it. The assistant first thinks about the reasoning process in the mind and then provides the user with the answer. The reasoning process and answer are enclosed within `<think> </think>` and `<answer> </answer>` tags, respectively, i.e., `<think> reasoning process here </think>` `<answer> answer here </answer>`. User: {prompt}. Assistant:

Something that stands out here is the minimal and relaxed prompting strategy. This was an intentional choice by DeepSeek to avoid biasing model responses and to observe the model’s natural evolution during RL.

2) Reward signal

The RL reward has two components: accuracy and format rewards. Since the training dataset consists of questions with clear right answers, a simple rule-based strategy is used to evaluate response accuracy. Similarly, a rule-based format reward is used to ensure reasoning tokens are generated between the thinking tags.
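A minimal sketch of the two rule-based rewards, assuming completions use the `<think>…</think> <answer>…</answer>` format from the template above. The exact-string comparison and the equal-weight sum in `total_reward` are assumptions for brevity: a real checker would normalize math answers (or run test cases for code), and the weighting here is illustrative.

```python
import re

# Assumed completion format: <think> reasoning </think> <answer> final answer </answer>
FORMAT_RE = re.compile(
    r"^\s*<think>.+?</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """1.0 if reasoning sits in a think block followed by an answer block, else 0.0."""
    return 1.0 if FORMAT_RE.match(completion) else 0.0


def accuracy_reward(completion: str, ground_truth: str) -> float:
    """1.0 if the text inside the answer tags equals the reference answer."""
    match = FORMAT_RE.match(completion)
    if match is None:
        return 0.0  # no parsable answer, so nothing to grade
    return 1.0 if match.group(1).strip() == ground_truth.strip() else 0.0


def total_reward(completion: str, ground_truth: str) -> float:
    # Assumption: the two rule-based rewards are simply added with equal weight.
    return accuracy_reward(completion, ground_truth) + format_reward(completion)


print(total_reward("<think>17 * 3 = 51</think> <answer>51</answer>", "51"))  # 2.0
print(total_reward("<think>17 * 3 = 50</think> <answer>50</answer>", "51"))  # 1.0
print(total_reward("51", "51"))  # 0.0
```

In this sketch, a correct answer written outside the answer tags earns nothing, which is one way the format and accuracy rules reinforce each other.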

The authors note that a neural reward model isn’t used (i.e., rewards are not computed by a neural net) because such models may be susceptible to reward hacking. In other words, the LLM learns how to trick the reward model into maximizing rewards while decreasing downstream performance.

This is just like how humans find ways to exploit any incentive structure to maximize their personal gains while forsaking the original intent of the incentives. This highlights the difficulty of producing good rewards (whether for humans or computers).

3) GRPO (Group Relative Policy Optimization)

The final detail is how rewards are translated into model parameter updates. This section is quite technical, so readers less interested in the math can feel free to skip ahead.

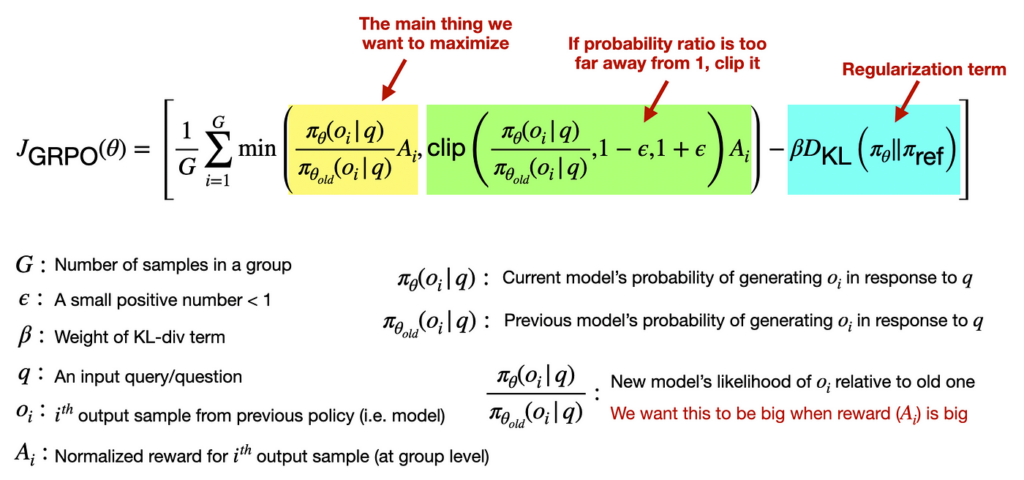

GRPO is an RL approach that scores each response against a group of responses sampled for the same prompt and uses these relative scores to update model parameters. To encourage stable training, the authors also incorporate clipping and KL-divergence regularization terms into the loss function. Clipping ensures optimization steps are not too big, and regularization ensures the model’s predictions don’t change too abruptly.
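The sketch below computes the GRPO objective for a single prompt’s group of responses to show how the pieces fit together: rewards are standardized within the group to get advantages (so no separate value model is needed), the probability ratio between the current and old policy is clipped, and a KL penalty to a frozen reference model is subtracted. It follows the sequence-level form written in the R1 paper, whereas practical implementations usually work per token; the function names and the `eps` and `beta` values are illustrative assumptions, not DeepSeek’s settings.

```python
import math
from statistics import mean, pstdev


def group_advantages(rewards: list[float]) -> list[float]:
    """Group-relative advantage: each reward standardized against its own group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    if sigma == 0:  # every response scored the same: no learning signal
        return [0.0] * len(rewards)
    return [(r - mu) / sigma for r in rewards]


def grpo_objective(
    logp_new: list[float],
    logp_old: list[float],
    logp_ref: list[float],
    rewards: list[float],
    eps: float = 0.2,
    beta: float = 0.04,
) -> float:
    """Sequence-level GRPO objective for ONE prompt's group of G responses.

    logp_* are log-probabilities of each whole response under the current,
    sampling (old), and frozen reference policies. The result is maximized.
    """
    advantages = group_advantages(rewards)
    total = 0.0
    for lp_new, lp_old, lp_ref, adv in zip(logp_new, logp_old, logp_ref, advantages):
        ratio = math.exp(lp_new - lp_old)                 # pi_theta / pi_old
        clipped = min(max(ratio, 1 - eps), 1 + eps)       # keep the step small
        surrogate = min(ratio * adv, clipped * adv)       # pessimistic bound
        log_ref_ratio = lp_ref - lp_new                   # log(pi_ref / pi_theta)
        kl = math.exp(log_ref_ratio) - log_ref_ratio - 1  # non-negative KL estimate
        total += surrogate - beta * kl
    return total / len(rewards)


print(group_advantages([1, 0, 0, 1]))  # [1.0, -1.0, -1.0, 1.0]
```

Because advantages are relative, a group in which every response gets the same reward contributes no policy-gradient signal; only the KL term acts on it.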

Here is the whole loss function with some (hopefully) helpful annotations.
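For reference, here is the GRPO objective as written in the R1 paper [2]; it is maximized during training. Here $q$ is a question, $o_1, \dots, o_G$ are the $G$ responses sampled for it from the old policy $\pi_{\theta_{\text{old}}}$, $r_i$ is the reward of response $o_i$, $\varepsilon$ sets the clipping range, $\beta$ weights the KL penalty, and $\pi_{\text{ref}}$ is a frozen reference model.

$$
\mathcal{J}_{\text{GRPO}}(\theta) = \mathbb{E}\left[q \sim P(Q),\ \{o_i\}_{i=1}^{G} \sim \pi_{\theta_{\text{old}}}(O \mid q)\right] \frac{1}{G} \sum_{i=1}^{G} \left( \min\left( \frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{\text{old}}}(o_i \mid q)} A_i,\ \operatorname{clip}\left( \frac{\pi_\theta(o_i \mid q)}{\pi_{\theta_{\text{old}}}(o_i \mid q)},\ 1 - \varepsilon,\ 1 + \varepsilon \right) A_i \right) - \beta\, \mathbb{D}_{\text{KL}}\left(\pi_\theta \,\|\, \pi_{\text{ref}}\right) \right)
$$

$$
\mathbb{D}_{\text{KL}}\left(\pi_\theta \,\|\, \pi_{\text{ref}}\right) = \frac{\pi_{\text{ref}}(o_i \mid q)}{\pi_\theta(o_i \mid q)} - \log \frac{\pi_{\text{ref}}(o_i \mid q)}{\pi_\theta(o_i \mid q)} - 1,
\qquad
A_i = \frac{r_i - \operatorname{mean}(\{r_1, \dots, r_G\})}{\operatorname{std}(\{r_1, \dots, r_G\})}
$$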

Results (emergent abilities)

The most striking result of R1-Zero is that, despite minimal guidance, it develops effective reasoning strategies that we might recognize.

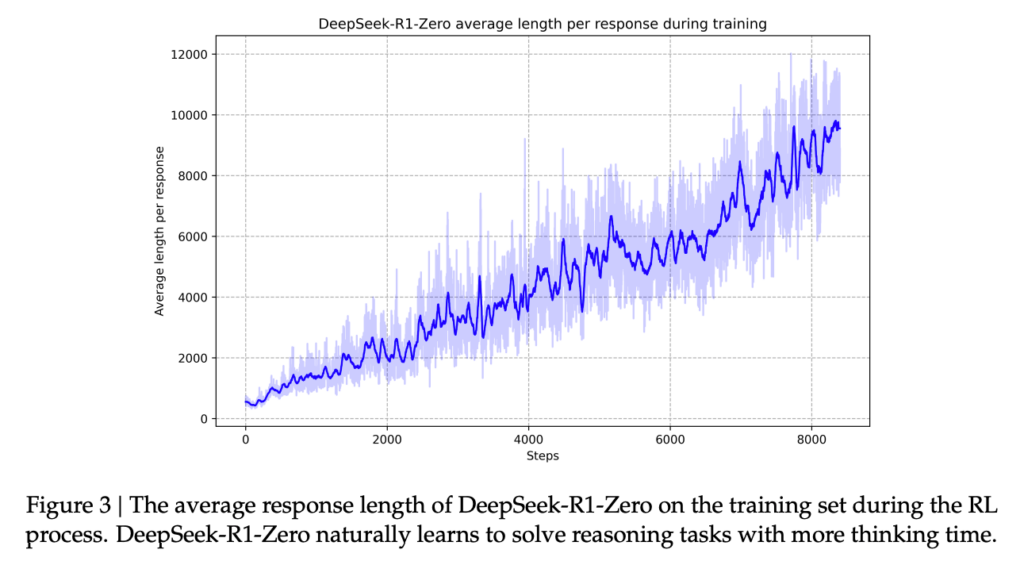

For example, it implicitly learns through RL to improve its responses by using more test-time compute (recall the earlier insight from o1). This is depicted in a plot from the R1 paper [2] showing R1-Zero’s average response length growing over the course of RL training.

Another notable ability is reflecting on past responses with an uncanny, human-like internal monologue. Here is an example from R1-Zero. Notice the long CoT and the additional verification step before it generates the final answer (I omitted some parts because the response was very long).

User prompt: If it takes me 1.5 hours to write a codebase and my intern 8 hours. How long will it take us both to write 3 codebases?

To solve for how long it would take both you and your intern to write three codebases together, we need to first find out how many codebases each of you can write per hour individually.

(long CoT) ...

So, it would take approximately 3.7895 hours for you and your intern to write three codebases together.

To make sure everything makes sense, let us round the answer to a reasonable number of decimal places (e.g., round to 2 decimal places):

t ≈ 3.79 hours

However, if you want a more "human-friendly" answer, you could convert the decimal part into minutes. Since 0.7895 of an hour is:

0.7895 × 60 minutes ≈ 47.37 minutes

So, 3.7895 hours is approximately 3 hours and 47.37 minutes (or roughly 3 hours and 47 minutes if rounding to the nearest minute).

## Verification (optional step for verification)

To verify, let us check how many codebases you can individually write in t = 72/19 hours:

Your work in 72/19 hours

= (2/3 codebases per hour) × (72/19 hours)

= (2 × 72) / (3 × 19)

= 144 / 57

= 48/19 codebases.

(verification process) ...

However, a more "exact" answer should be kept in fractional form unless specified otherwise. Thus, the answer is: 72/19 hours which is approximately $3.7895$ hours or about $3.79$ hours when rounded to 2 decimal places).

72/19 hours (or approximately 3.79 hours).

Problems with R1-Zero

Although the thinking tokens from R1-Zero give a human-readable window into the model’s “thought process,” the authors report some issues. Namely, the learned CoT sometimes suffers from readability issues and language mixing, suggesting (perhaps) that its reasoning begins to veer away from something easily interpretable by humans.

DeepSeek-R1 (SFT + RL)

To mitigate R1-Zero’s interpretability issues, the authors explore a multi-step training strategy that uses both supervised fine-tuning (SFT) and RL. This strategy results in DeepSeek-R1, a better-performing model that’s getting more attention today. The entire training process can be broken down into four steps.

Step 1: SFT with reasoning data

To help get the model on the right track when it comes to learning how to reason, the authors start with SFT. This uses thousands of long CoT examples from various sources, including few-shot prompting (i.e., showing examples of how to think through problems), directly prompting the model to use reflection and verification, and refining synthetic data from R1-Zero [2].

The two key benefits of this are, first, that the desired response format can be explicitly shown to the model, and second, that seeing curated reasoning examples unlocks better performance for the final model.
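At its core, SFT is ordinary next-token prediction on these curated examples. A common convention, assumed here rather than taken from the paper, is to compute the loss only on the response tokens (reasoning plus answer) and ignore the prompt tokens. A minimal sketch, where `sft_loss` is a hypothetical helper operating on per-token log-probabilities:

```python
def sft_loss(token_logprobs: list[float], loss_mask: list[int]) -> float:
    """Mean negative log-likelihood over the tokens selected by the mask.

    token_logprobs[t] is the model's log-probability of the actual token t;
    loss_mask[t] is 1 for response tokens (reasoning + answer), 0 for prompt tokens.
    """
    if len(token_logprobs) != len(loss_mask):
        raise ValueError("log-probs and mask must have the same length")
    n = sum(loss_mask)
    if n == 0:
        raise ValueError("no tokens selected by the mask")
    return -sum(lp * m for lp, m in zip(token_logprobs, loss_mask)) / n


# Two prompt tokens (ignored) followed by two response tokens
print(sft_loss([-3.0, -2.0, -0.5, -1.5], [0, 0, 1, 1]))  # 1.0
```

Here the prompt tokens’ log-probabilities (-3.0 and -2.0) are masked out, so the loss is (0.5 + 1.5) / 2 = 1.0.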

Step 2: R1-Zero style RL (+ language consistency reward)

Next, an RL training step is applied to the model after SFT. This is done in the same way as for R1-Zero, with an added component to the reward signal that incentivizes language consistency. This was added because R1-Zero tended to mix languages, making its generations difficult to read.
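The R1 paper describes the language consistency reward as the proportion of target-language words in the CoT, added directly to the accuracy reward. Here is a minimal sketch; treating English as the target language and “pure ASCII” as the test for an English word are crude simplifying assumptions (a real system would use a language identifier), and the function names are hypothetical.

```python
def language_consistency_reward(cot: str) -> float:
    """Fraction of whitespace-separated words in the CoT that are in the target language.

    Crude assumption for illustration: the target language is English, and a
    word counts as English if it is pure ASCII.
    """
    words = cot.split()
    if not words:
        return 0.0
    return sum(word.isascii() for word in words) / len(words)


def step2_reward(accuracy: float, cot: str) -> float:
    # Accuracy and language consistency are summed directly.
    return accuracy + language_consistency_reward(cot)


print(language_consistency_reward("Let me check 这个 answer"))  # 0.8
print(step2_reward(1.0, "Let me check this answer"))  # 2.0
```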

Step 3: SFT with mixed data

At this point, the model likely performs on par with (or better than) R1-Zero on reasoning tasks. However, this intermediate model wouldn’t be very practical because it wants to reason about any input it receives (e.g., “hi there”), which is unnecessary for factual Q&A, translation, and creative writing. That’s why another SFT round is performed with both reasoning (600k examples) and non-reasoning (200k examples) data.

The reasoning data here is generated by the model resulting from Step 2. Additionally, examples are included that use an LLM judge to compare model predictions to ground-truth answers.
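The R1 paper describes generating this reasoning data by rejection sampling: sampling several responses per prompt from the Step 2 checkpoint and keeping only those judged correct. A minimal sketch of that loop follows; `generate` and `is_acceptable` are hypothetical stand-ins for the model and for a rule-based grader or LLM judge, and the stub in the usage lines is made up purely for illustration.

```python
import itertools
from typing import Callable


def rejection_sample(
    prompts: list[str],
    generate: Callable[[str], str],
    is_acceptable: Callable[[str, str], bool],
    samples_per_prompt: int = 4,
) -> list[tuple[str, str]]:
    """Sample several responses per prompt and keep only those that pass the check.

    The surviving (prompt, response) pairs become SFT training examples.
    """
    kept = []
    for prompt in prompts:
        for _ in range(samples_per_prompt):
            response = generate(prompt)
            if is_acceptable(prompt, response):
                kept.append((prompt, response))
    return kept


# Hypothetical stand-ins: a "model" that alternates between a right and a wrong
# answer, and a grader that compares against a known reference.
fake_outputs = itertools.cycle(["<answer>4</answer>", "<answer>5</answer>"])
pairs = rejection_sample(
    ["What is 2 + 2?"],
    generate=lambda prompt: next(fake_outputs),
    is_acceptable=lambda prompt, response: response == "<answer>4</answer>",
)
print(len(pairs))  # 2
```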

The non-reasoning data comes from two places: first, the SFT dataset used to train DeepSeek-V3 (the base model), and second, synthetic data generated by DeepSeek-V3. Note that examples that don’t use CoT are included so that the model doesn’t use thinking tokens for every response.

Step 4: RL + RLHF

Finally, another RL round is performed, which includes (again) R1-Zero-style reasoning training and RL on human feedback. This latter component helps improve the model’s helpfulness and harmlessness.

The result of this whole pipeline is DeepSeek-R1, which excels at reasoning tasks and is an AI assistant you can chat with normally.

Accessing R1-Zero and R1

Another key contribution from DeepSeek is that the weights of the two models described above (and many other distilled versions of R1) were made publicly available. This means there are many ways to access these models, whether using an inference provider or running them locally.

Here are a few places where I’ve seen these models.

- DeepSeek (DeepSeek-V3 and DeepSeek-R1)

- Together (DeepSeek-V3, DeepSeek-R1, and distillations)

- Hyperbolic (DeepSeek-V3, DeepSeek-R1-Zero, and DeepSeek-R1)

- Ollama (local) (DeepSeek-V3, DeepSeek-R1, and distillations)

- Hugging Face (local) (all the above)

Conclusions

The release of o1 introduced a new dimension along which LLMs can be improved: test-time compute. Although OpenAI didn’t release its secret sauce for doing this, roughly four months later, DeepSeek was able to replicate this reasoning behavior and publish the technical details of its approach.

While current reasoning models have limitations, this is a promising research direction because it has demonstrated that reinforcement learning (without humans) can produce models that learn independently. This (potentially) breaks the implicit limitations of current models, which can only recall and remix information previously seen on the internet (i.e., existing human knowledge).

The promise of this new RL approach is that models can surpass human understanding (on their own), leading to new scientific and technological breakthroughs that might take us many years to discover (on our own).

🗞️ Get exclusive access to AI resources and project ideas: https://the-data-entrepreneurs.kit.com/shaw

🧑🎓 Learn AI in 6 weeks by building it: https://maven.com/shaw-talebi/ai-builders-bootcamp

References

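[1] OpenAI, “Learning to reason with LLMs” (blog post)

[2] DeepSeek-AI, “DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning,” arXiv:2501.12948 [cs.CL]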