Although the deepfaking of private individuals has become a growing public concern and is increasingly being outlawed in various regions, actually proving that a user-created model (such as one enabling revenge porn) was specifically trained on a particular person’s images remains extremely difficult.

To put the problem in context: a key element of a deepfake attack is falsely claiming that an image or video depicts a specific person. Simply stating that someone in a video is identity #A, rather than just a lookalike, is enough to create harm, and no AI is necessary in this scenario.

However, if an attacker generates AI images or videos using models trained on a real person’s data, social media and search engine face recognition systems will automatically link the faked content to the victim, without requiring names in posts or metadata. The AI-generated visuals alone ensure the association.

The more distinct the person’s appearance, the more inevitable this becomes, until the fabricated content appears in image searches and ultimately reaches the victim.

Face to Face

The most common means of disseminating identity-focused models is currently Low-Rank Adaptation (LoRA), in which the user trains on a small number of images for a few hours against the weights of a far larger foundation model such as Stable Diffusion (for static images, mostly) or Hunyuan Video, for video deepfakes.
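For readers unfamiliar with why a LoRA is so small and quick to train, the following is a minimal sketch of the general low-rank idea, not of any particular trainer. The frozen base weight matrix is left alone, and only two thin matrices are learned, with their product added on top. The matrix sizes, the `alpha` scaling value and the helper names are illustrative assumptions.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_weight(base, lora_b, lora_a, alpha, rank):
    """Return base + (alpha / rank) * (B @ A); the base matrix is not modified."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(base, delta)]

def param_counts(d_out, d_in, rank):
    """Trainable values for a full-matrix update versus a rank-limited update."""
    return d_out * d_in, rank * (d_out + d_in)

rng = random.Random(0)
d_out, d_in, rank = 6, 8, 2  # toy sizes, chosen only for illustration
base = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
lora_a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]  # rank x d_in
lora_b = [[0.0] * rank for _ in range(d_out)]  # d_out x rank, starts at zero

# Before any training step, B is all zeros, so the adapted layer equals the base layer.
adapted = lora_weight(base, lora_b, lora_a, alpha=4, rank=rank)

# For a hypothetical 768 x 768 layer at rank 4, compare the number of trainable values.
full, low_rank = param_counts(768, 768, 4)
print(full, low_rank)
```

Because only the two thin matrices are distributed, the resulting file is a small fraction of the size of the base model, which is part of what makes LoRAs easy to share.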

The most common subjects of LoRAs, including the new breed of video-based LoRAs, are female celebrities, whose fame exposes them to this kind of treatment with less public criticism than in the case of ‘unknown’ victims, due to the assumption that such derivative works are covered under ‘fair use’ (at least in the USA and Europe).

There is no such public forum for the non-celebrity victims of deepfaking, who only surface in the media when prosecution cases arise, or when the victims speak out in popular outlets.

However, in both scenarios, the models used to fake the target identities have ‘distilled’ their training data so completely into the latent space of the model that it is difficult to identify the source images that were used.

If it were possible to do so within an acceptable margin of error, this would enable the prosecution of those who share LoRAs, since it not only proves the intent to deepfake a particular identity (i.e., that of a specific ‘unknown’ person, even if the malefactor never names them during the defamation process), but also exposes the uploader to copyright infringement charges, where applicable.

The latter would be useful in jurisdictions where legal regulation of deepfaking technologies is lacking or lagging behind.

Over-Exposed

The objective of training a foundation model, such as the multi-gigabyte base model that a user might download from Hugging Face, is that the model should become well-generalized and ductile. This involves training on an adequate number of diverse images, with appropriate settings, and ending training before the model ‘overfits’ to the data.

An overfitted model has seen the data so many (excessive) times during the training process that it will tend to reproduce images that are very similar to its training images, thereby exposing the source of the training data.

Source: https://arxiv.org/pdf/2301.13188

However, overfitted models are generally discarded by their creators rather than distributed, since they are in any case unfit for purpose. Therefore this is an unlikely forensic ‘windfall’. In any case, the principle applies more to the expensive and high-volume training of foundation models, where multiple versions of the same image that have crept into a huge source dataset may make certain training images easy to invoke (see the source linked above).

Things are a little different in the case of LoRA and Dreambooth models (though Dreambooth has fallen out of fashion due to its large file sizes). Here, the user selects a very limited number of diverse images of a subject, and uses these to train a LoRA.

Frequently the LoRA will have a trained-in trigger-word. However, very often the specifically-trained subject will appear in generated output even when the trigger word is not used, because even a well-balanced (i.e., not overfitted) LoRA is somewhat ‘fixated’ on the material it was trained on, and will tend to include it in any output.

This predisposition, combined with the limited image numbers that are optimal for a LoRA dataset, exposes the model to forensic analysis, as we shall see.

Unmasking the Data

These matters are addressed in a new paper from Denmark, which offers a methodology to identify source images (or groups of source images) in a black-box Membership Inference Attack (MIA). The technique at least partly involves the use of custom-trained models that are designed to help expose source data by generating their own ‘deepfakes’.

Source: https://arxiv.org/pdf/2502.11619

Though the work is a most interesting contribution to the literature around this particular topic, it is also an inaccessible and tersely-written paper that needs considerable decoding. Therefore we’ll cover at least the basic principles behind the project here, and a selection of the results obtained.

In effect, if someone fine-tunes an AI model on your face, the authors’ method can help prove it by looking for telltale signs of memorization in the model’s generated images.

In the first instance, a target AI model is fine-tuned on a dataset of face images, making it more likely to reproduce details from those images in its outputs. Subsequently, a classifier attack model is trained using AI-generated images from the target model as ‘positives’ (suspected members of the training set) and other images from a different dataset as ‘negatives’ (non-members).

By learning the subtle differences between these groups, the attack model can predict whether a given image was part of the original fine-tuning dataset.

The attack is most effective in cases where the AI model has been fine-tuned extensively, meaning that the more a model is specialized, the easier it is to detect whether certain images were used. This generally applies to LoRAs designed to recreate celebrities or private individuals.

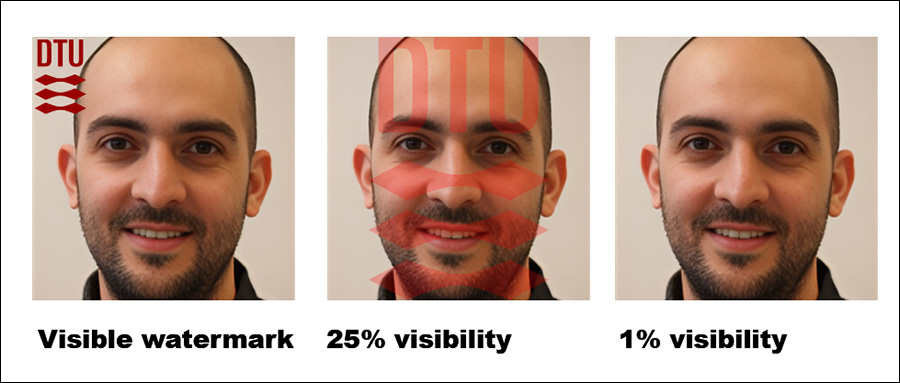

The authors also found that adding visible watermarks to training images makes detection easier still – though hidden watermarks don’t help as much.

Impressively, the approach is tested in a black-box setting, meaning it works without access to the model’s internal details, only its outputs.

The method arrived at is computationally intense, as the authors concede; nevertheless, the value of this work lies in indicating the direction for further research, and in proving that data can realistically be extracted to an acceptable tolerance. Given its seminal nature, therefore, it need not run on a smartphone at this stage.

Method/Data

Several datasets from the Technical University of Denmark (DTU, the host institution for the paper’s three researchers) were used in the study, for fine-tuning the target model and for training and testing the attack model.

Datasets used were derived from DTU Orbit:

The base image set.

Images scraped from DTU Orbit.

A partition of DDTU used to fine-tune the target model.

A partition of DDTU that was not used to fine-tune any image generation model and was instead used to test or train the attack model.

A partition of DDTU with visible watermarks used to fine-tune the target model.

A partition of DDTU with hidden watermarks used to fine-tune the target model.

Images generated by a Latent Diffusion Model (LDM) which has been fine-tuned on the DseenDTU image set.

The datasets used to fine-tune the target model consist of image-text pairs captioned by the BLIP captioning model (perhaps not by coincidence one of the most popular uncensored models in the casual AI community).

BLIP was set to prepend a fixed phrase to each description.

Additionally, several datasets from Aalborg University (AAU) were employed in the tests, all derived from the AAU VBN corpus:

Images scraped from AAU VBN.

A partition of DAAU used to fine-tune the target model.

A partition of DAAU that is not used to fine-tune any image generation model, but rather is used to test or train the attack model.

Images generated by an LDM fine-tuned on the DseenAAU image set.

As with the earlier sets, a corresponding phrase was used. This ensured that all labels in the DTU dataset followed a single format, reinforcing the dataset’s core characteristics during fine-tuning.

Tests

Multiple experiments were conducted to evaluate how well the membership inference attacks performed against the target model. Each test aimed to determine whether it was possible to carry out a successful attack within a schema in which the target model is fine-tuned on an image dataset that was obtained without authorization.

With the fine-tuned model queried to generate output images, these images are then used as positive examples for training the attack model, while additional unrelated images are included as negative examples.

The attack model is trained using supervised learning and is then tested on new images to determine whether they were originally part of the dataset used to fine-tune the target model. To evaluate the accuracy of the attack, 15% of the test data is set aside for validation.
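The labelling scheme described above can be sketched in a few lines. This is an illustrative reading rather than the authors’ pipeline: the file paths and the function name are hypothetical, and the 15% hold-out is applied here to the whole labelled pool, which is only one interpretation of the split the paper describes.

```python
import random

def build_attack_dataset(generated, non_members, holdout_fraction=0.15, seed=0):
    """Label target-model outputs 1 and unrelated images 0, then hold out a share."""
    examples = [(path, 1) for path in generated] + [(path, 0) for path in non_members]
    random.Random(seed).shuffle(examples)  # fixed seed so the split is repeatable
    n_holdout = round(len(examples) * holdout_fraction)
    return examples[n_holdout:], examples[:n_holdout]

# Hypothetical file names standing in for real image collections.
generated = [f"generated/{i:03d}.png" for i in range(100)]   # positives
non_members = [f"unseen/{i:03d}.png" for i in range(100)]    # negatives

train_set, holdout_set = build_attack_dataset(generated, non_members)
print(len(train_set), len(holdout_set))
```

The negatives need not be real photographs; the same function accepts AI-generated non-member images in the `non_members` argument.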

Since the target model is fine-tuned on a known dataset, the actual membership status of each image is already established when creating the training data for the attack model. This controlled setup allows for a clear assessment of how effectively the attack model can distinguish between images that were part of the fine-tuning dataset and those that were not.

For these tests, Stable Diffusion V1.5 was used. Though this rather old model crops up a lot in research, due to the need for consistent testing and the extensive corpus of prior work that uses it, this is an appropriate use case: V1.5 remained popular for LoRA creation in the Stable Diffusion hobbyist community for a long time, despite multiple subsequent version releases, and even despite the advent of Flux, because the model is completely uncensored.

The researchers’ attack model was based on ResNet-18, with the model’s pretrained weights retained. ResNet-18’s 1000-neuron last layer was replaced with a fully-connected layer with two neurons. Training loss was categorical cross-entropy, and the Adam optimizer was used.

For each test, the attack model was trained five times using different random seeds to compute 95% confidence intervals for the key metrics. Zero-shot classification with the CLIP model was used as the baseline.
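As a rough illustration of how five seeded runs can yield a 95% confidence interval, the sketch below uses a Student-t interval on the mean. The paper is not quoted here as specifying the interval method, so the t-based approach is an assumption, and the five scores are invented placeholder values, not results from the study.

```python
import statistics

# Two-sided 95% Student-t critical value for 4 degrees of freedom (five runs).
T_CRIT_95_DF4 = 2.776

def mean_ci95(scores):
    """Return (mean, half_width) of a t-based 95% interval for exactly five runs."""
    if len(scores) != 5:
        raise ValueError("the critical value above is only valid for five runs")
    mean = statistics.mean(scores)
    half_width = T_CRIT_95_DF4 * statistics.stdev(scores) / len(scores) ** 0.5
    return mean, half_width

# Placeholder scores, one per random seed; these are not figures from the paper.
seed_scores = [0.90, 0.92, 0.91, 0.89, 0.93]
mean, half_width = mean_ci95(seed_scores)
print(f"{mean:.3f} +/- {half_width:.3f}")
```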

The researchers’ attack method proved most effective when targeting fine-tuned models, particularly those trained on a specific set of images, such as an individual’s face. However, while the attack can determine whether a dataset was used, it struggles to identify individual images within that dataset.

In practical terms, the latter is not necessarily a hindrance to using an approach such as this forensically; while there is relatively little value in establishing that a famous dataset such as ImageNet was used in a model, an attacker targeting a private individual (not a celebrity) will tend to have far less choice of source data, and will need to fully exploit available data groups such as social media albums and other online collections. These effectively create a ‘hash’ which can be uncovered by the methods outlined.

The paper notes that another way to improve accuracy is to use AI-generated images as ‘non-members’, rather than relying solely on real images. This prevents artificially high success rates that could otherwise skew the results.

A further factor that significantly influences detection, the authors note, is watermarking. When training images contain visible watermarks, the attack becomes highly effective, while hidden watermarks offer little to no advantage.

Finally, the level of guidance in text-to-image generation also plays a role, with the best balance found at a guidance scale of around 8. Even when no direct prompt is used, a fine-tuned model still tends to produce outputs that resemble its training data, reinforcing the effectiveness of the attack.
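For context, the guidance scale in Stable Diffusion pipelines is normally the classifier-free guidance weight, which pushes each denoising step away from the unconditional prediction and towards the prompt-conditioned one. The sketch below shows that standard formula on toy numbers; it assumes this is the quantity the paper varies, and the function name and values are illustrative.

```python
def guided_noise(eps_uncond, eps_cond, guidance_scale):
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]

# Toy noise predictions for a two-element latent (illustrative values only).
eps_uncond = [0.0, 1.0]
eps_cond = [1.0, 1.5]

unguided = guided_noise(eps_uncond, eps_cond, 1.0)  # scale 1 reproduces eps_cond
strong = guided_noise(eps_uncond, eps_cond, 8.0)    # scale in the range noted above
print(unguided, strong)
```

A larger scale exaggerates whatever the conditioning contributes, which is one plausible reason the setting affects how strongly the fine-tuning data shows through in the output.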

Conclusion

It’s a shame that this interesting paper has been written in such an inaccessible manner, as it should be of some interest to privacy advocates and casual AI researchers alike.

Though membership inference attacks may turn out to be an interesting and fruitful forensic tool, it is more important, perhaps, for this research strand to develop applicable broad principles, to prevent it ending up in the same game of whack-a-mole that has occurred for deepfake detection in general, when the release of a newer model adversely affects detection and similar forensic systems.

Since there is some evidence of a higher-level guiding principle gleaned in this new research, we can hope to see more work in this direction.