Interest in DeepSeek's reasoning model 'R1' is soaring, and comparisons with OpenAI's 'o1' are being made in earnest. On top of the benchmarks DeepSeek has already released, newly added benchmark results keep accumulating.

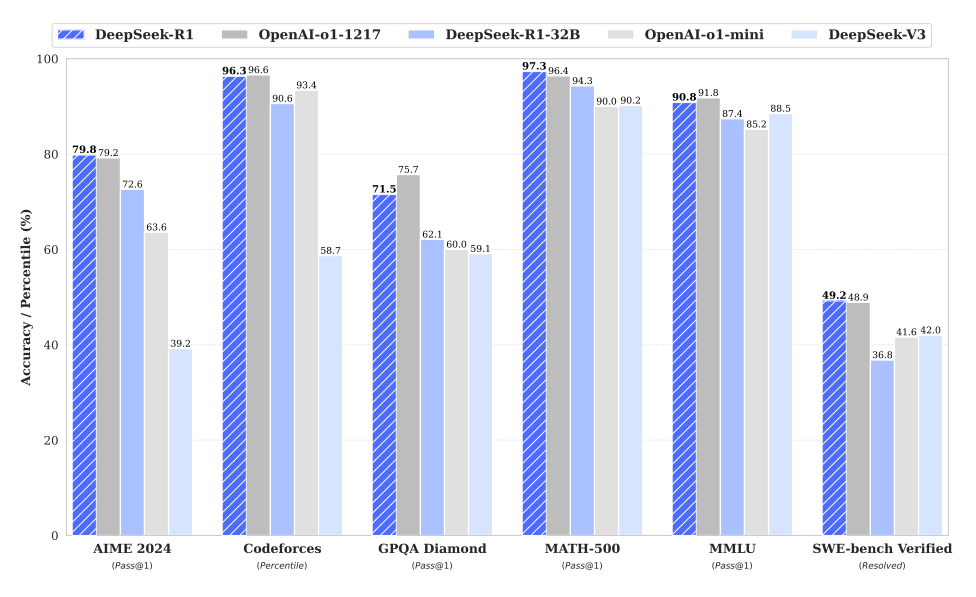

First, in the benchmarks DeepSeek released on the 20th (local time), R1 scored highest on three of the six tests. However, o1 also ranked first on three, so overall the benchmarks showed no clear winner.

R1 was ahead of o1 on 'AIME', which tests mathematical competition problems, on 'MATH-500' (97.3%), which consists of 500 varied math problems, and on 'SWE-bench Verified' (49.2%), which measures how well an AI model solves real software problems.

o1, on the other hand, outperformed R1 on 'Codeforces', which evaluates coding skills, 'GPQA Diamond', which assesses doctoral-level scientific reasoning, and 'MMLU', which tests a model's general knowledge.

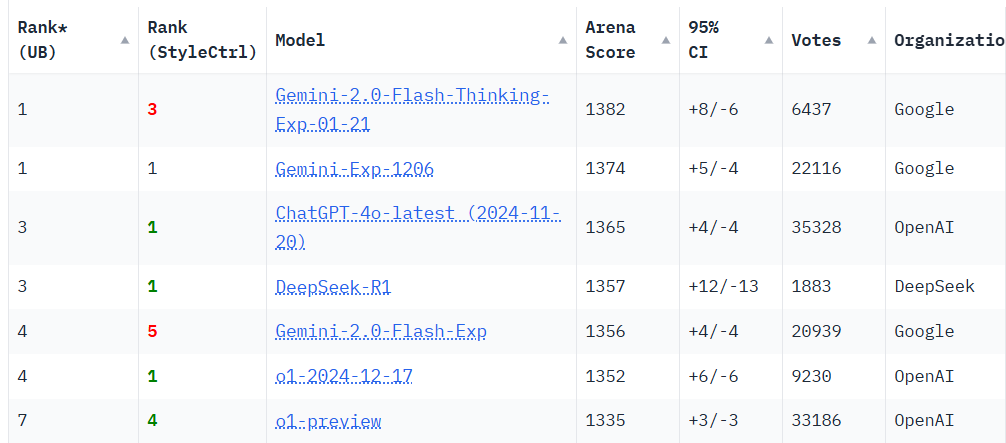

Recently, competition has also continued on 'Chatbot Arena', which has served as the test bed for the newest models. As of the 27th, 'Gemini 2.0 Flash Thinking' (1382 points) ranked first on the LM Arena leaderboard.

However, R1 and 'GPT-4o' trail only slightly, with scores of 1357 and 1365, respectively. Moreover, when 'Style Control', which excludes factors such as response format and length, is applied, R1 draws level with Gemini and GPT-4o.
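To give a rough sense of how small these score gaps are, the sketch below converts a score difference into an expected head-to-head win rate. It assumes the arena scores behave like Elo ratings on the conventional 400-point logistic scale; that is an assumption for illustration, and the leaderboard's actual fitting method may differ. The function name `expected_win_rate` is hypothetical.

```python
def expected_win_rate(score_a, score_b, scale=400.0):
    """Expected probability that model A is preferred over model B.

    Assumes an Elo-style logistic curve (an assumption, not the
    leaderboard's documented method).
    """
    return 1.0 / (1.0 + 10 ** ((score_b - score_a) / scale))

# Scores quoted in the article.
gemini, gpt4o, r1 = 1382, 1365, 1357

p_gemini_vs_r1 = expected_win_rate(gemini, r1)
p_gpt4o_vs_r1 = expected_win_rate(gpt4o, r1)

print(f"Gemini preferred over R1: {p_gemini_vs_r1:.1%}")
print(f"GPT-4o preferred over R1: {p_gpt4o_vs_r1:.1%}")
```

Under this assumption, a gap of a few dozen points corresponds to a win rate only a few percentage points above an even split, which is consistent with the article's description of the scores as barely different.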

In addition, on the Center for AI Safety's 'HLE' benchmark, known for having the highest difficulty level, R1 ranked first with a 9.4% correct-answer rate, beating o1's 8.3%.

As a result, the respective strengths of the two models have also come into focus. As the benchmarks confirm, the performance gap itself does not mean much.

Accordingly, many experts cited ▲ open source and ▲ cost efficiency as R1's strengths.

Anyone can access R1 and tailor or customize it. Indeed, interest has surged in just a few days, and downloads, which reached 100,000 two days ago, have since risen to 150,000. Its popularity is explosive.

DeepSeek also disclosed that R1's base model, V3, was trained for two months in a data center based on NVIDIA 'H800' GPUs at a cost of about $5.57 million. That is said to be one tenth of the cost of 'Llama 3.1'.

R1 also adopts the 'MoE' (mixture-of-experts) architecture, which is designed to activate only about 37 billion of its total parameters at a time, maintaining high performance while reducing cost and memory usage by more than 90% compared with o1.
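The idea behind activating only part of the model can be illustrated with a toy top-k router. This is a minimal sketch of generic mixture-of-experts routing, not DeepSeek's implementation; the expert functions, router scores, and the names `top_k_route` and `moe_forward` are all hypothetical.

```python
import math

def top_k_route(scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over the selected experts only, so their weights sum to 1.
    peak = max(scores[i] for i in top)
    exps = [math.exp(scores[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, router_scores, k=2):
    """Run only the k selected experts and mix their outputs."""
    routed = top_k_route(router_scores, k)
    return sum(weight * experts[i](x) for i, weight in routed)

# Hypothetical toy setup: 8 experts, each just scales its input.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
router_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

routed = top_k_route(router_scores, k=2)
output = moe_forward(1.0, experts, router_scores, k=2)
active_fraction = 2 / len(experts)

print("experts used:", [i for i, _ in routed])
print("share of experts active per input:", active_fraction)
```

Because only the selected experts run for a given input, compute per token scales with the active parameters rather than the full parameter count, which is the general mechanism behind the savings described above.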

In particular, the 'distillation' model family, which transfers R1's performance to smaller models, is even more cost-efficient, with versions at 1.5B, 7B (Qwen), and 8B (Llama) parameters. These can even run on-device or on edge devices.

Of course, questions have also been raised. DeepSeek has said that it secured 100,000 GPUs before US sanctions were tightened, and some experts argue that it is not credible that V3 was developed in two months for only $5.57 million.

Experts cited 'safety' first as o1's advantage. Because it is a closed model used commercially, considerable time was spent on safety and regulatory compliance through private testing before launch.

R1 has also reignited the debate over whether open source is more advantageous than closed models, with Meta chief AI scientist Yann LeCun pointing out that "R1 is not a victory for China, but for open source." The pro-open-source side has always had the louder voice in this debate, but that has now shifted somewhat.

This follows news last year that China would use an open source model for military purposes. Some warn of the danger that outstanding models such as R1 could be put to military use, and they openly oppose open source.

By Dae-jun Lim, reporter ydj@aitimes.com