Microsoft (MS) has unveiled the world’s first server built on NVIDIA’s latest ‘Blackwell’ chip. Contrary to expectations that the server would be delivered in early December, it turned out that the server was already in operation.

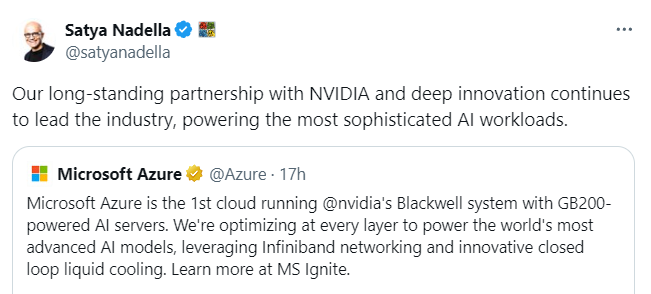

MS unveiled its in-house-built NVIDIA ‘GB200’ server through Azure’s official X (formerly Twitter) account on the 8th (local time).

“MS Azure is the first cloud service provider to run the NVIDIA Blackwell-based GB200 AI server,” the post read. “We’ll leverage InfiniBand networking and revolutionary closed-loop liquid cooling to power the world’s most advanced AI models.”

This is a full two months earlier than Taiwan-based technology analyst Tim Culpan had predicted. Last month, he forecast that GB200 servers would begin shipping in early December, with Microsoft receiving them first.

However, it appears that MS has already received the product and has the server up and running.

According to an analysis by Tom’s Hardware, the server MS showed appears to be the ‘GB200-NVL36’, equipped with 36 ‘B200’ GPUs. This is a lower-spec configuration than the ‘GB200-NVL72’ server, which is equipped with 72 B200s, and is likely being used for testing purposes.

The NVL36 is reportedly priced at about $2 million (about 2.7 billion won), while the NVL72 is reportedly priced at more than $3 million (about 4 billion won).

Moreover, the GB200-NVL72 server requires roughly 120 kilowatts (kW) of power, which makes liquid cooling essential. Accordingly, MS explains that it built this server rack to identify power and cooling issues in advance of the full-scale deployment of the GB200-NVL72.

The B200 GPU installed in the server delivers 2.5 times the performance of the existing ‘H100’. This makes it well suited to training very large and complex large language models (LLMs). The server is expected to undergo testing and enter full service between the end of this year and early next year.

Microsoft CEO Satya Nadella also emphasized through

Microsoft is expected to announce more details about its Blackwell servers and AI projects at its annual ‘Ignite’ conference in Chicago from November 18 to 22.

Meanwhile, The Information reported today that OpenAI is moving away from relying solely on Microsoft’s computing power and is in talks with Oracle about a server supply contract.

According to the report, OpenAI is dissatisfied that Microsoft is not moving quickly enough to provide the latest servers, and has concluded that it is difficult to rely solely on MS.

Oracle also recently announced that it can build an AI supercluster consisting of 131,072 NVIDIA Blackwell GPUs. The cluster offers 2.4 zettaFLOPS of performance, making it more powerful than xAI’s Memphis data center with its 100,000 ‘H100’ GPUs.

Oracle Chairman Larry Ellison has also revealed that he and Tesla CEO Elon Musk begged NVIDIA CEO Jensen Huang to “please take our money.”

Reporter Lim Da-jun ydj@aitimes.com