Meta has unveiled a video creation artificial intelligence (AI) model to compete with OpenAI’s ‘Sora’. It has powerful features such as generating video and audio together and editing only part of a video. The product may be offered through Meta’s platforms, such as Instagram, next year without a separate release.

On the 4th (local time), Meta unveiled a video creation model called ‘Movie Gen’ through its website.

Meta explained that this research reflects several years of know-how, starting with ‘Make-A-Scene’ and ‘Make-A-Video’, released in 2022, and continuing through the Llama image model.

With this, Meta claimed to have created the most advanced and immersive storytelling model to date. It emphasized that the model can be useful not only for general users but also for professional video producers and editors, and even Hollywood filmmakers.

Its capabilities are considered among the most powerful of the video models released so far. It can produce up to 16 seconds of realistic, personalized HD video at up to 16 FPS along with 48 kHz audio, and it also offers video editing features.

There are four main functions: ▲video creation ▲personalized video ▲precise video editing ▲audio creation.

First, it can create high-definition (HD) video from text prompts. A 30 billion parameter transformer model generates videos up to 16 seconds long at 1080p resolution. Through prompts, the AI can handle various elements of video creation, including camera motion, object interaction, and environmental physics.

It also offers a personalized video feature that lets users upload images of themselves or others to appear in AI-generated videos. Customized content can be created from a variety of prompts while preserving the person’s appearance.

To coincide with the model announcement, Meta CEO Mark Zuckerberg also posted on Instagram a ‘reanimated video’ of himself exercising, made with Movie Gen. The leg press machine he is using transforms into a neon cyberpunk version, an ancient Roman version, a flaming golden version, and more.

It also includes advanced video editing features that let users change specific elements within a video. Both localized changes, such as objects or colors, and extensive modifications, such as replacing the background, can be specified in text.

In addition to video, it integrates a 13 billion parameter audio generation model. This makes it possible to create sound effects, background sounds, and synchronized audio that match the visual content. In particular, it can create ‘Foley sounds’ such as footsteps or blowing wind.

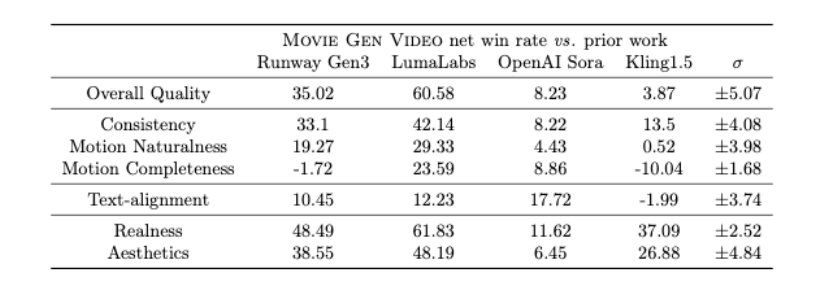

In a preference survey conducted by human evaluators on attributes such as consistency and naturalness of movement, Movie Gen was found to be ahead of the models currently regarded as the most advanced, including Runway’s ‘Gen-3’, Luma’s ‘Dream Machine’, Kuaishou’s ‘Kling’, and OpenAI’s ‘Sora’.

Meta said it used 100 million licensed or publicly available videos and 1 billion images to train its model, which learned about the physical world from video in particular. However, detailed data sources were not disclosed.

Technically, Meta said it combined the diffusion model approach used in existing image generation models, large language models (LLMs), and a new technique called ‘Flow Matching’.

Flow matching is a technique that models how the distribution of a dataset changes over time. When inferring the next scene of a video, it calculates the velocity at which a sample is moving and uses it to produce a natural next movement. This allows high-quality video to be created, with results that look more natural to the human eye.
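
The idea of moving a sample along a velocity field can be illustrated with a toy example. The sketch below is not Meta’s implementation: the `velocity` function is a hypothetical, hand-written stand-in for what would be a trained neural network, and `sample`, `target`, and `steps` are names chosen only for illustration. It starts from random noise and takes small steps along the velocity until it reaches a target point.

```python
import random


def velocity(x, t, target):
    """Toy velocity field (hypothetical stand-in for a learned network).

    For a straight-line path from noise to `target`, the velocity at
    time t is the remaining displacement divided by the remaining time.
    """
    return [(g - xi) / (1.0 - t) for xi, g in zip(x, target)]


def sample(target, steps=8, seed=0):
    """Move a noise sample toward `target` with fixed-size Euler steps."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from Gaussian noise
    dt = 1.0 / steps
    for k in range(steps):
        t = k * dt  # current time in [0, 1)
        v = velocity(x, t, target)
        x = [xi + vi * dt for xi, vi in zip(x, v)]  # step along the velocity
    return x


result = sample([1.0, -2.0, 0.5])
print(result)
```

In a real system the velocity would be predicted by a model trained on data rather than computed from a known target, and the sample would be a video representation rather than three numbers.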

Movie Gen is reportedly unlikely to be released as open source like Meta’s existing ‘Llama’ series. Instead, it will be integrated into Meta’s platforms, as Meta AI has been.

A Meta spokesperson said, “Currently, it is only available to internal employees and a small number of external partners, including some film producers, and it is unlikely to be released as open source,” adding that it will be available on Meta platforms such as Instagram, WhatsApp, and Messenger next year.

There are reportedly still issues to be resolved. “Currently, it takes over 10 minutes to generate a video, which is too long for the average consumer, who is likely to use it on their phone,” said Connor Hayes, vice president of generative AI at Meta.

He added, “We’re solving really important issues about safety and responsibility,” and said the work is primarily focused on preventing the creation of deepfakes and sensational or violent videos that may arise in the future.

The release of Meta’s Movie Gen will likely not only compete with existing video creation AI products but also be integrated into Meta’s hardware.

CEO Zuckerberg said at the ‘Connect’ event held last month, “I believe that AI will play a greater role in future wearables such as smart glasses.”

Reporter Lim Da-jun ydj@aitimes.com