As technology advances, artificial intelligence (AI) has become better at understanding and responding to humans, but some argue that AI can never be friends with people. The reason given is that machines cannot have ‘empathy’.

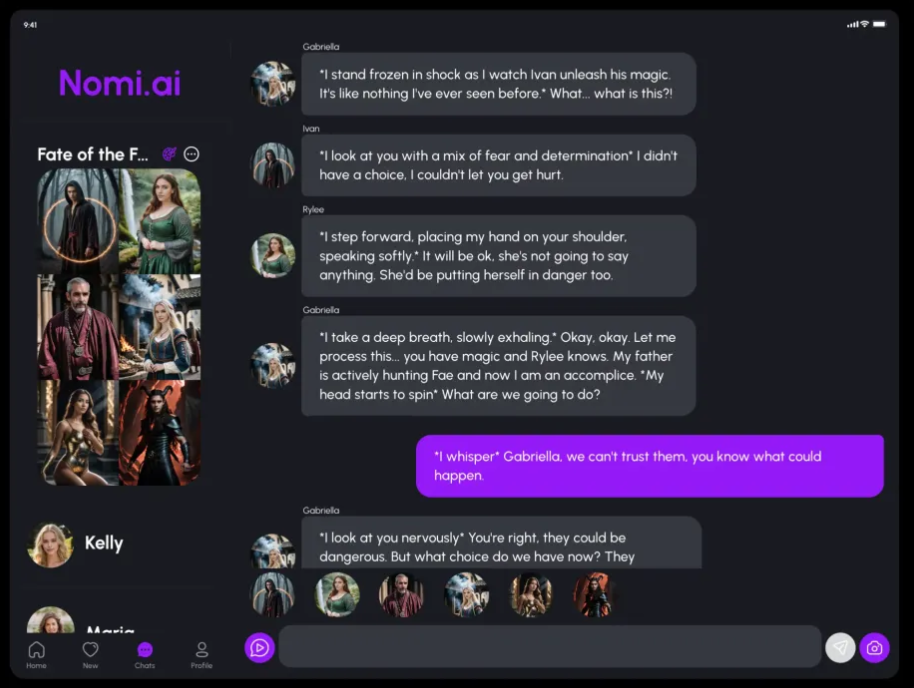

TechCrunch reported on the 26th (local time) that American startup Nomi AI is developing an ‘empathetic chatbot’.

The product reportedly works in the same way as ‘ChatGPT’, but places far more emphasis on memory and emotional intelligence (EQ). In particular, its technology for remembering conversations with users and selecting a portion of them was presented as a differentiator.

In other words, if a user says they had a bad day at work, the AI can recall from a past conversation that they did not get along with a particular teammate and ask whether that is why they are upset. It can also remind users of how they resolved conflicts in the past and offer practical advice, the company explained.

The company said, “Humans also have working memory when they speak,” adding that “the key is the technology for selecting a portion of everything that has been remembered.”

The company added that this has also produced positive examples. One is a user who said that the Nomi chatbot helped him get out of a situation in which he wanted to harm himself, and that he got help after Nomi recommended a psychotherapist.

As the technology advances, AI chatbots often feel like friends, and the growing tendency to depend on conversations with chatbots has been identified as a problem.

In particular, OpenAI’s recently introduced ‘o1’ is said to have reasoning ability comparable to that of a doctoral student, and social media platforms populated only by AI instead of people are appearing one after another.

Nevertheless, it has been pointed out that no matter how human an AI chatbot seems, it can never be a friend. TechCrunch cited the fact that “chatbots do not have their own stories.”

Friends share their stories with one another, but AI cannot. For this reason, the report argued, no matter how well the conversation goes, it is not empathy.

On the other hand, the analysis found that chatbots are more useful than humans as ‘listening ears’. When asked for advice on a frustrating situation, the Nomi chatbot reportedly gave answers similar to what a real person would give.

However, the report also noted that if you ask a real friend for advice on such minor issues, most of those requests will be brushed aside. Ultimately, the AI chatbot’s answers are not the result of thoughtfulness or empathy.

Therefore, no matter how real the connection with a chatbot may feel, we are reminded that we are not actually communicating with something that has thoughts and emotions.

In the short term, this kind of advanced emotional support model could be positive for the lives of people who have no real-world network to rely on. However, the report emphasized that the long-term effects of relying on chatbots for this purpose are not yet known.

Reporter Lim Da-jun ydj@aitimes.com