Meta has launched the first large multimodal model (LMM) in its ‘Llama’ series, one that understands both images and text. Positioning it as the flagship open source LMM, Meta declared that it will compete with closed models such as those from OpenAI and Anthropic.

Meta held its annual developer conference ‘Connect 2024’ at its headquarters in Menlo Park, California on the 25th (local time) and unveiled ‘Llama 3.2’.

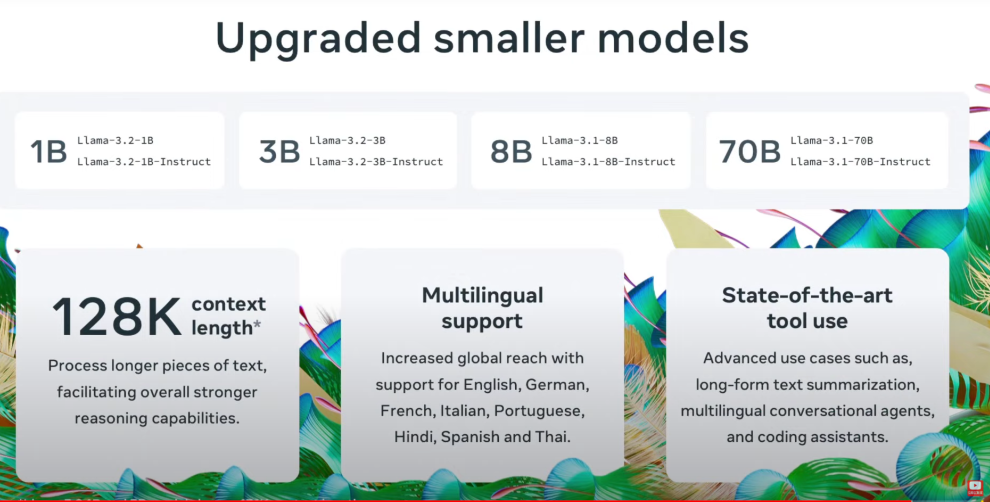

The LMM is released in two sizes, small and medium, with 11B and 90B parameters. In addition, 1B and 3B text-only models for on-device AI, suited to mobile and edge devices, have been added.

“This is our first open source multimodal model,” Meta CEO Mark Zuckerberg said in his keynote. “It can enable many applications that require visual understanding.”

Like the previous version, Llama 3.2 has a context length of 128,000 tokens, allowing you to input hundreds of pages of text.

Meta also shared the official ‘Llama Stack Distribution’ for the first time the same day, allowing developers to use the model in various environments such as on-premises, on-device, cloud, and single-node.

“Open source has already become the most cost-effective, customizable, reliable and high-performing option,” CEO Zuckerberg emphasized. “We have reached a tipping point in the industry and are starting to become the industry standard. We call it the Linux of AI.”

Meta also noted that it launched ‘Llama 3.1’ two months ago and that the model has achieved 10-fold growth so far.

“Llama continues to improve rapidly, enabling more and more features,” CEO Zuckerberg explained.

Meta said Llama 3.2 can compete with Anthropic’s ‘Claude 3 Haiku’ and OpenAI’s ‘GPT-4o mini’ in image recognition and visual understanding tasks. In areas such as instruction following, summarization, tool use, and prompt rewriting, benchmarks showed that it outperformed Google’s ‘Gemma’ and Microsoft’s ‘Phi 3.5-mini’.

The Llama 3.2 models can be downloaded from Meta’s homepage and from the partner platform Hugging Face.

Meanwhile, Meta said more than 1 million advertisers are using its generative AI tools, with 15 million ads created using the tools last month. Meta claimed that ads that used its generative AI had an 11% higher click-through rate and a 7.6% higher conversion rate than ads that did not.

In addition, ‘voice’ was added to Meta AI for consumers. It was officially announced that Llama 3.2 includes the voices of famous actors Judi Dench, Awkwafina, and John Cena.

“I think voice will be a much more natural way to interact with AI than text,” Zuckerberg said.

Finally, he added, “Meta AI will become the most used assistant in the world,” and “it may already be at that stage.”

Reporter Lim Da-jun ydj@aitimes.com