Upstage has announced a significant update to its Large Language Model (LLM) ‘Solar’.

Upstage (CEO Kim Sung-hoon) announced on the 11th that it will release 'Solar Pro Preview', an early test version of its next-generation LLM 'Solar Pro', which is scheduled for official release in November. The preview is available as open source and through a free API.

Solar Pro is the top model in the Solar LLM series. The company said it decided to release the preview as open source so that developers can test it before the official launch, and that it will also cover the cost of API calls.

The preview version supports only English and limits input to 4,096 tokens. The company stressed that the context window will be greatly expanded in the official release, but it did not disclose the exact figure.

Above all, with 22 billion (22B) parameters, Solar Pro is more than twice the size of the existing 'Solar Mini (10.7B)', and its performance has also improved considerably.

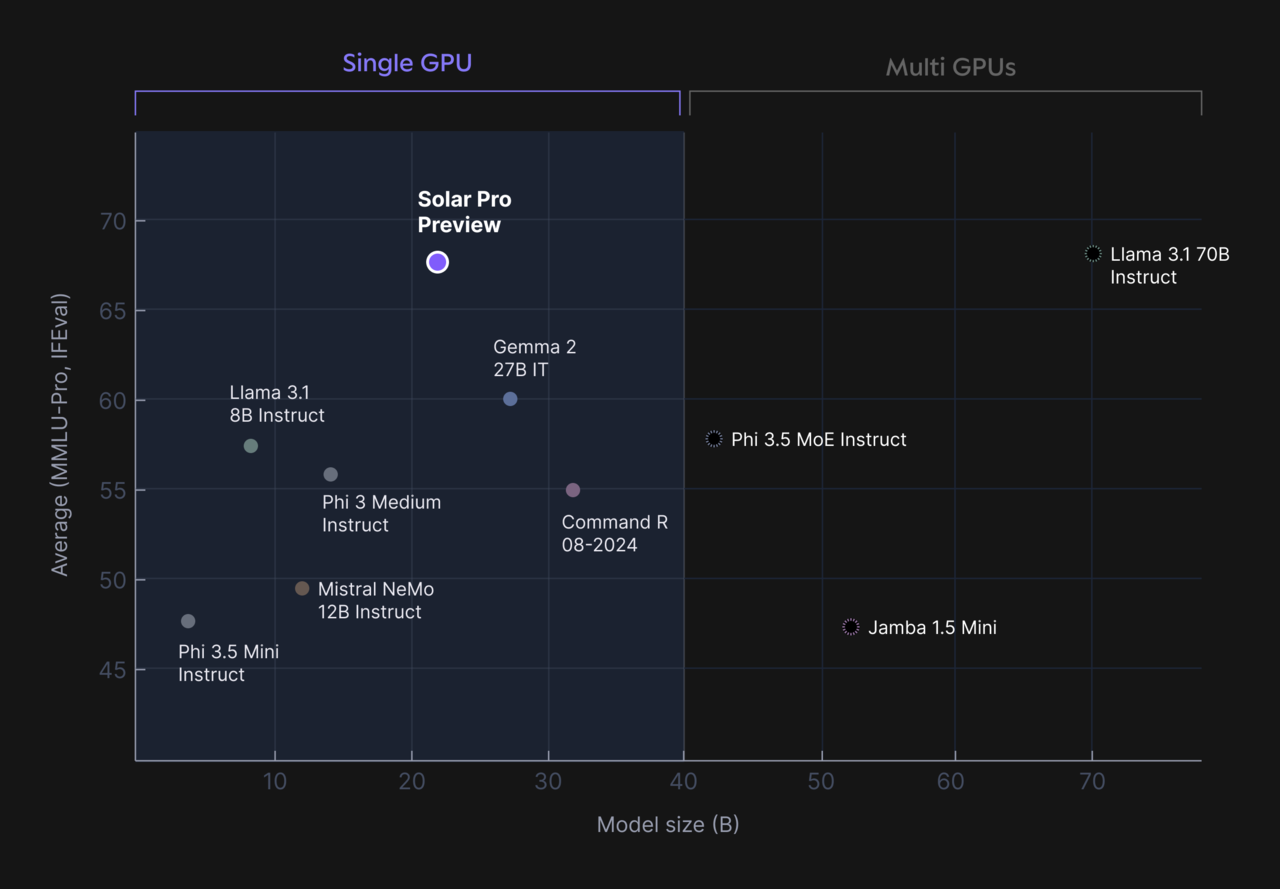

In addition, Upstage said it used its own LLM modeling methods, such as DUS (Depth Up-Scaling), to keep the model light enough to run on a single GPU. This reflects Upstage's overall development direction.

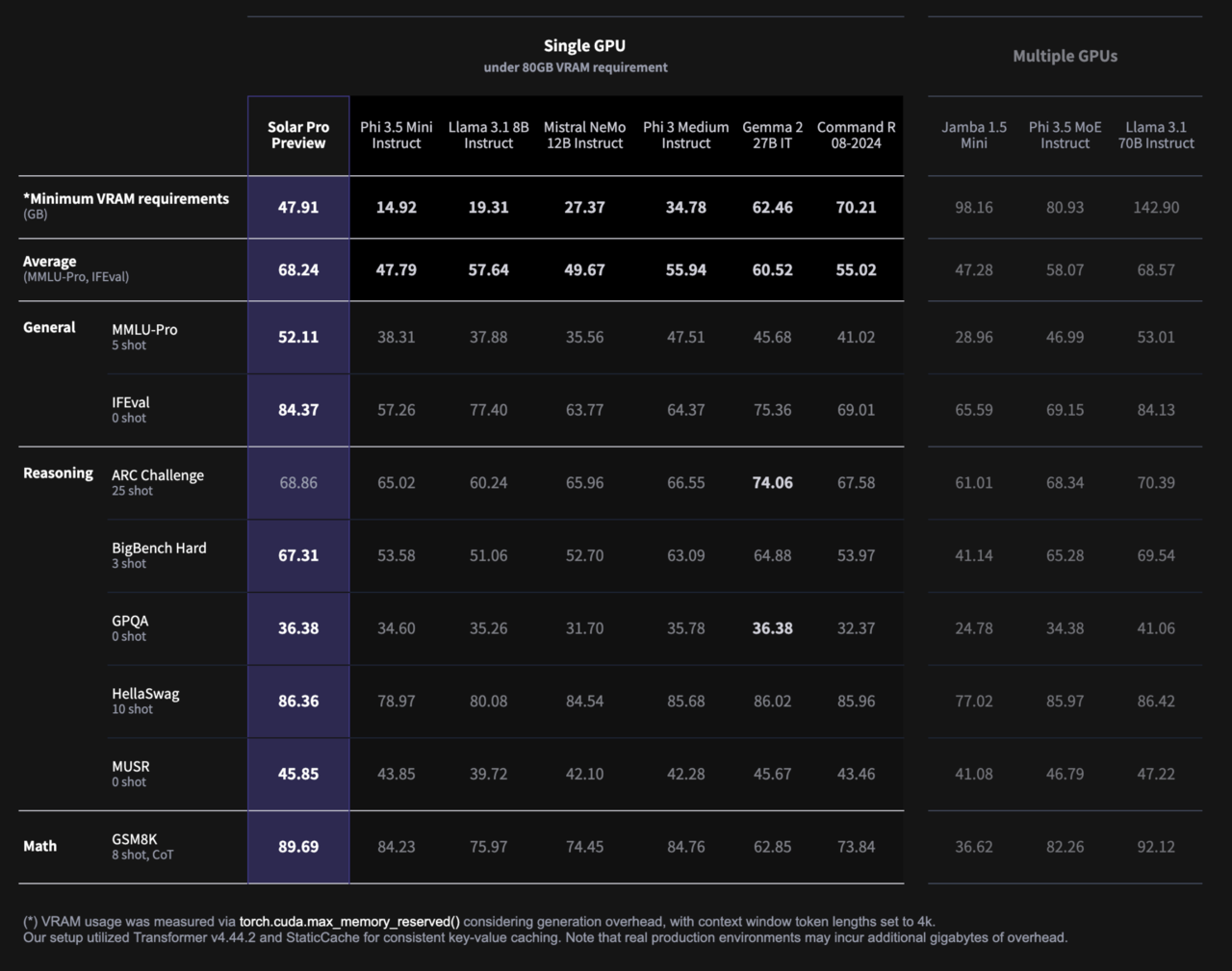

On recent LLM benchmarks such as 'MMLU Pro', which evaluates comprehensive knowledge of science, technology, engineering, and mathematics (STEM) as well as the humanities, and 'IFEval', which evaluates the ability to follow instructions, performance improved by an average of 51% compared with Solar Mini.

MMLU Pro, the newest of these benchmarks, expands the number of multiple-choice options per question from the original MMLU's four to ten. It has a reputation for being difficult: it requires reasoning beyond the undergraduate level, and models score lower on it.

In particular, Upstage emphasized that these scores surpass those of similar-sized models from big tech companies, such as Microsoft's 'Phi-3 Medium', Meta's 'Llama 3.1 8B', Mistral AI and NVIDIA's 'Mistral NeMo 12B', and Google's 'Gemma 2 27B'. Its performance is also comparable to that of models that require multiple GPUs, such as 'Llama 3.1 70B', which has more than three times as many parameters.

On this basis, Upstage emphasized that Solar Pro, with broad knowledge and reasoning ability that exceeds the human average, has reached a level where it can deliver high-performance AI across all areas of work, including ▲document and report writing ▲data analysis and management ▲project management.

Previously, Upstage developed Solar based on the model that ranked first on Hugging Face's Open LLM Leaderboard in August of last year. Since then, Solar has established itself as Korea's representative open-source model.

Kim Sung-hoon, CEO of Upstage, said, "Having taken on the global AI market with Solar, the world's best LLM built with our own technology, we are ambitiously preparing an even more powerful next model," adding, "We hope many people will test the Solar Pro preview, which delivers the industry's strongest performance at the lowest infrastructure cost."

Meanwhile, the company said details of the model's training method will be published in a technical paper in time for the official launch in November. It added that no specific plans have yet been set for developing a multimodal model.

Reporter Jang Se-min semim99@aitimes.com