Apple has released a new open source small language model (SLM) that it claims is its strongest yet, trained on a high-quality dataset built through data curation.

VentureBeat reported on the 19th (local time) that Apple has open-sourced ‘DCLM (DataComp for Language Models)’, an SLM released in 7 billion (7B) and 1.4 billion (1.4B) parameter versions with a context window of 2,000 tokens.

According to the report, DCLM is a model trained on the ‘DCLM-Baseline’ dataset. This dataset was built through ‘data curation’, which uses a machine learning (ML) model to automatically filter and select high-quality data from a large pool of raw data.
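The idea of model-based curation can be illustrated with a minimal sketch. It assumes a scoring function that rates each document and a fixed fraction of top-scoring documents to keep. The names `toy_quality_score`, `curate`, and `keep_fraction` are hypothetical, and the heuristic scorer is only a stand-in for a trained ML classifier; this is not the DataComp project's actual pipeline.

```python
from typing import Callable, List


def toy_quality_score(text: str) -> float:
    """Hypothetical stand-in for a learned quality classifier.

    Returns the fraction of whitespace-separated tokens that are purely
    alphabetic and at least three characters long (0.0 for empty text).
    """
    words = text.split()
    if not words:
        return 0.0
    good = sum(1 for w in words if w.isalpha() and len(w) >= 3)
    return good / len(words)


def curate(
    docs: List[str],
    score: Callable[[str], float],
    keep_fraction: float,
) -> List[str]:
    """Keep the top `keep_fraction` of documents, ranked by `score`."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    if not docs:
        return []
    # Highest-scoring documents first; ties keep their original order.
    ranked = sorted(docs, key=score, reverse=True)
    n_keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[:n_keep]


if __name__ == "__main__":
    pool = [
        "The model learns from clean curated text",
        "$$$ 11 !! 22 ## 33",
        "click here now !!! 777 $$$",
        "Data curation selects useful training documents",
    ]
    for doc in curate(pool, toy_quality_score, keep_fraction=0.5):
        print(doc)
```

In a real pipeline the scorer would be a trained model and the kept fraction would be tuned empirically; the ranking-and-thresholding structure is the part this sketch is meant to show.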

DCLM-Baseline was built as part of the DataComp project, a collaboration between Apple, the University of Washington, Tel Aviv University, and the Toyota Research Institute to design high-quality multimodal datasets. The project emphasizes that constructing a high-quality training dataset through effective data curation matters more for performance than model size.

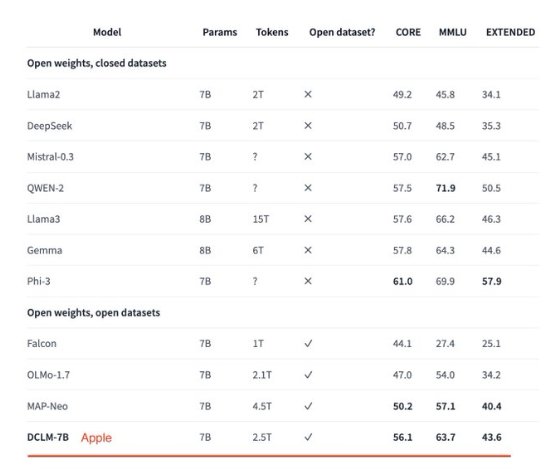

Trained on 2.5 trillion tokens of DCLM-Baseline, DCLM-7B achieved 63.7% 5-shot accuracy on MMLU, a benchmark for measuring reasoning ability.

This is a 6.6 percentage point improvement over MAP-Neo, the previous state-of-the-art in the open-data language model category, achieved with 40% less compute for training. MAP-Neo is a 7 billion-parameter SLM jointly developed by the open source community MAP, the University of Waterloo, Wuhan AI Lab, and 01.AI.

It also performed comparably to the leading open source SLMs, such as ‘Mistral-7B’ at 62.7%, Meta’s ‘Llama3-8B’ at 66.2%, Google’s ‘Gemma’ at 64.3%, and Microsoft’s ‘Phi-3’ at 69.9%.

In addition, DCLM-1.4B, trained on 2.6 trillion tokens, scored 41.9% on the MMLU test, surpassing HuggingFace’s ‘SmolLM-1.7B’ at 39.97%, Alibaba’s ‘Qwen-1.5B’ at 37.87%, and Microsoft’s ‘Phi-1.5B’ at 35.9%.
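To make the size of those gaps concrete, the short sketch below computes the 1.4B model's lead over each competitor in percentage points. The scores are the ones reported in this article; the helper name `margins_over` is hypothetical and used only for illustration.

```python
from typing import Dict

# MMLU scores (%) as reported in the article for the ~1.5B class.
REPORTED_MMLU: Dict[str, float] = {
    "DCLM-1.4B": 41.9,
    "SmolLM-1.7B": 39.97,
    "Qwen-1.5B": 37.87,
    "Phi-1.5B": 35.9,
}


def margins_over(baseline: str, scores: Dict[str, float]) -> Dict[str, float]:
    """Return the baseline's lead over every other model, in percentage points."""
    base = scores[baseline]
    return {
        name: round(base - value, 2)
        for name, value in scores.items()
        if name != baseline
    }


if __name__ == "__main__":
    for name, lead in margins_over("DCLM-1.4B", REPORTED_MMLU).items():
        print(f"DCLM-1.4B leads {name} by {lead:.2f} points")
```

These are differences in percentage points, not relative improvements.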

Currently, DCLM-7B is conditionally available for commercial use under Apple’s Sample Code License, and DCLM-1.4B is available for commercial use under the Apache 2.0 License.

Apple said the models are available for download from HuggingFace, but that they are not intended for on-device use.

Meanwhile, since releasing the 7B and 13B multimodal ‘Ferret’ models in October of last year, Apple has steadily released open source models, which have been well received.

Reporter Park Chan cpark@aitimes.com